
Convolutional Coding

In telecommunication, a convolutional code is a type of error-correcting code that generates parity symbols via the sliding application of a Boolean polynomial function to a data stream. The sliding application represents the 'convolution' of the encoder over the data, which gives rise to the term 'convolutional coding'. The sliding nature of convolutional codes facilitates trellis decoding using a time-invariant trellis. Time-invariant trellis decoding allows convolutional codes to be maximum-likelihood soft-decision decoded with reasonable complexity. The ability to perform economical maximum-likelihood soft-decision decoding is one of the major benefits of convolutional codes. This is in contrast to classic block codes, which are generally represented by a time-variant trellis and therefore are typically hard-decision decoded. Convolutional codes are often characterized by the base code rate and the depth (or memory) of the encoder, $[n, k, K]$. The base code rate is ty ...
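As a rough illustration of that sliding application, the Python sketch below implements a feedforward convolutional encoder as a shift register whose tapped bits are XORed (added modulo 2). It assumes a rate-1/2 encoder with constraint length K = 3 and the generator masks 7 and 5 in octal (binary 111 and 101), a common textbook choice that the text above does not specify. The function `conv_encode` and its parameters are hypothetical names chosen for this example.

```python
from typing import List, Sequence


def conv_encode(
    bits: Sequence[int],
    generators: Sequence[int] = (0b111, 0b101),
    constraint_len: int = 3,
    flush: bool = True,
) -> List[int]:
    """Sketch of a rate-1/len(generators) feedforward convolutional encoder over GF(2).

    Each generator is a bit mask over a window of `constraint_len` input bits;
    the most significant bit of the mask taps the newest input bit.
    """
    memory = constraint_len - 1
    state = 0  # the previous `memory` input bits; the encoder starts in the all-zero state
    # Optional zero tail drives the shift register back to the all-zero state.
    data = list(bits) + ([0] * memory if flush else [])
    out: List[int] = []
    for b in data:
        window = (b << memory) | state  # bits: [u[t], u[t-1], ..., u[t-memory]]
        for g in generators:
            # Each output bit is the XOR (mod-2 sum) of the tapped window bits.
            out.append(bin(window & g).count("1") & 1)
        state = window >> 1  # slide the window by one input bit
    return out


print(conv_encode([1, 0, 1, 1]))  # → [1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1]
```

With `flush=True`, K - 1 zero tail bits are appended, so a trellis decoder can assume the encoder both starts and ends in the all-zero state. Because the encoder only XORs and shifts, it is linear: encoding the XOR of two inputs gives the XOR of their encodings.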

Telecommunications

Telecommunication, often used in its plural form or abbreviated as telecom, is the transmission of information over a distance using electronic means, typically through cables, radio waves, or other communication technologies. These means of transmission may be divided into communication channels for multiplexing, allowing a single medium to carry several concurrent communication sessions. Long-distance technologies invented during the 20th and 21st centuries generally use electric power, and include the electrical telegraph, telephone, television, and radio. Early telecommunication networks used metal wires as the medium for transmitting signals. These networks were used for telegraphy and telephony for many decades. In the first decade of the 20th century, a revolution in wireless communication began with breakthroughs including those made in radio communications by Guglielmo Marconi, who won the 1909 Nobel Prize in Physics. Othe ...

Mobile Telephony

Mobile telephony is the provision of wireless telephone services to mobile phones, distinguishing it from fixed-location telephony provided via landline phones. Traditionally, telephony specifically refers to voice communication, though the distinction has become less clear with the integration of additional features such as text messaging and data services. Modern mobile phones connect to a terrestrial cellular network of base stations (commonly referred to as cell sites), using radio waves to facilitate communication. Satellite phones use wireless links to orbiting satellites, providing an alternative in areas lacking local terrestrial communication infrastructure, such as landline and cellular networks. Cellular networks, satellite networks, and landline systems are all linked to the public switched telephone network (PSTN), enabling calls to be made to and from nearly any telephone worldwide. As of 2010, global estimates indicated approximately five billion mobile ...

Systematic Code

In coding theory, a systematic code is any error-correcting code in which the input data are embedded in the encoded output. Conversely, in a non-systematic code the output does not contain the input symbols. Systematic codes have the advantage that the parity data can simply be appended to the source block, and receivers do not need to recover the original source symbols if they are received correctly. This is useful, for example, if error-correction coding is combined with a hash function for quickly determining the correctness of the received source symbols, or in cases where errors occur as erasures, so that a received symbol is always correct. Furthermore, for engineering purposes such as synchronization and monitoring, it is desirable to get reasonably good estimates of the received source symbols without going through the lengthy decoding process, which may be carried out at a remote site at a later time.

Properties: Every non-systematic linear code can be transformed into a syst ...
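To illustrate parity being appended to an unchanged source block, the Python sketch below builds a systematic code from polynomial division over GF(2), in the style of a cyclic redundancy check. This construction is swapped in for illustration only; the text names no specific code. The generator $x^3 + x + 1$ (binary 1011), the integer bit-string representation, and the function names are all hypothetical choices.

```python
def gf2_mod(value: int, divisor: int) -> int:
    """Remainder of polynomial division over GF(2); bit i of an int is the coefficient of x^i."""
    deg = divisor.bit_length() - 1
    while value.bit_length() > deg:
        # Subtracting (XORing) a shifted divisor cancels the current leading term.
        value ^= divisor << (value.bit_length() - 1 - deg)
    return value


def systematic_encode(data: int, generator: int = 0b1011) -> int:
    """Shift the data up by deg(g) bits and append the division remainder as parity."""
    deg = generator.bit_length() - 1
    shifted = data << deg
    return shifted | gf2_mod(shifted, generator)


def extract_data(codeword: int, generator: int = 0b1011) -> int:
    """The source bits sit unchanged in the high positions, so no decoding step is needed."""
    return codeword >> (generator.bit_length() - 1)


print(f"{systematic_encode(0b1101):07b}")  # → 1101001
```

Here the data 1101 appear verbatim as the first four bits of the code word 1101001 and the last three bits are parity, so a receiver that trusts the data can read them off with a shift.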

Bitwise Operation

In computer programming, a bitwise operation operates on a bit string, a bit array or a binary numeral (considered as a bit string) at the level of its individual bits. It is a fast and simple action, basic to the higher-level arithmetic operations and directly supported by the processor. Most bitwise operations are presented as two-operand instructions where the result replaces one of the input operands. On simple low-cost processors, bitwise operations are typically substantially faster than division, several times faster than multiplication, and sometimes significantly faster than addition. While modern processors usually perform addition and multiplication just as fast as bitwise operations due to their longer instruction pipelines and other architectural design choices, bitwise operations do commonly use less power because of the reduced use of resources.

Bitwise operators: In the explanations below, any indication of a bit's p ...
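A short Python illustration of the common bitwise operators on small integers follows. The operand values and the helper names `set_bit`, `clear_bit`, and `get_bit` are hypothetical. Python integers have unbounded width, so `~a` equals `-a - 1` and must be masked to obtain a fixed-width result.

```python
# Two 4-bit example operands (arbitrary values chosen for illustration).
a, b = 0b1100, 0b1010

print(f"{a & b:04b}")        # AND: 1 where both bits are 1   -> 1000
print(f"{a | b:04b}")        # OR: 1 where either bit is 1    -> 1110
print(f"{a ^ b:04b}")        # XOR: 1 where the bits differ   -> 0110
print(f"{~a & 0b1111:04b}")  # NOT, masked to 4 bits          -> 0011
print(f"{a << 1:05b}")       # shift left by one position     -> 11000
print(f"{a >> 2:04b}")       # shift right by two positions   -> 0011


def set_bit(x: int, i: int) -> int:
    """Force bit i to 1 with OR."""
    return x | (1 << i)


def clear_bit(x: int, i: int) -> int:
    """Force bit i to 0 with AND against an inverted mask."""
    return x & ~(1 << i)


def get_bit(x: int, i: int) -> bool:
    """Read bit i by shifting it down to position 0 and masking."""
    return ((x >> i) & 1) == 1
```

Setting, clearing, and reading single bits this way combines a shift to build a one-bit mask with a single AND or OR.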

Polynomial Code

In coding theory, a polynomial code is a type of linear code whose set of valid code words consists of those polynomials (usually of some fixed length) that are divisible by a given fixed polynomial (of shorter length, called the generator polynomial).

Definition: Fix a finite field $\mathrm{GF}(q)$, whose elements we call symbols. For the purposes of constructing polynomial codes, we identify a string of $n$ symbols $a_{n-1}\ldots a_0$ with the polynomial

$$a_{n-1}x^{n-1} + \cdots + a_1x + a_0.$$

Fix integers $m \leq n$ and let $g(x)$ be some fixed polynomial of degree $m$, called the generator polynomial. The polynomial code generated by $g(x)$ is the code whose code words are precisely the polynomials of degree less than $n$ that are divisible (without remainder) by $g(x)$.

Example: Consider the polynomial code over $\mathrm{GF}(2) = \{0, 1\}$ with $n = 5$, $m = 2$, and generator polynomial $g(x) = x^2 + x + 1$. This code consists of the following code words:

$$0 \cdot g(x),\quad 1 \cdot g(x),\quad x \cdot g(x),\quad (x+1) \cdot g(x),\ \ldots$$
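The example can be checked by brute force. In this Python sketch a polynomial over GF(2) is stored as an integer whose bit i holds the coefficient of $x^i$ (an assumed representation; the function names are hypothetical). Every code word is $p(x) \cdot g(x)$ with $\deg p < n - m$, so the example code has $2^{n-m} = 8$ words.

```python
from typing import List


def gf2_mul(a: int, b: int) -> int:
    """Carry-less (GF(2)) polynomial product; bit i holds the coefficient of x^i."""
    result = 0
    while b:
        if b & 1:
            result ^= a  # adding polynomials over GF(2) is XOR of coefficients
        a <<= 1
        b >>= 1
    return result


def polynomial_code(n: int, g: int) -> List[int]:
    """All code words of length n generated by g: the multiples p(x) * g(x) of degree < n."""
    m = g.bit_length() - 1  # degree of the generator polynomial
    # p ranges over every polynomial of degree < n - m, so deg(p * g) < n.
    return [gf2_mul(p, g) for p in range(1 << (n - m))]


# g(x) = x^2 + x + 1; words printed as a_4 a_3 a_2 a_1 a_0.
print([f"{w:05b}" for w in polynomial_code(5, 0b111)])
# → ['00000', '00111', '01110', '01001', '11100', '11011', '10010', '10101']
```

The printed words follow the order $0 \cdot g(x)$, $1 \cdot g(x)$, $x \cdot g(x)$, $(x+1) \cdot g(x)$, and so on, because the multiplier $p$ counts up through the integers 0 to 7.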

XOR Gate

An XOR gate (sometimes EOR or EXOR, and pronounced "exclusive OR") is a digital logic gate that gives a true (1 or HIGH) output when the number of true inputs is odd. An XOR gate implements exclusive or ($\nleftrightarrow$) from mathematical logic; that is, for two inputs, a true output results if one, and only one, of the inputs to the gate is true. If both inputs are false (0/LOW) or both are true, a false output results. XOR represents the inequality function, i.e., the output is true if the inputs are not alike; otherwise the output is false. A way to remember XOR is "must have one or the other but not both". An XOR gate may serve as a "programmable inverter" in which one input determines whether to invert the other input or to simply pass it along with no change. Hence it functions as an inverter (a NOT gate) that may be activated or deactivated by a switch. XOR can also be viewed as addition modulo 2. As a result, XOR gates ...
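The behaviours described above (odd-parity output, programmable inverter, addition modulo 2) can be checked with a few lines of Python. This is a minimal sketch; `xor_gate` and `programmable_inverter` are hypothetical names.

```python
from functools import reduce
from operator import xor


def xor_gate(*inputs: int) -> int:
    """Multi-input XOR: returns 1 exactly when an odd number of inputs are 1."""
    return reduce(xor, inputs, 0)


def programmable_inverter(data: int, invert: int) -> int:
    """invert = 1 flips the data bit (NOT gate); invert = 0 passes it through unchanged."""
    return data ^ invert


# Two-input truth table: the output is 1 only when the inputs differ.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_gate(a, b))
```

The same function shows the modulo-2 view: for any list of input bits, `xor_gate(*bits) == sum(bits) % 2`.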

Boolean Algebra

In mathematics and mathematical logic, Boolean algebra is a branch of algebra. It differs from elementary algebra in two ways. First, the values of the variables are the truth values true and false, usually denoted by 1 and 0, whereas in elementary algebra the values of the variables are numbers. Second, Boolean algebra uses logical operators such as conjunction (and) denoted as $\wedge$, disjunction (or) denoted as $\vee$, and negation (not) denoted as $\neg$. Elementary algebra, on the other hand, uses arithmetic operators such as addition, multiplication, subtraction, and division. Boolean algebra is therefore a formal way of describing logical operations in the same way that elementary algebra describes numerical operations. Boolean algebra was introduced by George Boole in his first book "The Mathematical Analysis of Logic" (1847), and set forth more fully in his "An Investigation of the Laws of Thought" (1854). According to ...
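To make the three operators concrete, this Python sketch evaluates them on the truth values 1 and 0 and prints their truth table. The function names `conj`, `disj`, and `neg` are hypothetical.

```python
from itertools import product


def conj(x: int, y: int) -> int:
    """Conjunction x ∧ y on truth values encoded as 1 (true) and 0 (false)."""
    return x & y


def disj(x: int, y: int) -> int:
    """Disjunction x ∨ y."""
    return x | y


def neg(x: int) -> int:
    """Negation ¬x."""
    return 1 - x


print("x y | x∧y x∨y ¬x")
for x, y in product((0, 1), repeat=2):
    print(f"{x} {y} |  {conj(x, y)}   {disj(x, y)}   {neg(x)}")
```

On the values 0 and 1, $x \wedge y$ coincides with the ordinary product $xy$, $x \vee y$ with $x + y - xy$, and $\neg x$ with $1 - x$. This is one way to see the parallel with elementary algebra drawn above.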

Adder (electronics)

An adder, or summer, is a digital circuit that performs addition of numbers. In many computers and other kinds of processors, adders are used in the arithmetic logic units (ALUs). They are also used in other parts of the processor, where they calculate addresses, table indices, increment and decrement operations, and similar operations. Although adders can be constructed for many number representations, such as binary-coded decimal or excess-3, the most common adders operate on binary numbers. In cases where two's complement or ones' complement is being used to represent negative numbers, it is trivial to modify an adder into an adder–subtractor. Other signed number representations require more logic around the basic adder.

History: George Stibitz invented the 2-bit binary adder (the Model K) in 1937.

Binary adders, half adder: The half adder adds two single binary digits A and B. It has two outputs, s ...
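A half adder's two outputs are conventionally the sum S = A XOR B and the carry C = A AND B. Building on that, this Python sketch chains full adders (each made of two half adders and an OR gate) into a ripple-carry adder. Following the remark about two's complement, it turns the adder into an adder–subtractor by inverting the second operand with XOR gates and feeding in a carry of 1. The 8-bit default width and all function names are hypothetical, and inputs are assumed to be non-negative integers below `2**width`.

```python
from typing import Tuple


def half_adder(a: int, b: int) -> Tuple[int, int]:
    """Return (sum, carry) for two single bits: sum = A XOR B, carry = A AND B."""
    return a ^ b, a & b


def full_adder(a: int, b: int, cin: int) -> Tuple[int, int]:
    """Two half adders plus an OR gate combining their carries."""
    s1, c1 = half_adder(a, b)
    s, c2 = half_adder(s1, cin)
    return s, c1 | c2


def ripple_add(x: int, y: int, width: int = 8, subtract: bool = False) -> int:
    """Add (or subtract) two width-bit values with a chain of full adders.

    Subtraction uses two's complement: invert every bit of y and set the initial carry to 1.
    """
    invert = 1 if subtract else 0
    carry = invert
    result = 0
    for i in range(width):
        a = (x >> i) & 1
        b = ((y >> i) & 1) ^ invert  # XOR gate acting as a programmable inverter
        s, carry = full_adder(a, b, carry)
        result |= s << i
    return result  # the final carry-out is dropped, so the result is taken mod 2**width


print(ripple_add(100, 55))               # → 155
print(ripple_add(5, 7, subtract=True))   # → 254, i.e. -2 in 8-bit two's complement
```

Only one XOR gate per bit and a carry-in of 1 separate the adder from the adder–subtractor, which is why the modification is described as trivial.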

Processor Register

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address, e.g., on the DEC PDP-10 and ICT 1900. Almost all computers, whether load/store architecture or not, load items of data from a larger memory into registers where they are used for arithmetic operations, bitwise operations, and other operations, and are manipulated or tested by machine instructions. Manipulated items are then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic random-access memory (RAM) as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normal ...

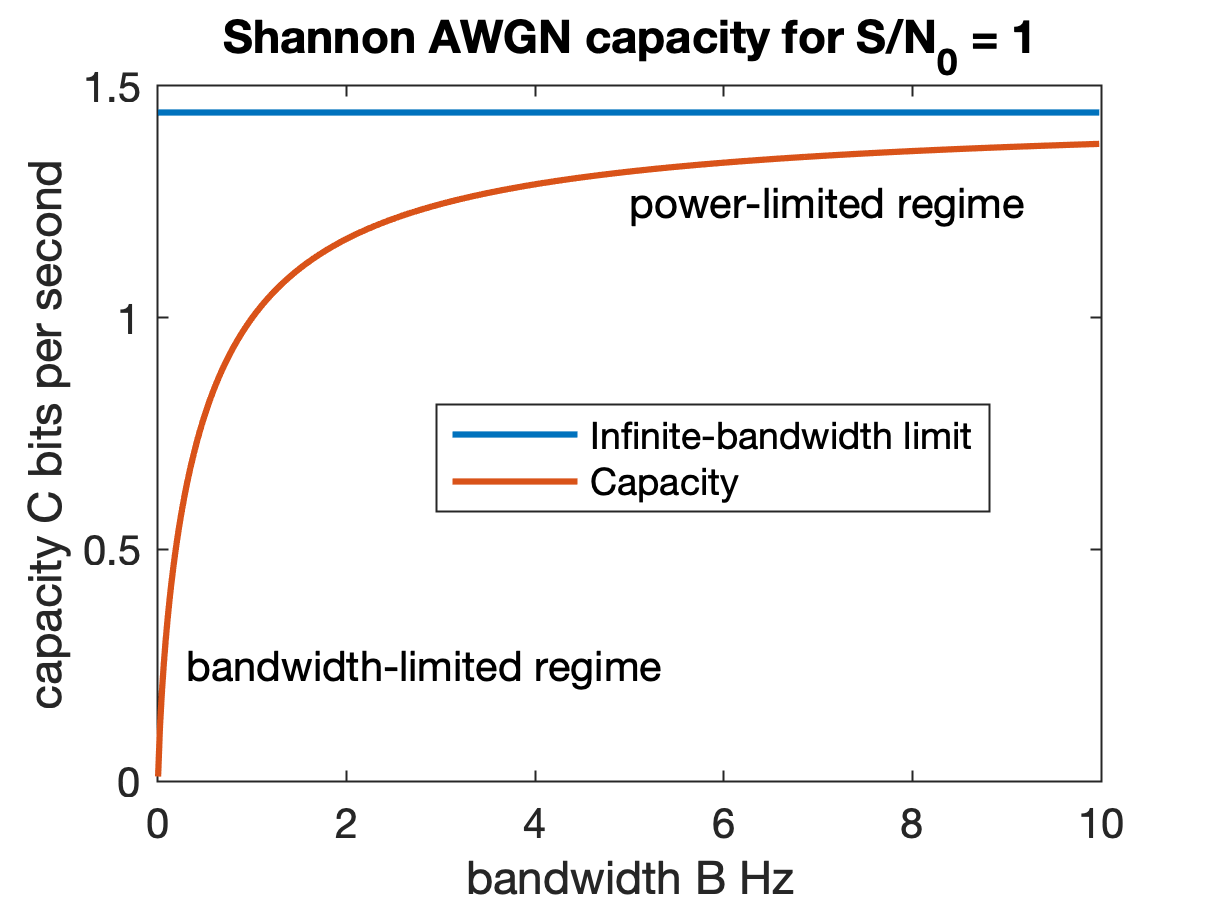

Shannon–Hartley Theorem

In information theory, the Shannon–Hartley theorem gives the maximum rate at which information can be transmitted over a communications channel of a specified bandwidth in the presence of noise. It is an application of the noisy-channel coding theorem to the archetypal case of a continuous-time analog communications channel subject to Gaussian noise. The theorem establishes Shannon's channel capacity for such a communication link: a bound on the maximum amount of error-free information per time unit that can be transmitted with a specified bandwidth in the presence of noise interference, assuming that the signal power is bounded and that the Gaussian noise process is characterized by a known power or power spectral density. The law is named after Claude Shannon and Ralph Hartley.

Statement of the theorem: The Shannon–Hartley theorem states the channel capacity $C$, meaning the theoretical tightest upper bound on the information rate of data that can be communicated a ...
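The statement is cut off above. In its standard form the theorem gives $C = B \log_2(1 + S/N)$, where $C$ is in bits per second, $B$ is the bandwidth in hertz, and $S/N$ is the signal-to-noise power ratio expressed as a linear ratio, not in decibels. A small Python sketch of that formula follows; the function names and the 3 kHz, 30 dB example channel are hypothetical.

```python
import math


def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Channel capacity in bit/s: C = B * log2(1 + S/N), with S/N as a linear power ratio."""
    if bandwidth_hz < 0 or snr_linear < 0:
        raise ValueError("bandwidth and SNR must be non-negative")
    return bandwidth_hz * math.log2(1 + snr_linear)


def db_to_linear(snr_db: float) -> float:
    """Convert an SNR given in decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


# Hypothetical example: a 3 kHz channel at 30 dB SNR (a power ratio of 1000).
print(round(shannon_capacity(3000, db_to_linear(30))))  # → 29902
```

Because capacity grows linearly in bandwidth but only logarithmically in SNR, doubling $B$ doubles $C$, while doubling $S/N$ at high SNR adds only about $B$ bits per second.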