Convex Volume Approximation

In the analysis of algorithms, several authors have studied the computation of the volume of high-dimensional convex bodies, a problem that can also be used to model many other problems in combinatorial enumeration. Often these works use a black box model of computation in which the input is given by a subroutine for testing whether a point is inside or outside of the convex body, rather than by an explicit listing of the vertices or faces of a convex polytope. It is known that, in this model, no deterministic algorithm can achieve an accurate approximation, and even for an explicit listing of faces or vertices the problem is #P-hard. However, a joint work by Martin Dyer, Alan M. Frieze and Ravindran Kannan gave a randomized polynomial time approximation scheme for the problem, showing a sharp contrast between the capabilities of randomized and deterministic algorithms. The main result of the paper is a randomized algorithm for finding an $\varepsilon$-approximation to the v ...
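
The black box model described above can be illustrated with a small sketch. It is not the Dyer, Frieze and Kannan algorithm: it is a naive Monte Carlo estimator that only sees the body through a membership oracle, and its accuracy degrades quickly as the dimension grows. The oracle `in_unit_ball`, the function `estimate_volume`, and all parameter values are assumptions chosen for illustration.

```python
import random

def in_unit_ball(point):
    """Hypothetical membership oracle: is the point inside the unit ball?"""
    return sum(x * x for x in point) <= 1.0

def estimate_volume(oracle, dim, half_width, samples, rng):
    """Naive Monte Carlo estimate (not the randomized scheme from the paper).

    Samples the cube [-half_width, half_width]^dim uniformly and scales
    the fraction of accepted points by the volume of the cube.
    """
    hits = 0
    for _ in range(samples):
        point = [rng.uniform(-half_width, half_width) for _ in range(dim)]
        if oracle(point):
            hits += 1
    cube_volume = (2.0 * half_width) ** dim
    return cube_volume * hits / samples

rng = random.Random(0)
volume = estimate_volume(in_unit_ball, dim=3, half_width=1.0,
                         samples=200_000, rng=rng)
# The exact volume of the 3-dimensional unit ball is 4*pi/3.
```

The estimator never inspects vertices or faces; it only calls the oracle, which is the access pattern the black box model assumes.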

Analysis Of Algorithms

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms: the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to have different behavior, so best, worst and average case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of a broa ...
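
As a small illustration of best, worst and average case behavior on inputs of the same size, the sketch below counts the comparisons made by a linear search. The function name `linear_search_steps` and the input size are assumptions for this example only.

```python
def linear_search_steps(items, target):
    """Return the number of comparisons a linear search performs."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps

n = 1000
data = list(range(n))

best_case = linear_search_steps(data, 0)    # target is the first element
worst_case = linear_search_steps(data, -1)  # target is absent
# Average over all targets that are present, each equally likely.
average_case = sum(linear_search_steps(data, t) for t in data) / n
```

Here the step count ranges from 1 to `n`, and the average over present targets is `(n + 1) / 2`, so all three descriptions grow at most linearly in the input size.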

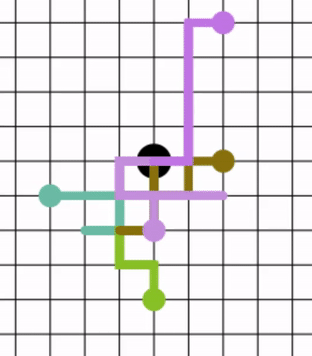

Random Walk

In mathematics, a random walk, sometimes known as a drunkard's walk, is a stochastic process that describes a path that consists of a succession of random steps on some mathematical space. An elementary example of a random walk is the random walk on the integer number line $\mathbb{Z}$ which starts at 0, and at each step moves +1 or −1 with equal probability. Other examples include the path traced by a molecule as it travels in a liquid or a gas (see Brownian motion), the search path of a foraging animal, the price of a fluctuating stock, and the financial status of a gambler. Random walks have applications to engineering and many scientific fields including ecology, psychology, computer science, physics, chemistry, biology, economics, and sociology. The term ''random walk'' was first introduced by Karl Pearson in 1905. Realizations of random walks can be obtained by Monte Carlo simulation. Lattice random ...
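
The elementary walk on the integers described above can be simulated in a few lines. This is a minimal sketch; the function name `simple_random_walk`, the seed, and the number of steps are assumptions for illustration.

```python
import random

def simple_random_walk(steps, rng):
    """Return the positions visited by a walk on the integers starting at 0."""
    position = 0
    path = [position]
    for _ in range(steps):
        # Move +1 or -1 with equal probability.
        position += rng.choice((-1, 1))
        path.append(position)
    return path

rng = random.Random(42)
path = simple_random_walk(1000, rng)
```

Each call with a fresh generator produces one realization of the process, which is how Monte Carlo simulation of random walks is typically set up.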

Journal Of Computer And System Sciences

The ''Journal of Computer and System Sciences'' (JCSS) is a peer-reviewed scientific journal in the field of computer science. ''JCSS'' is published by Elsevier, and it was started in 1967. Many influential scientific articles have been published in ''JCSS''; these include five papers that have won the Gödel Prize (an annual prize for outstanding papers in the area of theoretical computer science, given jointly by the European Association for Theoretical Computer Science (EATCS) and the Association for Computing Machinery Special Inter ...). Its managing editor is Michael Segal.

Journal Of The ACM

The ''Journal of the ACM'' (''JACM'') is a peer-reviewed scientific journal covering computer science in general, especially theoretical aspects. It is an official journal of the Association for Computing Machinery. Its current editor-in-chief is Venkatesan Guruswami. The journal was established in 1954 and "computer scientists universally hold the ''Journal of the ACM'' in high esteem". See also ''Communications of the ACM'' (''CACM''), the monthly journal of the Association for Computing Machinery (ACM), which was established in 1958 with Saul Rosen as its first managing editor and is sent to all ACM members.

SIAM Journal On Computing

The ''SIAM Journal on Computing'' is a scientific journal focusing on the mathematical and formal aspects of computer science. It is published by the Society for Industrial and Applied Mathematics (SIAM). Although its official ISO abbreviation is ''SIAM J. Comput.'', its publisher and contributors frequently use the shorter abbreviation ''SICOMP''. SICOMP typically hosts the special issues of the IEEE Annual Symposium on Foundations of Computer Science (FOCS) and the Annual ACM Symposium on Theory of Computing (STOC), where about 15% of papers published in FOCS and STOC each year are invited to these special issues. For example, Volume 48 contains 11 out of 85 papers published in FOCS 2016.

Discrete And Computational Geometry

''Discrete & Computational Geometry'' is a peer-reviewed mathematics journal published quarterly by Springer. Founded in 1986 by Jacob E. Goodman and Richard M. Pollack, the journal publishes articles on discrete geometry and computational geometry.

Abstracting and indexing

The journal is indexed in:
* ''Mathematical Reviews''
* ''Zentralblatt MATH''
* ''Science Citation Index''
* ''Current Contents''

Notable articles

Two articles published in ''Discrete & Computational Geometry'', one by Gil Kalai in 1992 with a proof of a subexponential upper bound on the diameter of a polytope and another by Samuel Ferguson in 2006 on the Kepler conjecture on optimal three-dimensional sphere packing, earned their authors the Fulkerson Prize.

Klee's Measure Problem

In computational geometry, Klee's measure problem is the problem of determining how efficiently the measure of a union of (multidimensional) rectangular ranges can be computed. Here, a ''d''-dimensional rectangular range is defined to be a Cartesian product of ''d'' intervals of real numbers, which is a subset of $\mathbb{R}^d$. The problem is named after Victor Klee, who gave an algorithm for computing the length of a union of intervals (the case ''d'' = 1) which was later shown to be optimally efficient in the sense of computational complexity theory. The computational complexity of computing the area of a union of 2-dimensional rectangular ranges is now also known, but the case ''d'' ≥ 3 remains an open problem.

History and algorithms

In 1977, Victor Klee considered the following problem: given a collection of ''n'' intervals in the real line, compute the length of their union. He then presented an algorithm to solve this problem with computational complexity (or "running time ...
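
For the one-dimensional case, the length of a union of intervals can be computed with a sort followed by a single sweep. The sketch below is one common way to do this and is not necessarily Klee's original formulation; the function name `union_length` and the sample intervals are assumptions for illustration. In this sketch the sort dominates the cost, so it runs in O(n log n) time for n intervals.

```python
def union_length(intervals):
    """Length of the union of closed intervals given as (left, right) pairs."""
    total = 0.0
    current_left = current_right = None
    for left, right in sorted(intervals):
        if current_right is None or left > current_right:
            # Disjoint from the run being built: close it and start a new one.
            if current_right is not None:
                total += current_right - current_left
            current_left, current_right = left, right
        else:
            # Overlaps the current run: extend it if needed.
            current_right = max(current_right, right)
    if current_right is not None:
        total += current_right - current_left
    return total

# [0, 2] and [1, 3] merge into [0, 3]; [5, 6] and [5.5, 7] merge into [5, 7].
length = union_length([(0, 2), (1, 3), (5, 6), (5.5, 7)])
```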

American Mathematical Society

The American Mathematical Society (AMS) is an association of professional mathematicians dedicated to the interests of mathematical research and scholarship, and serves the national and international community through its publications, meetings, advocacy and other programs. The society is one of the four parts of the Joint Policy Board for Mathematics and a member of the Conference Board of the Mathematical Sciences.

History

The AMS was founded in 1888 as the New York Mathematical Society, the brainchild of Thomas Fiske, who was impressed by the London Mathematical Society on a visit to England. John Howard Van Amringe became the first president while Fiske became secretary. The society soon decided to publish a journal, but ran into some resistance over concerns about competing with the ''American Journal of Mathematics''. The result was the ''Bulletin of the American Mathematical Society'', with Fiske as editor-in-chief. The de facto journal, as intended, was influentia ...

Fulkerson Prize

The Fulkerson Prize for outstanding papers in the area of discrete mathematics is sponsored jointly by the Mathematical Optimization Society (MOS) and the American Mathematical Society (AMS). Up to three awards of $1,500 each are presented at each (triennial) International Symposium of the MOS. Originally, the prizes were paid out of a memorial fund administered by the AMS that was established by friends of the late Delbert Ray Fulkerson to encourage mathematical excellence in the fields of research exemplified by his work. The prizes are now funded by an endowment administered by MPS.

Winners

* 1979:
** Richard M. Karp for classifying many important NP-complete problems.
** Kenneth Appel and Wolfgang Haken for the four color theorem.
** Paul Seymour for generalizing the max-flow min-cut theorem to matroids.
* 1982:
** D.B. Judin, Arkadi Nemirovski, Leonid Khachiyan, Martin Grötschel, László Lovász and Alexander Schrijver for the ellipsoid method in linear progr ...

Rejection Sampling

In numerical analysis and computational statistics, rejection sampling is a basic technique used to generate observations from a distribution. It is also commonly called the acceptance-rejection method or "accept-reject algorithm" and is a type of exact simulation method. The method works for any distribution in $\mathbb{R}^m$ with a density. Rejection sampling is based on the observation that to sample a random variable in one dimension, one can perform a uniformly random sampling of the two-dimensional Cartesian graph, and keep the samples in the region under the graph of its density function. Note that this property can be extended to ''N''-dimensional functions.

Description

To visualize the motivation behind rejection sampling, imagine graphing the probability density function (PDF) of a random variable onto a large rectangular board and throwing darts at it. Assume that the darts are uniformly distributed around the board. Now remove all of the darts that are outside the area und ...
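
The dart-throwing picture can be turned into a short sketch. The target density f(x) = 2x on [0, 1], the uniform proposal, the bound of 2, and the names `target_density` and `rejection_sample` are all assumptions chosen for illustration.

```python
import random

def target_density(x):
    """Example target: f(x) = 2x on [0, 1] (an assumption for illustration)."""
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0

def rejection_sample(n, rng, bound=2.0):
    """Draw n samples from target_density using a uniform proposal on [0, 1].

    `bound` must satisfy target_density(x) <= bound for every x in [0, 1].
    """
    samples = []
    while len(samples) < n:
        x = rng.random()             # horizontal coordinate of the dart
        y = rng.uniform(0.0, bound)  # vertical coordinate of the dart
        if y <= target_density(x):   # keep darts that land under the curve
            samples.append(x)
    return samples

rng = random.Random(1)
samples = rejection_sample(50_000, rng)
mean = sum(samples) / len(samples)
# The exact mean of this target is 2/3.
```

With this target and bound, about half of the darts are kept on average, since the area under the density is 1 and the board has area 2. A looser bound wastes more proposals but leaves the output distribution unchanged.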

Markov Chain Mixing Time

In probability theory, the mixing time of a Markov chain is the time until the Markov chain is "close" to its steady state distribution. More precisely, a fundamental result about Markov chains is that a finite state irreducible aperiodic chain has a unique stationary distribution $\pi$ and, regardless of the initial state, the time-''t'' distribution of the chain converges to $\pi$ as ''t'' tends to infinity. Mixing time refers to any of several variant formalizations of the idea: how large must ''t'' be until the time-''t'' distribution is approximately $\pi$? One variant, ''total variation distance mixing time'', is defined as the smallest ''t'' such that the total variation distance of probability measures is small:

$$t_{\mathrm{mix}}(\varepsilon) = \min \left\{ t \ge 0 : \max_{x \in S} \max_{A \subseteq S} \left| \Pr(X_t \in A \mid X_0 = x) - \pi(A) \right| \le \varepsilon \right\},$$

where $S$ is the state space and $X_t$ is the state of the chain at time ''t''. Choosing a different $\varepsilon$, as long as $\varepsilon < 1/2$, can only change the mixing time up to a constant factor (depending on $\varepsilon$) and so one often fixes $\varepsilon = 1/4$ and simply writes ...
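
For a small finite chain, the total variation distance mixing time can be computed directly by iterating the transition matrix from every starting state. The sketch below uses the identity that the total variation distance between two distributions on a finite set equals half the sum of absolute differences of their probabilities. The two-state transition matrix `P`, its stationary distribution `pi`, the default threshold of 1/4, and the function names are assumptions for illustration.

```python
def step(dist, P):
    """One step of the chain: multiply the row vector dist by P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def total_variation(p, q):
    """Total variation distance between two distributions on a finite set."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def mixing_time(P, pi, eps=0.25, max_steps=10_000):
    """Smallest t such that, from every start state, the time-t distribution
    is within eps of pi in total variation distance."""
    n = len(P)
    # Row i is the time-0 distribution of the chain started in state i.
    dists = [[1.0 if j == i else 0.0 for j in range(n)] for i in range(n)]
    for t in range(max_steps + 1):
        if max(total_variation(d, pi) for d in dists) <= eps:
            return t
        dists = [step(d, P) for d in dists]
    raise ValueError("chain did not mix within max_steps")

P = [[0.7, 0.3],
     [0.1, 0.9]]
pi = [0.25, 0.75]  # stationary distribution: pi P = pi
t_mix = mixing_time(P, pi)
```

For this chain the worst-case distance is 0.75 · 0.6^t (started from the first state), which is 0.27 at t = 2 and 0.162 at t = 3, so the sketch returns 3.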

Markov Chain Monte Carlo

In statistics, Markov chain Monte Carlo (MCMC) is a class of algorithms used to draw samples from a probability distribution. Given a probability distribution, one can construct a Markov chain whose elements' distribution approximates it – that is, the Markov chain's equilibrium distribution matches the target distribution. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Markov chain Monte Carlo methods are used to study probability distributions that are too complex or too highly dimensional to study with analytic techniques alone. Various algorithms exist for constructing such Markov chains, including the Metropolis–Hastings algorithm.

General explanation

Markov chain Monte Carlo methods create samples from a continuous random variable, with probability density proportional to a known function. These samples can be used to e ...
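
A minimal sketch of the Metropolis–Hastings algorithm with a symmetric random-walk proposal is given below. It needs the target density only up to a constant factor, matching the description above. The standard normal target, the step size, the seed, and the names `log_target` and `metropolis_hastings` are assumptions for illustration.

```python
import math
import random

def log_target(x):
    """Log of an unnormalized standard normal density (illustrative target)."""
    return -0.5 * x * x

def metropolis_hastings(n_samples, rng, step_size=1.0, start=0.0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal."""
    x = start
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step_size)
        log_accept = log_target(proposal) - log_target(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if log_accept >= 0 or rng.random() < math.exp(log_accept):
            x = proposal
        # On rejection the chain stays put and repeats the current state.
        samples.append(x)
    return samples

rng = random.Random(7)
chain = metropolis_hastings(50_000, rng)
mean = sum(chain) / len(chain)
variance = sum((v - mean) ** 2 for v in chain) / len(chain)
```

Successive samples are correlated, so the chain carries less information than the same number of independent draws. In practice the early part of the chain is often discarded and the step size tuned, neither of which is done in this sketch.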