Constraint-based Grammar

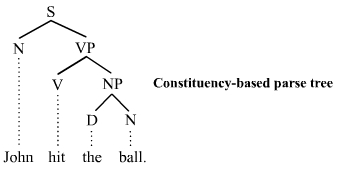

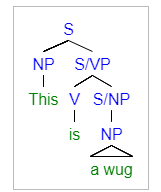

Model-theoretic grammars, also known as constraint-based grammars, contrast with generative grammars in the way they define sets of sentences: they state constraints on syntactic structure rather than providing operations for generating syntactic objects. A generative grammar provides a set of operations such as rewriting, insertion, deletion, movement, or combination, and is interpreted as a definition of the set of all and only the objects that these operations are capable of producing through iterative application. A model-theoretic grammar simply states a set of conditions that an object must meet, and can be regarded as defining the set of all and only the structures of a certain sort that satisfy all of the constraints. The approach applies the mathematical techniques of model theory to the task of syntactic description: a grammar is a theory in the logician's sense (a consistent set of statements) and the well-formed structures are the models that satisfy the theory. Histo ...
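
The contrast can be made concrete with a minimal Python sketch over a hypothetical toy fragment (the rules, the five-word lexicon, and the bound of five words on candidates are illustrative assumptions, not drawn from any published grammar). `generate` follows the generative recipe, rewriting the leftmost nonterminal until only words remain; `satisfying` follows the model-theoretic one, stating three conditions and keeping every candidate that meets all of them. For brevity the constraints are stated on category sequences rather than on trees. A real model-theoretic grammar does not enumerate candidates; the brute-force loop only makes the "all and only the structures that satisfy the constraints" definition checkable on a small, finite domain.

```python
from itertools import product

# Hypothetical toy fragment; rules, words, and the length bound are illustrative only.
RULES = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V"], ["V", "NP"]],
    "Det": [["the"]],
    "N": [["dog"], ["cat"]],
    "V": [["runs"], ["sees"]],
}
LEX = {"the": "Det", "dog": "N", "cat": "N", "runs": "V", "sees": "V"}


def generate(symbols=("S",)):
    """Generative view: rewrite the leftmost nonterminal until only words remain."""
    for i, sym in enumerate(symbols):
        if sym in RULES:
            for rhs in RULES[sym]:
                yield from generate(symbols[:i] + tuple(rhs) + symbols[i + 1:])
            return  # only the leftmost nonterminal is expanded at each step
    yield " ".join(symbols)


# Model-theoretic view: conditions that a candidate's category sequence must meet.
CONSTRAINTS = [
    lambda c: c[:2] == ["Det", "N"],        # begins with a noun phrase
    lambda c: len(c) > 2 and c[2] == "V",   # the noun phrase is followed by a verb
    lambda c: c[3:] in ([], ["Det", "N"]),  # the verb takes at most one object NP
]


def satisfying(max_len=5):
    """Every word sequence (up to max_len words) that meets all constraints."""
    out = set()
    for n in range(1, max_len + 1):
        for words in product(LEX, repeat=n):
            cats = [LEX[w] for w in words]
            if all(check(cats) for check in CONSTRAINTS):
                out.add(" ".join(words))
    return out


print(len(satisfying()))  # 12
```

Both definitions pick out the same twelve strings, which is the sense in which a set of constraints and a set of operations can describe one language.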

Generative Grammar

Generative grammar is a research tradition in linguistics that aims to explain the cognitive basis of language by formulating and testing explicit models of humans' subconscious grammatical knowledge. Generative linguists, or generativists, tend to share certain working assumptions such as the competence–performance distinction and the notion that some domain-specific aspects of grammar are partly innate in humans. These assumptions are rejected in non-generative approaches such as usage-based models of language. Generative linguistics includes work in core areas such as syntax, semantics, phonology, psycholinguistics, and language acquisition, with additional extensions to topics including biolinguistics and music cognition. Generative grammar began in the late 1950s with the work of Noam Chomsky, having roots in earlier approaches such as structural linguistics. The earliest version of Chomsky's model was called Transformational grammar, with subsequent itera ...

Lexical Functional Grammar

Lexical functional grammar (LFG) is a constraint-based grammar framework in theoretical linguistics. It posits several parallel levels of syntactic structure, including a phrase structure grammar representation of word order and constituency, and a representation of grammatical functions such as subject and object, similar to dependency grammar. The development of the theory was initiated by Joan Bresnan and Ronald Kaplan in the 1970s, in reaction to the theory of transformational grammar, which was current in the late 1970s. It mainly focuses on syntax, including its relation with morphology and semantics. There has been little LFG work on phonology (although ideas from optimality theory have recently been popular in LFG research). Some recent work combines LFG with Distributed Morphology in Lexical-Realizational Functional Grammar (Ash Asudeh, Paul B. Melchin & Daniel Siddiqi (2021), *Constraints all the way down: DM in a representational model of grammar*, in *WCCFL 39 Proc* ...)
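
LFG's functional structure can be pictured as an attribute-value matrix built from the equations that lexical entries and annotated phrase-structure rules contribute. The Python sketch below assumes a much-simplified setting: f-structures are plain nested dictionaries, the lexical entries are hypothetical, and the functional description is solved by simple unification, ignoring reentrancy, the uniqueness of semantic forms, and the completeness and coherence conditions. It shows how a number clash makes *dogs runs* fail without any rule that moves or copies material.

```python
def unify(f, g):
    """Combine two attribute-value structures; return None on a feature clash."""
    if isinstance(f, dict) and isinstance(g, dict):
        result = dict(f)
        for attr, val in g.items():
            if attr in result:
                merged = unify(result[attr], val)
                if merged is None:
                    return None  # conflicting values for the same attribute
                result[attr] = merged
            else:
                result[attr] = val
        return result
    # Atomic values (or an atom against a structure) unify only if identical.
    return f if f == g else None


# Hypothetical, heavily simplified lexical f-structures.
NOUNS = {
    "dog": {"PRED": "dog", "NUM": "sg", "PERS": 3},
    "dogs": {"PRED": "dog", "NUM": "pl", "PERS": 3},
}
VERBS = {
    "runs": {"PRED": "run<SUBJ>", "TENSE": "pres", "SUBJ": {"NUM": "sg", "PERS": 3}},
    "run": {"PRED": "run<SUBJ>", "TENSE": "pres", "SUBJ": {"NUM": "pl"}},
}


def clause(noun, verb):
    """F-structure for [S NP V]: (↑ SUBJ) = ↓ on the NP, ↑ = ↓ on the verb."""
    return unify({"SUBJ": NOUNS[noun]}, VERBS[verb])


print(clause("dog", "runs"))
print(clause("dogs", "runs"))  # None: NUM sg vs pl clash
```

The agreement requirement lives entirely in the verb's lexical entry, in keeping with LFG's lexical orientation.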

Grammar Frameworks

In linguistics, grammar is the set of rules for how a natural language is structured, as demonstrated by its speakers or writers. Grammar rules may concern the use of clauses, phrases, and words. The term may also refer to the study of such rules, a subject that includes phonology, morphology, and syntax, together with phonetics, semantics, and pragmatics. There are, broadly speaking, two different ways to study grammar: traditional grammar and theoretical grammar. Fluency in a particular language variety involves a speaker internalizing these rules, many or most of which are acquired by observing other speakers, as opposed to intentional study or instruction. Much of this internalization occurs during early childhood; learning a language later in life usually involves more direct instruction. The term *grammar* can also describe the linguistic behaviour of groups of speakers and writers rather than individuals. Differences in scale are important to this meaning: for exam ...

Grammaticality

In linguistics, grammaticality is determined by conformity to language usage as derived from the grammar of a particular speech variety. The notion of grammaticality arose alongside the theory of generative grammar, the goal of which is to formulate rules that define well-formed, grammatical sentences. These rules of grammaticality also provide explanations of ill-formed, ungrammatical sentences. In theoretical linguistics, a speaker's judgement on the well-formedness of a linguistic 'string' (called a grammaticality judgement) is based on whether the sentence is interpreted in accordance with the rules and constraints of the relevant grammar. If the rules and constraints of the particular lect are followed, then the sentence is judged to be grammatical. In contrast, an ungrammatical sentence is one that violates the rules of the given language variety. Linguists use grammaticality judgements to investigate the syntactic structure of sentences. Generative linguists are larg ...

The Cambridge Grammar Of The English Language

*The Cambridge Grammar of the English Language* (*CamGEL*) is a descriptive grammar of the English language. (The abbreviation *CamGEL* is less commonly used for the work than *CGEL*, which the authors themselves use in their other works, but *CGEL* is ambiguous because it has also often been used for the earlier *A Comprehensive Grammar of the English Language*; this article uses the unambiguous form.) Its primary authors are Rodney Huddleston and Geoffrey K. Pullum. Huddleston was the only author to work on every chapter. It was published by Cambridge University Press in 2002 and has been cited more than 8,000 times.

In 1988, Huddleston published a very critical review of the 1985 book *A Comprehensive Grammar of the English Language*. He wrote: "[T]here are some respects in which it is seriously flawed and disappointing. A number of quite basic categories and concepts do not seem to have been thought through with sufficient care; this re ..."

Constraint Handling Rules

Constraint Handling Rules (CHR) is a declarative, rule-based programming language, introduced in 1991 by Thom Frühwirth, then at the European Computer-Industry Research Centre (ECRC) in Munich, Germany (Thom Frühwirth, *Theory and Practice of Constraint Handling Rules*, Special Issue on Constraint Logic Programming (P. Stuckey and K. Marriott, Eds.), *Journal of Logic Programming*, Vol. 37(1-3), October 1998). Originally intended for constraint programming, CHR finds applications in grammar induction, type systems, abductive reasoning, multi-agent systems, natural language processing, compilation, scheduling, spatial-temporal reasoning, testing, and verification. A CHR program, sometimes called a *constraint handler*, is a set of rules that maintain a *constraint store*, a multiset of logical formulas. Execution of rules may add or remove formulas from the store, thus changing the state of the program. The order in which rules "fire" on a given constraint store is ...
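
To illustrate how rules rewrite a constraint store, consider the two-rule greatest-common-divisor program often used to introduce CHR, in its subtraction form: `gcd(0) <=> true.` and `gcd(N) \ gcd(M) <=> N =< M | gcd(M-N).` Rather than running it in a real CHR system, the sketch below hand-simulates it in Python under simplifying assumptions: the store is a list of non-negative integers standing for `gcd/1` constraints, and rules are tried in a fixed order on the first matching pair, which is only one of the execution strategies CHR's semantics permits.

```python
def run_gcd(store):
    """Rewrite a multiset of gcd/1 constraints (non-negative ints) to a fixpoint."""
    store = list(store)
    changed = True
    while changed:
        changed = False
        # gcd(0) <=> true.
        # Simplification: a gcd(0) constraint is removed from the store.
        if 0 in store:
            store.remove(0)
            changed = True
            continue
        # gcd(N) \ gcd(M) <=> N =< M | gcd(M - N).
        # Simpagation: keep gcd(N), replace gcd(M) by gcd(M - N) if the guard holds.
        for i, n in enumerate(store):
            for j, m in enumerate(store):
                if i != j and n <= m:
                    store[j] = m - n
                    changed = True
                    break
            if changed:
                break
    return store


print(run_gcd([12, 18]))    # [6]
print(run_gcd([9, 6, 15]))  # [3]
```

When no rule applies, at most one positive constraint can remain (any two would satisfy the guard one way or the other), and since subtraction preserves the greatest common divisor, that survivor is the gcd of the initial store.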

Head-driven Phrase Structure Grammar

Head-driven phrase structure grammar (HPSG) is a highly lexicalized, constraint-based grammar developed by Carl Pollard and Ivan Sag. It is a type of phrase structure grammar, as opposed to a dependency grammar, and it is the immediate successor to generalized phrase structure grammar. HPSG draws from other fields such as computer science (type systems, data type theory and knowledge representation) and uses Ferdinand de Saussure's notion of the sign. It uses a uniform formalism and is organized in a modular way, which makes it attractive for natural language processing. An HPSG grammar includes principles and grammar rules, and also lexicon entries, which are normally not considered to belong to a grammar. The formalism is based on lexicalism: the lexicon is more than just a list of entries; it is itself richly structured. Individual entries are marked with types, and the types form a hierarchy. Early versions of the grammar were very lexicalized with few grammatica ...
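
A type hierarchy of the kind HPSG uses can be sketched as a map from each type to its immediate supertypes. The Python below (the type names are hypothetical and the hierarchy is tiny) checks subsumption and computes the most general common subtype of two types, which is what unifying two typed feature structures requires at each node; incompatible types yield `None`. Appropriateness conditions on features, which real HPSG type hierarchies also declare, are omitted.

```python
# Hypothetical hierarchy: each type maps to its immediate supertypes.
PARENTS = {
    "sign": [],
    "word": ["sign"],
    "phrase": ["sign"],
    "verbal": ["sign"],
    "verb-word": ["word", "verbal"],      # multiple inheritance
    "verb-phrase": ["phrase", "verbal"],
}


def supertypes(t):
    """Return t together with every type above it in the hierarchy."""
    seen, stack = {t}, [t]
    while stack:
        for p in PARENTS[stack.pop()]:
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen


def subsumes(a, b):
    """True if type a is at least as general as type b."""
    return a in supertypes(b)


def unify_types(a, b):
    """Most general common subtype of a and b, or None if they are incompatible."""
    common = [t for t in PARENTS if subsumes(a, t) and subsumes(b, t)]
    most_general = [t for t in common
                    if not any(u != t and subsumes(u, t) for u in common)]
    # A well-formed hierarchy yields at most one answer; anything else is treated as failure.
    return most_general[0] if len(most_general) == 1 else None


print(unify_types("word", "verbal"))   # verb-word
print(unify_types("word", "phrase"))   # None
```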

Ronald Kaplan

Ronald M. Kaplan (born 1946) has served as a vice president at Amazon.com and chief scientist for Amazon Search (A9.com). He was previously vice president and distinguished scientist at Nuance Communications and director of Nuance's Natural Language and Artificial Intelligence Laboratory. Prior to that he served as chief scientist and a principal researcher at the Powerset division of Microsoft Bing. He is also an adjunct professor in the Linguistics Department at Stanford University and a principal of Stanford's Center for the Study of Language and Information (CSLI). He was previously a research fellow at the Palo Alto Research Center (formerly the Xerox Palo Alto Research Center), where he was the manager of research in Natural Language Theory and Technology. He received his bachelor's degree (1968) in mathematics and language behavior from the University of California, Berkeley, and his master's (1970) and Ph.D. (1975) in social psychology from Harvard University. As a g ...

Generalized Phrase Structure Grammar

Generalized phrase structure grammar (GPSG) is a framework for describing the syntax and semantics of natural languages. It is a type of constraint-based phrase structure grammar. Constraint-based grammars work by defining certain syntactic processes as ungrammatical for a given language and assuming that everything not thus excluded is grammatical within that language. Phrase structure grammars base their framework on constituency relationships, seeing the words in a sentence as ranked, with some words dominating others. For example, in the sentence "The dog runs", "runs" is seen as dominating "dog" since it is the main focus of the sentence. This view stands in contrast to dependency grammars, which base their assumed structure on the relationship between a single word in a sentence (the sentence head) and its dependents.

GPSG was initially developed in the late 1970s by Gerald Gazdar. Other contributors include Ewan Klein, Ivan Sag, and Geoffrey Pullum. ...
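
GPSG states phrase structure in immediate dominance/linear precedence (ID/LP) format: ID rules fix a mother and an unordered set of daughters, and LP statements say which sisters must precede which. The Python sketch below illustrates the exclusion-based style of constraint-based grammar at the level of a single local tree: every ordering of the daughters is admitted unless some LP statement rules it out. The category labels and LP statements are hypothetical, and GPSG's features, metarules, and feature instantiation principles are left out.

```python
from itertools import permutations

# Hypothetical LP statements: (a, b) means "a precedes b" whenever both are sisters.
LP = {("Det", "N"), ("NP", "VP")}


def violates(order, lp=LP):
    """True if some LP statement is contradicted by this ordering of sisters."""
    pos = {cat: i for i, cat in enumerate(order)}
    return any(a in pos and b in pos and pos[a] > pos[b] for a, b in lp)


def licensed_orders(daughters, lp=LP):
    """Orderings of an ID rule's daughters that no LP statement excludes."""
    return [order for order in permutations(daughters) if not violates(order, lp)]


print(licensed_orders(["N", "Det"]))            # [('Det', 'N')]
print(len(licensed_orders(["V", "NP", "PP"])))  # 6: no LP statement mentions these
```

Nothing in the sketch lists the admissible orders directly; they are whatever survives the stated restrictions.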

Model Theory

In mathematical logic, model theory is the study of the relationship between formal theories (collections of sentences in a formal language expressing statements about a mathematical structure) and their models (those structures in which the statements of the theory hold). The aspects investigated include the number and size of models of a theory, the relationship of different models to each other, and their interaction with the formal language itself. In particular, model theorists also investigate the sets that can be defined in a model of a theory, and the relationship of such definable sets to each other. As a separate discipline, model theory goes back to Alfred Tarski, who first used the term "Theory of Models" in publication in 1954. Since the 1970s, the subject has been shaped decisively by Saharon Shel ...
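
The satisfaction relation at the heart of model theory can be sketched for a tiny first-order language with one binary relation symbol `R`. In the Python below, sentences are nested tuples (an encoding assumed purely for illustration), a structure is a finite domain paired with the set of pairs that interpret `R`, and a structure is a model of a theory when it satisfies every sentence of the theory. Brute-force evaluation like this only works for finite structures, whereas model theory routinely deals with infinite ones. The example theory axiomatizes strict orders.

```python
def holds(M, phi, env=None):
    """Tarski-style satisfaction: does structure M satisfy phi under assignment env?"""
    dom, R = M
    env = env or {}
    op = phi[0]
    if op == "R":          # atomic formula R(x, y)
        return (env[phi[1]], env[phi[2]]) in R
    if op == "not":
        return not holds(M, phi[1], env)
    if op == "and":
        return holds(M, phi[1], env) and holds(M, phi[2], env)
    if op == "implies":
        return not holds(M, phi[1], env) or holds(M, phi[2], env)
    if op == "forall":     # try every domain element as the variable's value
        return all(holds(M, phi[2], {**env, phi[1]: d}) for d in dom)
    if op == "exists":
        return any(holds(M, phi[2], {**env, phi[1]: d}) for d in dom)
    raise ValueError(f"unknown connective: {op!r}")


def is_model(M, theory):
    """A structure is a model of a theory if it satisfies every sentence in it."""
    return all(holds(M, sentence) for sentence in theory)


# Hypothetical example theory: the axioms of a strict order.
IRREFLEXIVE = ("forall", "x", ("not", ("R", "x", "x")))
TRANSITIVE = ("forall", "x", ("forall", "y", ("forall", "z",
              ("implies", ("and", ("R", "x", "y"), ("R", "y", "z")), ("R", "x", "z")))))
STRICT_ORDER = [IRREFLEXIVE, TRANSITIVE]

dom = set(range(3))
less = (dom, {(a, b) for a in dom for b in dom if a < b})
less_eq = (dom, {(a, b) for a in dom for b in dom if a <= b})
cycle = (dom, {(0, 1), (1, 2), (2, 0)})

print(is_model(less, STRICT_ORDER))     # True
print(is_model(less_eq, STRICT_ORDER))  # False: R(0, 0) holds
print(is_model(cycle, STRICT_ORDER))    # False: R(0, 1), R(1, 2) but not R(0, 2)
```

The same idea underlies model-theoretic grammar: the grammar plays the role of the theory, and the well-formed structures are exactly its models.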