
Concurrent Data Structure

In computer science, a concurrent data structure (also called a shared data structure) is a data structure designed for access and modification by multiple computing threads (or processes or nodes) on a computer; examples include concurrent queues and concurrent stacks. The concurrent data structure is typically considered to reside in an abstract storage environment known as shared memory, which may be physically implemented as either a tightly coupled or a distributed collection of storage modules. Basic principles: Concurrent data structures, intended for use in parallel or distributed computing environments, differ from "sequential" data structures, intended for use on a uni-processor machine, in several ways. Most notably, in a sequential environment one specifies the data structure's properties and checks that they are implemented correctly, by providing safety properties. In a concurrent environment, the specification must also describe liveness properties which an impl ...
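
As a rough illustration of a concurrent queue, the sketch below lets several threads push into one shared queue. It assumes Python's standard-library `queue.Queue` as the shared structure; the function name `fill_concurrently` and its parameters are hypothetical, chosen only for this example.

```python
import queue
import threading


def fill_concurrently(n_producers=4, items_per_producer=1000):
    """Have several threads push into one shared queue; return what was collected."""
    shared = queue.Queue()  # thread-safe FIFO queue from the standard library

    def producer(producer_id):
        for i in range(items_per_producer):
            shared.put((producer_id, i))

    threads = [
        threading.Thread(target=producer, args=(pid,))
        for pid in range(n_producers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Every producer has finished, so the queue can be drained without blocking.
    collected = []
    while not shared.empty():
        collected.append(shared.get())
    return collected


if __name__ == "__main__":
    items = fill_concurrently()
    print(len(items))  # 4000: no item is lost despite concurrent puts
```

The interleaving of items from different producers varies from run to run, but no item is lost and each producer's own items stay in the order it inserted them.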

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines (such as algorithms, theory of computation, and information theory) to applied disciplines (including the design and implementation of hardware and software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ...

Synchronization (computer Science)

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of actions. Motivation: The need for synchronization arises not only in multi-processor systems but for any kind of concurrent processes, even in single-processor systems. Some of the main needs for synchronization are listed below. Forks and joins: When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until all other sub-jobs are done processing. Then, they are joined again and leave the system. Thus, parallel programming requires synchronization, as all the parallel processes wait for several other processes to occur. Producer-consumer: In a producer-consumer relationship, the consumer process is dependent on the producer process until the necessary data has been produced. Exclusiv ...
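
The fork-and-join pattern described above can be sketched as follows. This is a minimal example that assumes Python's standard `concurrent.futures.ThreadPoolExecutor`; the function `fork_join` and its parameters are hypothetical, and summing a list simply stands in for real work.

```python
from concurrent.futures import ThreadPoolExecutor


def fork_join(job, n_subjobs, n_workers):
    """Split `job` (a list of numbers) into sub-jobs, sum each one, then join."""
    # Fork: slice the job into n_subjobs chunks.
    chunks = [job[i::n_subjobs] for i in range(n_subjobs)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Consuming the results of map() waits for every sub-job: the join point.
        partial_sums = list(pool.map(sum, chunks))
    # Join: combine the sub-results into the final answer.
    return sum(partial_sums)


if __name__ == "__main__":
    print(fork_join(list(range(1, 101)), n_subjobs=4, n_workers=2))  # 5050
```

Here the N sub-jobs are the four chunks and the n tasks are the two worker threads; the final sum is only computed once every sub-job has finished.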

Doug Lea

Douglas S. Lea is a professor of computer science and (as of 2025) head of the computer science department at State University of New York at Oswego, where he specializes in concurrent programming and the design of concurrent data structures. He was on the Executive Committee of the Java Community Process and chaired JSR 166, which added concurrency utilities to the Java programming language (see Java concurrency). On October 22, 2010, Doug Lea notified the Java Community Process Executive Committee that he would not stand for reelection. Lea was re-elected as an at-large member for the 2012 OpenJDK governing board. Publications: He wrote *Concurrent Programming in Java: Design Principles and Patterns*, one of the first books about the subject. In 2000, a second edition was released. He is also the author of dlmalloc, a widely used public-domain implementation of malloc. Awards: In 2010, he won the senior Dahl-Nygaard Prize. In 2013, he became a Fellow of the Association for Co ...

Hagit Attiya

Hagit Attiya is an Israeli computer scientist who holds the Harry W. Labov and Charlotte Ullman Labov Academic Chair of Computer Science at the Technion – Israel Institute of Technology in Haifa, Israel. Her research is in the area of distributed computing. Education and career: Attiya was educated at the Hebrew University of Jerusalem, earning a B.S. in mathematics and computer science in 1981, a master's degree from the same university in 1983, and a doctorate in 1987, under the supervision of Danny Dolev. After postdoctoral studies, she joined the Technion faculty in 1990.

Nancy Lynch

Nancy Ann Lynch (born January 19, 1948) is a computer scientist affiliated with the Massachusetts Institute of Technology. She is the NEC Professor of Software Science and Engineering in the EECS department and heads the "Theory of Distributed Systems" research group at MIT's Computer Science and Artificial Intelligence Laboratory. Education and early life: Lynch was born in Brooklyn, and her academic training was in mathematics. She attended Brooklyn College and MIT, where she received her Ph.D. in 1972 under the supervision of Albert R. Meyer. Work: She served on the math and computer science faculty at several other universities, including Tufts University, the University of Southern California, Florida International University, and the Georgia Institute of Technology (Georgia Tech), prior to joining the MIT faculty in 1982. Since then, she has been working on applying mathematics to the tasks of understanding and constructing complex distributed systems. Her 1985 work wit ...

Java ConcurrentMap

The Java programming language's Java Collections Framework version 1.5 and later defines and implements the original regular single-threaded Maps, and also new thread-safe Maps implementing the java.util.concurrent.ConcurrentMap interface among other concurrent interfaces. In Java 1.6, the java.util.NavigableMap interface was added, extending java.util.SortedMap, and the java.util.concurrent.ConcurrentNavigableMap interface was added as a subinterface combination. Java Map interfaces: Sets can be considered sub-cases of corresponding Maps in which the values are always a particular constant which can be ignored, although the Set API uses corresponding but differently named methods. At the bottom of the version 1.8 Map interface hierarchy is the java.util.concurrent.ConcurrentNavigableMap, which inherits from more than one interface. Implementations: ConcurrentHashMap: For unordered access as defined in the java.util.Map interface, the java.util.concurrent.ConcurrentHashMap implements java.util.concurrent.ConcurrentMap. The mechanism is a hash access t ...

Thread Safety

In multi-threaded computer programming, a function is thread-safe when it can be invoked or accessed concurrently by multiple threads without causing unexpected behavior, race conditions, or data corruption. In a multi-threaded context, a program executes several threads simultaneously in a shared address space and each of those threads has access to every other thread's memory, so thread-safe functions need to ensure that all those threads behave properly and fulfill their design specifications without unintended interaction. There are various strategies for making thread-safe data structures. Levels of thread safety: Different vendors use slightly different terminology for thread safety, but the most commonly used terms are:

* Not thread safe: Data structures should not be accessed simultaneously by different threads.
* Thread safe, serialization: Uses a single mutex for all resources to guarantee the thread to be free of race conditions when those reso ...
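
One common strategy, serializing access with a single mutex, might look like the sketch below. The class `SafeCounter` and the helper `count_in_parallel` are hypothetical names used only for illustration; `threading.Lock` is Python's standard mutex.

```python
import threading


class SafeCounter:
    """Counter whose updates are serialized by a single mutex."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The read-modify-write is a critical section: only one thread at a time.
        with self._lock:
            self._value += 1

    def value(self):
        with self._lock:
            return self._value


def count_in_parallel(n_threads=4, increments_per_thread=10_000):
    """Increment one shared counter from several threads and return the total."""
    counter = SafeCounter()

    def worker():
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value()


if __name__ == "__main__":
    print(count_in_parallel())  # 40000
```

Because every increment holds the lock, no update can be lost, at the cost of threads waiting on each other whenever they contend for the counter.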

Rust (programming Language)

Rust is a general-purpose programming language emphasizing performance, type safety, and concurrency. It enforces memory safety, meaning that all references point to valid memory. It does so without a conventional garbage collector; instead, memory safety errors and data races are prevented by the "borrow checker", which tracks the object lifetime of references at compile time. Rust does not enforce a programming paradigm, but was influenced by ideas from functional programming, including immutability, higher-order functions, algebraic data types, and pattern matching. It also supports object-oriented programming via structs, enums, traits, and methods. It is popular for systems programming. Software developer Graydon Hoare created Rust as a personal project while working at ...

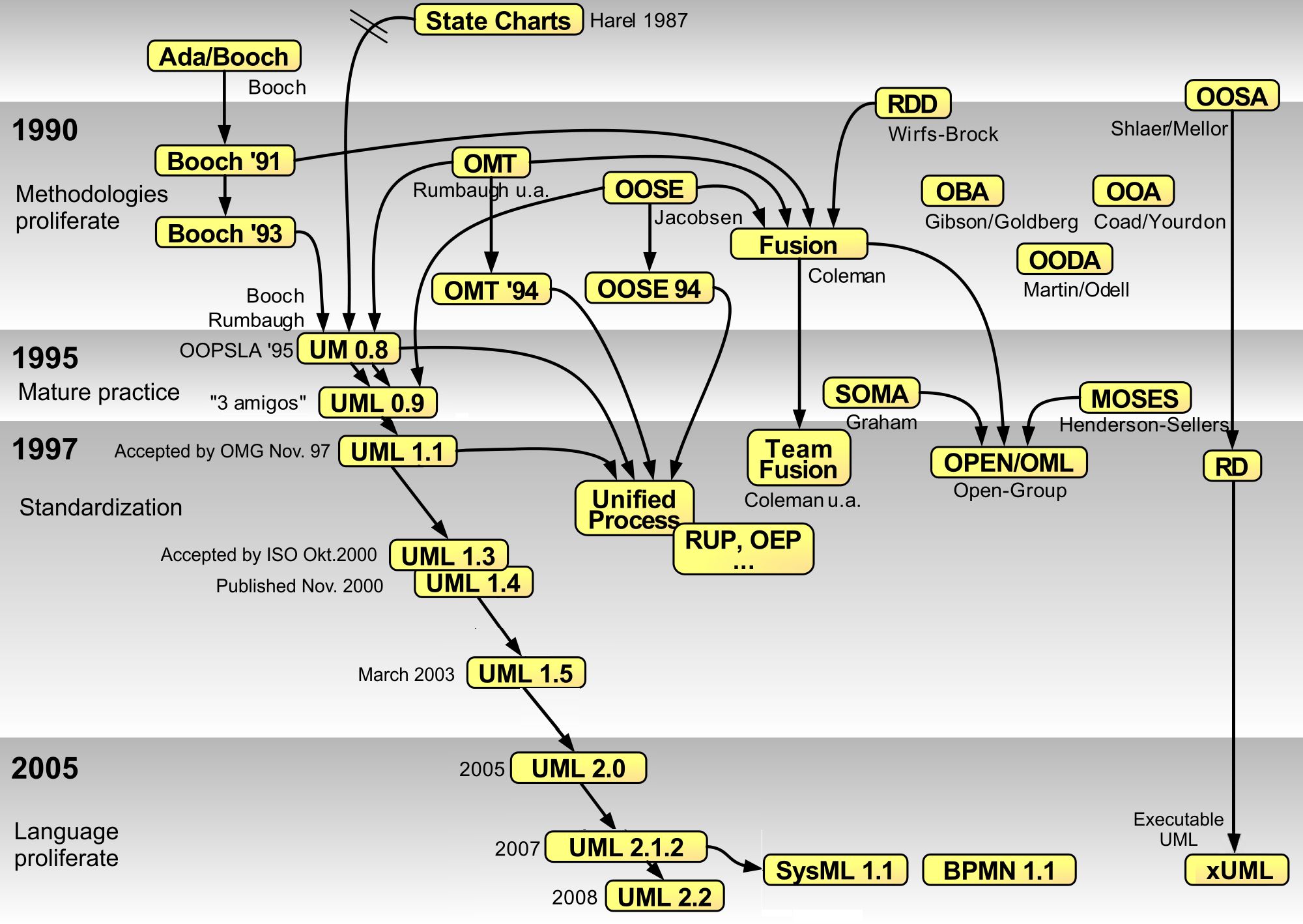

UML Dotnet Concurrent

The Unified Modeling Language (UML) is a general-purpose visual modeling language that is intended to provide a standard way to visualize the design of a system. UML provides a standard notation for many types of diagrams which can be roughly divided into three main groups: behavior diagrams, interaction diagrams, and structure diagrams. The creation of UML was originally motivated by the desire to standardize the disparate notational systems and approaches to software design. It was developed at Rational Software in 1994–1995, with further development led by them through 1996. In 1997, UML was adopted as a standard by the Object Management Group (OMG) and has been managed by this organization ever since. In 2005, UML was also published by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) as the ISO/IEC 19501 standard. Since then the standard has been periodically revised to cover the latest revision of UML. In ...

Cache Coherence

In computer architecture, cache coherence is the uniformity of shared resource data that is stored in multiple local caches. In a cache coherent system, if multiple clients have a cached copy of the same region of a shared memory resource, all copies are the same. Without cache coherence, a change made to the region by one client may not be seen by others, and errors can result when the data used by different clients is mismatched. A cache coherence protocol is used to maintain cache coherency. The two main types are snooping and directory-based protocols. Cache coherence is of particular relevance in multiprocessing systems, where each CPU may have its own local cache of a shared memory resource. Overview: In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is c ...

Gustafson's Law

In computer architecture, Gustafson's law (or Gustafson–Barsis's law) gives the speedup in the execution time of a task that theoretically gains from parallel computing, using a hypothetical run of *the task* on a single-core machine as the baseline. To put it another way, it is the theoretical "slowdown" of an *already parallelized* task if running on a serial machine. It is named after computer scientist John L. Gustafson and his colleague Edwin H. Barsis, and was presented in the article *Reevaluating Amdahl's Law* in 1988. Definition: Gustafson estimated the speedup S of a program gained by using parallel computing as follows:

$$
\begin{aligned}
S &= s + p \times N \\
  &= s + (1 - s) \times N \\
  &= N + (1 - N) \times s
\end{aligned}
$$

where

* S is the theoretical speedup of the program with parallelism (scaled speedup);
* N is the number of processors;
* s and p are the fractions of time spent executing the serial parts and the parallel parts of the program, respectively, on the ...
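
To make the formula concrete, here is a small sketch that evaluates S = N + (1 - N) × s. The function name `gustafson_speedup` and its argument names are illustrative assumptions, not part of any library.

```python
def gustafson_speedup(serial_fraction, n_processors):
    """Scaled speedup S = N + (1 - N) * s from Gustafson's law."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie in [0, 1]")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    return n_processors + (1 - n_processors) * serial_fraction


if __name__ == "__main__":
    # With 25% serial time on 8 processors: 8 + (1 - 8) * 0.25 = 6.25
    print(gustafson_speedup(0.25, 8))  # 6.25
```

A fully serial program (s = 1) gives S = 1 regardless of N, while a fully parallel one (s = 0) gives S = N.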

Amdahl's Law

In computer architecture, Amdahl's law (or Amdahl's argument) is a formula that shows how much faster a task can be completed when more resources are added to the system. The law can be stated as: "the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used". It is named after computer scientist Gene Amdahl, and was presented at the American Federation of Information Processing Societies (AFIPS) Spring Joint Computer Conference in 1967. Amdahl's law is often used in parallel computing to predict the theoretical speedup when using multiple processors. Definition: In the context of Amdahl's law, speedup can be defined as:

$$
\text{Speedup} = \frac{\text{Performance for the entire task when improvements are applied}}{\text{Performance for the entire task without improvements}}
$$

or

$$
\text{Speedup} = \frac{\text{Execution time for the entire task without improvements}}{\text{Execution time for the entire task when improvements are applied}}
$$

Amdahl's law can be formulated in the following way:

$$
\text{Speedup}_\text{overall} = \frac{1}{(1 - \text{time}_\text{optimized}) + \frac{\text{time}_\text{optimized}}{\text{speedup}_\text{optimized}}}
$$

where

* $\text{Speedup}_\text{overall}$ represents the total speedup of a program
* $\text{time}_\text{optimized}$ represents the proportion of time spent on the portion of the code where improve ...
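
As a worked illustration, the sketch below evaluates Amdahl's law in its standard form, overall speedup = 1 / ((1 - p) + p / s), where p is the fraction of time spent in the improved part and s is the speedup of that part. The function and parameter names are assumptions made for this example.

```python
def amdahl_speedup(optimized_fraction, optimized_speedup):
    """Overall speedup 1 / ((1 - p) + p / s) from Amdahl's law."""
    if not 0.0 <= optimized_fraction <= 1.0:
        raise ValueError("optimized_fraction must lie in [0, 1]")
    if optimized_speedup <= 0:
        raise ValueError("optimized_speedup must be positive")
    remaining = 1.0 - optimized_fraction
    return 1.0 / (remaining + optimized_fraction / optimized_speedup)


def amdahl_limit(optimized_fraction):
    """Upper bound on the overall speedup as the improved part becomes infinitely fast."""
    if not 0.0 <= optimized_fraction < 1.0:
        raise ValueError("optimized_fraction must lie in [0, 1)")
    return 1.0 / (1.0 - optimized_fraction)


if __name__ == "__main__":
    # 75% of the run time is sped up 3x: 1 / (0.25 + 0.75 / 3) = 2.0
    print(amdahl_speedup(0.75, 3))  # 2.0
    # Even with unlimited speedup of that 75%, the bound is 1 / 0.25 = 4.0
    print(amdahl_limit(0.75))  # 4.0
```

The second function shows why the untouched part dominates: however fast the improved 75% becomes, the remaining 25% caps the overall gain at 4x.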