
Changepoint Detection

In statistical analysis, change detection or change point detection tries to identify times when the probability distribution of a stochastic process or time series changes. In general the problem concerns both detecting whether or not a change has occurred, or whether several changes might have occurred, and identifying the times of any such changes. Specific applications, like step detection and edge detection, may be concerned with changes in the mean, variance, correlation, or spectral density of the process. More generally change detection also includes the detection of anomalous behavior: anomaly detection. In ''offline'' change point detection it is assumed that a sequence of length T is available and the goal is to identify whether any change point(s) occurred in the series. This is an example of post hoc analysis and is often approached using hypothesis testing methods. By contrast, ''online'' change point detection is concerned with detecting change points in an inco ...
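As a rough illustration of the offline setting described above, the following sketch scans every possible split of a fixed-length series and keeps the one that minimises the total within-segment squared error, which targets a single change in the mean. The function name `best_mean_split` and the toy series are assumptions made for this example. The sketch only locates the most likely split; deciding whether a change occurred at all would still need a hypothesis test or a penalty term.

```python
def best_mean_split(x):
    """Return (k, cost): the split x[:k] | x[k:] with the smallest
    total within-segment squared error around each segment mean."""
    n = len(x)
    if n < 2:
        raise ValueError("need at least two observations")

    # Prefix sums of values and squared values, so that the squared
    # error of any segment can be computed in constant time.
    s, s2 = [0.0], [0.0]
    for v in x:
        s.append(s[-1] + v)
        s2.append(s2[-1] + v * v)

    def sse(i, j):
        # Sum of squared deviations from the mean over x[i:j].
        total = s[j] - s[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    best_k, best_cost = None, float("inf")
    for k in range(1, n):  # both segments must be non-empty
        cost = sse(0, k) + sse(k, n)
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k, best_cost


# Toy series (illustrative only): the mean shifts after the fourth value.
series = [1.0, 1.2, 0.9, 1.1, 5.0, 5.2, 4.9, 5.1]
k, cost = best_mean_split(series)
print(k, round(cost, 3))
```

On this toy series the selected split falls at index 4, the first observation of the higher-mean segment.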

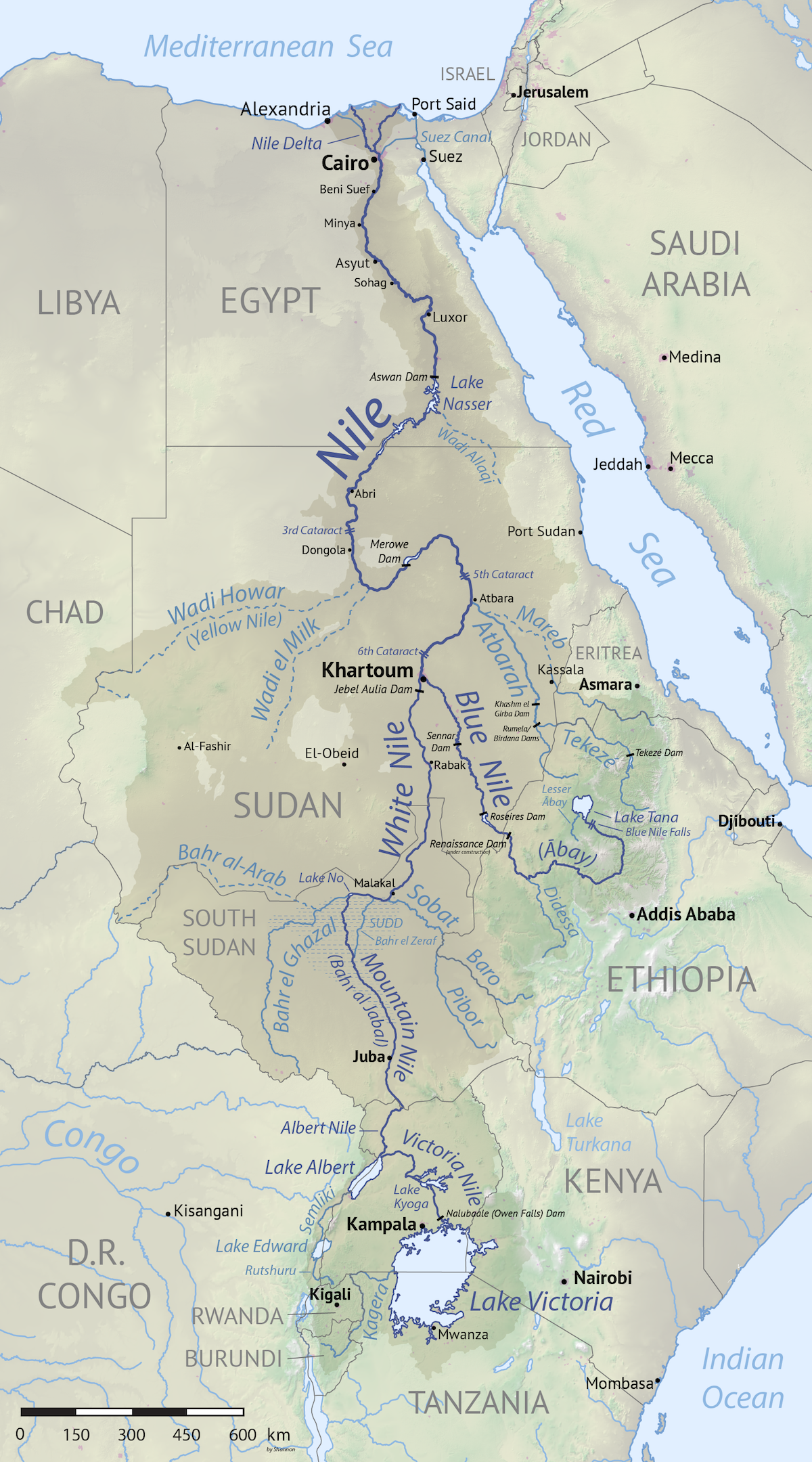

Nile Discharge Data

The Nile (also known as the Nile River or River Nile) is a major north-flowing river in northeastern Africa. It flows into the Mediterranean Sea. The Nile is the longest river in Africa. It has historically been considered the longest river in the world, though this has been contested by research suggesting that the Amazon River is slightly longer. Of the world's major rivers, the Nile has one of the lowest average annual flow rates. Its drainage basin covers eleven countries: the Democratic Republic of the Congo, Tanzania, Burundi, Rwanda, Uganda, Kenya, Ethiopia, Eritrea, South Sudan, Sudan, and Egypt. In pa ...

False Positives And False Negatives

A false positive is an error in binary classification in which a test result incorrectly indicates the presence of a condition (such as a disease when the disease is not present), while a false negative is the opposite error, where the test result incorrectly indicates the absence of a condition when it is actually present. These are the two kinds of errors in a binary test, in contrast to the two kinds of correct result (a true positive and a true negative). They are also known in medicine as a false positive (or false negative) diagnosis, and in statistical classification as a false positive (or false negative) error. In statistical hypothesis testing, the analogous concepts are known as type I and type II errors, where a positive result corresponds to rejecting the null hypothesis, and a negative result corresponds to not rejecting the null hypothesis. The terms are often used interchangeably, but there are differences in detail and interpretation due to the differences between medical testing and st ...
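The four outcomes of a binary test can be tallied directly. The sketch below is a minimal illustration; the function name `confusion_counts` and the sample labels are assumptions made for this example, not part of any particular library.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for boolean labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for a, p in zip(actual, predicted):
        if p and a:
            counts["tp"] += 1   # condition present, test says present
        elif p and not a:
            counts["fp"] += 1   # condition absent, test says present
        elif not p and a:
            counts["fn"] += 1   # condition present, test says absent
        else:
            counts["tn"] += 1   # condition absent, test says absent
    return counts


# Illustrative labels only.
actual = [True, True, False, False, False, True]
predicted = [True, False, True, False, False, True]
counts = confusion_counts(actual, predicted)
print(counts)
```

In hypothesis-testing terms, the `fp` count corresponds to type I errors and the `fn` count to type II errors.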

Spam Filtering

Spam most often refers to:

* Spam (food), a consumer brand product of canned processed pork of the Hormel Foods Corporation
* Spamming, unsolicited or undesired electronic messages
  * Email spam, unsolicited, undesired, or illegal email messages
  * Messaging spam, spam targeting users of instant messaging (IM) services, SMS or private messages within websites

Art and entertainment

* Spam (gaming), the repetition of an in-game action
* "Spam" (Monty Python sketch), a comedy sketch
* "Spam", a song on the album ''It Means Everything'' (1997), by Save Ferris
* "Spam", a song by "Weird Al" Yankovic on the album ''UHF – Original Motion Picture Soundtrack and Other Stuff''
* Spam Museum, a museum in Austin, Minnesota, US dedicated to the canned pork meat product

Other uses

* Smooth-particle applied mechanics, the use of smoothed-particle hydrodynamics computation to study impact fractures in solids
* SPAM, a Bacterial phyla#Uncultivated Phyla and metag ...

Intrusion Detection

An intrusion detection system (IDS) is a device or software application that monitors a network or systems for malicious activity or policy violations. Any intrusion activity or violation is typically either reported to an administrator or collected centrally using a security information and event management (SIEM) system. A SIEM system combines outputs from multiple sources and uses alarm filtering techniques to distinguish malicious activity from false alarms. IDS types range in scope from single computers to large networks. The most common classifications are network intrusion detection systems (NIDS) and host-based intrusion detection systems (HIDS). A system that monitors important operating system files is an example of an HIDS, while a system that analyzes incoming network traffic is an example of an NIDS. It is also possible to classify IDS by detection approach. The most well-known variants are signature-based detection (recognizing bad patterns, such as exploitatio ...

Quality Control

Quality control (QC) is a process by which entities review the quality of all factors involved in production. ISO 9000 defines quality control as "a part of quality management focused on fulfilling quality requirements". This approach places emphasis on three aspects (enshrined in standards such as ISO 9001):

1. Elements such as controls, job management, defined and well managed processes, performance and integrity criteria, and identification of records
2. Competence, such as knowledge, skills, experience, and qualifications
3. Soft elements, such as personnel, integrity, confidence, organizational culture, motivation, team spirit, and quality relationships.

Inspection is a major component of quality control, where physical product is examined visually (or the end results of a service are analyzed). Product inspectors will be provided with lists and descriptions of unacceptable product defects, such as cracks or surface blemishes. History and introductio ...

Bayesian Information Criterion

In statistics, the Bayesian information criterion (BIC) or Schwarz information criterion (also SIC, SBC, SBIC) is a criterion for model selection among a finite set of models; models with lower BIC are generally preferred. It is based, in part, on the likelihood function and it is closely related to the Akaike information criterion (AIC). When fitting models, it is possible to increase the maximum likelihood by adding parameters, but doing so may result in overfitting. Both BIC and AIC attempt to resolve this problem by introducing a penalty term for the number of parameters in the model; the penalty term is larger in BIC than in AIC for sample sizes greater than 7. The BIC was developed by Gideon E. Schwarz and published in a 1978 paper, as a large-sample approximation to the Bayes factor. Definition: the BIC is formally defined as $\mathrm{BIC} = k\ln(n) - 2\ln(\hat L)$, where $\hat L$ is the maximized value of the likelihood function of the model $M$, i.e. \hat L=p(x\mid\wid ...
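A short sketch may help make the penalty comparison concrete. It assumes the usual reading of the symbols, with k the number of estimated parameters and n the sample size, and it uses the standard AIC form 2k - 2 ln(L̂), which the excerpt above does not state. The function names and the log-likelihood value are illustrative assumptions.

```python
import math


def bic(k, n, log_likelihood):
    """BIC = k * ln(n) - 2 * ln(L_hat), given the maximized log-likelihood."""
    return k * math.log(n) - 2.0 * log_likelihood


def aic(k, log_likelihood):
    """AIC = 2 * k - 2 * ln(L_hat) (standard form, assumed here)."""
    return 2.0 * k - 2.0 * log_likelihood


# The per-parameter penalty is ln(n) for BIC and 2 for AIC, so BIC
# penalizes more heavily once ln(n) > 2, i.e. from n = 8 upwards.
loglik = -10.0  # illustrative maximized log-likelihood
for n in (7, 8):
    print(n, bic(2, n, loglik) > aic(2, loglik))
```

The loop reports whether the BIC exceeds the AIC for the same fit at n = 7 and n = 8, which brackets the crossover at ln(n) = 2.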

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information crite ...

Nile River Flow Bayesian Changepoint Detection

The Nile (also known as the Nile River or River Nile) is a major north-flowing river in northeastern Africa. It flows into the Mediterranean Sea. The Nile is the longest river in Africa. It has historically been considered the longest river in the world, though this has been contested by research suggesting that the Amazon River is slightly longer. Of the world's major rivers, the Nile has one of the lowest average annual flow rates. Its drainage basin covers eleven countries: the ...

Mathematical Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems Opti ...

Maximum Likelihood Estimation

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when ...
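One case where the first-order conditions can be solved analytically is a normal distribution with unknown mean and variance: the maximum likelihood estimates are the sample mean and the mean squared deviation (dividing by n, not n - 1). The sketch below assumes that model; the function names and data are illustrative only.

```python
import math


def gaussian_log_likelihood(data, mu, var):
    """Log-likelihood of i.i.d. normal data with mean mu and variance var."""
    n = len(data)
    ss = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2.0 * math.pi * var) - ss / (2.0 * var)


def gaussian_mle(data):
    """Closed-form maximum likelihood estimates (mean, variance)."""
    n = len(data)
    mu = sum(data) / n
    var = sum((x - mu) ** 2 for x in data) / n  # divides by n, not n - 1
    return mu, var


data = [2.0, 4.0, 4.0, 6.0]  # illustrative observations
mu_hat, var_hat = gaussian_mle(data)
best = gaussian_log_likelihood(data, mu_hat, var_hat)

# Moving either parameter away from the estimate lowers the likelihood.
print(best > gaussian_log_likelihood(data, mu_hat + 0.5, var_hat))
print(best > gaussian_log_likelihood(data, mu_hat, var_hat * 1.5))
```

The two comparisons at the end are a sanity check, not a proof: they only confirm that nearby parameter values give a lower log-likelihood on this data.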

Cluster Analysis

Cluster analysis, or clustering, is a data analysis technique for grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some specific sense defined by the analyst) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis refers to a family of algorithms and tasks rather than one specific algorithm. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or pa ...

Two-phase Regression

Segmented regression, also known as piecewise regression or broken-stick regression, is a method in regression analysis in which the independent variable is partitioned into intervals and a separate line segment is fit to each interval. Segmented regression analysis can also be performed on multivariate data by partitioning the various independent variables. Segmented regression is useful when the independent variables, clustered into different groups, exhibit different relationships between the variables in these regions. The boundaries between the segments are ''breakpoints''. Segmented linear regression is segmented regression whereby the relations in the intervals are obtained by linear regression. Segmented linear regression, two segments Segmented linear regression with two segments separated by a ''breakpoint'' can be useful to quantify an abrupt change of the response function (Yr) of a varying influential factor (x). The breakpoint can be interpreted as a ''critica ...
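The two-segment case can be sketched as a brute-force search: try each candidate breakpoint, fit an ordinary least squares line on either side, and keep the breakpoint with the smallest combined squared error. This is one simple way to do it, not necessarily the procedure the article goes on to describe. The helper names, the `min_points` parameter, and the data are assumptions made for this example, and the x values are assumed to be sorted and distinct.

```python
def fit_line(xs, ys):
    """Ordinary least squares line; returns (slope, intercept, sse)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, sse


def two_segment_fit(xs, ys, min_points=2):
    """Return (k, left_fit, right_fit) minimising the combined squared
    error, where the segments are xs[:k] and xs[k:]."""
    best = None
    for k in range(min_points, len(xs) - min_points + 1):
        left = fit_line(xs[:k], ys[:k])
        right = fit_line(xs[k:], ys[k:])
        total = left[2] + right[2]
        if best is None or total < best[0]:
            best = (total, k, left, right)
    if best is None:
        raise ValueError("not enough points for two segments")
    return best[1], best[2], best[3]


# Illustrative data: flat response up to x = 4, then a jump and slope 2.
xs = [float(x) for x in range(10)]
ys = [1.0, 1.0, 1.0, 1.0, 1.0, 4.0, 6.0, 8.0, 10.0, 12.0]
k, left, right = two_segment_fit(xs, ys)
print(k, round(left[0], 3), round(right[0], 3))
```

On this data the breakpoint lands at index 5, with a slope of about 0 on the left and about 2 on the right.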