Canonical Analysis

In statistics, canonical analysis (from Ancient Greek κανών, "bar, measuring rod, ruler") belongs to the family of regression methods for data analysis. Regression analysis quantifies a relationship between a predictor variable and a criterion variable by the coefficient of correlation r, coefficient of determination r², and the standard regression coefficient β. Multiple regression analysis expresses a relationship between a set of predictor variables and a single criterion variable by the multiple correlation R, multiple coefficient of determination R², and a set of standard partial regression weights β₁, β₂, etc. Canonical variate analysis captures a relationship between a set of predictor variables and a set of criterion variables by the canonical correlations ρ₁, ρ₂, ..., and by the sets of canonical weights C and D. Canonical analysis belongs to a group of methods which involve solving the characteristic equation for its latent roots a ...

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

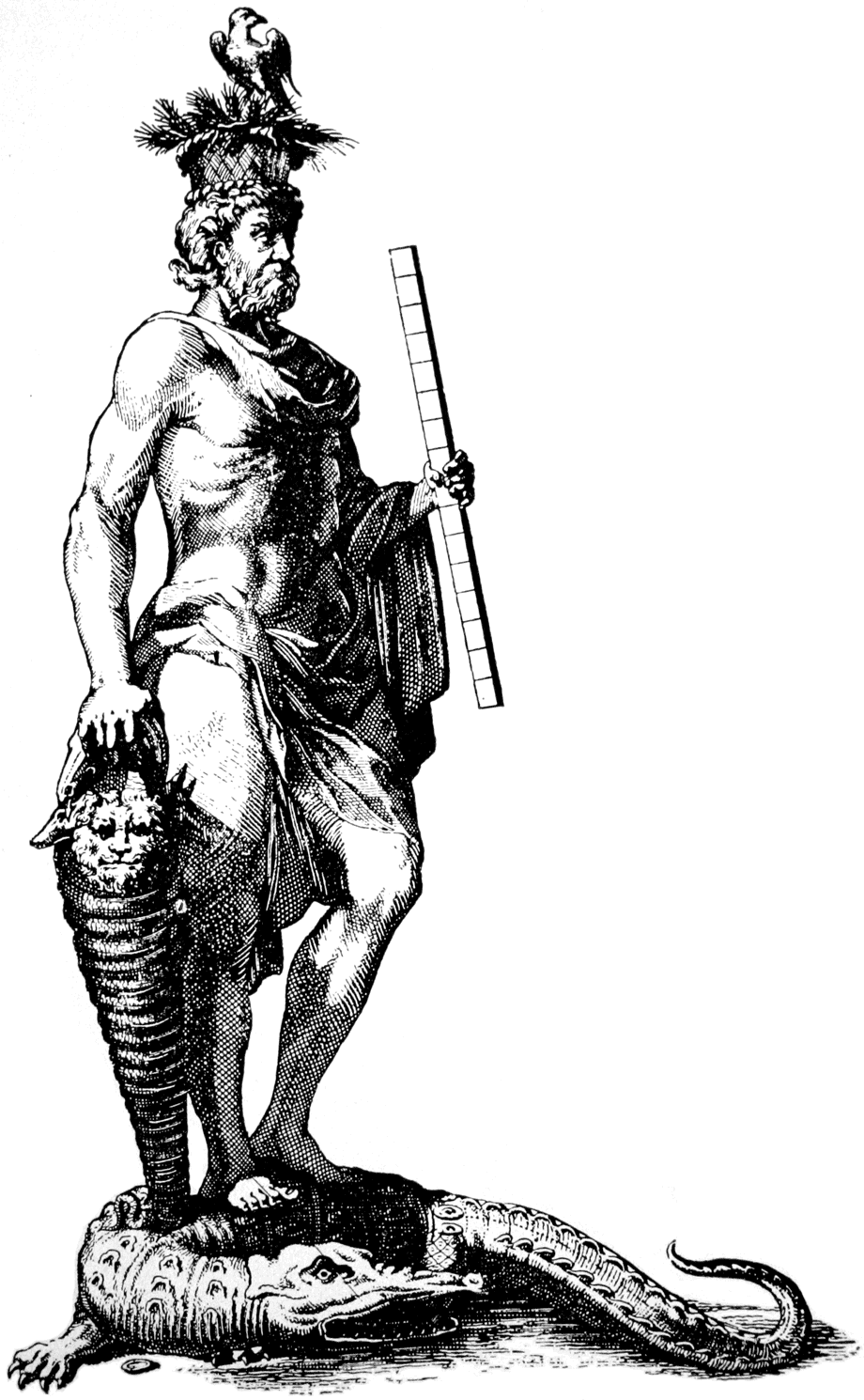

Measuring Rod

A measuring rod is a tool used to physically measure lengths and survey areas of various sizes. Most measuring rods are round or square sectioned; however, they can also be flat boards. Some have markings at regular intervals. It is likely that the measuring rod was used before the line, chain or steel tapes used in modern measurement. History, Ancient Sumer: The oldest preserved measuring rod is a copper-alloy bar which was found by the German Assyriologist Eckhard Unger while excavating at Nippur. The bar dates from c. 2650 BC, and Unger claimed it was used as a measurement standard. This irregularly formed and irregularly marked graduated rule supposedly defined the Sumerian cubit, although this does not agree with other evidence from the statues of Gudea from the same region, five centuries later. Ancient India: Rulers made from ivory were in use by the Indus Valley Civilization in what ...

Correlation

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense, "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity the consumers are willing to purchase, as it is depicted in the demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However, in g ...
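The degree of linear relationship described above is usually measured by the Pearson correlation coefficient. The following is a minimal sketch using only the Python standard library; the function name `pearson_r` and the temperature and demand figures are made up for illustration and do not come from the source.

```python
import math


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    cross = sum(a * b for a, b in zip(dx, dy))   # sum of cross-products
    ss_x = sum(a * a for a in dx)                # sum of squares of x deviations
    ss_y = sum(b * b for b in dy)                # sum of squares of y deviations
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical data: demand is exactly 2 * temperature, a perfect linear relation.
temperature = [30, 32, 35, 38, 40]
demand = [60, 64, 70, 76, 80]
r_linear = pearson_r(temperature, demand)

# A dependent but non-linear (symmetric) relation can still have zero correlation.
r_symmetric = pearson_r([-1, 0, 1], [1, 0, 1])
```

The second call illustrates why correlation in this narrow sense measures only linear association: the y values depend on x, yet the coefficient vanishes.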

Multiple Correlation

In statistics, the coefficient of multiple correlation is a measure of how well a given variable can be predicted using a linear function of a set of other variables. It is the correlation between the variable's values and the best predictions that can be computed linearly from the predictive variables. The coefficient of multiple correlation takes values between 0 and 1. Higher values indicate higher predictability of the dependent variable from the independent variables, with a value of 1 indicating that the predictions are exactly correct and a value of 0 indicating that no linear combination of the independent variables is a better predictor than is the fixed mean of the dependent variable. The coefficient of multiple correlation is known as the square root of the coefficient of determination, but under the particular assumptions that an intercept is included and that the best possible linear predictors are used, whereas the coefficient of determination is defined for more ...
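For the special case of exactly two predictors, the coefficient of multiple correlation can be written in terms of the three pairwise correlations: R² = (r_y1² + r_y2² − 2·r_y1·r_y2·r_12) / (1 − r_12²). The sketch below assumes an intercept is included and the predictors are not perfectly collinear; the function names and the data are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)


def multiple_r_two_predictors(y, x1, x2):
    """Multiple correlation R of y on the two predictors x1 and x2."""
    r_y1 = pearson_r(y, x1)
    r_y2 = pearson_r(y, x2)
    r_12 = pearson_r(x1, x2)
    if abs(r_12) >= 1.0:
        raise ValueError("predictors are perfectly collinear")
    r_squared = (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)
    # Clamp to [0, 1] to absorb floating-point rounding.
    return math.sqrt(max(0.0, min(1.0, r_squared)))


# Hypothetical data: y_exact is exactly x1 + x2, so it is perfectly predictable.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 6]
y_exact = [a + b for a, b in zip(x1, x2)]
y_noisy = [1, 3, 2, 5, 4]

R_exact = multiple_r_two_predictors(y_exact, x1, x2)
R_noisy = multiple_r_two_predictors(y_noisy, x1, x2)
```

Adding a predictor can never lower R, so `R_noisy` is at least as large as the absolute simple correlation of `y_noisy` with either predictor alone.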

Canonical Correlation

In statistics, canonical-correlation analysis (CCA), also called canonical variates analysis, is a way of inferring information from cross-covariance matrices. If we have two vectors X = (X₁, ..., Xₙ) and Y = (Y₁, ..., Yₘ) of random variables, and there are correlations among the variables, then canonical-correlation analysis will find linear combinations of X and Y that have a maximum correlation with each other. T. R. Knapp notes that "virtually all of the commonly encountered parametric tests of significance can be treated as special cases of canonical-correlation analysis, which is the general procedure for investigating the relationships between two sets of variables." The method was first introduced by Harold Hotelling in 1936, although in the context of angles between flats the mathematical concept was published by Camille Jordan in 1875. CCA is now a cornerstone of multivariate statistics ...
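The search for maximally correlated linear combinations can be written compactly. The notation here is an assumption for illustration: a and b are weight vectors, Σ_XX and Σ_YY are the covariance matrices of X and Y (taken to be invertible), and Σ_XY = Σ_YXᵀ is the cross-covariance matrix.

```latex
% First canonical correlation: maximise the correlation of a^T X and b^T Y
\rho_1 \;=\; \max_{a,\,b}\ \operatorname{corr}\!\left(a^{\top}X,\; b^{\top}Y\right)
       \;=\; \max_{a,\,b}\ \frac{a^{\top}\Sigma_{XY}\,b}
                               {\sqrt{a^{\top}\Sigma_{XX}\,a}\;\sqrt{b^{\top}\Sigma_{YY}\,b}}

% The maximisers solve an eigenvalue problem; the eigenvalues are the squared
% canonical correlations \rho_1^2 \ge \rho_2^2 \ge \dots
\Sigma_{XX}^{-1}\,\Sigma_{XY}\,\Sigma_{YY}^{-1}\,\Sigma_{YX}\;a \;=\; \rho^{2}\,a,
\qquad
b \;\propto\; \Sigma_{YY}^{-1}\,\Sigma_{YX}\,a
```

When Y consists of a single variable, the largest ρ reduces to the coefficient of multiple correlation, which is one way to see multiple regression as a special case.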

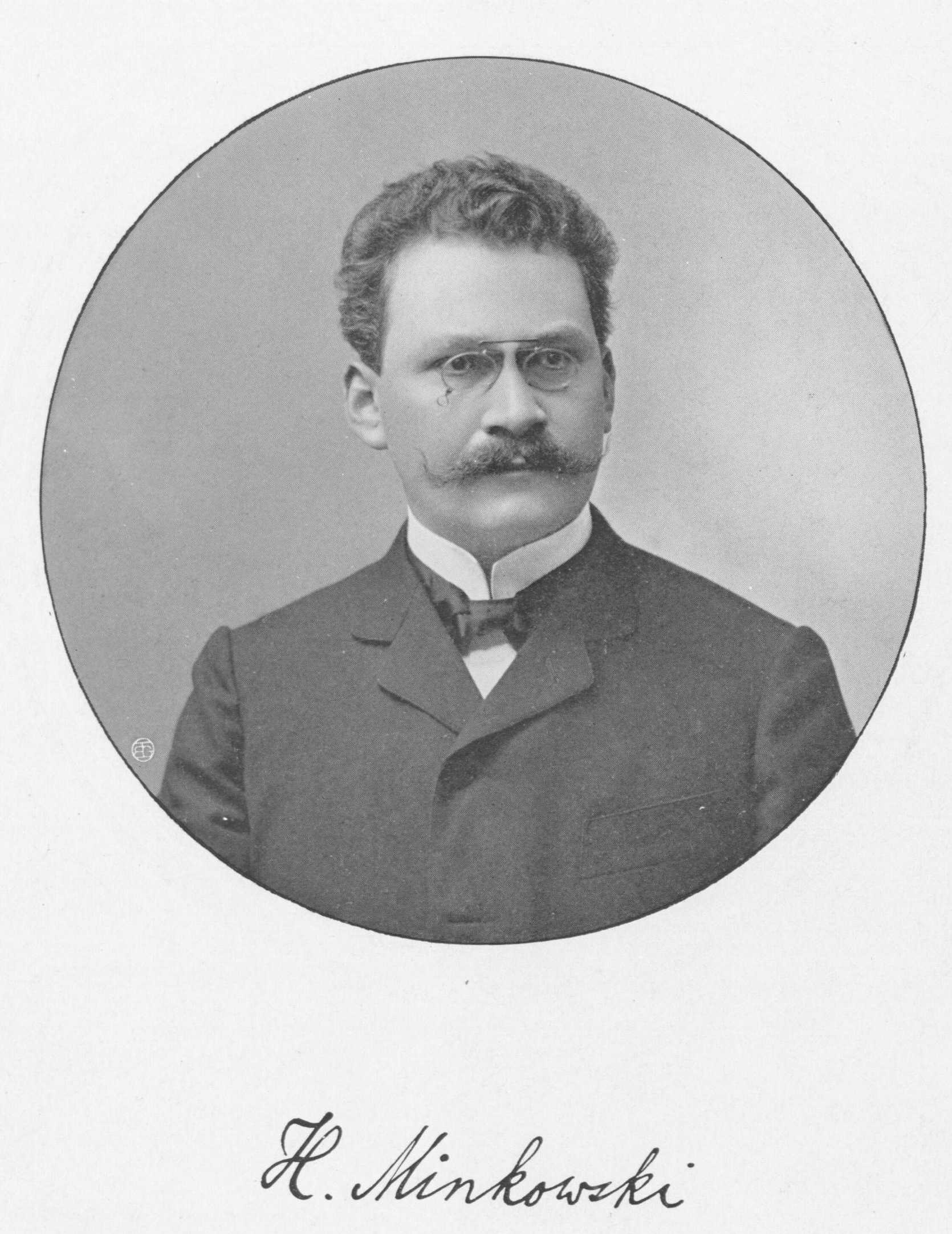

Minkowski Spacetime

In physics, Minkowski space (or Minkowski spacetime) is the main mathematical description of spacetime in the absence of gravitation. It combines inertial space and time manifolds into a four-dimensional model. The model helps show how a spacetime interval between any two events is independent of the inertial frame of reference in which they are recorded. Mathematician Hermann Minkowski developed it from the work of Hendrik Lorentz, Henri Poincaré, and others, and said it "was grown on experimental physical grounds". Minkowski space is closely associated with Einstein's theories of special relativity and general relativity and is the most common mathematical structure by which special relativity is formalized. While the individual components in Euclidean space and time might differ due to length contraction and time dilation, in Minkowski spacetime, all frames of reference will agree on the total interval in spacetime between events. This makes spacetime distance an invarian ...
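The frame independence of the interval can be checked numerically. This is a minimal sketch restricted to one spatial dimension, assuming the sign convention s² = (c·Δt)² − Δx² and units in which c = 1 by default; the function names and the sample numbers are hypothetical.

```python
import math


def lorentz_boost(dt, dx, v, c=1.0):
    """Transform a separation (dt, dx) into a frame moving at velocity v along x."""
    if abs(v) >= c:
        raise ValueError("boost speed must be below the speed of light")
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    dt_prime = gamma * (dt - v * dx / c ** 2)
    dx_prime = gamma * (dx - v * dt)
    return dt_prime, dx_prime


def interval_squared(dt, dx, c=1.0):
    """Squared spacetime interval with signature (+, -): (c*dt)^2 - dx^2."""
    return (c * dt) ** 2 - dx ** 2


# Hypothetical separation between two events, seen from two inertial frames.
dt, dx = 5.0, 3.0
dt_moving, dx_moving = lorentz_boost(dt, dx, v=0.6)

s2_rest = interval_squared(dt, dx)
s2_moving = interval_squared(dt_moving, dx_moving)
```

The time and space components differ between the two frames, but `s2_rest` and `s2_moving` agree up to rounding.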

Harold Hotelling

Harold Hotelling (September 29, 1895 – December 26, 1973) was an American mathematical statistician and an influential economic theorist, known for Hotelling's law, Hotelling's lemma, and Hotelling's rule in economics, as well as Hotelling's T-squared distribution in statistics. He also developed and named the principal component analysis method widely used in finance, statistics and computer science. He was associate professor of mathematics at Stanford University from 1927 until 1931, a member of the faculty of Columbia University from 1931 until 1946, and a professor of Mathematical Statistics at the University of North Carolina at Chapel Hill from 1946 until his death. A street in Chapel Hill bears his name. In 1972, he received the North Carolina Award for contributions to science. Statistics: Hotelling is known to statisticians because of Hotelling's T-squared distribution, which is a generalization of the Student's t-distribution in a multivariate setting, and i ...

Principal Components Analysis

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i-th vector is the direction of a line that best fits the data while being orthogonal to the first i-1 vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Ma ...
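For two-dimensional data the principal components have a closed form, because the covariance matrix is a symmetric 2×2 matrix. The sketch below uses only the standard library and is limited to that case (p = 2); the function name `pca_2d` and the sample points are hypothetical.

```python
import math


def pca_2d(points):
    """Return ((var1, var2), (pc1, pc2)) for a list of (x, y) points.

    var1 >= var2 are the variances along the unit vectors pc1 and pc2.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    # Entries of the sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    # Eigenvalues of a symmetric 2x2 matrix in closed form.
    half_trace = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    var1, var2 = half_trace + spread, half_trace - spread
    # Angle of the major axis; the second component is perpendicular to it.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    pc1 = (math.cos(theta), math.sin(theta))
    pc2 = (-math.sin(theta), math.cos(theta))
    return (var1, var2), (pc1, pc2)


# Hypothetical points lying exactly on the line y = x.
(var1, var2), (pc1, pc2) = pca_2d([(0, 0), (1, 1), (2, 2), (3, 3)])
```

Because the sample points lie on a single line, all of the variance falls along the first component and the second variance is zero up to rounding.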

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ...
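The statement that observed variables are linear combinations of factors plus error terms is commonly written as follows. The symbols are assumptions for illustration: x is the vector of p observed variables, f the vector of k < p factors, Λ the p×k matrix of factor loadings, and ε the error vector. The covariance identity holds under the usual orthogonal-factor assumptions that the factors are uncorrelated with unit variance and uncorrelated with the errors.

```latex
% Factor model for p observed variables and k latent factors
x \;=\; \mu + \Lambda f + \varepsilon,
\qquad
\operatorname{Cov}(f) = I_k,\quad
\operatorname{Cov}(\varepsilon) = \Psi \ \text{(diagonal)},\quad
\operatorname{Cov}(f, \varepsilon) = 0

% Implied covariance structure of the observed variables
\operatorname{Cov}(x) \;=\; \Lambda\Lambda^{\top} + \Psi
```

In the six-variable, two-factor example above, Λ would be a 6×2 matrix, so the 21 distinct entries of the covariance matrix are described by 12 loadings and 6 error variances.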

Eigenvalues

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged (or reversed) by a given linear transformation. More precisely, an eigenvector v of a linear transformation T is scaled by a constant factor λ when the linear transformation is applied to it: Tv = λv. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor λ (possibly a negative or complex number). Geometrically, vectors are multi-dimensional quantities with magnitude and direction, often pictured as arrows. A linear transformation rotates, stretches, or shears the vectors upon which it acts. A linear transformation's eigenvectors are those vectors that are only stretched or shrunk, with neither rotation nor shear. The corresponding eigenvalue is the factor by which an eigenvector is stretched or shrunk. If the eigenvalue is negative, the eigenvector's direction is reversed. The ...
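The defining relation Tv = λv can be checked directly for small matrices. This is a minimal sketch with real-valued matrices stored as nested lists; the helper names `apply_linear_map` and `is_eigenpair` and the example matrices are hypothetical.

```python
def apply_linear_map(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def is_eigenpair(matrix, vector, eigenvalue, tol=1e-9):
    """Check whether matrix @ vector equals eigenvalue * vector within tol."""
    if all(abs(x) <= tol for x in vector):
        return False  # the zero vector is not an eigenvector by definition
    image = apply_linear_map(matrix, vector)
    return all(abs(i - eigenvalue * x) <= tol for i, x in zip(image, vector))


# A symmetric stretch: (1, 1) is scaled by 3 and (1, -1) is left unchanged.
T = [[2.0, 1.0],
     [1.0, 2.0]]
stretched = is_eigenpair(T, [1.0, 1.0], 3.0)
unchanged = is_eigenpair(T, [1.0, -1.0], 1.0)
rotated = is_eigenpair(T, [1.0, 0.0], 2.0)   # (1, 0) maps to (2, 1): not an eigenvector

# Swapping coordinates reverses (1, -1), so its eigenvalue is negative.
swap = [[0.0, 1.0],
        [1.0, 0.0]]
reversed_direction = is_eigenpair(swap, [1.0, -1.0], -1.0)
```

The last case illustrates the remark about negative eigenvalues: the vector keeps its line of action but points the opposite way.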