
Binary Delta Compression

Binary delta compression is a technology used in software deployment for distributing patches.

Explanation: Downloading large amounts of data over the Internet for software updates can cause heavy network traffic, especially when a whole network of computers is involved. Binary delta compression greatly reduces download size by transferring only the difference between the old and the new files during the update process.

Implementation: In real-world implementations, it is common to also use standard compression techniques (such as Lempel-Ziv) while compressing. This makes sense because Lempel-Ziv compressors already work by referring back to re-used strings. zdelta is a good example of this, as it is built from zlib. The algorithm works by referring to common patterns not only in the file to be compressed, but also in a source file. The benefit is that even if there are few similarities between the original and the new file, a good data compression ratio is atta ...
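The idea of compressing a new file by referring back to strings in an old one can be sketched with zlib's preset-dictionary feature. This is only an illustration, not zdelta's actual API or algorithm: the helper names `make_delta` and `apply_delta` and the sample data are hypothetical, and because DEFLATE uses a 32 KB window, the approach only helps when the relevant part of the old file fits in that window.

```python
import hashlib
import zlib


def make_delta(old: bytes, new: bytes) -> bytes:
    """Compress `new`, letting DEFLATE back-reference strings found in `old`."""
    comp = zlib.compressobj(level=9, zdict=old)
    return comp.compress(new) + comp.flush()


def apply_delta(old: bytes, delta: bytes) -> bytes:
    """Rebuild the new file from the old file plus the delta."""
    decomp = zlib.decompressobj(zdict=old)
    return decomp.decompress(delta) + decomp.flush()


# Hypothetical sample data: an "old" file of hard-to-compress bytes and a
# "new" file that differs from it by one small inserted patch.
old_file = b"".join(hashlib.sha256(str(i).encode()).digest() for i in range(200))
new_file = old_file[:3000] + b"PATCHED" + old_file[3000:]

delta = make_delta(old_file, new_file)
standalone = zlib.compress(new_file, 9)

print("new file:", len(new_file), "bytes")
print("compressed alone:", len(standalone), "bytes")
print("delta against old:", len(delta), "bytes")
```

The receiver needs exactly the same old file to apply the delta, which is why this style of update assumes known versions on both sides.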

Software Deployment

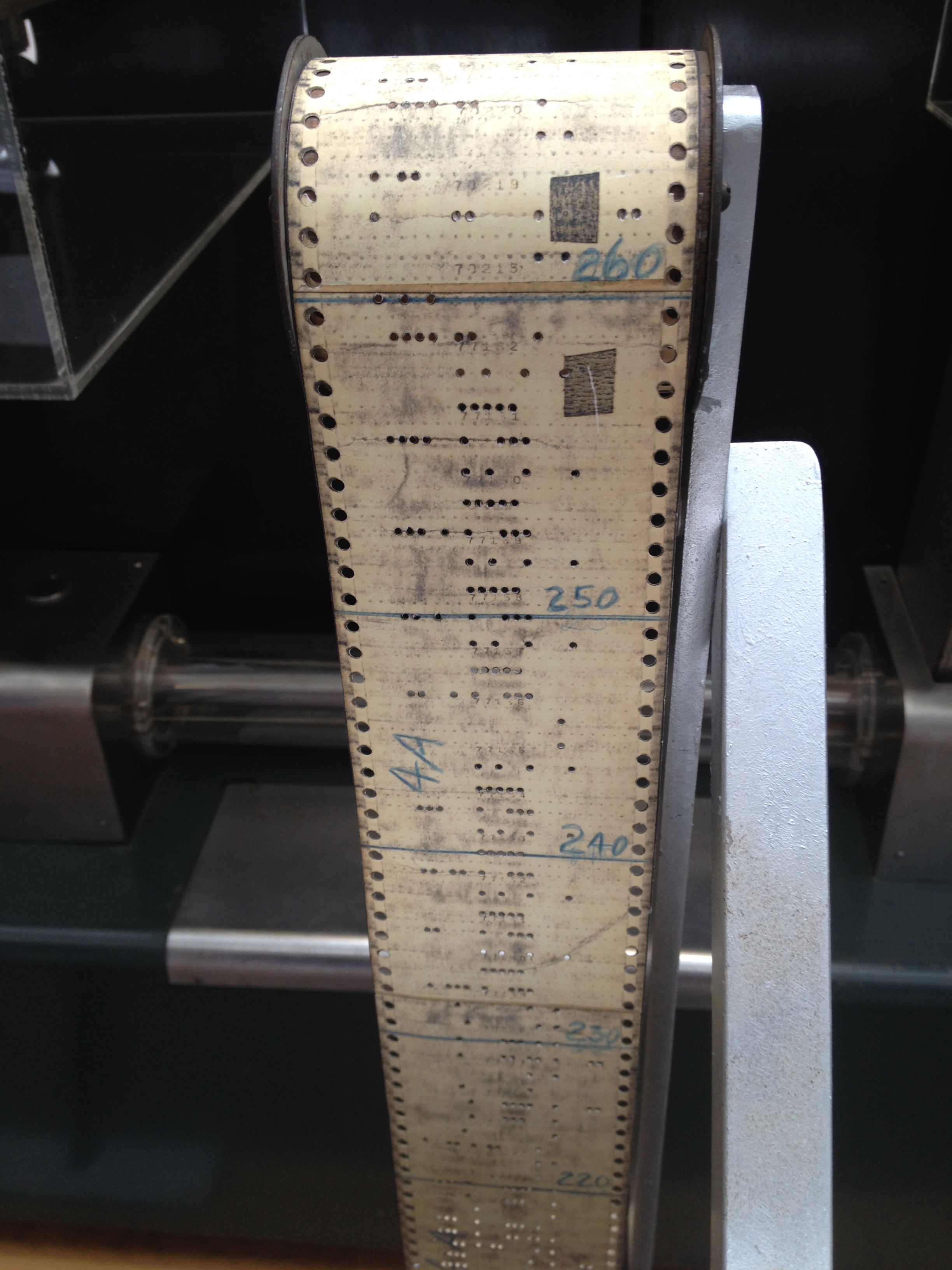

Software deployment is all of the activities that make a software system available for use. The general deployment process consists of several interrelated activities with possible transitions between them. These activities can occur on the producer side, on the consumer side, or both. Because every software system is unique, the precise processes or procedures within each activity can hardly be defined. Therefore, "deployment" should be interpreted as a general process that has to be customized according to specific requirements or characteristics.

History: When computers were extremely large, expensive, and bulky (mainframes and minicomputers), the software was often bundled together with the hardware by manufacturers. If business software needed to be installed on an existing computer, this might require an expensive, time-consuming visit by a systems architect or a consultant. For complex, on-premises installation of enterprise software today, this can still someti ...

Patch (software)

A patch is a set of changes to a computer program or its supporting data designed to update, fix, or improve it. This includes fixing security vulnerabilities and other bugs, with such patches usually being called bugfixes or bug fixes. Patches are often written to improve the functionality, usability, or performance of a program. The majority of patches are provided by software vendors for operating system and application updates. Patches may be installed either under programmed control or by a human programmer using an editing tool or a debugger. They may be applied to program files on a storage device, or in computer memory. Patches may be permanent (until patched again) or temporary. Patching makes possible the modification of compiled and machine language object programs when the source code is unavailable. This demands a thorough understanding of the inner workings of the object code by the person creating the patch, which is difficult without close study of the source c ...

Zlib

zlib (or "zeta-lib") is a software library used for data compression. zlib was written by Jean-loup Gailly and Mark Adler and is an abstraction of the DEFLATE compression algorithm used in their gzip file compression program. zlib is also a crucial component of many software platforms, including Linux, macOS, and iOS. It has also been used in gaming consoles such as the PlayStation 4, PlayStation 3, Wii U, Wii, Xbox One and Xbox 360. The first public version of zlib, 0.9, was released on 1 May 1995 and was originally intended for use with the libpng image library. It is free software, distributed under the zlib License.

Capabilities: Encapsulation. zlib compressed data are typically written with a gzip or a zlib wrapper. The wrapper encapsulates the raw DEFLATE data by adding a header and trailer. This provides stream identification and error detection that are not provided by the raw DEFLATE data. The gzip header, used in the ubiquitous gzip file format, is larger t ...
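The three encapsulations described above (raw DEFLATE, zlib wrapper, gzip wrapper) can be compared with Python's standard `zlib` module, where the `wbits` argument selects the wrapper. This is a small sketch; the `deflate` and `inflate` helpers and the sample payload are hypothetical names chosen for illustration.

```python
import zlib

RAW_DEFLATE = -15   # negative wbits: no header or trailer
ZLIB_WRAPPER = 15   # default: zlib header and checksum trailer
GZIP_WRAPPER = 31   # 16 + 15: gzip header and trailer


def deflate(data: bytes, wbits: int) -> bytes:
    """Compress `data` using the wrapper selected by `wbits`."""
    comp = zlib.compressobj(9, zlib.DEFLATED, wbits)
    return comp.compress(data) + comp.flush()


def inflate(data: bytes, wbits: int) -> bytes:
    """Decompress `data` that was produced with the same `wbits`."""
    return zlib.decompress(data, wbits)


payload = b"binary delta compression " * 40

raw = deflate(payload, RAW_DEFLATE)
zlib_wrapped = deflate(payload, ZLIB_WRAPPER)
gzip_wrapped = deflate(payload, GZIP_WRAPPER)

# The wrapped streams carry the same kind of DEFLATE data plus extra bytes
# used for stream identification and error detection.
print(len(raw), len(zlib_wrapped), len(gzip_wrapped))
print(gzip_wrapped[:2].hex())  # gzip streams begin with the magic bytes 1f 8b
```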

Data Compression Ratio

Data compression ratio, also known as compression power, is a measurement of the relative reduction in size of data representation produced by a data compression algorithm. It is typically expressed as the division of uncompressed size by compressed size.

Definition: Data compression ratio is defined as the ratio between the uncompressed size and the compressed size:

$$\text{Compression Ratio} = \frac{\text{Uncompressed Size}}{\text{Compressed Size}}$$

Thus, a representation that compresses a file's storage size from 10 MB to 2 MB has a compression ratio of 10/2 = 5, often notated as an explicit ratio, 5:1 (read "five to one"), or as an implicit ratio, 5/1. This formulation applies equally for compression, where the uncompressed size is that of the original, and for decompression, where the uncompressed size is that of the reproduction. Sometimes the space saving is given instead, which is defined as the reduction in size relative to the uncompressed size:

$$\text{Space Saving} = 1 - \frac{\text{Compressed Size}}{\text{Uncompressed Size}}$$

Thus, a representation that compresses the storage size of a file from 1 ...
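The two measures can be computed directly from the sizes involved. The sketch below uses hypothetical function names and reuses the 10 MB to 2 MB example from the text.

```python
def compression_ratio(uncompressed_size: float, compressed_size: float) -> float:
    """Uncompressed size divided by compressed size."""
    return uncompressed_size / compressed_size


def space_saving(uncompressed_size: float, compressed_size: float) -> float:
    """Reduction in size relative to the uncompressed size."""
    return 1 - compressed_size / uncompressed_size


# A file compressed from 10 MB to 2 MB.
ratio = compression_ratio(10, 2)
saving = space_saving(10, 2)
print(f"ratio {ratio:.0f}:1, space saving {saving:.0%}")
```

Any unit works as long as both sizes use the same one, since the unit cancels in the division.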

Delta Encoding

Delta encoding is a way of storing or transmitting data in the form of differences (deltas) between sequential data rather than complete files; more generally this is known as data differencing. Delta encoding is sometimes called delta compression, particularly where archival histories of changes are required (e.g., in revision control software). The differences are recorded in discrete files called "deltas" or "diffs". In situations where differences are small (for example, the change of a few words in a large document or the change of a few records in a large table), delta encoding greatly reduces data redundancy. Collections of unique deltas are substantially more space-efficient than their non-encoded equivalents. From a logical point of view, the difference between two data values is the information required to obtain one value from the other (see relative entropy). The difference between identical values (under some equivalence) is often called 0 or the neut ...
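For a sequence of numbers, storing differences between sequential values can be sketched as follows. This is a minimal illustration with hypothetical function names; real delta formats for files are considerably more involved.

```python
from itertools import accumulate


def delta_encode(values: list[int]) -> list[int]:
    """Keep the first value, then store each value as its difference from the previous one."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


def delta_decode(deltas: list[int]) -> list[int]:
    """Recover the original values by taking a running sum of the deltas."""
    return list(accumulate(deltas))


readings = [100, 102, 105, 105]
encoded = delta_encode(readings)
print(encoded)                # small numbers when neighbouring values are close
print(delta_decode(encoded))  # the original readings
```

When neighbouring values are close, the deltas are small and repetitive, which makes them easier for a general-purpose compressor to shrink further.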

Remote Differential Compression

Remote Differential Compression (RDC) is a client–server synchronization algorithm that allows the contents of two files to be synchronized by communicating only the differences between them. It was introduced with Microsoft Windows Server 2003 R2 and is included with later Windows client and server operating systems, but as of 2019 it is no longer being developed and is not used by any Microsoft product. Unlike Binary Delta Compression (BDC), which is designed to operate only on known versions of a single file, RDC does not make assumptions about file similarity or versioning. The differences between files are computed on the fly, so RDC is suitable for efficient synchronization of files that have been updated independently, where network bandwidth is limited, or where the files are large but the differences between them are small. The algorithm is based on fingerprinting blocks of each file locally at both replication partners. Since many types of file changes can cause ...
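The general idea of fingerprinting blocks on both sides and sending only the blocks the other side lacks can be sketched as below. This is a deliberately simplified illustration, not Microsoft's RDC algorithm: it assumes fixed-size blocks and SHA-256 fingerprints, and every name in it (`BLOCK_SIZE`, `make_recipe`, `rebuild`, and so on) is hypothetical.

```python
import hashlib

BLOCK_SIZE = 64  # hypothetical block size chosen for the example


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut the data into consecutive fixed-size blocks."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def fingerprint(block: bytes) -> bytes:
    """Short identifier that stands in for the block's content."""
    return hashlib.sha256(block).digest()


def make_recipe(new_data: bytes, receiver_fingerprints: set[bytes]) -> list[tuple[str, bytes]]:
    """Sender side: reference blocks the receiver already has, ship the rest."""
    recipe = []
    for block in split_blocks(new_data):
        fp = fingerprint(block)
        if fp in receiver_fingerprints:
            recipe.append(("ref", fp))      # only the fingerprint is sent
        else:
            recipe.append(("data", block))  # the block itself is sent
    return recipe


def rebuild(old_data: bytes, recipe: list[tuple[str, bytes]]) -> bytes:
    """Receiver side: resolve references against locally stored blocks."""
    local = {fingerprint(b): b for b in split_blocks(old_data)}
    return b"".join(local[item] if kind == "ref" else item for kind, item in recipe)


# The receiver holds old_file; the sender holds new_file with one block changed.
old_file = bytes(range(256)) * 4
new_file = old_file[:128] + b"X" * 64 + old_file[192:]

receiver_fps = {fingerprint(b) for b in split_blocks(old_file)}
recipe = make_recipe(new_file, receiver_fps)
sent_blocks = [item for kind, item in recipe if kind == "data"]
print(len(recipe), "blocks in recipe,", len(sent_blocks), "sent in full")
```

A limitation of fixed-size blocks is that inserting a single byte shifts every later block boundary, so all following fingerprints change even though the content is mostly the same.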