|

Atomic Instruction

In concurrent programming, an operation (or set of operations) is linearizable if it consists of an ordered list of invocation and response events (event), that may be extended by adding response events such that: # The extended list can be re-expressed as a sequential history (is serializable). # That sequential history is a subset of the original unextended list. Informally, this means that the unmodified list of events is linearizable if and only if its invocations were serializable, but some of the responses of the serial schedule have yet to return. In a concurrent system, processes can access a shared object at the same time. Because multiple processes are accessing a single object, there may arise a situation in which while one process is accessing the object, another process changes its contents. Making a system linearizable is one solution to this problem. In a linearizable system, although operations overlap on a shared object, each operation appears to take place ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

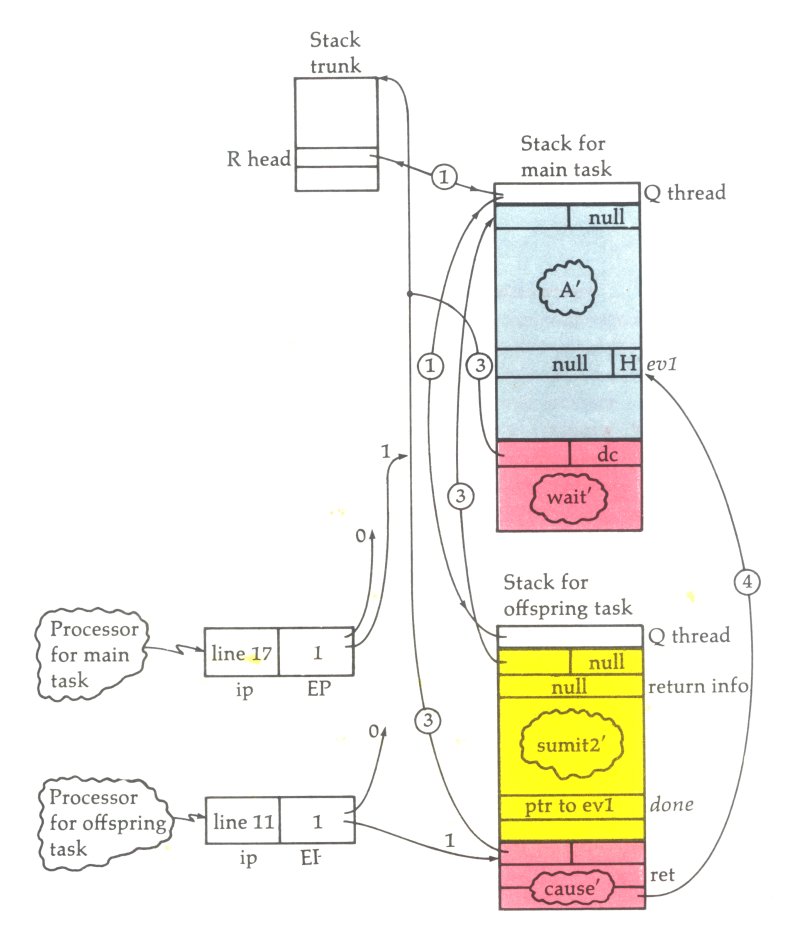

Burroughs Large Systems

The Burroughs Large Systems Group produced a family of large 48-bit mainframes using stack machine instruction sets with dense syllables.E.g., 12-bit syllables for B5000, 8-bit syllables for B6500 The first machine in the family was the B5000 in 1961. It was optimized for compiling ALGOL 60 programs extremely well, using single-pass compilers. It evolved into the B5500. Subsequent major redesigns include the B6500/B6700 line and its successors, as well as the separate B8500 line. In the 1970s, the Burroughs Corporation was organized into three divisions with very different product line architectures for high-end, mid-range, and entry-level business computer systems. Each division's product line grew from a different concept for how to optimize a computer's instruction set for particular programming languages. "Burroughs Large Systems" referred to all of these large-system product lines together, in contrast to the COBOL-optimized Medium Systems (B2000, B3000, and B4000) or the fl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

C11 (C Standard Revision)

C11 (formerly C1X) is an informal name for ISO/IEC 9899:2011, a past standard for the C programming language. It replaced C99 (standard ISO/IEC 9899:1999) and has been superseded by C17 (standard ISO/IEC 9899:2018). C11 mainly standardizes features already supported by common contemporary compilers, and includes a detailed memory model to better support multiple threads of execution. Due to delayed availability of conforming C99 implementations, C11 makes certain features optional, to make it easier to comply with the core language standard. The final draft, N1570, was published in April 2011. The new standard passed its final draft review on October 10, 2011 and was officially ratified by ISO and published as ISO/IEC 9899:2011 on December 8, 2011, with no comments requiring resolution by participating national bodies. A standard macro __STDC_VERSION__ is defined with value 201112L to indicate that C11 support is available. Some features of C11 are supported by the GCC star ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

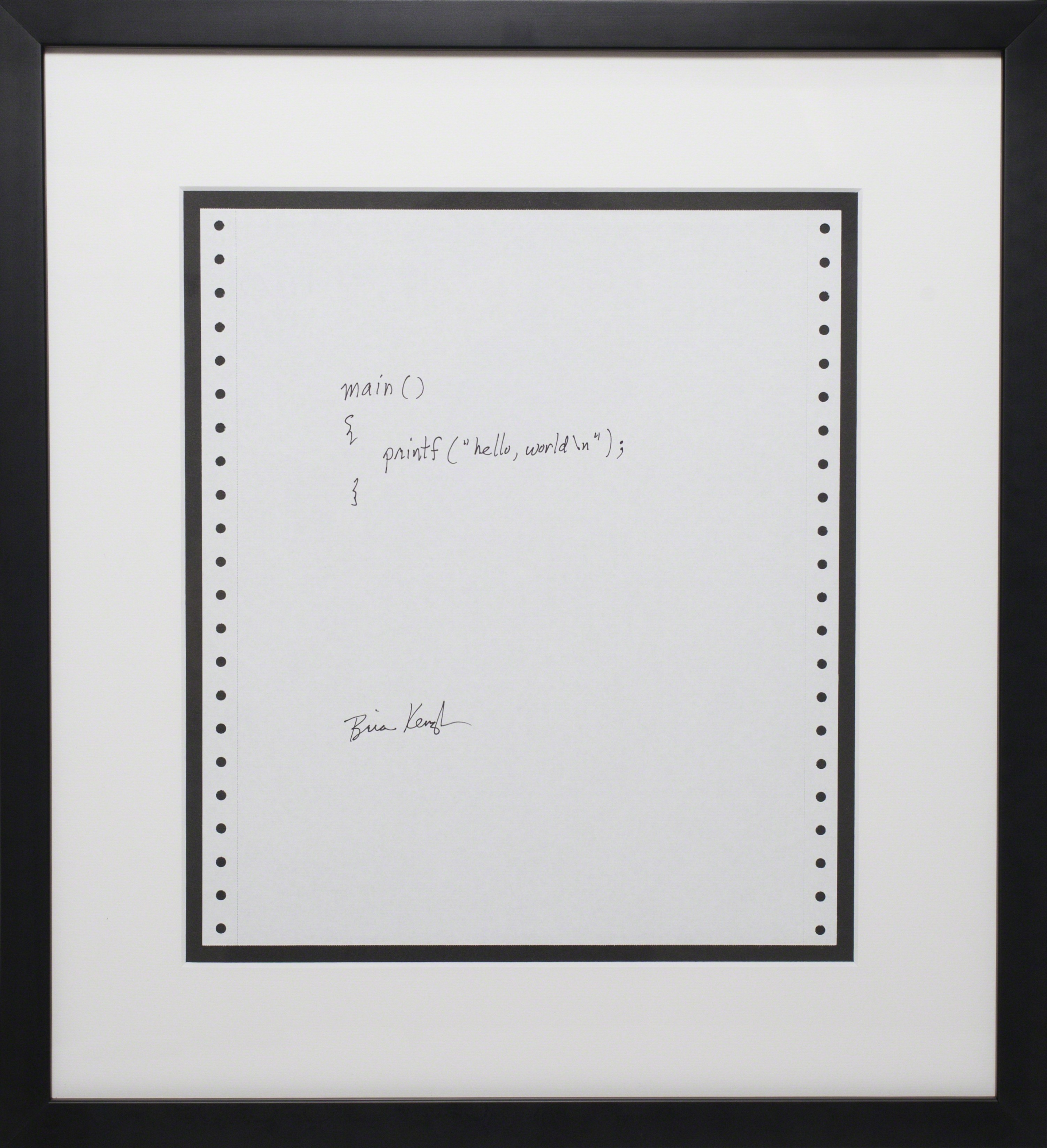

C Programming Language

''The C Programming Language'' (sometimes termed ''K&R'', after its authors' initials) is a computer programming book written by Brian Kernighan and Dennis Ritchie, the latter of whom originally designed and implemented the language, as well as co-designed the Unix operating system with which development of the language was closely intertwined. The book was central to the development and popularization of the C programming language and is still widely read and used today. Because the book was co-authored by the original language designer, and because the first edition of the book served for many years as the ''de facto'' standard for the language, the book was regarded by many to be the authoritative reference on C. History C was created by Dennis Ritchie at Bell Labs in the early 1970s as an augmented version of Ken Thompson's B. Another Bell Labs employee, Brian Kernighan, had written the first C tutorial, and he persuaded Ritchie to coauthor a book on the language. K ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cyrix Coma Bug

The Cyrix coma bug is a design flaw in Cyrix 6x86 (introduced in 1996), 6x86L, and early 6x86MX processors that allows a non-privileged program to hang the computer. Discovery According to Andrew Balsa, around the time of the discovery of the F00F bug on Intel Pentium, Serguei Shtyliov from Moscow found a flaw in a Cyrix processor while developing an IDE disk driver in assembly language. Alexandr Konosevich, from Omsk, further researched the bug and coauthored an article with Uwe Post in the German technology magazine '' c't'', calling it the "hidden CLI bug" (CLI is the instruction that disables interrupts in the x86 architecture). Balsa, as a member on the Linux kernel mailing list, confirmed that the following C program (which uses inline x86-specific assembly language) could be compiled and run by an unprivileged user: unsigned char c = ; int main() Execution of this program renders the processor completely useless until it is rebooted, as it enters an infin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Denial Of Service Attack

In computing, a denial-of-service attack (DoS attack) is a cyber-attack in which the perpetrator seeks to make a machine or network resource unavailable to its intended users by temporarily or indefinitely disrupting services of a host connected to a network. Denial of service is typically accomplished by flooding the targeted machine or resource with superfluous requests in an attempt to overload systems and prevent some or all legitimate requests from being fulfilled. In a distributed denial-of-service attack (DDoS attack), the incoming traffic flooding the victim originates from many different sources. More sophisticated strategies are required to mitigate this type of attack, as simply attempting to block a single source is insufficient because there are multiple sources. A DoS or DDoS attack is analogous to a group of people crowding the entry door of a shop, making it hard for legitimate customers to enter, thus disrupting trade. Criminal perpetrators of DoS attacks o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Infinite Loop

In computer programming, an infinite loop (or endless loop) is a sequence of instructions that, as written, will continue endlessly, unless an external intervention occurs ("pull the plug"). It may be intentional. Overview This differs from: * "a type of computer program that runs the same instructions continuously until it is either stopped or interrupted." Consider the following pseudocode: how_many = 0 while is_there_more_data() do how_many = how_many + 1 end display "the number of items counted = " how_many ''The same instructions'' were run ''continuously until it was stopped or interrupted'' . . . by the ''FALSE'' returned at some point by the function ''is_there_more_data''. By contrast, the following loop will not end by itself: birds = 1 fish = 2 while birds + fish > 1 do birds = 3 - birds fish = 3 - fish end ''birds'' will alternate being 1 or 2, while ''fish'' will alternate being 2 or 1. The loop will not stop unless an external intervention occu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Instruction Pipeline

In computer engineering, instruction pipelining or ILP is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps (the eponymous " pipeline") performed by different processor units with different parts of instructions processed in parallel. Concept and motivation In a pipelined computer, instructions flow through the central processing unit (CPU) in stages. For example, it might have one stage for each step of the von Neumann cycle: Fetch the instruction, fetch the operands, do the instruction, write the results. A pipelined computer usually has "pipeline registers" after each stage. These store information from the instruction and calculations so that the logic gates of the next stage can do the next step. This arrangement lets the CPU complete an instruction on each clock cycle. It is common f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and execution (of instructions) by directing the coordinated operations of the ALU, registers and oth ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Load-link/store-conditional

In computer science, load-linked/store-conditional (LL/SC), sometimes known as load-reserved/store-conditional (LR/SC), are a pair of instructions used in multithreading to achieve synchronization. Load-link returns the current value of a memory location, while a subsequent store-conditional to the same memory location will store a new value only if no updates have occurred to that location since the load-link. Together, this implements a lock-free atomic read-modify-write operation. "Load-linked" is also known as load-link, load-reserved, and load-locked. LL/SC was originally proposed by Jensen, Hagensen, and Broughton for the S-1 AAP multiprocessor at Lawrence Livermore National Laboratory. Comparison of LL/SC and compare-and-swap If any updates have occurred, the store-conditional is guaranteed to fail, even if the value read by the load-link has since been restored. As such, an LL/SC pair is stronger than a read followed by a compare-and-swap (CAS), which will not detec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compare-and-swap

In computer science, compare-and-swap (CAS) is an atomic instruction used in multithreading to achieve synchronization. It compares the contents of a memory location with a given value and, only if they are the same, modifies the contents of that memory location to a new given value. This is done as a single atomic operation. The atomicity guarantees that the new value is calculated based on up-to-date information; if the value had been updated by another thread in the meantime, the write would fail. The result of the operation must indicate whether it performed the substitution; this can be done either with a simple boolean response (this variant is often called compare-and-set), or by returning the value read from the memory location (''not'' the value written to it). Overview A compare-and-swap operation is an atomic version of the following pseudocode, where denotes access through a pointer: function cas(p: pointer to int, old: int, new: int) is if *p ≠ old ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |