
Architectural Pattern (computer Science)

A software architecture pattern is a reusable, proven solution to a specific, recurring architectural design problem. It can be applied within various architectural styles.

Examples

Some examples of architectural patterns:

* Publish–subscribe pattern
* Message broker

See also

* List of software architecture styles and patterns
* Process Driven Messaging Service
* Enterprise architecture
* Common layers in an information system logical architecture

List Of Software Architecture Styles And Patterns

A software architecture pattern is a reusable, proven solution to a recurring problem at the system level, addressing concerns related to the overall structure, component interactions, and quality attributes of the system. Software architecture patterns operate at a higher level of abstraction than software design patterns, solving broader system-level challenges. While these patterns typically affect system-level concerns, the distinction between architectural patterns and architectural styles can sometimes be blurry. Examples include Circuit Breaker.

A software architecture style is a high-level structural organization that defines the overall system organization, specifying how components are organized, how they interact, and the constraints on those interactions. Architecture styles typically include a vocabulary of component and connector types, as well as semantic models for interpreting the system's properties. These styles represent the most coarse-grained level o ...

Publish–subscribe Pattern

In software architecture, the publish–subscribe pattern (pub/sub) is a messaging pattern in which message senders, called publishers, categorize messages into classes (or topics) and send them without needing to know which components will receive them. Message recipients, called subscribers, express interest in one or more classes and only receive messages in those classes, without needing to know the identity of the publishers. This pattern decouples the components that produce messages from those that consume them, and supports asynchronous, many-to-many communication.

The publish–subscribe model is commonly contrasted with message queue-based and point-to-point messaging models, where producers send messages directly to consumers. Publish–subscribe is a sibling of the message queue paradigm, and is typically a component of larger message-oriented middleware systems. Many modern messaging frameworks and protocols, such as the Java Message Service (JMS), Apac ...
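The topic-based decoupling described above can be illustrated with a minimal in-process sketch. The `TopicBus` class, its method names, and the topic names are hypothetical and chosen only for illustration; they do not correspond to JMS or any other real messaging API. Delivery here is synchronous for brevity, whereas real pub/sub systems usually deliver asynchronously.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class TopicBus:
    """Hypothetical in-process topic registry (illustration only)."""

    def __init__(self) -> None:
        # Maps each topic name to the handlers subscribed to it.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register interest in one class (topic) of messages."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        """Send a message to every subscriber of the topic.

        The publisher never learns who the subscribers are; it only
        gets back how many handlers were invoked.
        """
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = TopicBus()
billing_inbox: List[str] = []
audit_inbox: List[str] = []

# Two independent subscribers; audit listens on two topics.
bus.subscribe("orders", billing_inbox.append)
bus.subscribe("orders", audit_inbox.append)
bus.subscribe("refunds", audit_inbox.append)

delivered = bus.publish("orders", "order 17 created")
bus.publish("refunds", "refund 4 issued")
unheard = bus.publish("shipping", "parcel 9 sent")  # no subscribers
```

Publishing to a topic with no subscribers is not an error in this sketch; the message is simply dropped, which is one common (but not universal) design choice.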

Message Broker

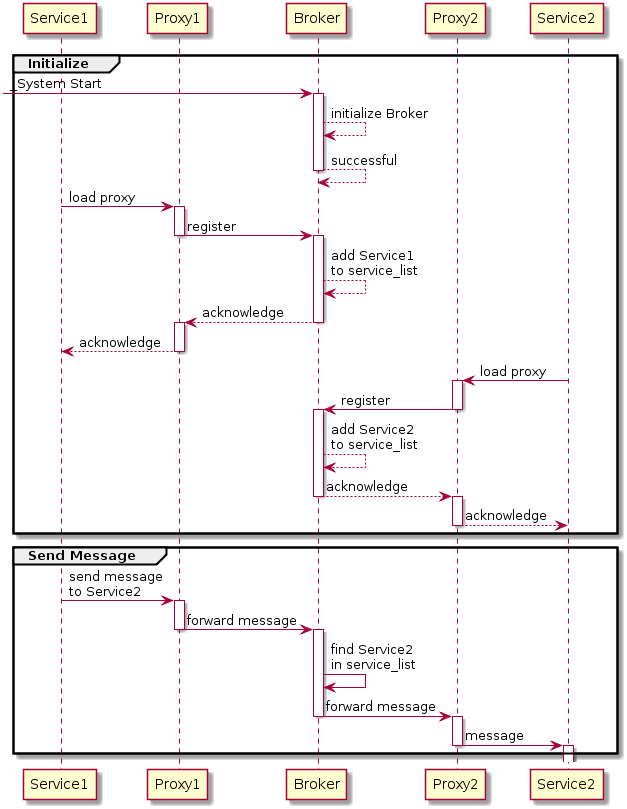

A message broker (also known as an integration broker or interface engine) is an intermediary computer program module that translates a message from the formal messaging protocol of the sender to the formal messaging protocol of the receiver. Message brokers are elements in telecommunication or computer networks where software applications communicate by exchanging formally-defined messages. Message brokers are a building block of message-oriented middleware (MOM) but are typically not a replacement for traditional middleware like MOM and remote procedure call (RPC).

Overview

A message broker is an architectural pattern for message validation, transformation, and routing. It mediates communication among applications, minimizing the mutual awareness that applications should have of each other in order to be able to exchange messages, effectively implementing decoupling.

Purpose

The primary purpose of a broker is to take incoming messages from applications and perform some acti ...
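The three roles named in the overview (validation, transformation, and routing) can be sketched in a few lines. The `MiniBroker` class, the dictionary message shape with `type` and `body` keys, and the choice of JSON as the receiver's format are all assumptions made for this illustration, not features of any particular broker product.

```python
import json
from typing import Callable, Dict, List

Receiver = Callable[[str], None]


class MiniBroker:
    """Hypothetical broker that validates, transforms, and routes."""

    def __init__(self) -> None:
        self._routes: Dict[str, Receiver] = {}
        self.rejected: List[dict] = []  # messages that could not be delivered

    def register(self, message_type: str, receiver: Receiver) -> None:
        """Associate a message type with the application that receives it."""
        self._routes[message_type] = receiver

    def handle(self, message: dict) -> bool:
        # Validation: require a string "type" and a "body" field.
        if not isinstance(message.get("type"), str) or "body" not in message:
            self.rejected.append(message)
            return False
        # Routing: look up the receiver by message type.
        receiver = self._routes.get(message["type"])
        if receiver is None:
            self.rejected.append(message)
            return False
        # Transformation: the sender uses dicts, the receiver expects JSON text.
        receiver(json.dumps(message["body"], sort_keys=True))
        return True


broker = MiniBroker()
invoice_inbox: List[str] = []
broker.register("invoice", invoice_inbox.append)

ok = broker.handle({"type": "invoice", "body": {"total": 40, "id": 7}})
bad = broker.handle({"body": {}})  # fails validation: no "type"
```

The sender only knows the broker and the message type, and the receiver only sees JSON text, which is the reduced mutual awareness the overview describes.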

Process Driven Messaging Service

A process driven messaging service (PDMS) is a process-oriented service that exchanges messages and data calls. In a PDMS, jobs and triggers can be put together to create a workflow for a message. Messaging platforms are considered key Internet infrastructure elements. A concept that once mainly encompassed email and IM has evolved to embrace complex multimedia email, instant messaging, and related fixed and mobile messaging infrastructure. Arguably, everything transmitted over the Internet and wireless telecommunication links is a message. A PDMS exchanges all kinds of messages and data calls between systems, applications, and/or human beings, based on event-driven process chains.

Structure

In a process driven messaging service, jobs and triggers can be put together to create a workflow for a message, and the workflow can be seen as a process. A workflow is executed when a trigger is prompted. The trigger causes th ...
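One way to picture the relationship between jobs, triggers, and workflows is the small sketch below. It assumes that a job is a function from message to message and that a workflow runs its jobs in order. The source does not specify these details, and the `Workflow` and `Trigger` names are hypothetical.

```python
from typing import Callable, List

Job = Callable[[str], str]


class Workflow:
    """Hypothetical workflow: an ordered chain of jobs applied to a message."""

    def __init__(self, jobs: List[Job]) -> None:
        self._jobs = list(jobs)

    def run(self, message: str) -> str:
        # Each job receives the output of the previous one.
        for job in self._jobs:
            message = job(message)
        return message


class Trigger:
    """Hypothetical trigger: executes its workflow whenever it is fired."""

    def __init__(self, workflow: Workflow) -> None:
        self._workflow = workflow
        self.results: List[str] = []

    def fire(self, message: str) -> str:
        result = self._workflow.run(message)
        self.results.append(result)
        return result


def tag_as_routed(message: str) -> str:
    return f"[routed] {message}"


workflow = Workflow([str.strip, str.upper, tag_as_routed])
on_new_order = Trigger(workflow)
output = on_new_order.fire("  new order  ")
```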

Enterprise Architecture

Enterprise architecture (EA) is a business function concerned with the structures and behaviours of a business, especially business roles and processes that create and use business data. The international definition according to the Federation of Enterprise Architecture Professional Organizations is "a well-defined practice for conducting enterprise analysis, design, planning, and implementation, using a comprehensive approach at all times, for the successful development and execution of strategy. Enterprise architecture applies architecture principles and practices to guide organizations through the business, information, process, and technology changes necessary to execute their strategies. These practices utilize the various aspects of an enterprise to identify, motivate, and achieve these changes." The United States Federal Government is an example of an organization that practices EA, in this case with its Capital Planning an ...

Common Layers In An Information System Logical Architecture

In software engineering, multitier architecture (often referred to as n-tier architecture) is a client–server architecture in which presentation, application processing and data management functions are physically separated. The most widespread use of multitier architecture is the three-tier architecture (for example, Cisco's Hierarchical internetworking model).

N-tier application architecture provides a model by which developers can create flexible and reusable applications. By segregating an application into tiers, developers acquire the option of modifying or adding a specific tier, instead of reworking the entire application. N-tier architecture is a good fit for small and simple applications because of its simplicity and low cost. Also, it can be a good starting point when architectural requirements are not clear yet. A three-tier architecture is typically composed of a presentation tier, a logic tier, and a data tier. While the concepts of layer an ...
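The division of responsibilities among the presentation, logic, and data tiers can be sketched as three classes, each talking only to the one directly beneath it. This is a simplified illustration with hypothetical class and method names. The separation here is only logical (everything runs in one process), whereas a real multitier deployment would place the tiers on physically separate systems.

```python
from typing import Dict, Optional


class DataTier:
    """Stores and retrieves records; knows nothing about business rules."""

    def __init__(self) -> None:
        self._rows: Dict[int, str] = {}

    def save(self, key: int, value: str) -> None:
        self._rows[key] = value

    def load(self, key: int) -> Optional[str]:
        return self._rows.get(key)


class LogicTier:
    """Applies business rules and delegates storage to the data tier."""

    def __init__(self, data: DataTier) -> None:
        self._data = data

    def rename_user(self, user_id: int, name: str) -> str:
        cleaned = name.strip()
        if not cleaned:
            raise ValueError("name must not be empty")
        self._data.save(user_id, cleaned)
        return cleaned

    def get_user(self, user_id: int) -> Optional[str]:
        return self._data.load(user_id)


class PresentationTier:
    """Formats output for the user; talks only to the logic tier."""

    def __init__(self, logic: LogicTier) -> None:
        self._logic = logic

    def show_user(self, user_id: int) -> str:
        name = self._logic.get_user(user_id)
        if name is None:
            return f"User {user_id}: not found"
        return f"User {user_id}: {name}"


logic = LogicTier(DataTier())
view = PresentationTier(logic)
logic.rename_user(1, "  Ada  ")
```

Because the presentation tier depends only on the logic tier's interface, the data tier could be swapped (for example, for a database-backed one) without touching the other two classes, which is the flexibility the passage attributes to tiering.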
