WAN Optimization

WAN optimization is a collection of techniques for improving data transfer across wide area networks (WANs). In 2008, the WAN optimization market was estimated to be $1 billion, and was projected to grow to $4.4 billion by 2014, according to Gartner, a technology research firm. In 2015, Gartner estimated the WAN optimization market to be a $1.1 billion market. The most common measures of TCP data-transfer efficiency (i.e., optimization) are throughput, bandwidth requirements, latency, protocol optimization, and congestion, as manifested in dropped packets. In addition, the WAN itself can be classified with regard to the distance between endpoints and the amounts of data transferred. Two common business WAN topologies are Branch to Headquarters and Data Center to Data Center (DC2DC). In general, "Branch" WAN links are closer, use less bandwidth, support more simultaneous connections, support smaller connections and more short-lived connections, and handle a greater variety of protocols. The ...

Data Transfer

Data transmission and data reception or, more broadly, data communication or digital communications is the transfer and reception of data in the form of a digital bitstream or a digitized analog signal transmitted over a point-to-point or point-to-multipoint communication channel. Examples of such channels are copper wires, optical fibers, wireless communication using radio spectrum, storage media and computer buses. The data are represented as an electromagnetic signal, such as an electrical voltage, radiowave, microwave, or infrared signal. Analog transmission is a method of conveying voice, data, image, signal or video information using a continuous signal which varies in amplitude, phase, or some other property in proportion to that of a variable. The messages are either represented by a sequence of pulses by means of a line code (baseband transmission), or by a limited set of continuously varying waveforms (passband transmission), using a digital modulatio ...

Bandwidth Management

Bandwidth management is the process of measuring and controlling the communications (traffic, packets) on a network link, to avoid filling the link to capacity or overfilling the link (Internet Society on Bandwidth Management, https://www.internetsociety.org/wp-content/uploads/2017/08/BWroundtable_report-1.0.pdf), which would result in network congestion and poor performance of the network. Bandwidth is described by bit rate and measured in units of bits per second (bit/s) or bytes per second (B/s).

Bandwidth management mechanisms and techniques

Bandwidth management mechanisms may be used to further engineer performance and include:
* Traffic shaping (rate limiting):
** Token bucket
** Leaky bucket
** TCP rate control: artificially adjusting TCP window size as well as controlling the rate of ACKs being returned to the sender
* Scheduling algorithms:
** Weighted fair queuing (WFQ)
** Class based weighted fair queuing
** Weighted round robin (WRR)
** Deficit weighted round robin (D ...
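As an illustration of the token bucket technique named in the list above, here is a minimal sketch. The class name `TokenBucket`, its parameters, and the choice of bytes as the token unit are assumptions made for this example and do not come from any particular product. The bucket only decides whether a packet conforms to the configured rate; a real shaper would typically queue non-conforming packets and release them later.

```python
class TokenBucket:
    """Hypothetical token bucket: 'rate' tokens (bytes) are added per second,
    up to 'capacity' tokens. A packet conforms if enough tokens are available."""

    def __init__(self, rate, capacity):
        self.rate = rate          # refill rate in bytes per second
        self.capacity = capacity  # maximum burst size in bytes
        self.tokens = capacity    # start with a full bucket
        self.last = 0.0           # time of the last update, in seconds

    def allow(self, size, now):
        # Refill according to the time elapsed since the last call.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        # Spend tokens if the packet fits; otherwise report non-conformance.
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False


bucket = TokenBucket(rate=100.0, capacity=200.0)
burst_ok = bucket.allow(200, now=0.0)       # full burst fits the bucket
too_soon = bucket.allow(1, now=0.0)         # bucket is now empty
after_refill = bucket.allow(100, now=1.0)   # one second refills 100 bytes
```

The capacity bounds the largest burst that can pass at once, while the rate bounds the long-term average.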

Network Performance

Network performance refers to measures of service quality of a network as seen by the customer. There are many different ways to measure the performance of a network, as each network is different in nature and design. Performance can also be modeled and simulated instead of measured; one example of this is using state transition diagrams to model queuing performance or using a network simulator.

Performance measures

The following measures are often considered important:
* Bandwidth: commonly measured in bits/second, the maximum rate at which information can be transferred
* Throughput: the actual rate at which information is transferred
* Latency: the delay between the sender transmitting the information and the receiver decoding it; this is mainly a function of the signal's travel time and the processing time at any nodes the information traverses
* Jitter: variation in packet delay at the receiver of the information
* Error rate: the number of corrupted bits expressed as a percentage or fraction of the total sent

Ban ...
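The measures listed above can be computed from simple observations. The sketch below is illustrative only: the function names are hypothetical, and jitter is taken here as the mean absolute difference between consecutive packet delays, which is just one of several definitions in use.

```python
def throughput_bps(bytes_transferred, seconds):
    """Actual transfer rate in bits per second."""
    return bytes_transferred * 8 / seconds


def mean_jitter(delays):
    """Mean absolute difference between consecutive packet delays
    (an assumed, simplified definition of jitter)."""
    diffs = [abs(b - a) for a, b in zip(delays, delays[1:])]
    return sum(diffs) / len(diffs)


def error_rate(corrupted_bits, total_bits):
    """Corrupted bits as a fraction of the total bits sent."""
    return corrupted_bits / total_bits


rate = throughput_bps(1_000_000, 2.0)     # 1 MB moved in 2 seconds
jitter = mean_jitter([10, 12, 11, 15])    # per-packet delays in milliseconds
errors = error_rate(5, 1000)
```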

Wide Area Networks

A wide area network (WAN) is a telecommunications network that extends over a large geographic area. Wide area networks are often established with leased telecommunication circuits. Businesses, as well as schools and government entities, use wide area networks to relay data to staff, students, clients, buyers and suppliers from various locations around the world. In essence, this mode of telecommunication allows a business to effectively carry out its daily function regardless of location. The Internet may be considered a WAN.

Design options

The textbook definition of a WAN is a computer network spanning regions, countries, or even the world. However, in terms of the application of communication protocols and concepts, it may be best to view WANs as computer networking technologies used to transmit data over long distances, and between different networks. This distinction stems from the fact that common local area network (LAN) technologies operating at lower layers of the O ...

Traffic Shaping

Traffic shaping is a bandwidth management technique used on computer networks which delays some or all datagrams to bring them into compliance with a desired traffic profile. Traffic shaping is used to optimize or guarantee performance, improve latency, or increase usable bandwidth for some kinds of packets by delaying other kinds. It is often confused with traffic policing, the distinct but related practice of packet dropping and packet marking. The most common type of traffic shaping is application-based traffic shaping. In application-based traffic shaping, fingerprinting tools are first used to identify applications of interest, which are then subject to shaping policies. Some controversial cases of application-based traffic shaping include bandwidth throttling of peer-to-peer file sharing traffic. Many application protocols use encryption to circumvent application-based traffic shaping. Another type of traffic shaping is route-based traffic shaping. Route-based traffi ...
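To make the idea of delaying datagrams to fit a traffic profile concrete, here is a minimal sketch of a constant-rate shaper. It assumes a single FIFO queue and a fixed output rate; the function name `shape` and its arguments are hypothetical. Unlike policing, nothing is dropped: packets that arrive too quickly are simply held until the link is free.

```python
def shape(packets, rate_bps):
    """Return the departure time of each packet so that the output
    never exceeds rate_bps.

    packets: list of (arrival_time_seconds, size_bits), sorted by arrival.
    """
    departures = []
    link_free_at = 0.0
    for arrival, size_bits in packets:
        # A packet leaves when it has arrived AND the previous one is done.
        start = max(arrival, link_free_at)
        link_free_at = start + size_bits / rate_bps
        departures.append(start)
    return departures


# Two back-to-back packets and one late packet on a 1000 bit/s profile.
schedule = shape([(0.0, 1000), (0.0, 1000), (5.0, 1000)], rate_bps=1000.0)
```

In this example the second packet is delayed by one second, while the third is sent immediately because the link is idle when it arrives.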

CIFS

Server Message Block (SMB) is a communication protocol originally developed in 1983 by Barry A. Feigenbaum at IBM and intended to provide shared access to files and printers across nodes on a network of systems running IBM's OS/2. It also provides an authenticated inter-process communication (IPC) mechanism. In 1987, Microsoft and 3Com implemented SMB in LAN Manager for OS/2, at which time SMB used the NetBIOS service atop the NetBIOS Frames protocol as its underlying transport. Later, Microsoft implemented SMB in Windows NT 3.1 and has been updating it ever since, adapting it to work with newer underlying transports: TCP/IP and NetBT. SMB implementation consists of two vaguely named Windows services: "Server" (ID: LanmanServer) and "Workstation" (ID: LanmanWorkstation). It uses NTLM or Kerberos protocols for user authentication. In 1996, Microsoft published a version of SMB 1.0 with minor modifications under the Common Internet File System (CIFS) moniker. CIFS was compatible w ...

Protocol Spoofing

Protocol spoofing is used in data communications to improve performance in situations where an existing protocol is inadequate, for example due to long delays or high error rates.

Spoofing techniques

In most applications of protocol spoofing, a communications device such as a modem or router simulates ("spoofs") the remote endpoint of a connection to a locally attached host, while using a more appropriate protocol to communicate with a compatible remote device that performs the equivalent spoof at the other end of the communications link.

File transfer spoofing

Error correction and file transfer protocols typically work by calculating a checksum or CRC for a block of data known as a packet, and transmitting the resulting number at the end of the packet. At the other end of the connection, the receiver re-calculates the number based on the data it received and compares that result to what was sent from the remote machine. If the two match the packet was transmitted correctl ...
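The checksum-at-the-end-of-the-packet scheme described above can be sketched as follows. This is a hedged illustration, not the framing of any specific file transfer protocol: the 4-byte big-endian trailer and the function names are assumptions, and CRC-32 from Python's standard `zlib` module stands in for whatever checksum a given protocol uses.

```python
import zlib


def make_packet(payload: bytes) -> bytes:
    """Sender side: append the CRC-32 of the payload as a 4-byte trailer."""
    crc = zlib.crc32(payload)
    return payload + crc.to_bytes(4, "big")


def check_packet(packet: bytes) -> bool:
    """Receiver side: recompute the CRC and compare it with the trailer."""
    payload, trailer = packet[:-4], packet[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == trailer


packet = make_packet(b"hello")
intact = check_packet(packet)

# Flip one bit of the payload to simulate a transmission error.
corrupted = bytes([packet[0] ^ 0x01]) + packet[1:]
damaged_detected = not check_packet(corrupted)
```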

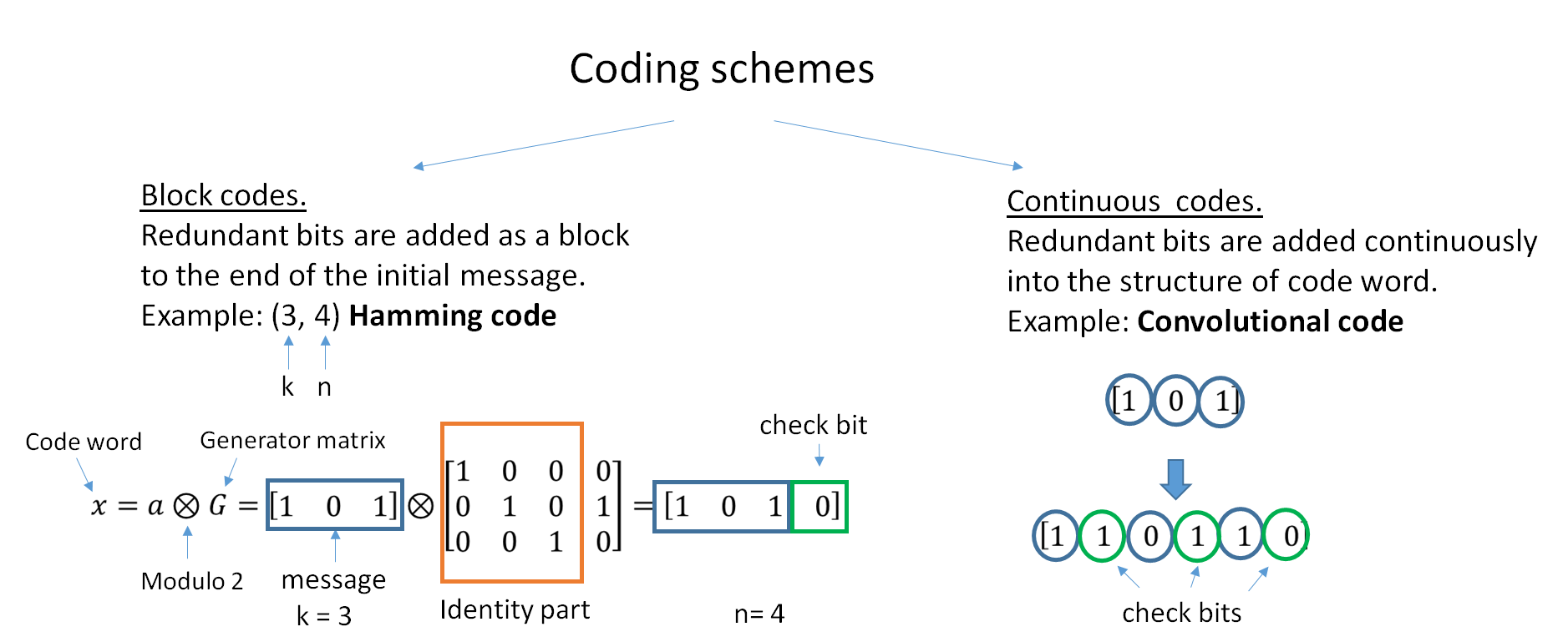

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a h ...
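As a worked illustration of the Hamming (7,4) code mentioned above, the sketch below encodes 4 data bits into 7 bits and corrects any single flipped bit on decode. It uses the conventional layout with parity bits at positions 1, 2 and 4; the function names are hypothetical, and the bit ordering is one common convention rather than the only one.

```python
def hamming74_encode(data):
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome, read as a binary number, is the 1-based error position.
    position = s1 + 2 * s2 + 4 * s3
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


sent = hamming74_encode([1, 0, 1, 1])
received = sent[:]
received[4] ^= 1                       # one bit is corrupted in transit
recovered = hamming74_decode(received)
```

The three parity bits are the fixed overhead the text refers to: 7 bits are transmitted for every 4 bits of data, and no retransmission request is needed for a single-bit error.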

Web Cache

A Web cache (or HTTP cache) is a system for optimizing the World Wide Web. It is implemented both client-side and server-side. The caching of multimedia and other files can result in less overall delay when browsing the Web.

Parts of the system

Forward and reverse

A forward cache is a cache outside the web server's network, e.g. in the client's web browser, in an ISP, or within a corporate network. A network-aware forward cache only caches heavily accessed items. A proxy server sitting between the client and web server can evaluate HTTP headers and choose whether to store web content. A reverse cache sits in front of one or more web servers, accelerating requests from the Internet and reducing peak server load. This is usually a content delivery network (CDN) that retains copies of web content at various points throughout a network.

HTTP options

The Hypertext Transfer Protocol (HTTP) defines three basic mechanisms for controlling caches: freshness, validation, and invalidatio ...
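The freshness mechanism can be illustrated with a small sketch of how a cache might decide whether a stored response is still usable. This is a deliberately simplified assumption-laden example: it looks only at the `max-age` directive of a `Cache-Control` header value and ignores other directives (such as `no-cache` or `s-maxage`), the `Expires` header, and the `Age` header. The function names are hypothetical.

```python
def max_age(cache_control):
    """Return the max-age value in seconds, or None if it is absent."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(stored_at, now, cache_control):
    """A stored response is fresh while its age is below max-age."""
    limit = max_age(cache_control)
    return limit is not None and (now - stored_at) < limit


header = "public, max-age=60"
still_fresh = is_fresh(stored_at=0, now=30, cache_control=header)
gone_stale = not is_fresh(stored_at=0, now=60, cache_control=header)
```

A stale entry is not necessarily discarded; a cache can instead revalidate it with the origin server, which is the validation mechanism named above.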

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding; encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a signal. ...
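The lossless case can be demonstrated with Python's standard `zlib` module, used here purely as a convenient example of an encoder and decoder pair. The sample data is made up and highly repetitive, so the size reduction shown is not representative of real traffic.

```python
import zlib

# Highly redundant sample data (an assumption made for the demonstration).
original = b"WAN optimization " * 100

compressed = zlib.compress(original)      # encoder
restored = zlib.decompress(compressed)    # decoder

# Lossless: the round trip reproduces the input exactly.
round_trip_ok = restored == original
saved_bytes = len(original) - len(compressed)
```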

Deduplication

The term deduplication refers generally to eliminating duplicate or redundant information.
* Data deduplication, in computer storage, refers to the elimination of redundant data
* Record linkage, in databases, refers to the task of finding entries that refer to the same entity in two or more files. Record linkage (also known as data matching, data linkage, entity resolution, and many other terms) is the task of finding records in a data set that refer to the same entity across different data sources (e.g., data files, books, websites, and da ...
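Data deduplication in the storage sense can be sketched by keeping one copy of each distinct chunk and replacing repeats with references. The example below is a minimal illustration under stated assumptions: chunks are supplied ready-made, SHA-256 digests serve as chunk identifiers, and the function names are hypothetical.

```python
import hashlib


def deduplicate(chunks):
    """Store each distinct chunk once; return (store, references)."""
    store = {}
    references = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)   # keep only the first copy
        references.append(digest)
    return store, references


def restore(store, references):
    """Rebuild the original byte stream from the references."""
    return b"".join(store[digest] for digest in references)


chunks = [b"aaaa", b"bbbb", b"aaaa"]
store, references = deduplicate(chunks)
rebuilt = restore(store, references)
```

Here three chunks are represented by two stored copies plus three references, and the original data can still be rebuilt exactly.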