
Web Search Timeline

This page provides a full timeline of web search engines, starting with the Archie search engine in 1990. It complements the history of web search engines page, which provides more qualitative detail on that history.

See also: Timeline of Google Search.

A search engine is a software system designed to carry out web searches. It searches the World Wide Web systematically for particular information specified in a textual web search query. The search results are generally presented in a ...
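This page does not describe how a search engine answers a text query. A common building block is an inverted index, which maps each word to the pages that contain it. The following is a minimal Python sketch assuming simple boolean AND matching with no ranking; the page URLs and texts are hypothetical.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each word to the set of page URLs that contain it (an inverted index)."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index

def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return URLs containing every query word (boolean AND), sorted for stable output."""
    words = tokenize(query)
    if not words:
        return []
    # .get() avoids inserting empty entries into the defaultdict for unknown words.
    matches = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(matches)

# Hypothetical pages standing in for crawled documents.
pages = {
    "http://example.org/a": "Archie indexes FTP archives",
    "http://example.org/b": "JumpStation indexed web pages",
    "http://example.org/c": "A web crawler fetches web pages for indexing",
}
index = build_index(pages)
print(search(index, "web pages"))
```

Production search engines also rank results; this sketch only shows the lookup and intersection step.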

Archie Search Engine

Archie is a tool for indexing FTP archives that lets users more easily locate specific files. It is considered the first Internet search engine. The original implementation was written in 1990 by Alan Emtage, then a postgraduate student at McGill University in Montreal, Canada. Archie was later superseded by other, more sophisticated search engines, including Jughead and Veronica. These were in turn superseded by search engines such as Yahoo! in 1995 and Google in 1997. Work on Archie ceased in the late 1990s. A legacy Archie server is still kept running for historical purposes at the University of Warsaw's Interdisciplinary Centre for Mathematical and Computational Modelling in Poland.

Archie began as a project for students and volunteer staff at the McGill University School of Computer Science in 1987, when Peter Deutsch (systems manager for the School), Alan Emtage, and Bill Heelan were asked to connect the School to the Internet. The name derives from the ...
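Archie's internal data structures and query syntax are not described on this page. As a rough illustration of indexing FTP archives by file name, here is a hypothetical Python sketch: it assumes each FTP host's file listing has already been collected, keys the index on bare file names, and answers case-insensitive substring queries. The host names, paths, and function names are made up.

```python
import posixpath
from collections import defaultdict

def build_filename_index(listings: dict[str, list[str]]) -> dict[str, list[tuple[str, str]]]:
    """Map each bare file name to every (host, full path) where it was seen.

    `listings` is assumed to map an FTP host name to the paths it serves.
    """
    index: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for host, paths in listings.items():
        for path in paths:
            index[posixpath.basename(path)].append((host, path))
    return index

def find_files(index: dict[str, list[tuple[str, str]]], pattern: str) -> list[tuple[str, str]]:
    """Return (host, path) pairs whose file name contains `pattern`, ignoring case."""
    needle = pattern.lower()
    hits = [loc for name, locs in index.items() if needle in name.lower() for loc in locs]
    return sorted(hits)

# Hypothetical listings gathered from two anonymous-FTP servers.
listings = {
    "ftp.example.com": ["/pub/gnu/emacs-18.59.tar.Z", "/pub/README"],
    "ftp.example.net": ["/mirror/gnu/emacs-18.59.tar.Z", "/pub/tex/latex.tar"],
}
index = build_filename_index(listings)
print(find_files(index, "emacs"))
```

The linear scan over all file names is chosen for clarity rather than speed.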

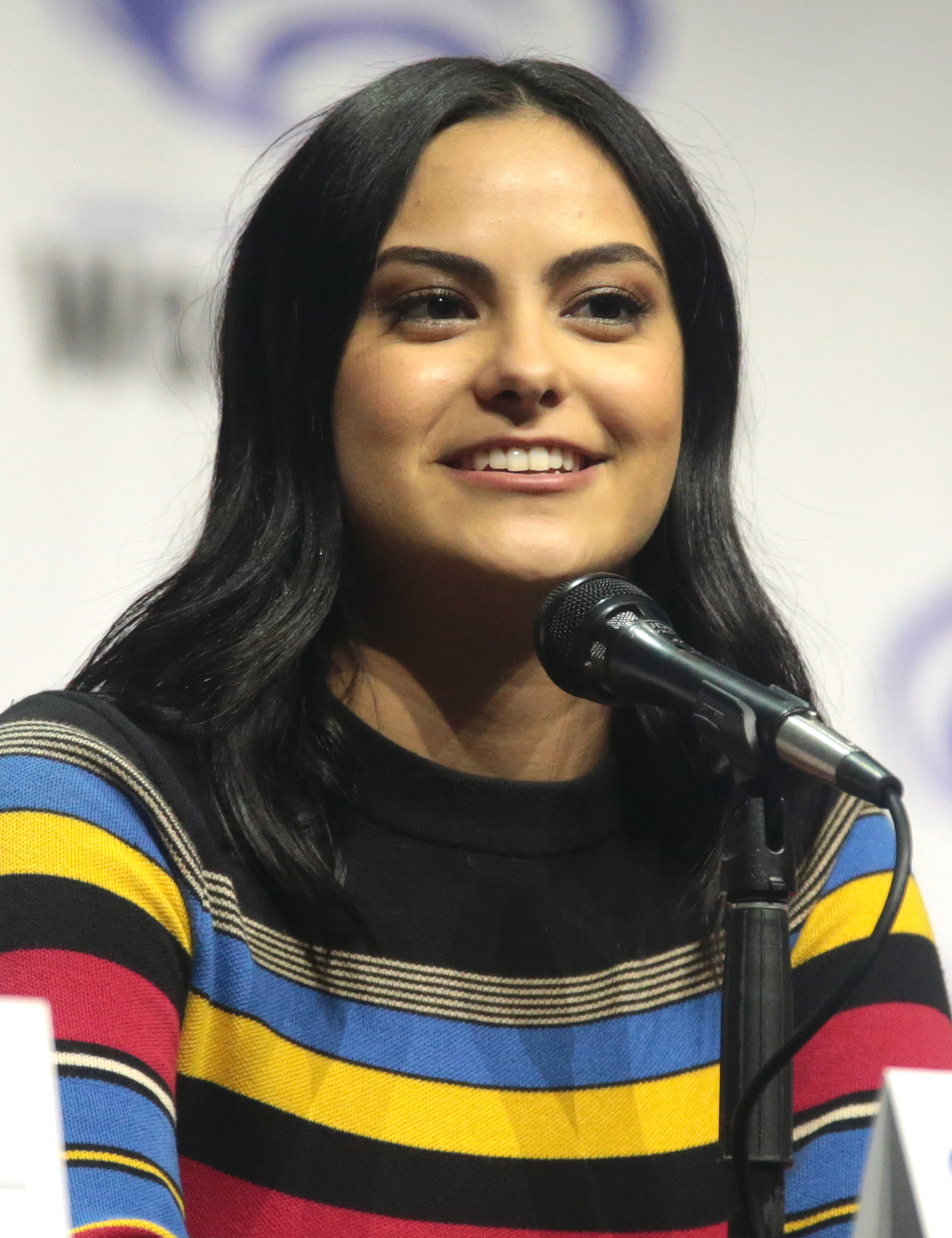

Veronica Lodge

Veronica Cecilia Lodge is one of the main characters in the Archie Comics franchise and is the keyboardist and one of the three vocalists of the rock band The Archies. She is from New York but currently lives in the town of Riverdale with her parents, Hiram Lodge and Hermione Lodge. She is portrayed by Camila Mendes in Riverdale and by Suhana Khan in The Archies.

Veronica Lodge is the only child of Hiram Lodge, the richest man in Riverdale, and his wife Hermione Lodge. She is called both by her name, "Veronica", and by her nicknames "Ronnie" and "Ron". Bob Montana knew the real-life Lodge family because he had once painted a mural for them; he combined that surname with the first name of actress Veronica Lake to create the character of Veronica Lodge. She was added in Pep Comics 38, just months after Archie Andrews, Betty Cooper, and Jughead Jones debuted, and just a few months before Reggie Mantle debuted. Veronica is a beautiful young woman w ...

JumpStation

JumpStation was the first WWW search engine that behaved, and appeared to the user, the way current web search engines do. It started indexing on 12 December 1993 and was announced on the Mosaic "What's New" webpage on 21 December 1993. It was hosted at the University of Stirling in Scotland. It was written by Jonathon Fletcher, from Scarborough, England, who graduated from the University with a first-class honours degree in Computing Science in the summer of 1992 and was later employed there as a systems administrator. Fletcher has since been called the "father of the search engine" ("Googling was born in Stirling", The Scotsman, 15 March 2009). JumpStation's development was discontinued when he left the University in late 1994, having failed to get any investors, including the Univ ...

Web Crawler

A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and is typically operated by search engines for the purpose of Web indexing (web spidering). Web search engines and some other websites use Web crawling or spidering software to update their own web content or their indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently.

Crawlers consume resources on the systems they visit and often visit sites unprompted. Issues of scheduling, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites that do not wish to be crawled to make this known to the crawling agent; for example, a robots.txt file can ask bots to index only parts of a website, or nothing at all. The number of Internet pages is extremely large ...
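The crawling process described above (fetch a page, extract its links, queue the new ones, and avoid overloading any one site) can be sketched as a breadth-first traversal. This is a minimal Python sketch, not any particular search engine's crawler: `fetch_links` and `allowed` are hypothetical caller-supplied hooks (the latter is where a robots.txt check would go), and the one-second per-host delay is an illustrative default rather than a standard value.

```python
import time
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urlsplit

def crawl(
    seeds: Iterable[str],
    fetch_links: Callable[[str], list[str]],
    allowed: Callable[[str], bool] = lambda url: True,
    max_pages: int = 100,
    delay_per_host: float = 1.0,
) -> list[str]:
    """Breadth-first crawl from `seeds`; returns URLs in the order they were fetched.

    fetch_links(url) is a hypothetical hook that downloads a page and returns the
    absolute URLs it links to. allowed(url) is a hypothetical robots.txt-style filter.
    """
    frontier = deque(dict.fromkeys(seeds))  # FIFO queue => breadth-first order; seeds de-duplicated
    seen = set(frontier)                    # every URL ever queued, so nothing is queued twice
    last_hit: dict[str, float] = {}         # host -> time of our most recent request to it
    visited: list[str] = []

    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        if not allowed(url):
            continue  # skip URLs the site has asked bots not to fetch
        host = urlsplit(url).netloc
        # Politeness: hit the same host at most once every delay_per_host seconds.
        wait = last_hit.get(host, float("-inf")) + delay_per_host - time.monotonic()
        if wait > 0:
            time.sleep(wait)
        last_hit[host] = time.monotonic()
        visited.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

# Hypothetical in-memory link graph so the example runs without network access.
links = {
    "http://a.example/": ["http://a.example/1", "http://b.example/"],
    "http://a.example/1": ["http://a.example/", "http://a.example/private/x"],
    "http://b.example/": ["http://a.example/1"],
    "http://a.example/private/x": [],
}
order = crawl(
    ["http://a.example/"],
    fetch_links=lambda url: links.get(url, []),
    allowed=lambda url: "/private/" not in url,
    delay_per_host=0.0,  # disable the politeness wait for the offline demo
)
print(order)
```

The `seen` set is what keeps the crawler from looping on pages that link back to each other, such as the first two URLs in the example graph.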

Martijn Koster

Martijn Koster (born c. 1970) is a Dutch software engineer noted for his pioneering work on Internet searching. While working at Nexor, Koster created ALIWEB, often cited as the first Web search engine, which he announced in November 1993 and presented in May 1994 at the First International Conference on the World Wide Web. Koster also developed ArchiePlex, a search engine for FTP sites that predates the Web, and CUSI, a simple tool that let users query different search engines in quick succession, which was useful in the early days of search when services returned varying results. Koster also created the Robots Exclusion Standard, also known as the robots exclusion protocol or simply robots.txt, which websites use to indicate to visiting web crawlers and other web robots which portions of the site they are allowed to visit.

University of Geneva

The University of Geneva (French: Université de Genève) is a public research university in Geneva, Switzerland. It was founded in 1559 by John Calvin as a theological seminary. It remained focused on theology until the 17th century, when it became a center of Enlightenment scholarship. In 1873, it dropped its religious affiliations and became officially secular. In 2009, the university celebrated the 450th anniversary of its founding. Today, it is the third-largest university in Switzerland by number of students, and almost 40% of its students come from foreign countries. The university pursues teaching, research, and community service as its primary objectives. In 2016, it was ranked 53rd worldwide by the Shanghai Academic Ranking of World Universities, 89th by the QS World University Rankings, and 131st by the Times Higher Education World University Rankings. UNIGE is a member of the League of European Research Universities (includi ...

Oscar Nierstrasz

Oscar Marius Nierstrasz (born ) is a professor at the Computer Science Institute (IAM) at the University of Bern and a specialist in software engineering and programming languages. He is active in the fields of:
* programming languages and mechanisms that support the flexible composition of high-level, component-based abstractions,
* tools and environments that support the understanding, analysis, and transformation of software systems into more flexible, component-based designs,
* secure software engineering, to understand the security and privacy challenges that current software systems face, and
* requirements engineering, to help stakeholders and developers maintain clear and moldable requirements.

He has led the Software Composition Group at the University of Bern since 1994 (as of December 2011).

Nierstrasz was born in Laren, the Netherlands. He lived there for three years, and then his parents, Thomas Oscar Duyck (born 1930) and Meta Maria van den Bos (1936-1988), moved ...

World Wide Web Wanderer

The World Wide Web Wanderer, also simply called the Wanderer, was a Perl-based web crawler that was first deployed in June 1993 to measure the size of the World Wide Web. It was developed at the Massachusetts Institute of Technology by Matthew Gray, who in 1993 also created www.mit.edu, one of the first 100 web servers in history. As of 2022, Gray had spent more than 15 years as a software engineer at Google. The crawler was used to generate an index called the Wandex later in 1993. While the Wanderer was probably the first web robot and, with its index, clearly had the potential to become a general-purpose WWW search engine, the author does not make this claim, and elsewhere it is stated that this was not i ...

Perl

Perl is a family of two high-level, general-purpose, interpreted, dynamic programming languages. "Perl" refers to Perl 5, but from 2000 to 2019 it also referred to its redesigned "sister language", Perl 6, before the latter's name was officially changed to Raku in October 2019. Though Perl is not officially an acronym, various backronyms are in use, including "Practical Extraction and Reporting Language". Perl was developed by Larry Wall in 1987 as a general-purpose Unix scripting language to make report processing easier. Since then, it has undergone many changes and revisions. Raku, which began as a redesign of Perl 5 in 2000, eventually evolved into a separate language. Both languages continue to be developed independently by different development teams and liberally borrow ideas from each other. The Perl languages borrow featur ...

Web Robot

An Internet bot, web robot, robot, or simply bot is a software application that runs automated tasks (scripts) over the Internet, usually to imitate human activity on the Internet, such as messaging, on a large scale. An Internet bot plays the client role in a client–server model, whereas the server role is usually played by web servers. Internet bots can perform simple, repetitive tasks much faster than a person could. The most extensive use of bots is for web crawling, in which an automated script fetches, analyzes, and files information from web servers. More than half of all web traffic is generated by bots. Efforts by web servers to restrict bots vary. Some servers have a robots.txt file that contains the rules governing bot behavior on that server. Any bot that does not follow the rules could, in theory, be denied access to, or removed from, the affected website. If the posted text file has no associated program/software/app, then ...
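Python's standard library includes `urllib.robotparser.RobotFileParser`, which evaluates robots.txt rules for a given user agent. The sketch below parses an already-downloaded robots.txt and asks whether each bot may fetch a URL; the file contents, site, and bot names are hypothetical. Note that the check runs on the bot's side: nothing in the file itself enforces the rules.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: all bots must avoid /private/, and "BadBot" is banned entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""

def make_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = make_parser(ROBOTS_TXT)
for agent, url in [
    ("ExampleBot", "https://www.example.com/index.html"),
    ("ExampleBot", "https://www.example.com/private/data.html"),
    ("BadBot", "https://www.example.com/index.html"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

To check a live site instead, a bot would call `set_url()` with the site's robots.txt URL and then `read()` before calling `can_fetch()`.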