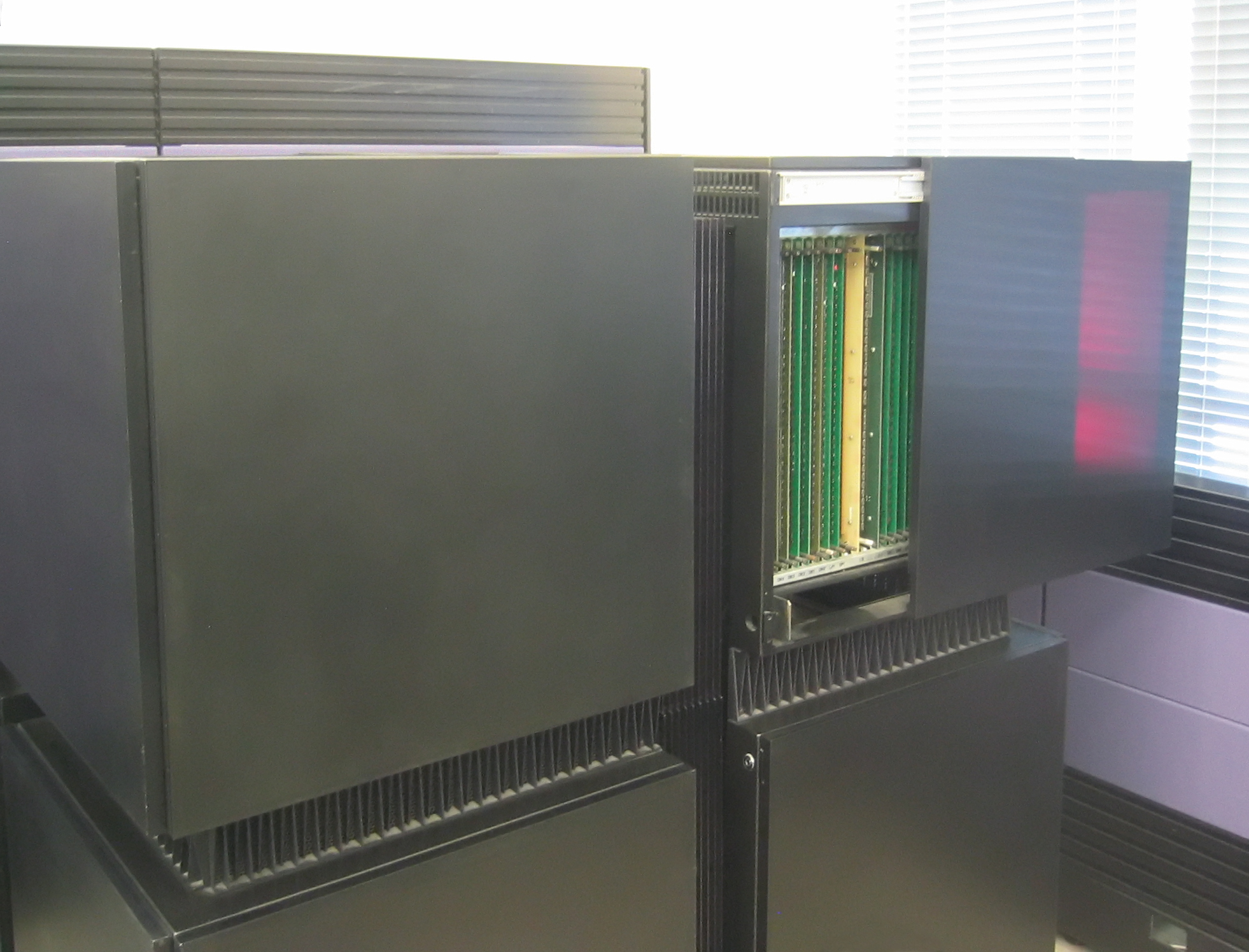

WARP (Systolic Array)

The Warp machines were three generations of increasingly general-purpose systolic array processors. Each generation became more general-purpose by increasing memory capacity and loosening the coupling between processors. Only the original WW-Warp forced truly lockstep sequencing of stages, which severely restricted its programmability but made it, in a sense, the purest "systolic array" design.

History: The Warp machines were created by Carnegie Mellon University (CMU) in conjunction with industrial partners G.E., Honeywell, and Intel, and were funded by the U.S. Defense Advanced Research Projects Agency (DARPA). The Warp projects were started in 1984 by H. T. Kung at Carnegie Mellon University. They yielded research results, publications, and advancements in general-purpose systolic hardware design, compiler design, and systolic software algorithms. A two-cell prototype of WW-Warp was completed at Carnegie Mellon in June 1985. Two essentially identical ten ...

Systolic Array

In parallel computer architectures, a systolic array is a homogeneous network of tightly coupled data processing units (DPUs) called cells or nodes. Each node or DPU independently computes a partial result as a function of the data received from its upstream neighbours, stores the result within itself, and passes it downstream. Systolic arrays were first used in Colossus, an early computer used to break German Lorenz ciphers during World War II. Due to the classified nature of Colossus, they were independently invented or rediscovered by H. T. Kung and Charles Leiserson, who described arrays for many dense linear algebra computations (matrix product, solving systems of linear equations, LU decomposition, etc.) for banded matrices. Early applications include computing greatest common divisors of integers and polynomials. They are sometimes classified as multiple-instruction single-data (MISD) architectures under Flynn's taxonomy, but this classification is questionable ...
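The compute, store, and pass-downstream behaviour described above can be sketched in a few lines of Python. This is only an illustrative simulation of one common arrangement (an "output-stationary" grid for matrix product), not the design of any particular machine; the function name `systolic_matmul` and the skewed feeding schedule are assumptions made for the example.

```python
def systolic_matmul(a, b):
    """Simulate a grid of cells computing the matrix product a @ b.

    Cell (i, j) keeps a running sum (its stored partial result), receives one
    operand from its left neighbour and one from its upper neighbour each
    step, and forwards both unchanged to the right and downward.
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]      # partial result stored in each cell
    a_reg = [[0] * m for _ in range(n)]    # operand each cell passes rightward
    b_reg = [[0] * m for _ in range(n)]    # operand each cell passes downward

    # The last useful operands reach cell (n-1, m-1) at step n + m + k - 3.
    for t in range(n + m + k - 2):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:
                    # Left edge: row i of a is fed in, delayed by i steps.
                    s = t - i
                    a_in = a[i][s] if 0 <= s < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    # Top edge: column j of b is fed in, delayed by j steps.
                    s = t - j
                    b_in = b[s][j] if 0 <= s < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in   # compute and store locally
                new_a[i][j] = a_in         # pass downstream (right)
                new_b[i][j] = b_in         # pass downstream (down)
        a_reg, b_reg = new_a, new_b
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

The skewed input schedule is what makes matching operands meet in the right cell at the right step; every cell runs the same small rule, and no cell reads memory outside its immediate neighbours.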

Magnetic Resonance Imaging

Magnetic resonance imaging (MRI) is a medical imaging technique used in radiology to generate pictures of the anatomy and the physiological processes inside the body. MRI scanners use strong magnetic fields, magnetic field gradients, and radio waves to form images of the organs in the body. MRI does not involve X-rays or the use of ionizing radiation, which distinguishes it from computed tomography (CT) and positron emission tomography (PET) scans. MRI is a medical application of nuclear magnetic resonance (NMR) which can also be used for imaging in other NMR applications, such as NMR spectroscopy. MRI is widely used in hospitals and clinics for medical diagnosis, staging and follow-up of disease. Compared to CT, MRI provides better contrast in images of soft tissues, e.g. in the brain or abdomen. However, it may be perceived as less comfortable by patients, due to the usually longer and louder measurements with the subject in a long, confining tube, although ...

Supercomputers

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 10¹⁸ FLOPS, so-called exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, including quantum mechan ...

AT&T

AT&T Inc., an abbreviation for its predecessor's former name, the American Telephone and Telegraph Company, is an American multinational telecommunications holding company headquartered at Whitacre Tower in Downtown Dallas, Texas. It is the world's third largest telecommunications company by revenue and the third largest wireless carrier in the United States behind T-Mobile and Verizon. As of 2023, AT&T was ranked 32nd on the *Fortune* 500 rankings of the largest United States corporations, with revenues of $122.4 billion. The modern company to bear the AT&T name began its history as the American District Telegraph Company, formed in St. Louis in 1878. After expanding services to Arkansas, Kansas, Oklahoma and Texas through a series of mergers, it became the Southwestern Bell Telephone Company in 1920. Southwestern Bell was a subsidiary of AT&T Corporation, ...

Ridge Computers

Ridge Computers, Inc., was an American computer manufacturer active from 1980 to 1990. The company began as a builder of deskside workstations and workgroup servers and progressed to superminicomputers. They claimed to have produced the first commercially available reduced instruction set computer (RISC) systems.

Company history: Ridge Computers was established in May 1980 in Santa Clara, California by six original founders, five of whom had come from Hewlett-Packard (HP), and one from Zilog. The company was named for the Montebello Ridge, where two of the founders used to go cycling. Ridge's first prototype was running by autumn 1981, and entered beta testing one and a half years later in early 1983. The system was presented at the Comdex show in autumn 1983. The earliest CPUs were bit slice processors built from "Fast" ("F" infix) type 7400-series integrated circuits and Programmable Array Logic (PAL) devices. The Ridge CPU's qualification as a RISC design has been challe ...

Connection Machine

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ...

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term *backpropagation* refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm – including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ...
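The backward, layer-by-layer reuse of chain-rule terms described above can be illustrated on a deliberately tiny network. The sketch below assumes a hypothetical two-weight model, y = w2 · tanh(w1 · x), with a squared-error loss; the names `forward` and `loss_and_grads` are made up for this example and do not come from any library.

```python
import math


def forward(w1, w2, x):
    """Two-layer scalar network: hidden h = tanh(w1 * x), output y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss_and_grads(w1, w2, x, target):
    """Return the loss and its gradients for one input-output example."""
    h, y = forward(w1, w2, x)
    loss = 0.5 * (y - target) ** 2

    # Backward pass, starting at the last layer.
    dy = y - target                 # dL/dy
    dw2 = dy * h                    # dL/dw2 = dL/dy * dy/dw2
    dh = dy * w2                    # dL/dh, computed once and reused below
    dw1 = dh * (1.0 - h * h) * x    # dL/dw1 = dL/dh * dh/d(w1*x) * d(w1*x)/dw1
    return loss, dw1, dw2


if __name__ == "__main__":
    w1, w2, x, target = 0.5, -1.5, 2.0, 1.0
    loss, dw1, dw2 = loss_and_grads(w1, w2, x, target)

    # Sanity check against a central finite difference on w1.
    eps = 1e-6
    lp, _, _ = loss_and_grads(w1 + eps, w2, x, target)
    lm, _, _ = loss_and_grads(w1 - eps, w2, x, target)
    print(dw1, (lp - lm) / (2 * eps))
```

Note that the code only computes the gradient; how `dw1` and `dw2` are then used (for example in a gradient descent step) is a separate choice, which matches the strict sense of the term given above.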

Sun Microsystems

Sun Microsystems, Inc., often known as Sun for short, was an American technology company that existed from 1982 to 2010 which developed and sold computers, computer components, software, and information technology services. Sun contributed significantly to the evolution of several key computing technologies, among them Unix, RISC processors, thin client computing, and virtualized computing. At its height, the Sun headquarters were in Santa Clara, California (part of Silicon Valley), on the former west campus of the Agnews Developmental Center. Sun products included computer servers and workstations built on its own RISC-based SPARC processor architecture, as well as on x86-based AMD Opteron and Intel Xeon processors. Sun also developed its own storage systems and a suite of software products, including the Unix-based SunOS and later Solaris operating s ...

Carnegie Mellon University

Carnegie Mellon University (CMU) is a private research university in Pittsburgh, Pennsylvania, United States. The institution was established in 1900 by Andrew Carnegie as the Carnegie Technical Schools. In 1912, it became the Carnegie Institute of Technology and began granting four-year degrees. In 1967, it became Carnegie Mellon University through its merger with the Mellon Institute of Industrial Research, founded in 1913 by Andrew Mellon and Richard B. Mellon and formerly a part of the University of Pittsburgh. The university consists of seven colleges, including the College of Engineering, the School of Computer Science, and the Tepper School of Business. The university has its main campus located 5 miles (8 km) from downtown Pittsburgh. It also has over a dozen degree-granting locations on six continents, including campuses in Qatar, Silicon Valley, and Kigali, Rwanda (Carnegie Mellon University Africa) and partnerships with universities nationally and glob ...

Single-precision Floating-point Format

Single-precision floating-point format (sometimes called FP32 or float32) is a computer number format, usually occupying 32 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. A floating-point variable can represent a wider range of numbers than a fixed-point variable of the same bit width at the cost of precision. A signed 32-bit integer variable has a maximum value of 2³¹ − 1 = 2,147,483,647, whereas an IEEE 754 32-bit base-2 floating-point variable has a maximum value of (2 − 2⁻²³) × 2¹²⁷ ≈ 3.4028235 × 10³⁸. All integers with seven or fewer decimal digits, and any 2ⁿ for a whole number −149 ≤ n ≤ 127, can be converted exactly into an IEEE 754 single-precision floating-point value. In the IEEE 754 standard, the 32-bit base-2 format is officially referred to as binary32; it was called single in IEEE 754-1985. IEEE 754 specifies additional floating-point types, such as 64-bit base-2 *doubl ...
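The limits quoted above can be checked with Python's standard `struct` module, which packs a value into the 4-byte binary32 layout. This is a small sketch for illustration; the helper names `f32_round_trip` and `f32_bits` are assumptions of the example, not part of any standard API.

```python
import struct


def f32_round_trip(x):
    """Convert x to binary32 and back to a Python float (binary64)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def f32_bits(x):
    """Return the raw 32-bit pattern of x stored as binary32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


# Largest finite binary32 value: (2 - 2**-23) * 2**127.
MAX_F32 = (2 - 2**-23) * 2.0**127
# Smallest positive (subnormal) binary32 value: 2**-149.
MIN_SUBNORMAL_F32 = 2.0**-149
# Largest signed 32-bit integer, for comparison.
MAX_INT32 = 2**31 - 1

if __name__ == "__main__":
    print(MAX_INT32)                              # 2147483647
    print(hex(f32_bits(MAX_F32)))                 # all exponent and fraction bits short of infinity
    print(hex(f32_bits(MIN_SUBNORMAL_F32)))       # only the lowest fraction bit set
    # Seven-digit integers survive the conversion unchanged.
    print(f32_round_trip(9999999.0) == 9999999.0)
    # 2**24 + 1 needs 25 significant bits, so it cannot be stored exactly.
    print(f32_round_trip(16777217.0) == 16777217.0)
```

The last check shows where exact integer representation stops: binary32 carries 24 significant bits, so integers above 2²⁴ are no longer all representable.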