
Voigt Profile

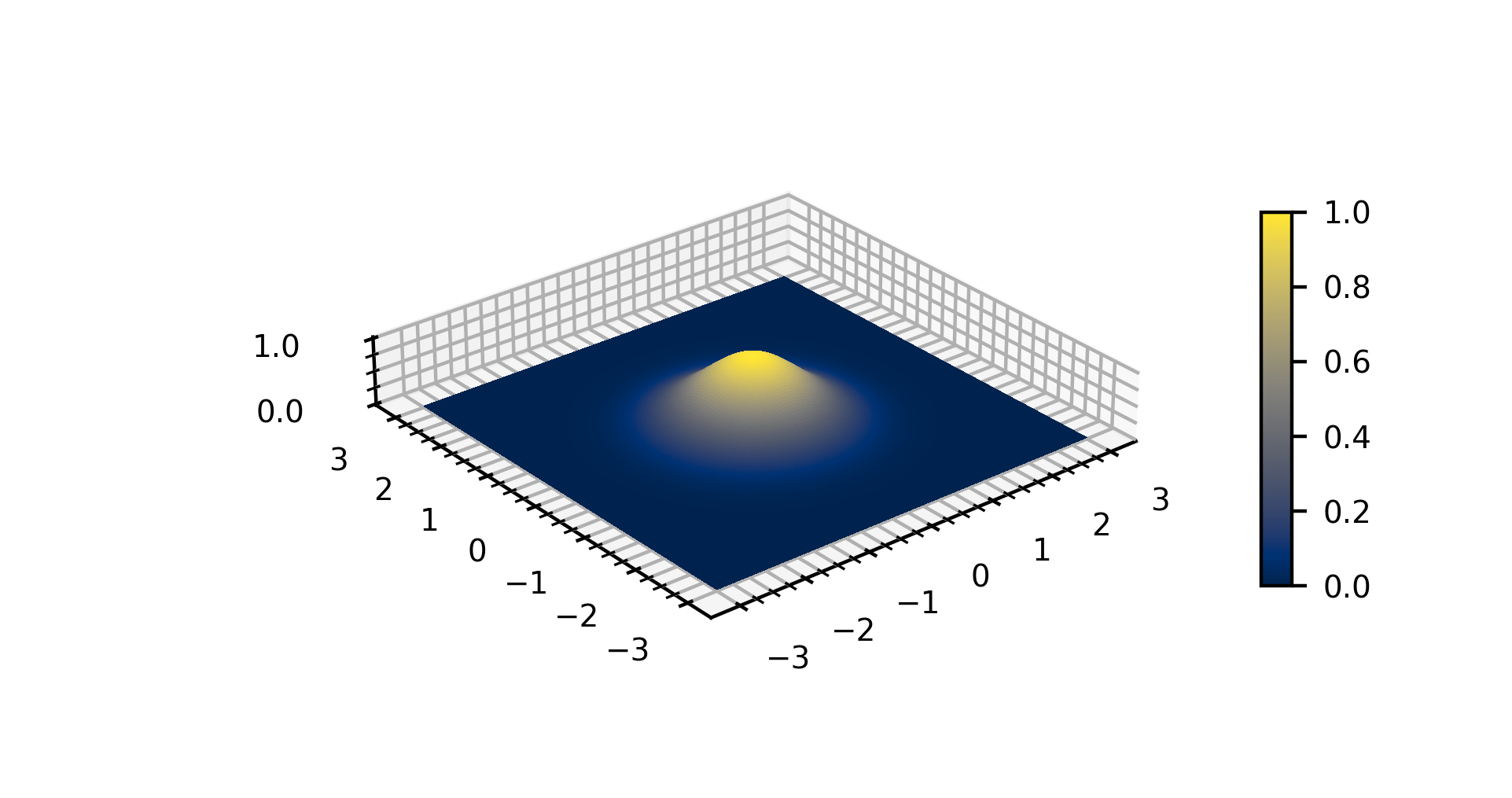

The Voigt profile (named after Woldemar Voigt) is a probability distribution given by a convolution of a Cauchy-Lorentz distribution and a Gaussian distribution. It is often used in analyzing data from spectroscopy or diffraction.

Definition

Without loss of generality, we can consider only centered profiles, which peak at zero. The Voigt profile is then

: V(x;\sigma,\gamma) \equiv \int_{-\infty}^{\infty} G(x';\sigma)\, L(x-x';\gamma)\, dx',

where x is the shift from the line center, G(x;\sigma) is the centered Gaussian profile:

: G(x;\sigma) \equiv \frac{e^{-x^2/(2\sigma^2)}}{\sigma\sqrt{2\pi}},

and L(x;\gamma) is the centered Lorentzian profile:

: L(x;\gamma) \equiv \frac{\gamma}{\pi\,(x^2+\gamma^2)}.

The defining integral can be evaluated as:

: V(x;\sigma,\gamma) = \frac{\operatorname{Re}[w(z)]}{\sigma\sqrt{2\pi}},

where \operatorname{Re}[w(z)] is the real part of the Faddeeva function evaluated for

: z = \frac{x + i\gamma}{\sigma\sqrt{2}}.

In the limiting cases \sigma = 0 and \gamma = 0, V(x;\sigma,\gamma) simplifies to L(x;\gamma) and G(x;\sigma), respectively.

History and applications

In spectroscopy, a ...
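The sketch below gives a rough numerical check on the definition above. It evaluates the convolution integral directly with the trapezoidal rule and compares the result at x = 0 with the Faddeeva-function form. It assumes pure Python with only the standard `math` module. The names `voigt_numeric` and `voigt_center_closed_form`, the ±10\sigma integration window, and the grid size are illustrative choices, not part of any library. The closed-form check is restricted to x = 0. There, z = i\gamma/(\sigma\sqrt{2}) is purely imaginary, and for real y the identity w(iy) = e^{y^2}\operatorname{erfc}(y) holds, so no complex error function is needed.

```python
import math


def gaussian(x, sigma):
    """Centered Gaussian profile G(x; sigma), normalized to unit area."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def lorentzian(x, gamma):
    """Centered Lorentzian profile L(x; gamma), normalized to unit area."""
    return gamma / (math.pi * (x * x + gamma * gamma))


def voigt_numeric(x, sigma, gamma, half_range=10.0, n=4001):
    """Approximate V(x; sigma, gamma) = integral of G(x') L(x - x') dx'.

    Trapezoidal rule over x' in [-half_range*sigma, half_range*sigma];
    the Gaussian factor is negligible outside that window (assumption).
    """
    a = -half_range * sigma
    h = 2.0 * half_range * sigma / (n - 1)
    total = 0.0
    for k in range(n):
        xp = a + k * h
        weight = 0.5 if k in (0, n - 1) else 1.0  # trapezoid end weights
        total += weight * gaussian(xp, sigma) * lorentzian(x - xp, gamma)
    return total * h


def voigt_center_closed_form(sigma, gamma):
    """V(0; sigma, gamma) = Re[w(z)] / (sigma*sqrt(2*pi)) at z = i*gamma/(sigma*sqrt(2)).

    On the imaginary axis w(iy) = exp(y^2) * erfc(y) is real, so math.erfc
    suffices. This shortcut does not cover x != 0.
    """
    y = gamma / (sigma * math.sqrt(2.0))
    return math.exp(y * y) * math.erfc(y) / (sigma * math.sqrt(2.0 * math.pi))


if __name__ == "__main__":
    sigma, gamma = 1.0, 1.0
    print(voigt_numeric(0.0, sigma, gamma))        # direct convolution
    print(voigt_center_closed_form(sigma, gamma))  # Faddeeva form at x = 0
```

For general x, a complex Faddeeva implementation is needed. SciPy, for example, provides `scipy.special.wofz` and `scipy.special.voigt_profile`; neither is used here.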

Continuous Distributions

Continuity or continuous may refer to:

Mathematics
* Continuity (mathematics), the opposing concept to discreteness; common examples include
** Continuous probability distribution or random variable in probability and statistics
** Continuous game, a generalization of games used in game theory
** Law of Continuity, a heuristic principle of Gottfried Leibniz
* Continuous function, in particular:
** Continuity (topology), a generalization to functions between topological spaces
** Scott continuity, for functions between posets
** Continuity (set theory), for functions between ordinals
** Continuity (category theory), for functors
** Graph continuity, for payoff functions in game theory
* Continuity theorem may refer to one of two results:
** Lévy's continuity theorem, on random variables
** Kolmogorov continuity theorem, on stochastic processes
* In geometry:
** Parametric continuity, for parametrised curves
** Geometric continuity, a concept primarily applied to the conic secti ...

Full Width At Half Maximum

In a distribution, full width at half maximum (FWHM) is the difference between the two values of the independent variable at which the dependent variable is equal to half of its maximum value. In other words, it is the width of a spectrum curve measured between those points on the y-axis which are half the maximum amplitude. Half width at half maximum (HWHM) is half of the FWHM if the function is symmetric. The term full duration at half maximum (FDHM) is preferred when the independent variable is time. FWHM is applied to such phenomena as the duration of pulse waveforms, the spectral width of sources used for optical communications, and the resolution of spectrometers. The convention of "width" meaning "half maximum" is also widely used in signal processing to define bandwidth as "width of frequency range where less than half the signal's power is attenuated", i.e., the power is at least half the maximum. In signal processing terms, this is at most −3 dB of attenuatio ...
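The definition above turns directly into a root-finding problem. The following is a minimal sketch. It assumes a symmetric function with a positive peak at a known `center` that decreases monotonically away from it. `fwhm` is a hypothetical helper, and bisection is just one simple choice of root finder. The helper locates the half-maximum crossing on one side (the HWHM) and doubles it, which is valid only because of the symmetry assumption.

```python
import math


def fwhm(f, center=0.0, step=1.0, iterations=200):
    """Full width at half maximum of a symmetric, unimodal function f.

    Assumes f has a positive peak at `center` and decreases monotonically on
    either side, so the right-hand half-maximum crossing can be found by
    bisection and doubled (FWHM = 2 * HWHM for a symmetric function).
    """
    half = f(center) / 2.0
    # Bracket the crossing: grow the offset until f falls to half maximum.
    hi = step
    while f(center + hi) > half:
        hi *= 2.0
        if hi > 1e300:
            raise ValueError("f never falls to half of its maximum")
    lo = 0.0
    # Bisection keeps f(center + lo) > half >= f(center + hi).
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if f(center + mid) > half:
            lo = mid
        else:
            hi = mid
    hwhm = 0.5 * (lo + hi)
    return 2.0 * hwhm


if __name__ == "__main__":
    sigma, gamma = 1.0, 0.5
    gauss = lambda x: math.exp(-x * x / (2 * sigma * sigma))
    lorentz = lambda x: 1.0 / (x * x + gamma * gamma)
    print(fwhm(gauss), 2 * math.sqrt(2 * math.log(2)) * sigma)  # both ≈ 2.35482
    print(fwhm(lorentz), 2 * gamma)                              # both ≈ 1.0
```

Applied to a Gaussian with standard deviation \sigma, the result matches 2\sqrt{2\ln 2}\,\sigma. Applied to a Lorentzian of half-width \gamma, it matches 2\gamma.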

Spectral Line Shape

Spectral line shape describes the form of a feature, observed in spectroscopy, corresponding to an energy change in an atom, molecule or ion. This shape is also referred to as the spectral line profile. Ideal line shapes include Lorentzian, Gaussian and Voigt functions, whose parameters are the line position, maximum height and half-width. Actual line shapes are determined principally by Doppler, collision and proximity broadening. For each system, the half-width of the shape function varies with temperature, pressure (or concentration) and phase. Knowledge of the shape function is needed for spectroscopic curve fitting and deconvolution.

Origins

An atomic transition is associated with a specific amount of energy, E. However, when this energy is measured by means of some spectroscopic technique, the line is not infinitely sharp, but has a particular shape. Numerous factors can contribute to the broadening of spectral lines. Broadening can only be mitigated by the use of spe ...
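The three parameters named above (line position, maximum height, half-width) are enough to write the two elementary ideal shapes in peak-height form rather than unit-area form. This is a minimal sketch. It assumes that "half-width" means the half width at half maximum (HWHM), and the function and parameter names are hypothetical. The Voigt shape has no elementary closed form in these terms and is omitted.

```python
import math


def lorentzian_line(x, position, height, hwhm):
    """Lorentzian line: peak `height` at `position`, half of it at position +/- hwhm."""
    u = (x - position) / hwhm
    return height / (1.0 + u * u)


def gaussian_line(x, position, height, hwhm):
    """Gaussian line: peak `height` at `position`, half of it at position +/- hwhm."""
    u = (x - position) / hwhm
    # exp(-ln2 * u^2) equals 1/2 when |x - position| == hwhm.
    return height * math.exp(-math.log(2.0) * u * u)


if __name__ == "__main__":
    # Same position, height and half-width; compare the wings of the two shapes.
    for k in (0, 1, 3):
        x = 500.0 + k * 0.2
        print(k, lorentzian_line(x, 500.0, 1.0, 0.2), gaussian_line(x, 500.0, 1.0, 0.2))
```

With matched parameters, both shapes agree at the peak and at the half-maximum points but differ strongly in the wings. At three half-widths from the center, the Lorentzian is still at one tenth of its height, while the Gaussian has fallen to 2^{-9} of its height.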

Gaussian Function

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form

: f(x) = \exp(-x^2)

and with parametric extension

: f(x) = a \exp\left( -\frac{(x-b)^2}{2c^2} \right)

for arbitrary real constants a, b and non-zero c. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter a is the height of the curve's peak, b is the position of the center of the peak, and c (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell".

Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value \mu = b and variance \sigma^2 = c^2. In this case, the Gaussian is of the form

: g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left( -\frac{1}{2} \frac{(x-\mu)^2}{\sigma^2} \right).

Gaussian functions are widely used in statistics to describe the normal distributions, in signal processing to define Gaussian filters, in image processing where two-dimensio ...
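The following is a short sketch of the two forms above, assuming pure Python. The names `gaussian`, `normal_pdf` and `trapezoid` are hypothetical helpers. The sketch builds the normal density as the parametric Gaussian with a = 1/(\sigma\sqrt{2\pi}), b = \mu, c = \sigma and checks numerically that it has unit area. In general, the area under the parametric form is a\,|c|\sqrt{2\pi}.

```python
import math


def gaussian(x, a, b, c):
    """Parametric Gaussian f(x) = a * exp(-(x - b)^2 / (2 c^2)), with c != 0."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c * c))


def normal_pdf(x, mu, sigma):
    """Normal density: the Gaussian with a = 1/(sigma*sqrt(2*pi)), b = mu, c = sigma."""
    return gaussian(x, 1.0 / (sigma * math.sqrt(2.0 * math.pi)), mu, sigma)


def trapezoid(f, lo, hi, n=20000):
    """Composite trapezoidal rule for the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    total = 0.5 * (f(lo) + f(hi))
    for k in range(1, n):
        total += f(lo + k * h)
    return total * h


if __name__ == "__main__":
    mu, sigma = 2.0, 0.5
    # Integrate over mu +/- 10 sigma; the tails beyond are negligible.
    print(trapezoid(lambda x: normal_pdf(x, mu, sigma), mu - 10 * sigma, mu + 10 * sigma))
```

The peak value gaussian(b, a, b, c) is exactly a, matching the description of a as the height of the peak.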

Thor Tepper-García

Thor (from Old Norse Þórr) is a prominent god in Germanic paganism. In Norse mythology, he is a hammer-wielding god associated with lightning, thunder, storms, sacred groves and trees, strength, the protection of humankind, hallowing, and fertility. Besides Old Norse Þórr, the deity occurs in Old English, Old Frisian, Old Saxon and Old High German, under names that all ultimately stem from a Proto-Germanic theonym meaning 'Thunder'. Thor is a prominently mentioned god throughout the recorded history of the Germanic peoples, from the Roman occupation of Germanic regions, to the Germanic expansions of the Migration Period, to his high popularity during the Viking Age. At that time, in the face of the Christianization of Scandinavia, emblems of his hammer were worn, and Norse pagan personal names containing the name of the god bear witness to his popularity. Due to the nature of the Germanic corpus, narratives featuring Thor ar ...

Complementary Error Function

In mathematics, the error function (also called the Gauss error function), often denoted by erf, is a complex function of a complex variable defined as:

: \operatorname{erf} z = \frac{2}{\sqrt{\pi}} \int_0^z e^{-t^2}\,\mathrm{d}t.

This integral is a special (non-elementary) sigmoid function that occurs often in probability, statistics, and partial differential equations. In many of these applications, the function argument is a real number. If the function argument is real, then the function value is also real.

In statistics, for non-negative values of x, the error function has the following interpretation: for a random variable Y that is normally distributed with mean 0 and standard deviation 1/\sqrt{2}, \operatorname{erf} x is the probability that Y falls in the range [-x, x].

Two closely related functions are the complementary error function (erfc), defined as

: \operatorname{erfc} z = 1 - \operatorname{erf} z,

and the imaginary error function (erfi), defined as

: \operatorname{erfi} z = -i \operatorname{erf} iz,

where i is the imaginary unit.

Name

The name "error function" an ...
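The sketch below makes the definition and the probabilistic interpretation concrete for real arguments. It integrates both the defining integral and the density of Y (mean 0, standard deviation 1/\sqrt{2}) with Simpson's rule, then compares them with Python's built-in `math.erf` and `math.erfc`, which accept real arguments only. The helper names are hypothetical, and Simpson's rule is an arbitrary choice of quadrature.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for the integral of f over [a, b] (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return s * h / 3.0


def erf_from_definition(x):
    """erf x = 2/sqrt(pi) * integral_0^x exp(-t^2) dt, for real x."""
    return 2.0 / math.sqrt(math.pi) * simpson(lambda t: math.exp(-t * t), 0.0, x)


def prob_within(x):
    """P(-x <= Y <= x) for Y normal with mean 0 and standard deviation 1/sqrt(2)."""
    sd = 1.0 / math.sqrt(2.0)
    pdf = lambda y: math.exp(-y * y / (2.0 * sd * sd)) / (sd * math.sqrt(2.0 * math.pi))
    return simpson(pdf, -x, x)


if __name__ == "__main__":
    for x in (0.5, 1.0, 2.0):
        print(x, erf_from_definition(x), prob_within(x), math.erf(x))
```

With standard deviation 1/\sqrt{2}, the density of Y simplifies to e^{-y^2}/\sqrt{\pi}. Integrating it over [-x, x] therefore reproduces the defining integral, which is why the two columns agree.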

Finite Differences

A finite difference is a mathematical expression of the form f(x + b) - f(x + a). If a finite difference is divided by b - a, one gets a difference quotient. The approximation of derivatives by finite differences plays a central role in finite difference methods for the numerical solution of differential equations, especially boundary value problems.

The difference operator, commonly denoted \Delta, is the operator that maps a function f to the function \Delta[f] defined by

: \Delta[f](x) = f(x+1) - f(x).

A difference equation is a functional equation that involves the finite difference operator in the same way as a differential equation involves derivatives. There are many similarities between difference equations and differential equations, especially in their solution methods. Certain recurrence relations can be written as difference equations by replacing iteration notation with finite differences. In numerical analysis, finite differences are widely used for approximating derivatives, and the term "fini ...
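The difference operator and the difference quotient can be sketched as small higher-order functions in Python. The names `forward_difference` and `difference_quotient` are hypothetical. The usage also assumes two common special cases to show how the quotient approximates a derivative: a = 0, b = h (a forward difference) and a = -h, b = h (a central difference).

```python
def forward_difference(f):
    """The difference operator: maps f to Delta[f], where Delta[f](x) = f(x + 1) - f(x)."""
    return lambda x: f(x + 1) - f(x)


def difference_quotient(f, x, a, b):
    """The finite difference f(x + b) - f(x + a), divided by b - a."""
    return (f(x + b) - f(x + a)) / (b - a)


if __name__ == "__main__":
    square = lambda x: x * x
    delta = forward_difference(square)
    print([delta(x) for x in range(4)])  # [1, 3, 5, 7], i.e. 2x + 1

    cube = lambda x: x ** 3  # exact derivative at x = 1 is 3
    h = 1e-3
    print(difference_quotient(cube, 1.0, 0.0, h))  # forward: error of order h
    print(difference_quotient(cube, 1.0, -h, h))   # central: error of order h^2
```

Applying \Delta twice to x^2 gives the constant 2, much as the second derivative of x^2 is 2. This is one small instance of the parallel between difference and differential equations described above.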

Jacobian Matrix

In vector calculus, the Jacobian matrix of a vector-valued function of several variables is the matrix of all its first-order partial derivatives. When this matrix is square, that is, when the function takes the same number of variables as input as the number of vector components of its output, its determinant is referred to as the Jacobian determinant. Both the matrix and (if applicable) the determinant are often referred to simply as the Jacobian in the literature.

Suppose \mathbf f : \mathbb R^n \to \mathbb R^m is a function such that each of its first-order partial derivatives exists on \mathbb R^n. This function takes a point \mathbf x \in \mathbb R^n as input and produces the vector \mathbf f(\mathbf x) \in \mathbb R^m as output. Then the Jacobian matrix of \mathbf f is defined to be an m \times n matrix, denoted by \mathbf J, whose (i, j)th entry is \mathbf J_{ij} = \frac{\partial f_i}{\partial x_j}, or explicitly

: \mathbf J = \begin{bmatrix} \dfrac{\partial \mathbf f}{\partial x_1} & \cdots & \dfrac{\partial \mathbf f}{\partial x_n} \end{bmatrix} = \begin{bmatrix} \nabla^{\mathrm T} f_1 \\ \vdots \\ \nabla^{\mathrm T} f_m \end{bmatrix} = \begin{bmatrix} \dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n} \end{bmatrix} ...
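The entry-wise definition \mathbf J_{ij} = \partial f_i / \partial x_j suggests a direct numerical approximation. The following is a minimal sketch, assuming the partial derivatives are estimated with central finite differences and a fixed step h. The name `jacobian` and the default h = 10^{-6} are illustrative choices, not taken from any library. Automatic differentiation would give exact derivatives, but that is a different technique from the one shown here.

```python
import math


def jacobian(f, x, h=1e-6):
    """Approximate the m x n Jacobian of f: R^n -> R^m at the point x.

    Entry (i, j) is estimated by the central difference
    (f_i(x + h e_j) - f_i(x - h e_j)) / (2h), where e_j is the j-th unit vector.
    """
    n = len(x)
    columns = []
    for j in range(n):
        xp = list(x)
        xm = list(x)
        xp[j] += h
        xm[j] -= h
        fp, fm = f(xp), f(xm)
        # Column j holds the partial derivatives of every f_i with respect to x_j.
        columns.append([(p - q) / (2.0 * h) for p, q in zip(fp, fm)])
    m = len(columns[0])
    # Transpose so that row i holds the partial derivatives of f_i.
    return [[columns[j][i] for j in range(n)] for i in range(m)]


if __name__ == "__main__":
    # f(x, y) = (x^2 y, 5x + sin y); the exact Jacobian is [[2xy, x^2], [5, cos y]].
    f = lambda v: [v[0] ** 2 * v[1], 5 * v[0] + math.sin(v[1])]
    for row in jacobian(f, [1.0, 2.0]):
        print(row)
```

Each row of the result corresponds to \nabla^{\mathrm T} f_i and each column to \partial \mathbf f / \partial x_j, matching the two views of \mathbf J in the explicit form.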