Vision Transformer

A Vision Transformer (ViT) is a transformer that is targeted at vision processing tasks such as image recognition. Transformers found their initial applications in natural language processing (NLP) tasks, as demonstrated by language models such as BERT and GPT-3. By contrast, the typical image processing system uses a convolutional neural network (CNN). Well-known CNN architectures include Xception, ResNet, EfficientNet, DenseNet, and Inception. Transformers measure the relationships between pairs of input tokens (words in the case of text strings), termed attention. The cost is quadratic in the number of tokens. For images, the basic unit of analysis is the pixel. However, computing relationships for every pixel pair in a typical image is prohibitive in terms of memory and computation. Instead, ViT computes relationships among pixels in various small sections of the image (e.g., 16x16 pixels), at a drastically reduced cost. The sections (with positional embeddings) a ...
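
The cost comparison above can be made concrete with a little arithmetic. The sketch below is illustrative only: the helper name `attention_pairs` and the 224x224 image size are assumptions made for the example, and only the 16x16 section size comes from the passage.

```python
def attention_pairs(height, width, patch=1):
    """Count the token pairs that full self-attention must score.

    Tokens are non-overlapping patch x patch squares;
    patch=1 means one token per pixel.
    """
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    tokens = (height // patch) * (width // patch)
    return tokens * tokens  # quadratic in the number of tokens


# Assumed example size (224x224); not a figure taken from the text above.
per_pixel = attention_pairs(224, 224)            # 50,176 tokens
per_patch = attention_pairs(224, 224, patch=16)  # 196 tokens
print(per_pixel, per_patch, per_pixel // per_patch)
```

With these assumed sizes, grouping pixels into 16x16 sections cuts the number of token pairs by a factor of 65,536.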

Transformer (Machine Learning Model)

A transformer is a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. It is used primarily in the fields of natural language processing (NLP) and computer vision (CV). Like recurrent neural networks (RNNs), transformers are designed to process sequential input data, such as natural language, with applications towards tasks such as translation and text summarization. However, unlike RNNs, transformers process the entire input all at once. The attention mechanism provides context for any position in the input sequence. For example, if the input data is a natural language sentence, the transformer does not have to process one word at a time. This allows for more parallelization than RNNs and therefore reduces training times. Transformers were introduced in 2017 by a team at Google Brain and are increasingly the model of choice for NLP problems, replacing RNN models such as long short-term memor ...
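
A minimal sketch of the self-attention idea described above, in plain Python. It is a simplification under stated assumptions: queries, keys, and values are the raw token vectors, whereas a real transformer first applies learned projections, and the function names `softmax` and `self_attention` are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention over a list of equal-length vectors.

    Assumption: no learned query/key/value projections are applied.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        # Score this token against every token in the input at once.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Each output is a weighted mix of all token vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return outputs


print(self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
```

Each output row depends on the whole input, and the loop over queries has no dependency between iterations, which is what makes the computation easy to parallelize.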

Inductive Bias

The inductive bias (also known as learning bias) of a learning algorithm is the set of assumptions that the learner uses to predict outputs of given inputs that it has not encountered. In machine learning, one aims to construct algorithms that are able to learn to predict a certain target output. To achieve this, the learning algorithm is presented with some training examples that demonstrate the intended relation of input and output values. Then the learner is supposed to approximate the correct output, even for examples that have not been shown during training. Without any additional assumptions, this problem cannot be solved since unseen situations might have an arbitrary output value. The kind of necessary assumptions about the nature of the target function are subsumed in the phrase "inductive bias". A classical example of an inductive bias is Occam's razor, assuming that the simplest consistent hypothesis about the target function is actually the best. Here consisten ...

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Deep-learning architectures such as deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems. ANNs have various differences from biological brains. Specifically, artificial ...

Perceiver

Perceiver is a transformer adapted to be able to process non-textual data, such as images, sounds, video, and spatial data. Transformers underlie other notable systems such as BERT and GPT-3, which preceded Perceiver. It adopts an asymmetric attention mechanism to distill inputs into a latent bottleneck, allowing it to learn from large amounts of heterogeneous data. Perceiver matches or outperforms specialized models on classification tasks. Perceiver was introduced in June 2021 by DeepMind. It was followed by Perceiver IO in August 2021. Perceiver is designed without modality-specific elements. For example, it does not have elements specialized to handle images, or text, or audio. Further, it can handle multiple correlated input streams of heterogeneous types. It uses a small set of latent units that forms an attention bottleneck through which the inputs must pass. One benefit is to eliminate the quadratic scaling problem found in early transformers. Earlier work used c ...

TensorFlow

TensorFlow is a free and open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks. "It is machine learning software being used for various kinds of perceptual and language understanding tasks" (Jeffrey Dean, minute 0:47 / 2:17 from YouTube clip). TensorFlow was developed by the Google Brain team for internal Google use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released the updated version of TensorFlow, named TensorFlow 2.0, in September 2019. TensorFlow can be used in a wide variety of programming languages, including Python, JavaScript, C++, and Java. This flexibility lends itself to a range of applications in many different sectors. Starting in 2011, Google Brain built DistBelief as a proprietary machine learning system based on deep learning neural n ...

PyTorch

PyTorch is a machine learning framework based on the Torch library, used for applications such as computer vision and natural language processing, originally developed by Meta AI and now part of the Linux Foundation umbrella. It is free and open-source software released under the modified BSD license. Although the Python interface is more polished and the primary focus of development, PyTorch also has a C++ interface. A number of pieces of deep learning software are built on top of PyTorch, including Tesla Autopilot, Uber's Pyro, Hugging Face's Transformers, PyTorch Lightning, and Catalyst. PyTorch provides two high-level features: tensor computing (like NumPy) with strong acceleration via graphics processing units (GPUs), and deep neural networks built on a tape-based automatic differentiation system. Meta (formerly known as Facebook) operates both PyTorch and Convolutional Architecture for Fast Feature Embedding (Caffe2), but models defined by the two framewor ...

Autonomous Driving

A self-driving car, also known as an autonomous car, driver-less car, or robotic car (robo-car), is a car that is capable of traveling without human input (Xie, S.; Hu, J.; Bhowmick, P.; Ding, Z.; Arvin, F., "Distributed Motion Planning for Safe Autonomous Vehicle Overtaking via Artificial Potential Field", IEEE Transactions on Intelligent Transportation Systems, 2022). Self-driving cars use sensors to perceive their surroundings, such as optical and thermographic cameras, radar, lidar, ultrasound/sonar, GPS, odometry and inertial measurement units. Control systems interpret sensory information to create a three-dimensional model of the surroundings. Based on the model, the car identifies appropriate navigation paths, and strategies for managing traffic controls (stop signs, etc.) and obstacles (Hu, J.; Bhowmick, P.; Jang, I.; Arvin, F.; Lanzon, A., "A Decentralized Cluster Formation Containment Framework for Multirobot Systems", IEEE Transactions on Robotics, 2021). Once the techn ...

Cluster Analysis

Cluster analysis or clustering is the task of grouping a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some sense) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Cluster analysis itself is not one specific algorithm, but the general task to be solved. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or particular statistical distributions. Clustering can therefore be formulated as a multi-object ...
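
One of the many algorithms that fits this description is k-means, which adopts the "small distances between cluster members" notion of a cluster. The sketch below is an illustration under stated assumptions rather than a reference implementation: the data is one-dimensional, the caller supplies the starting centers, the iteration count is fixed, and the name `kmeans_1d` is hypothetical.

```python
def kmeans_1d(points, centers, iterations=10):
    """Lloyd's algorithm (k-means) on 1-D data with caller-supplied centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster
        # (an empty cluster keeps its previous center).
        centers = [
            sum(members) / len(members) if members else old
            for members, old in zip(clusters, centers)
        ]
    return centers, clusters


# Made-up sample data for illustration.
centers, clusters = kmeans_1d([1.0, 1.2, 0.8, 9.0, 9.5, 10.1], centers=[0.0, 5.0])
print(centers, clusters)
```

A density-based or distribution-based method would group the same data according to a different notion of what a cluster is, which is why the choice of algorithm matters.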

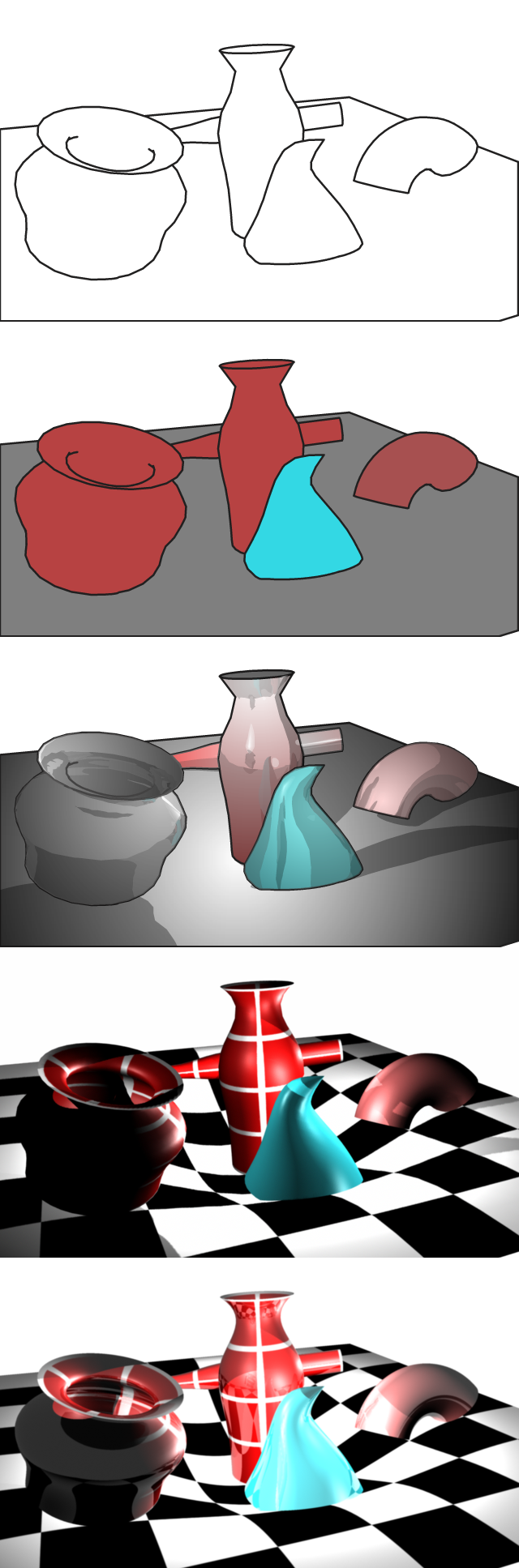

Image Synthesis

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output. Rendering is one of the major sub-topics of 3D computer graphics, and in practice it is always connected to the others. It is the last major step in the graph ...

Anomaly Detection

In data analysis, anomaly detection (also referred to as outlier detection and sometimes as novelty detection) is generally understood to be the identification of rare items, events or observations which deviate significantly from the majority of the data and do not conform to a well-defined notion of normal behaviour. Such examples may arouse suspicions of being generated by a different mechanism, or appear inconsistent with the remainder of that set of data. Anomaly detection finds application in many domains including cyber security, medicine, machine vision, statistics, neuroscience, law enforcement and financial fraud, to name only a few. Anomalies were initially sought out so that they could be rejected or omitted from the data to aid statistical analysis, for example to compute the mean or standard deviation. They were also removed to improve predictions from models such as linear regression, and more recently their removal aids the performance of machine learning algorithms. However, ...
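
As a small illustration of "deviating significantly from the majority of the data", the sketch below uses a z-score rule, which is only one simple technique among many. The function name `zscore_outliers`, the threshold of 2.0, and the sample readings are assumptions made for the example.

```python
import statistics


def zscore_outliers(values, threshold=2.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)  # population standard deviation
    if spread == 0:
        return []  # all values identical: nothing deviates
    return [v for v in values if abs(v - mean) / spread > threshold]


# Made-up readings with one obviously unusual value.
readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 50]
print(zscore_outliers(readings))
```

On this sample the rule flags only the value 50. Note that the outlier itself inflates the mean and standard deviation, which is one reason robust alternatives are often preferred in practice.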

Image Segmentation

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects (sets of pixels). The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze (Linda G. Shapiro and George C. Stockman (2001): “Computer Vision”, pp. 279–325, New Jersey, Prentice-Hall). Image segmentation is typically used to locate objects and boundaries (lines, curves, etc.) in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics. The result of image segmentation is a set of segments that collectively cover the entire image, or a set of contours extracted from the image (see edge detection). Each of the pixels in a region is similar with respect to some characteristic or computed ...
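
The "one label per pixel" definition above can be shown with intensity thresholding, one of the simplest segmentation techniques. This is a sketch under stated assumptions: the image is a small made-up grid of grayscale values, and the function name `threshold_segment` and the threshold of 128 are hypothetical choices for the example.

```python
def threshold_segment(image, threshold):
    """Label each pixel 1 (foreground) if its intensity is >= threshold, else 0."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in image]


# Made-up 3x4 grayscale image (0 = black, 255 = white).
image = [
    [12, 200, 210, 15],
    [10, 220, 230, 20],
    [11, 13, 14, 16],
]
for row in threshold_segment(image, threshold=128):
    print(row)
```

Every pixel receives exactly one label, so the two segments together cover the entire image, and pixels sharing a label share the characteristic of being on the same side of the threshold.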

Deepfake

Deepfakes (a portmanteau of "deep learning" and "fake") are synthetic media in which a person in an existing image or video is replaced with someone else's likeness. While the act of creating fake content is not new, deepfakes leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content that can more easily deceive. The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders, or generative adversarial networks (GANs). Deepfakes have garnered widespread attention for their potential use in creating child sexual abuse material, celebrity pornographic videos, revenge porn, fake news, hoaxes, bullying, and financial fraud. This has elicited responses from both industry and government to detect and limit their use. From traditional entertainment to gaming, deepfake technology has evolved to be increasingly ...