
VEGAS Algorithm

The VEGAS algorithm, due to G. Peter Lepage, is a method for reducing error in Monte Carlo simulations by using a known or approximate probability distribution function to concentrate the search in those areas of the integrand that make the greatest contribution to the final integral. The VEGAS algorithm is based on importance sampling. It samples points from the probability distribution described by the function |f|, so that the points are concentrated in the regions that make the largest contribution to the integral. The GNU Scientific Library (GSL) provides a VEGAS routine. Sampling method: In general, if the Monte Carlo integral of f over a volume \Omega is sampled with points distributed according to a probability distribution described by the function g, we obtain an estimate \mathrm{E}_g(f; N) = \frac{1}{N} \sum_i^N f(x_i) / g(x_i). The variance of the new estimate is then \mathrm{Var}_g(f; N) = \mathrm{Var}(f/g; N), where \mathrm{Var}(f; N) is the variance of the original estimate, ...
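The estimate \mathrm{E}_g(f; N) above can be illustrated with a minimal Python sketch. It is not the VEGAS algorithm itself (there is no adaptive grid), only plain importance sampling with a fixed g. The integrand f(x) = 3x^2 on [0, 1], the sampling density g(x) = 2x, and all function names are assumptions chosen for illustration; the exact integral is 1.

```python
import math
import random


def f(x):
    """Illustrative integrand (assumption): integrates to 1 on [0, 1]."""
    return 3.0 * x * x


def g(x):
    """Illustrative sampling density (assumption): g(x) = 2x on (0, 1]."""
    return 2.0 * x


def sample_g(rng):
    """Draw x distributed according to g by inverting its CDF, G(x) = x^2."""
    u = 1.0 - rng.random()  # u lies in (0, 1], so x is never 0 and g(x) > 0
    return math.sqrt(u)


def mean_and_variance(samples):
    """Sample mean and unbiased sample variance."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean, var


def importance_estimate(n, rng):
    """E_g(f; N) = (1/N) * sum of f(x_i) / g(x_i), with x_i drawn from g."""
    weights = []
    for _ in range(n):
        x = sample_g(rng)
        weights.append(f(x) / g(x))
    return mean_and_variance(weights)


def uniform_estimate(n, rng):
    """Plain Monte Carlo estimate with x_i uniform on [0, 1)."""
    return mean_and_variance([f(rng.random()) for _ in range(n)])


if __name__ == "__main__":
    rng = random.Random(0)
    print("importance:", importance_estimate(20000, rng))
    print("uniform:   ", uniform_estimate(20000, rng))
```

Because g roughly follows the shape of f here, the per-sample variance of f/g is smaller than that of f under uniform sampling, which is the effect the variance formula describes.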

Variance Reduction

In mathematics, more specifically in the theory of Monte Carlo methods, variance reduction is a procedure used to increase the precision of the estimates obtained for a given simulation or computational effort. Every output random variable from the simulation is associated with a variance which limits the precision of the simulation results. In order to make a simulation statistically efficient, i.e., to obtain a greater precision and smaller confidence intervals for the output random variable of interest, variance reduction techniques can be used. The main ones are common random numbers, antithetic variates, control variates, importance sampling, stratified sampling, moment matching, conditional Monte Carlo and quasi random variables. For simulation with black-box models subset simulation and line sampling can also be used. Under these headings are a variety of specialized techniques; for example, particle transport simulations make extensive use of "weight windows" and ...

Monte Carlo Simulation

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of ris ...

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc. Introduction: A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by \Omega, is the set of all possible outcomes of a ra ...

Integrand

In mathematics, an integral assigns numbers to functions in a way that describes displacement, area, volume, and other concepts that arise by combining infinitesimal data. The process of finding integrals is called integration. Along with differentiation, integration is a fundamental, essential operation of calculus, and serves as a tool to solve problems in mathematics and physics involving the area of an arbitrary shape, the length of a curve, and the volume of a solid, among others. The integrals enumerated here are those termed definite integrals, which can be interpreted as the signed area of the region in the plane that is bounded by the graph of a given function between two points in the real line. Conventionally, areas above the horizontal axis of the plane are positive while areas below are negative. Integrals also refer to the concept of an ...

Integral

In mathematics, an integral assigns numbers to functions in a way that describes displacement, area, volume, and other concepts that arise by combining infinitesimal data. The process of finding integrals is called integration. Along with differentiation, integration is a fundamental, essential operation of calculus, and serves as a tool to solve problems in mathematics and physics involving the area of an arbitrary shape, the length of a curve, and the volume of a solid, among others. The integrals enumerated here are those termed definite integrals, which can be interpreted as the signed area of the region in the plane that is bounded by the graph of a given function between two points in the real line. Conventionally, areas above the horizontal axis of the plane are positive while areas below are negative. Integrals also refer to the concept of ...

Importance Sampling

Importance sampling is a Monte Carlo method for evaluating properties of a particular distribution, while only having samples generated from a different distribution than the distribution of interest. Its introduction in statistics is generally attributed to a paper by Teun Kloek and Herman K. van Dijk in 1978, but its precursors can be found in statistical physics as early as 1949. Importance sampling is also related to umbrella sampling in computational physics. Depending on the application, the term may refer to the process of sampling from this alternative distribution, the process of inference, or both. Basic theory: Let X\colon \Omega\to \mathbb{R} be a random variable in some probability space (\Omega,\mathcal{F},P). We wish to estimate the expected value of X under P, denoted E[X;P]. If we have statistically independent random samples x_1, \ldots, x_n, generated according to P, then an empirical estimate of E[X;P] is \widehat{E}_n[X;P] = \frac{1}{n} \sum_{i=1}^n x_i ...

GNU Scientific Library

The GNU Scientific Library (or GSL) is a software library for numerical computations in applied mathematics and science. The GSL is written in C; wrappers are available for other programming languages. The GSL is part of the GNU Project and is distributed under the GNU General Public License. Project history: The GSL project was initiated in 1996 by physicists Mark Galassi and James Theiler of Los Alamos National Laboratory. They aimed at writing a modern replacement for widely used but somewhat outdated Fortran libraries. They carried out the overall design and wrote early modules; with that ready they recruited other scient ...

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by \sigma^2, s^2, \operatorname{Var}(X), V(X), or \mathbb{V}(X). An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviatio ...

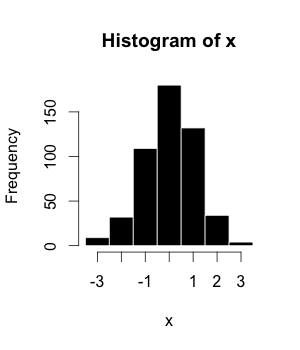

Histogram

A histogram is an approximate representation of the distribution of numerical data. The term was first introduced by Karl Pearson. To construct a histogram, the first step is to "bin" (or "bucket") the range of values (that is, divide the entire range of values into a series of intervals) and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent and are often (but not required to be) of equal size. If the bins are of equal size, a bar is drawn over the bin with height proportional to the frequency, i.e. the number of cases in each bin. A histogram may also be normalized to display "relative" frequencies showing the proportion of cases that fall into each of several categories, with the sum of the heights equaling 1. However, bins need not be of equal width; in that case, the erected rectangle is defined to have its area proportional to the frequenc ...
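The binning and normalization steps described above can be sketched in a few lines of Python. This is a minimal illustration for equal-width bins only; the function names `histogram` and `relative_frequencies` and the sample data are assumptions, not part of any library.

```python
def histogram(values, n_bins, lo, hi):
    """Count how many values fall into each of n_bins equal-width bins on [lo, hi]."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in values:
        if lo <= v <= hi:
            # Values equal to hi are placed in the last bin.
            idx = min(int((v - lo) / width), n_bins - 1)
            counts[idx] += 1
    return counts


def relative_frequencies(counts):
    """Normalize counts so that the bar heights sum to 1."""
    total = sum(counts)
    return [c / total for c in counts]


if __name__ == "__main__":
    data = [0.1, 0.2, 0.6, 0.9, 1.0]
    counts = histogram(data, n_bins=2, lo=0.0, hi=1.0)
    print(counts)                        # [2, 3]
    print(relative_frequencies(counts))  # [0.4, 0.6]
```

Values outside [lo, hi] are silently ignored in this sketch; a real application might prefer to raise an error or add overflow bins.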

Projection (mathematics)

In mathematics, a projection is a mapping of a set (or other mathematical structure) into a subset (or sub-structure), which is equal to its square for mapping composition, i.e., which is idempotent. The restriction to a subspace of a projection is also called a projection, even if the idempotence property is lost. An everyday example of a projection is the casting of shadows onto a plane (sheet of paper): the projection of a point is its shadow on the sheet of paper, and the projection (shadow) of a point on the sheet of paper is that point itself (idempotency). The shadow of a three-dimensional sphere is a closed disk. Originally, the notion of projection was introduced in Euclidean geometry to denote the projection of the three-dimensional Euclidean space onto a plane in it, like the shadow example. The two main projections of this kind are: The projection from a point onto a plane or central projection: If C is a point, called the center of projection, then th ...

Las Vegas Algorithm

In computing, a Las Vegas algorithm is a randomized algorithm that always gives correct results; that is, it always produces the correct result or it informs about the failure. However, the runtime of a Las Vegas algorithm differs depending on the input. The usual definition of a Las Vegas algorithm includes the restriction that the expected runtime be finite, where the expectation is carried out over the space of random information, or entropy, used in the algorithm. An alternative definition requires that a Las Vegas algorithm always terminates (is effective), but may output a symbol not part of the solution space to indicate failure in finding a solution. The nature of Las Vegas algorithms makes them suitable in situations where the number of possible solutions is limited, and where verifying the correctness of a candidate solution is relatively easy while finding a solution is complex. Las Vegas algorithms are prominent in the field of artificial intelligence, and in other ...

Monte Carlo Integration

In mathematics, Monte Carlo integration is a technique for numerical integration using random numbers. It is a particular Monte Carlo method that numerically computes a definite integral. While other algorithms usually evaluate the integrand at a regular grid, Monte Carlo randomly chooses points at which the integrand is evaluated. This method is particularly useful for higher-dimensional integrals. There are different methods to perform a Monte Carlo integration, such as uniform sampling, stratified sampling, importance sampling, sequential Monte Carlo (also known as a particle filter), and mean-field particle methods. Overview: In numerical integration, methods such as the trapezoidal rule use a deterministic approach. Monte Carlo integration, on the other hand, employs a non-deterministic approach: each realization provides a different outcome. In Monte Carlo, the final outcome is an approximation of the correct value with respective error bars, and the correct value ...
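As a rough illustration of uniform-sampling Monte Carlo integration with an error bar, the Python sketch below estimates the area of a quarter unit disk (exactly \pi/4) by drawing random points in the unit square. The test region, the function names, and the binomial standard-error formula used for the error bar are choices made for this example only.

```python
import math
import random


def in_quarter_disk(x, y):
    """Indicator of the quarter unit disk inside the unit square."""
    return x * x + y * y <= 1.0


def mc_integrate_2d(indicator, n, rng):
    """Estimate the area of a region in [0, 1)^2 from n uniform random points.

    Returns the estimate and its standard error, sqrt(p * (1 - p) / n).
    """
    hits = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        if indicator(x, y):
            hits += 1
    p = hits / n
    std_err = math.sqrt(p * (1.0 - p) / n)
    return p, std_err


if __name__ == "__main__":
    rng = random.Random(1)
    area, err = mc_integrate_2d(in_quarter_disk, 20000, rng)
    print(f"pi is approximately {4 * area:.4f} +/- {4 * err:.4f}")
```

Running it with a different seed gives a different estimate, which reflects the non-deterministic nature described above; the standard error shrinks in proportion to 1/sqrt(n).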