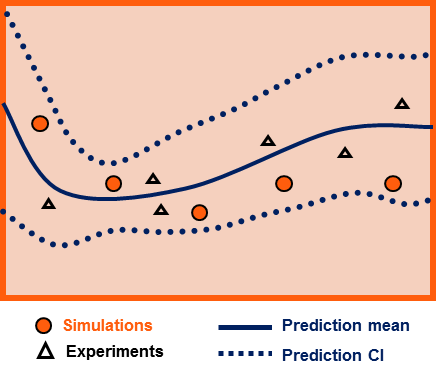

Uncertainty Quantification

Uncertainty quantification (UQ) is the science of quantitatively characterizing and reducing uncertainties in both computational and real-world applications. It tries to determine how likely certain outcomes are when some aspects of the system are not exactly known. An example is predicting the acceleration of a human body in a head-on crash with another car: even if the speed were exactly known, small differences in the manufacturing of individual cars, how tightly every bolt has been tightened, etc., will lead to different results that can only be predicted in a statistical sense. Many problems in the natural sciences and engineering are also rife with sources of uncertainty. Computer experiments on computer simulations are the most common approach to studying problems in uncertainty quantification.

Sources

Uncertainty can enter mathematical models and experimental measurements in various contexts. One way to categorize the sources of uncertainty is to consider: Paramet ...
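The idea of predicting an outcome "in a statistical sense" can be illustrated with a small computer experiment. The sketch below is only an illustration under stated assumptions: the spring-mass impact model, the function names, and every numeric value (speed, mass, stiffness and its spread) are hypothetical and do not come from the text above.

```python
import math
import random
import statistics

def peak_deceleration(speed, stiffness, mass):
    """Toy model (assumption): undamped linear spring impact, a_max = v * sqrt(k / m)."""
    return speed * math.sqrt(stiffness / mass)

def propagate_uncertainty(n_samples, rng):
    """Push random draws of the uncertain stiffness through the toy model."""
    speed = 15.0   # m/s, treated as exactly known (hypothetical value)
    mass = 1200.0  # kg, treated as exactly known (hypothetical value)
    outputs = []
    for _ in range(n_samples):
        # Hypothetical manufacturing scatter: stiffness ~ Normal(3e5, 3e4) N/m,
        # clamped at zero so the square root stays defined.
        stiffness = max(rng.gauss(3.0e5, 3.0e4), 0.0)
        outputs.append(peak_deceleration(speed, stiffness, mass))
    return outputs

rng = random.Random(0)  # fixed seed so the experiment is repeatable
samples = propagate_uncertainty(10_000, rng)
mean_a = statistics.mean(samples)
std_a = statistics.stdev(samples)
print(f"peak deceleration: mean {mean_a:.1f} m/s^2, std {std_a:.1f} m/s^2")
```

The output is a distribution of results rather than a single number, which is the point of the crash example: the input speed is fixed, yet the prediction still carries a spread.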

Uncertainty

Uncertainty refers to epistemic situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that are already made, or to the unknown. Uncertainty arises in partially observable or stochastic environments, as well as due to ignorance, indolence, or both. It arises in any number of fields, including insurance, philosophy, physics, statistics, economics, finance, medicine, psychology, sociology, engineering, metrology, meteorology, ecology and information science.

Concepts

Although the terms are used in various ways among the general public, many specialists in decision theory, statistics and other quantitative fields have defined uncertainty, risk, and their measurement as:

Uncertainty: The lack of certainty, a state of limited knowledge where it is impossible to exactly describe the existing state, a future outcome, or more than one possible outcome.

Measurement of uncertainty: A set of possible states or outc ...

Moment (mathematics)

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. The mathematical concept is closely related to the concept of moment in physics. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from 0 to ∞) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematic ...
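The four moments named above for a probability distribution can be computed directly for a small data set. This is a minimal sketch, assuming the data are treated as an equal-weight discrete distribution (so no sample-size correction is applied); the function names and the data values are illustrative only.

```python
import math

def raw_moment(values, order):
    """Moment about zero: the average of v**order."""
    return sum(v ** order for v in values) / len(values)

def central_moment(values, order):
    """Moment about the mean: the average of (v - mean)**order."""
    mean = raw_moment(values, 1)
    return sum((v - mean) ** order for v in values) / len(values)

def standardized_moment(values, order):
    """Central moment divided by the standard deviation raised to the same order."""
    std = math.sqrt(central_moment(values, 2))
    return central_moment(values, order) / std ** order

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mean = raw_moment(data, 1)               # first moment: expected value
variance = central_moment(data, 2)       # second central moment
skewness = standardized_moment(data, 3)  # third standardized moment
kurtosis = standardized_moment(data, 4)  # fourth standardized moment
print(mean, variance, skewness, kurtosis)
```

For this data set the mean is 5 and the variance is 4; the positive skewness reflects the longer tail toward the value 9.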

Importance Sampling

Importance sampling is a Monte Carlo method for evaluating properties of a particular distribution, while only having samples generated from a different distribution than the distribution of interest. Its introduction in statistics is generally attributed to a paper by Teun Kloek and Herman K. van Dijk in 1978, but its precursors can be found in statistical physics as early as 1949. Importance sampling is also related to umbrella sampling in computational physics. Depending on the application, the term may refer to the process of sampling from this alternative distribution, the process of inference, or both.

Basic theory

Let $X\colon \Omega\to \mathbb{R}$ be a random variable in some probability space $(\Omega,\mathcal{F},P)$. We wish to estimate the expected value of $X$ under $P$, denoted $\mathbf{E}[X;P]$. If we have statistically independent random samples $x_1, \ldots, x_n$, generated according to $P$, then an empirical estimate of $\mathbf{E}[X;P]$ is

$$\widehat{\mathbf{E}}_{n}[X;P] = \frac{1}{n} \sum_{i=1}^{n} x_i$$ ...
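To make the idea of "samples from a different distribution" concrete, the sketch below estimates a small tail probability of a standard normal variable using draws from a shifted normal proposal, reweighting each draw by the density ratio. The target, the proposal, the threshold, and all function names are assumptions chosen for illustration; they are not taken from the text above.

```python
import math
import random

def normal_pdf(x, mean=0.0, std=1.0):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def tail_probability_is(threshold, n_samples, rng):
    """Estimate P(X > threshold) for X ~ N(0, 1) from draws of N(threshold, 1)."""
    total = 0.0
    for _ in range(n_samples):
        x = rng.gauss(threshold, 1.0)  # draw from the proposal, not the target
        if x > threshold:
            # Importance weight: target density divided by proposal density.
            total += normal_pdf(x) / normal_pdf(x, mean=threshold)
    return total / n_samples

rng = random.Random(0)  # fixed seed for repeatability
estimate = tail_probability_is(3.0, 20_000, rng)
exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))  # closed form for comparison
print(f"importance sampling: {estimate:.6f}, exact: {exact:.6f}")
```

Sampling directly from the target would hit the event only about once in every 740 draws, whereas the shifted proposal lands in the tail about half the time, which is why the weighted estimate is far less noisy for the same number of samples.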

Bias Correction

Bias is a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial, or unfair. Biases can be innate or learned. People may develop biases for or against an individual, a group, or a belief. In science and engineering, a bias is a systematic error. Statistical bias results from an unfair sampling of a population, or from an estimation process that does not give accurate results on average.

Etymology

The word appears to derive from Old Provençal into Old French biais, "sideways, askance, against the grain", whence French biais, "a slant, a slope, an oblique". It seems to have entered English via the game of bowls, where it referred to balls made with a greater weight on one side. This expanded to the figurative use, "a one-sided tendency of the mind", and, at first especially in law, "undue propensity or prejudice".

Types of bias

Cognitive biases

A cognitive bias is a repeating or basic miss ...

Utility

As a topic of economics, utility is used to model worth or value. Its usage has evolved significantly over time. The term was introduced initially as a measure of pleasure or happiness as part of the theory of utilitarianism by moral philosophers such as Jeremy Bentham and John Stuart Mill. The term has been adapted and reapplied within neoclassical economics, which dominates modern economic theory, as a utility function that represents a single consumer's preference ordering over a choice set but is not comparable across consumers. This concept of utility is personal and based on choice rather than on pleasure received, and so is specified more rigorously than the original concept, but this makes it less useful (and controversial) for ethical decisions.

Utility function

Consider a set of alternatives among which a person can make a preference ordering. The utility obtained from these alternatives is an unknown function of the utilities obtained from each alternative, not the sum of ...

Reliability Engineering

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability describes the ability of a system or component to function under stated conditions for a specified period of time. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time. The reliability function is theoretically defined as the probability of success at time t, which is denoted R(t). This probability is estimated from detailed (physics of failure) analysis, previous data sets, or through reliability testing and reliability modelling. Availability, testability, maintainability and maintenance are often defined as a part of "reliability engineering" in reliability programs. Reliability often plays the key role in the cost-effectiveness of systems. Reliability engineering deals with the p ...

Variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by $\sigma^2$, $s^2$, $\operatorname{Var}(X)$, $V(X)$, or $\mathbb{V}(X)$. An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for e ...

Random Sample

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset (a statistical sample) of individuals from within a statistical population to estimate characteristics of the whole population. Statisticians attempt to collect samples that are representative of the population in question. Sampling has lower costs and faster data collection than measuring the entire population and can provide insights in cases where it is infeasible to measure an entire population. Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly in stratified sampling. Results from probability theory and statistical theory are employed to guide the practice. In business and medical research, sampling is widely used for gathering information about a population. Acceptance sampling is used to determine if ...

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction

A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by $\Omega$, is the set of all possible outcomes of a random phe ...

Bayesian Probability

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief. The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with hypotheses; that is, with propositions whose truth or falsity is unknown. In the Bayesian view, a probability is assigned to a hypothesis, whereas under frequentist inference, a hypothesis is typically tested without being assigned a probability. Bayesian probability belongs to the category of evidential probabilities; to evaluate the probability of a hypothesis, the Bayesian probabilist specifies a prior probability. This, in turn, is then updated to a posterior probability in the light of new, re ...
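The step from a prior probability to a posterior probability can be shown with a two-hypothesis example. This is a minimal sketch using Bayes' rule; the hypotheses ("fair" and "biased" coin), their priors, and the likelihood values are invented for illustration and are not part of the text above.

```python
from fractions import Fraction

def bayes_update(prior, likelihood):
    """Return posterior probabilities: prior times likelihood, renormalized."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # total probability of the observation
    return {h: weight / evidence for h, weight in unnormalized.items()}

# Hypothetical setup: a coin is either fair or biased toward heads.
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
# Probability of observing heads under each hypothesis (assumed values).
likelihood_heads = {"fair": Fraction(1, 2), "biased": Fraction(3, 4)}

posterior = bayes_update(prior, likelihood_heads)
print(posterior)
```

After one observed head, the probability assigned to the "biased" hypothesis rises from 1/2 to 3/5. Exact fractions are used here only so the arithmetic is easy to check by hand.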

Polynomial Chaos

Polynomial chaos (PC), also called polynomial chaos expansion (PCE) and Wiener chaos expansion, is a method for representing a random variable in terms of a polynomial function of other random variables. The polynomials are chosen to be orthogonal with respect to the joint probability distribution of these random variables. PCE can be used, e.g., to determine the evolution of uncertainty in a dynamical system when there is probabilistic uncertainty in the system parameters. Note that despite its name, PCE has no immediate connections to chaos theory. PCE was first introduced in 1938 by Norbert Wiener using Hermite polynomials to model stochastic processes with Gaussian random variables. It was introduced to the physics and engineering community by R. Ghanem and P. D. Spanos in 1991 and generalized to other orthogonal polynomial families by D. Xiu and G. E. Karniadakis in 2002. Mathematically rigorous proofs of existence and convergence of generalized PCE were given by O. G ...
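A one-dimensional Hermite expansion gives a feel for how such a representation works. The sketch below expands Y = X² for a standard Gaussian X in probabilists' Hermite polynomials, computing each coefficient by projection with a simple trapezoidal rule. The test function, the truncation degree, the integration settings, and the function names are all assumptions made for illustration; practical PCE codes typically use Gaussian quadrature or regression instead.

```python
import math

def hermite_e(n, x):
    """Probabilists' Hermite polynomial He_n(x) via He_{k+1} = x*He_k - k*He_{k-1}."""
    prev, curr = 1.0, x
    if n == 0:
        return prev
    for k in range(1, n):
        prev, curr = curr, x * curr - k * prev
    return curr

def gaussian_expectation(g, half_width=10.0, steps=4000):
    """Approximate E[g(X)], X ~ N(0, 1), by the trapezoidal rule on [-10, 10]."""
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        x = -half_width + i * h
        end_weight = 0.5 if i in (0, steps) else 1.0
        total += end_weight * g(x) * math.exp(-0.5 * x * x)
    return total * h / math.sqrt(2.0 * math.pi)

def pce_coefficients(f, degree):
    """Projection coefficients c_n = E[f(X) He_n(X)] / n!, since E[He_n(X)^2] = n!."""
    return [
        gaussian_expectation(lambda x, n=n: f(x) * hermite_e(n, x)) / math.factorial(n)
        for n in range(degree + 1)
    ]

coeffs = pce_coefficients(lambda x: x * x, degree=3)
pce_mean = coeffs[0]  # the zeroth coefficient is the mean of Y
pce_variance = sum(c * c * math.factorial(n) for n, c in enumerate(coeffs) if n > 0)
print(coeffs, pce_mean, pce_variance)
```

Because the polynomials are orthogonal under the Gaussian weight, the mean and variance of Y can be read off the coefficients without further sampling: here X² = He_0(X) + He_2(X), giving mean 1 and variance 2.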