|

Uniform Binary Search

Uniform binary search is an optimization of the classic binary search algorithm invented by Donald Knuth and given in Knuth's ''The Art of Computer Programming''. It uses a lookup table to update a single array index, rather than taking the midpoint of an upper and a lower bound on each iteration; therefore, it is optimized for architectures (such as Knuth's MIX) on which *a table lookup is generally faster than an addition and a shift, and *many searches will be performed on the same array, or on several arrays of the same length C implementation The uniform binary search algorithm looks like this, when implemented in C. #define LOG_N 4 static int delta OG_N void make_delta(int N) int unisearch(int *a, int key) /* Example of use: */ #define N 10 int main(void) { int a = {1, 3, 5, 6, 7, 9, 14, 15, 17, 19}; make_delta(N); for (int i = 0; i < 20; ++i) printf("%d is at index %d\n", i, unisearch(a, i)); return 0; } Refe ...

|

Binary Search

In computer science, binary search, also known as half-interval search, logarithmic search, or binary chop, is a search algorithm that finds the position of a target value within a sorted array. Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array. Binary search runs in logarithmic time in the worst case, making O(\log n) comparisons, where n is the number of elements in the array. Binary search is faster than linear search except for small arrays. However, the array must be sorted first to be able to apply binary search. There are specialized data structures designed for fast searching, such as hash tables, that can be searched ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

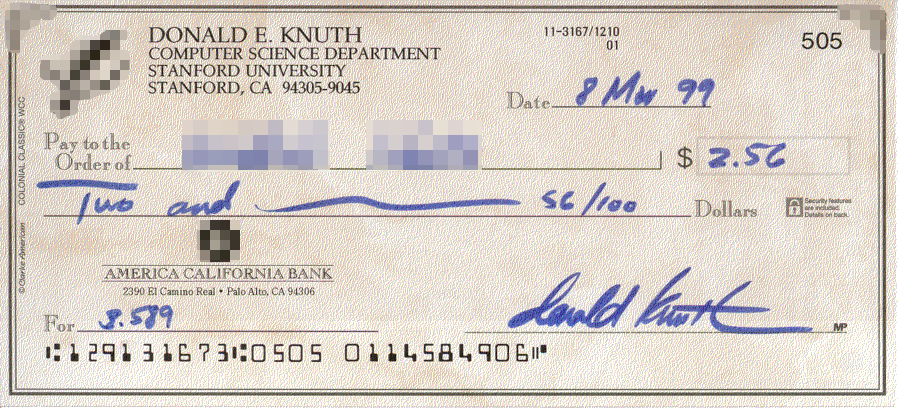

Donald Knuth

Donald Ervin Knuth ( ; born January 10, 1938) is an American computer scientist, mathematician, and professor emeritus at Stanford University. He is the 1974 recipient of the ACM Turing Award, informally considered the Nobel Prize of computer science. Knuth has been called the "father of the analysis of algorithms". He is the author of the multi-volume work '' The Art of Computer Programming'' and contributed to the development of the rigorous analysis of the computational complexity of algorithms and systematized formal mathematical techniques for it. In the process, he also popularized the asymptotic notation. In addition to fundamental contributions in several branches of theoretical computer science, Knuth is the creator of the TeX computer typesetting system, the related METAFONT font definition language and rendering system, and the Computer Modern family of typefaces. As a writer and scholar, Knuth created the WEB and CWEB computer programming systems designed to ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

The Art Of Computer Programming

''The Art of Computer Programming'' (''TAOCP'') is a comprehensive monograph written by the computer scientist Donald Knuth presenting programming algorithms and their analysis. Volumes 1–5 are intended to represent the central core of computer programming for sequential machines. When Knuth began the project in 1962, he originally conceived of it as a single book with twelve chapters. The first three volumes of what was then expected to be a seven-volume set were published in 1968, 1969, and 1973. Work began in earnest on Volume 4 in 1973, but was suspended in 1977 for work on typesetting prompted by the second edition of Volume 2. Writing of the final copy of Volume 4A began in longhand in 2001, and the first online pre-fascicle, 2A, appeared later in 2001. The first published installment of Volume 4 appeared in paperback as Fascicle 2 in 2005. The hardback Volume 4A, combining Volume 4, Fascicles 0–4, was published in 2011. Volume 4, Fascicle 6 ("Satisfiability") was r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lookup Table

In computer science, a lookup table (LUT) is an array that replaces runtime computation with a simpler array indexing operation. The process is termed as "direct addressing" and LUTs differ from hash tables in a way that, to retrieve a value v with key k, a hash table would store the value v in the slot h(k) where h is a hash function i.e. k is used to compute the slot, while in the case of LUT, the value v is stored in slot k, thus directly addressable. The savings in processing time can be significant, because retrieving a value from memory is often faster than carrying out an "expensive" computation or input/output operation. The tables may be precalculated and stored in static program storage, calculated (or "pre-fetched") as part of a program's initialization phase (memoization), or even stored in hardware in application-specific platforms. Lookup tables are also used extensively to validate input values by matching against a list of valid (or invalid) items in an array an ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Binary Search Algorithm

In computer science, binary search, also known as half-interval search, logarithmic search, or binary chop, is a search algorithm that finds the position of a target value within a sorted array. Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array. Binary search runs in logarithmic time in the worst case, making O(\log n) comparisons, where n is the number of elements in the array. Binary search is faster than linear search except for small arrays. However, the array must be sorted first to be able to apply binary search. There are specialized data structures designed for fast searching, such as hash tables, that can be searched m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

C (programming Language)

C (''pronounced like the letter c'') is a General-purpose language, general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie, and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B (programming language), B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the measuring programming language popularity, most widely used programming languages, with C compilers avail ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Pascal (programming Language)

Pascal is an imperative and procedural programming language, designed by Niklaus Wirth as a small, efficient language intended to encourage good programming practices using structured programming and data structuring. It is named in honour of the French mathematician, philosopher and physicist Blaise Pascal. Pascal was developed on the pattern of the ALGOL 60 language. Wirth was involved in the process to improve the language as part of the ALGOL X efforts and proposed a version named ALGOL W. This was not accepted, and the ALGOL X process bogged down. In 1968, Wirth decided to abandon the ALGOL X process and further improve ALGOL W, releasing this as Pascal in 1970. On top of ALGOL's scalars and arrays, Pascal enables defining complex datatypes and building dynamic and recursive data structures such as lists, trees and graphs. Pascal has strong typing on all objects, which means that one type of data cannot be converted to or interpreted as another without explicit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Go (programming Language)

Go is a statically typed, compiled programming language designed at Google by Robert Griesemer, Rob Pike, and Ken Thompson. It is syntactically similar to C, but with memory safety, garbage collection, structural typing, and CSP-style concurrency. It is often referred to as Golang because of its former domain name, golang.org, but its proper name is Go. There are two major implementations: * Google's self-hosting "gc" compiler toolchain, targeting multiple operating systems and WebAssembly. * gofrontend, a frontend to other compilers, with the ''libgo'' library. With GCC the combination is gccgo; with LLVM the combination is gollvm. A third-party source-to-source compiler, GopherJS, compiles Go to JavaScript for front-end web development. History Go was designed at Google in 2007 to improve programming productivity in an era of multicore, networked machines and large codebases. The designers wanted to address criticism of other languages in use at Google ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Search Algorithms

In computer science, a search algorithm is an algorithm designed to solve a search problem. Search algorithms work to retrieve information stored within particular data structure, or calculated in the search space of a problem domain, with either discrete or continuous values. algorithms are Although search engines use search algorithms, they belong to the study of information retrieval, not algorithmics. The appropriate search algorithm often depends on the data structure being searched, and may also include prior knowledge about the data. Search algorithms can be made faster or more efficient by specially constructed database structures, such as search trees, hash maps, and database indexes. Search algorithms can be classified based on their mechanism of searching into three types of algorithms: linear, binary, and hashing. Linear search algorithms check every record for the one associated with a target key in a linear fashion. Binary, or half-interval, searches repeated ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |