
Toom–Cook Multiplication

Toom–Cook, sometimes known as Toom-3, is a multiplication algorithm for large integers. It is named after Andrei Toom, who introduced the algorithm with its low complexity, and Stephen Cook, who cleaned up its description. Given two large integers ''a'' and ''b'', Toom–Cook splits each of them into ''k'' smaller parts of length ''l'' and performs operations on the parts. As ''k'' grows, one may combine many of the multiplication sub-operations, thus reducing the overall computational complexity of the algorithm. The multiplication sub-operations can then be computed recursively using Toom–Cook multiplication again, and so on. Although the terms "Toom-3" and "Toom–Cook" are sometimes incorrectly used interchangeably, Toom-3 is only a single instance of the Toom–Cook algorithm, where ''k'' = 3. Toom-3 reduces 9 multiplications to 5, and runs in $\Theta(n^{\log 5/\log 3}) \approx \Theta(n^{1.46})$. In general, Toom-''k'' runs in $\Theta(c(k)\,n^e)$, where $e = \log(2k-1)/\log k$, $n^e$ is the time spent on sub-mul ...
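
As a rough illustration of the ''k'' = 3 case, the Python sketch below splits each operand into three parts, evaluates both at five points, multiplies pointwise (five recursive products instead of nine), and interpolates. The function name `toom3`, the `threshold_bits` cutoff, and the evaluation points 0, 1, −1, 2 and ∞ are assumptions chosen for this example; tuned library implementations may pick different points and cutoffs.

```python
def toom3(a: int, b: int, threshold_bits: int = 64) -> int:
    """Multiply two integers with a simple Toom-3 scheme (illustrative sketch)."""
    # Work on magnitudes and restore the sign at the end,
    # because the evaluation at -1 can produce negative operands.
    negative = (a < 0) != (b < 0)
    a, b = abs(a), abs(b)

    # Small operands: fall back to the built-in product.
    # (threshold_bits is a hypothetical cutoff; keep it well above a few bits
    # so that every recursive call works on strictly shorter operands.)
    if a.bit_length() <= threshold_bits or b.bit_length() <= threshold_bits:
        return -(a * b) if negative else a * b

    # Split each operand into k = 3 parts of part_bits bits each.
    longest = max(a.bit_length(), b.bit_length())
    part_bits = -(-longest // 3)  # ceiling division
    mask = (1 << part_bits) - 1
    a0, a1, a2 = a & mask, (a >> part_bits) & mask, a >> (2 * part_bits)
    b0, b1, b2 = b & mask, (b >> part_bits) & mask, b >> (2 * part_bits)

    # Pointwise products at 0, 1, -1, 2 and infinity: 5 multiplications.
    r_0 = toom3(a0, b0, threshold_bits)
    r_1 = toom3(a0 + a1 + a2, b0 + b1 + b2, threshold_bits)
    r_m1 = toom3(a0 - a1 + a2, b0 - b1 + b2, threshold_bits)
    r_2 = toom3(a0 + 2 * a1 + 4 * a2, b0 + 2 * b1 + 4 * b2, threshold_bits)
    r_inf = toom3(a2, b2, threshold_bits)

    # Interpolate the product polynomial c0 + c1*x + ... + c4*x^4.
    # All divisions below are exact.
    c0 = r_0
    c4 = r_inf
    c2 = (r_1 + r_m1) // 2 - c0 - c4
    t = (r_1 - r_m1) // 2                     # equals c1 + c3
    u = (r_2 - c0 - 4 * c2 - 16 * c4) // 2    # equals c1 + 4*c3
    c3 = (u - t) // 3
    c1 = t - c3

    # Recompose: evaluate the product polynomial at x = 2**part_bits.
    result = (c0
              + (c1 << part_bits)
              + (c2 << (2 * part_bits))
              + (c3 << (3 * part_bits))
              + (c4 << (4 * part_bits)))
    return -result if negative else result
```

Each level issues five recursive products on operands roughly a third as long, which is where the exponent log(5)/log(3) comes from.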

Andrei Toom

Andrei Leonovich Toom (Russian: Андрей Леонович Тоом), also known as André Toom (born 1942 in Tashkent, Soviet Union; died 2022 in Queens, New York City), was a Russian mathematician known for the Toom–Cook algorithm and Toom's rule. He was a retired professor of the statistics department at the Federal University of Pernambuco in Brazil. Toom died of unknown causes at his apartment in Flushing, Queens, New York City. He was a student of Ilya Piatetski-Shapiro.

Stephen Cook

Stephen Arthur Cook (born December 14, 1939) is an American-Canadian computer scientist and mathematician who has made significant contributions to the fields of complexity theory and proof complexity. He is a university professor at the University of Toronto, in the Department of Computer Science and the Department of Mathematics. Cook received his bachelor's degree in 1961 from the University of Michigan, and his master's degree and PhD from the Mathematics Department of Harvard University in 1962 and 1966 respectively. He joined the mathematics department of the University of California, Berkeley, in 1966 as an assistant professor, and stayed there until 1970, when he was denied reappointment. In a speech celebrating the 30th anniversary of the Berkeley electrical engineering and computer sciences department, fellow Turing Award winner and Berkeley professor Richard Karp said that, "It is to our everlasting shame that we were unable to persuade the math department to give him t ...

Multiplication Algorithm

A multiplication algorithm is an algorithm (or method) to multiply two numbers. Depending on the size of the numbers, some algorithms are more efficient than others. Efficient multiplication algorithms have existed since the advent of the decimal system. If a positional numeral system is used, a natural way of multiplying numbers is taught in schools as long multiplication, sometimes called grade-school multiplication or the Standard Algorithm: multiply the multiplicand by each digit of the multiplier and then add up all the properly shifted results. It requires memorization of the multiplication table for single digits. This is the usual algorithm for multiplying larger numbers by hand in base 10. A person doing long multiplication on paper will write down all the products and then add them together; an abacus-user will sum the products as soon as each one is computed. This example uses ''long multiplication'' to multiply 23,958 ...
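
The long multiplication procedure described above can be sketched in Python as follows. The function name `long_multiply` and the use of decimal strings as input are assumptions made for this illustration; the sketch sums each partial product into the result as soon as it is computed, in the manner of the abacus user mentioned above.

```python
def long_multiply(x: str, y: str) -> str:
    """Multiply two non-negative decimal strings by the grade-school method."""
    # Store digits least significant first so that index == power of ten.
    a = [int(ch) for ch in reversed(x)]
    b = [int(ch) for ch in reversed(y)]
    result = [0] * (len(a) + len(b))

    for j, digit_b in enumerate(b):          # each digit of the multiplier
        carry = 0
        for i, digit_a in enumerate(a):      # times each digit of the multiplicand
            total = result[i + j] + digit_a * digit_b + carry
            result[i + j] = total % 10       # digit that stays in this position
            carry = total // 10              # carry into the next position
        result[j + len(a)] += carry          # final carry of this shifted row

    # Drop leading zeros (stored at the end of the list), keeping one digit.
    while len(result) > 1 and result[-1] == 0:
        result.pop()
    return "".join(str(d) for d in reversed(result))


print(long_multiply("123", "456"))  # 56088
```

For two ''n''-digit inputs the nested loops perform ''n''² single-digit products, which is the quadratic cost that faster algorithms improve on.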

Computational Complexity

In computer science, the computational complexity or simply complexity of an algorithm is the amount of resources required to run it. Particular focus is given to computation time (generally measured by the number of needed elementary operations) and memory storage requirements. The complexity of a problem is the complexity of the best algorithms that allow solving the problem. The study of the complexity of explicitly given algorithms is called analysis of algorithms, while the study of the complexity of problems is called computational complexity theory. Both areas are highly related, as the complexity of an algorithm is always an upper bound on the complexity of the problem solved by this algorithm. Moreover, for designing efficient algorithms, it is often fundamental to compare the complexity of a specific algorithm to the complexity of the problem to be solved. Also, in most cases, the only thing that is known about the complexity of a problem is that it is lower than the c ...

Karatsuba Algorithm

The Karatsuba algorithm is a fast multiplication algorithm. It was discovered by Anatoly Karatsuba in 1960 and published in 1962 (Knuth D.E. (1969) ''The Art of Computer Programming. v.2.'' Addison-Wesley Publ.Co., 724 pp.). It is a divide-and-conquer algorithm that reduces the multiplication of two ''n''-digit numbers to three multiplications of ''n''/2-digit numbers and, by repeating this reduction, to at most $n^{\log_2 3} \approx n^{1.58}$ single-digit multiplications. It is therefore asymptotically faster than the traditional algorithm, which performs $n^2$ single-digit products. For example, the traditional algorithm requires $(2^{10})^2 = 1{,}048{,}576$ single-digit multiplications to multiply two 1024-digit numbers ($n = 1024 = 2^{10}$), whereas the Karatsuba algorithm requires $3^{10} = 59{,}049$, and is thus about 17.758 times faster. The Karatsuba algorithm was the first multiplication algorithm asymptotically faster than the quadratic "grade school" algorithm. The Toom–Cook algorithm (1963) is a fas ...
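
A minimal Python sketch of the reduction to three half-size multiplications is given below. The function name `karatsuba` and the decimal splitting are assumptions chosen for readability; the sketch handles non-negative integers only and is not tuned for speed.

```python
def karatsuba(x: int, y: int) -> int:
    """Multiply two non-negative integers using three recursive products."""
    # Single-digit operand: use an ordinary product.
    if x < 10 or y < 10:
        return x * y

    # Split both numbers around the middle digit of the longer one.
    half = max(len(str(x)), len(str(y))) // 2
    base = 10 ** half
    x_hi, x_lo = divmod(x, base)
    y_hi, y_lo = divmod(y, base)

    # Three multiplications instead of four.
    z0 = karatsuba(x_lo, y_lo)
    z2 = karatsuba(x_hi, y_hi)
    # (x_lo + x_hi)(y_lo + y_hi) - z0 - z2 equals x_lo*y_hi + x_hi*y_lo.
    z1 = karatsuba(x_lo + x_hi, y_lo + y_hi) - z0 - z2

    return z2 * base ** 2 + z1 * base + z0


print(karatsuba(1234, 5678))  # 7006652
```

The ratio quoted above can be checked directly: `(2**10)**2 / 3**10` evaluates to roughly 17.758.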

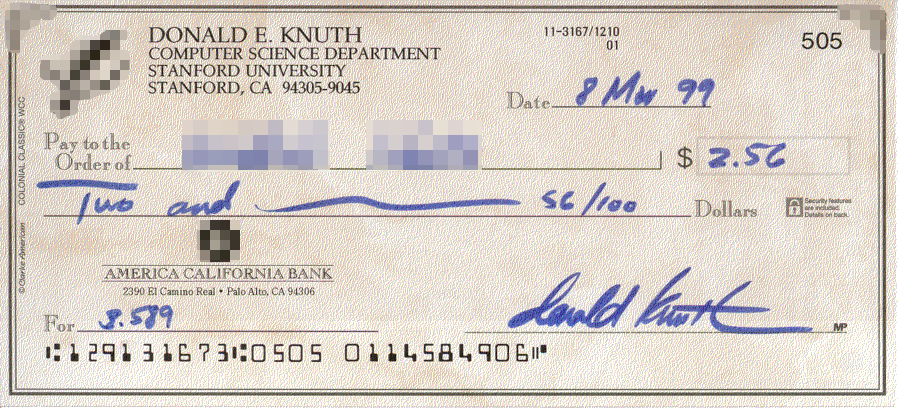

Donald Knuth

Donald Ervin Knuth (born January 10, 1938) is an American computer scientist, mathematician, and professor emeritus at Stanford University. He is the 1974 recipient of the ACM Turing Award, informally considered the Nobel Prize of computer science. Knuth has been called the "father of the analysis of algorithms". He is the author of the multi-volume work ''The Art of Computer Programming'' and contributed to the development of the rigorous analysis of the computational complexity of algorithms and systematized formal mathematical techniques for it. In the process, he also popularized the asymptotic notation. In addition to fundamental contributions in several branches of theoretical computer science, Knuth is the creator of the TeX computer typesetting system, the related METAFONT font definition language and rendering system, and the Computer Modern family of typefaces. As a writer and scholar, Knuth created the WEB and CWEB computer programming systems designed to encou ...

Schönhage–Strassen Algorithm

The Schönhage–Strassen algorithm is an asymptotically fast multiplication algorithm for large integers. It was developed by Arnold Schönhage and Volker Strassen in 1971 (A. Schönhage and V. Strassen, Schnelle Multiplikation großer Zahlen, ''Computing'' 7 (1971), pp. 281–292). The run-time bit complexity is, in big O notation, $O(n \cdot \log n \cdot \log \log n)$ for two ''n''-digit numbers. The algorithm uses recursive fast Fourier transforms in rings with $2^n + 1$ elements, a specific type of number theoretic transform. The Schönhage–Strassen algorithm was the asymptotically fastest multiplication method known from 1971 until 2007, when a new method, Fürer's algorithm, was announced with lower asymptotic complexity, and is used in the Basic Polynomial Algebra Subprograms (BPAS) open source library. The algorithm does not, however, adapt to polynomials over finite fields. The current best multiplication algorithm in terms of asymptotic complexity is by David Harvey and Jor ...

Positional Notation

Positional notation (or place-value notation, or positional numeral system) usually denotes the extension to any base of the Hindu–Arabic numeral system (or decimal system). More generally, a positional system is a numeral system in which the contribution of a digit to the value of a number is the value of the digit multiplied by a factor determined by the position of the digit. In early numeral systems, such as Roman numerals, a digit has only one value: I means one, X means ten and C a hundred (however, the value may be negated if placed before another digit). In modern positional systems, such as the decimal system, the position of the digit means that its value must be multiplied by some value: in 555, the three identical symbols represent five hundreds, five tens, and five units, respectively, due to their different positions in the digit string. The Babylonian numeral system, base 60, was the first positional system to be developed, and its influence is present today ...
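
The rule that each digit contributes its value times a position-dependent factor can be illustrated with a short Python sketch. The helper names `from_digits` and `to_digits` are hypothetical and introduced only for this example; digits are given most significant first.

```python
def from_digits(digits: list[int], base: int) -> int:
    """Return the value of a digit string written in the given base."""
    value = 0
    for digit in digits:
        # Shifting one position to the left multiplies everything so far by the base.
        value = value * base + digit
    return value


def to_digits(value: int, base: int) -> list[int]:
    """Return the digits of a non-negative integer in the given base."""
    if value == 0:
        return [0]
    digits = []
    while value > 0:
        value, digit = divmod(value, base)
        digits.append(digit)     # least significant digit first
    return digits[::-1]          # reverse to most significant first


print(from_digits([5, 5, 5], 10))  # 555 = 5*100 + 5*10 + 5*1
print(to_digits(555, 60))          # [9, 15], since 555 = 9*60 + 15
```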

Gaussian Elimination

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855), although some special cases of the method, albeit presented without proof, were known to Chinese mathematicians as early as circa 179 AD. To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations:

* Swapping two rows,
* Multiplying a row by a nonzero number,
* Adding a multiple of one row to another row. (subtraction can be achieved by multiplying one row with -1 and adding ...
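
The three elementary row operations can be combined into a small row-reduction routine. The sketch below is one possible arrangement, not a canonical implementation: the function name `row_echelon` is hypothetical, and `fractions.Fraction` is used as an assumption so that the arithmetic stays exact.

```python
from fractions import Fraction


def row_echelon(rows):
    """Reduce a matrix (list of rows) to row echelon form with leading 1s."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_row = 0

    for col in range(n_cols):
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue  # nothing to eliminate in this column

        # Operation 1: swap two rows.
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]

        # Operation 2: multiply a row by a nonzero number (scale the pivot to 1).
        scale = m[pivot_row][col]
        m[pivot_row] = [x / scale for x in m[pivot_row]]

        # Operation 3: add a multiple of one row to another (clear entries below).
        for r in range(pivot_row + 1, n_rows):
            factor = m[r][col]
            m[r] = [x - factor * p for x, p in zip(m[r], m[pivot_row])]

        pivot_row += 1
        if pivot_row == n_rows:
            break
    return m


# Augmented matrix of: 2x + y - z = 8, -3x - y + 2z = -11, -2x + y + 2z = -3
for row in row_echelon([[2, 1, -1, 8], [-3, -1, 2, -11], [-2, 1, 2, -3]]):
    print([str(x) for x in row])
```

The number of nonzero rows in the result gives the rank of the matrix; back-substitution on the echelon form then yields the solution of the system.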

Vandermonde Matrix

In linear algebra, a Vandermonde matrix, named after Alexandre-Théophile Vandermonde, is a matrix with the terms of a geometric progression in each row: an $m \times n$ matrix

$$V = \begin{bmatrix} 1 & x_1 & x_1^2 & \dots & x_1^{n-1} \\ 1 & x_2 & x_2^2 & \dots & x_2^{n-1} \\ 1 & x_3 & x_3^2 & \dots & x_3^{n-1} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & x_m & x_m^2 & \dots & x_m^{n-1} \end{bmatrix},$$

or $V_{i,j} = x_i^{j-1}$ for all indices $i$ and $j$. Some authors define the Vandermonde matrix as the transpose of the above matrix. The determinant of a square Vandermonde matrix is called a ''Vandermonde polynomial'' or ''Vandermonde determinant''. Its value is the polynomial

$$\det(V) = \prod_{1 \le i < j \le n} (x_j - x_i),$$

which is non-zero if and only if all $x_i$ are distinct. The Vandermonde determinant was sometimes called the ''discriminant'', although, presently, the discriminant of a polynomial is the square of the Vandermonde determinant of the roots of the polynomial. The Vandermonde determinant is an alternating form in the $x_i$, meaning that exchang ...
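
The product formula for the determinant can be checked numerically on small cases. In the Python sketch below, the helper names `vandermonde`, `det` and `vandermonde_det` are hypothetical, and the determinant is computed by plain cofactor expansion, which is only practical for small matrices.

```python
from itertools import combinations
from math import prod


def vandermonde(xs, n=None):
    """Build the matrix with rows 1, x, x^2, ..., x^(n-1), one row per x in xs."""
    n = len(xs) if n is None else n
    return [[x ** j for j in range(n)] for x in xs]


def det(matrix):
    """Determinant by cofactor expansion along the first row (small inputs only)."""
    if len(matrix) == 1:
        return matrix[0][0]
    total = 0
    for col, entry in enumerate(matrix[0]):
        # Minor: delete the first row and the current column.
        minor = [row[:col] + row[col + 1:] for row in matrix[1:]]
        total += (-1) ** col * entry * det(minor)
    return total


def vandermonde_det(xs):
    """Product of (x_j - x_i) over all index pairs i < j."""
    return prod(xs[j] - xs[i] for i, j in combinations(range(len(xs)), 2))


xs = [1, 2, 3, 4]
print(det(vandermonde(xs)), vandermonde_det(xs))  # 12 12
```

With a repeated node, for example `[1, 2, 2]`, both functions return 0, matching the statement that the determinant is non-zero only when all nodes are distinct.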
