
Tokenization of minorities


Tokenization may refer to:

* Tokenization (lexical analysis) in language processing
* Tokenization (data security) in the field of data security
* Word segmentation
* Tokenism

Tokenism is the practice of making only a perfunctory or symbolic effort to be inclusive to members of minority groups, especially by recruiting people from underrepresented groups in order to give the appearance of racial or gender equality wit ...

Tokenization (data security)

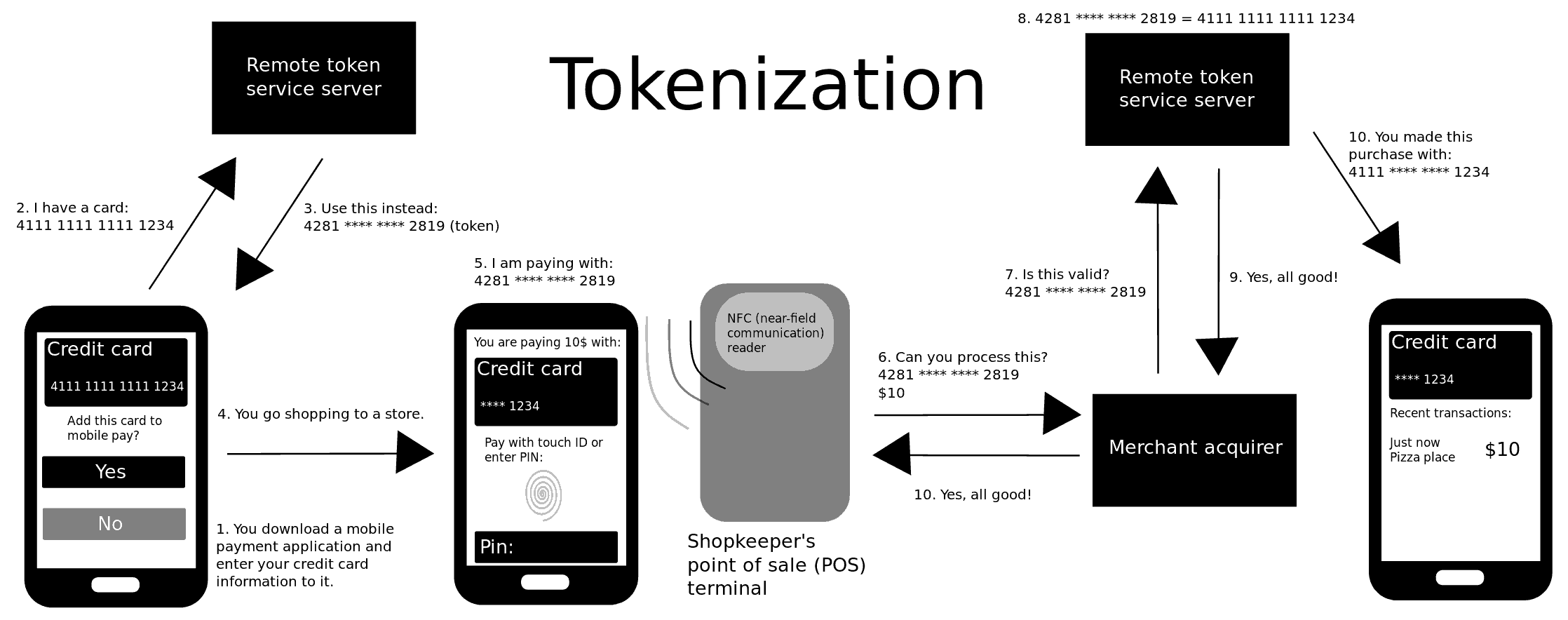

Tokenization, when applied to data security, is the process of substituting a sensitive data element with a non-sensitive equivalent, referred to as a token, that has no intrinsic or exploitable meaning or value. The token is a reference (i.e. an identifier) that maps back to the sensitive data through a tokenization system. The mapping from original data to a token uses methods that make tokens infeasible to reverse without the tokenization system, for example by creating tokens from random numbers. A one-way cryptographic function is used to convert the original data into tokens, making it difficult to recreate the original data without access to the tokenization system's resources. To deliver such services, the system maintains a vault database of tokens that are linked to the corresponding sensitive data. Protecting the system vault is vital to the system, and processes must be put in place to ensure database integrity and physical security.
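The vault mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the class name `TokenVault`, its methods, and the in-memory dictionaries are all hypothetical, and a real vault would be a hardened, access-controlled database. Tokens here are drawn from random numbers, so they carry no information about the original value.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to sensitive values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so each value maps to exactly one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it cannot be reversed without the vault.
        token = secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on the rare collision
            token = secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only a party with access to the vault can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111111111111111"  # illustrative test value, not real data
token = vault.tokenize(card_number)
original = vault.detokenize(token)
```

Because the token is looked up rather than computed from the data, everything depends on protecting the vault itself, which is why the text stresses its integrity and physical security.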

Tokenization (lexical analysis)

In computer science, lexical analysis, lexing or tokenization is the process of converting a sequence of characters (such as in a computer program or web page) into a sequence of ''lexical tokens'' (strings with an assigned and thus identified meaning). A program that performs lexical analysis may be termed a ''lexer'', ''tokenizer'', or ''scanner'', although ''scanner'' is also a term for the first stage of a lexer. A lexer is generally combined with a parser, which together analyze the syntax of programming languages, web pages, and so forth.

Applications

A lexer forms the first phase of a compiler frontend in modern processing. Analysis generally occurs in one pass. In older languages such as ALGOL, the initial stage was instead line reconstruction, which performed unstropping and removed whitespace and comments (and had scannerless parsers, with no separate lexer). These steps are now done as part of the lexer. Lexers and parsers are most often used for compilers, but ...
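As a rough illustration of converting characters into lexical tokens, the sketch below builds a tiny single-pass lexer from regular expressions. The token kinds (`NUMBER`, `IDENT`, `OP`) and the toy grammar are assumptions chosen for the example; they do not correspond to any particular language. Whitespace is matched and dropped inside the lexer, in line with the note above that such steps are now part of lexing.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str  # the assigned meaning, e.g. "NUMBER"
    text: str  # the matched characters


# Hypothetical token kinds for a toy expression language.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),  # whitespace is recognized but not emitted
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> Iterator[Token]:
    """Scan the source once, left to right, yielding one Token per lexeme."""
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        pos = match.end()
        if match.lastgroup != "SKIP":
            yield Token(match.lastgroup, match.group())


tokens = list(tokenize("x = 42 + y"))
# tokens holds IDENT 'x', OP '=', NUMBER '42', OP '+', IDENT 'y'
```

A parser would then consume this token stream rather than raw characters, which is the division of labor between lexer and parser described above.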

Word Segmentation

Text segmentation is the process of dividing written text into meaningful units, such as words, sentences, or topics. The term applies both to mental processes used by humans when reading text, and to artificial processes implemented in computers, which are the subject of natural language processing. The problem is non-trivial, because while some written languages have explicit word boundary markers, such as the word spaces of written English and the distinctive initial, medial and final letter shapes of Arabic, such signals are sometimes ambiguous and not present in all written languages. Compare speech segmentation, the process of dividing speech into linguistically meaningful portions.

Segmentation problems

Word segmentation

Word segmentation is the problem of dividing a string of written language into its component words. In English and many other languages using some form of the Latin alphabet, the space is a good approximation of a word divider (word delimiter), al ...
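The following sketch shows why the space is only an approximation of a word divider. The function names are hypothetical, and the regular-expression variant is just one simple refinement, not a complete segmenter: splitting on spaces leaves punctuation attached to words, and it finds no boundaries at all in text written without word spaces.

```python
import re
from typing import List


def split_on_spaces(text: str) -> List[str]:
    """Treat runs of whitespace as the only word boundary markers."""
    return text.split()


def split_on_word_characters(text: str) -> List[str]:
    """Keep runs of letters, digits, and underscores; drop punctuation."""
    return re.findall(r"\w+", text)


english = "Hello, world!"
by_spaces = split_on_spaces(english)            # ['Hello,', 'world!']
by_pattern = split_on_word_characters(english)  # ['Hello', 'world']

# Text with no word spaces comes back as a single unsegmented unit.
unspaced = split_on_spaces("我爱北京")           # ['我爱北京']
```

Segmenting text of the last kind requires knowledge beyond boundary markers, such as a lexicon or a statistical model, which is where the problem becomes non-trivial.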