
Theil Index

The Theil index is a statistic primarily used to measure economic inequality and other economic phenomena, though it has also been used to measure racial segregation. The Theil index $T_T$ is the same as redundancy in information theory, which is the maximum possible entropy of the data minus the observed entropy. It is a special case of the generalized entropy index. It can be viewed as a measure of redundancy, lack of diversity, isolation, segregation, inequality, non-randomness, and compressibility. It was proposed by the Dutch econometrician Henri Theil (1924–2000) at the Erasmus University Rotterdam. Henri Theil himself said (1967): "The (Theil) index can be interpreted as the expected information content of the indirect message which transforms the population shares as prior probabilities into the income shares as posterior probabilities." Amartya Sen noted, "But the fact remains that the Theil index is an arbitrary formula, and the average of the logarithms of the reciproca ...
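
The two readings of the index can be checked against each other. As a sketch, assuming incomes arrive as a plain list of non-negative numbers with a positive mean $\mu$, the index is $T_T = \frac{1}{N}\sum_{i=1}^{N} \frac{x_i}{\mu}\ln\frac{x_i}{\mu}$, and the same number equals the redundancy $\ln N$ (the maximum entropy of $N$ shares, in nats) minus the Shannon entropy of the income shares. The function names are hypothetical.

```python
import math

def theil_t(incomes):
    """Theil T index: (1/N) * sum over i of (x_i/mu) * ln(x_i/mu).

    Zero incomes contribute 0, using the limit x*ln(x) -> 0 as x -> 0.
    """
    n = len(incomes)
    mu = sum(incomes) / n
    total = 0.0
    for x in incomes:
        if x > 0:
            r = x / mu
            total += r * math.log(r)
    return total / n

def theil_t_as_redundancy(incomes):
    """The same index read as redundancy: maximum entropy ln(N) minus the
    Shannon entropy (in nats) of the income shares s_i = x_i / sum(x)."""
    n = len(incomes)
    total_income = sum(incomes)
    shares = [x / total_income for x in incomes if x > 0]
    entropy = -sum(s * math.log(s) for s in shares)
    return math.log(n) - entropy

print(theil_t([1, 1, 1, 1]))    # 0.0: perfect equality
print(theil_t([0, 0, 0, 10]))   # equals ln 4: one case holds all income
```

The index ranges from 0 (everyone has the mean income) up to $\ln N$ (one case holds everything), which is exactly the redundancy interpretation: equal shares have maximal entropy and zero redundancy.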

Economic Inequality

There are many varieties of economic inequality, most notably income inequality, measured using the distribution of income (the amount of money people are paid), and wealth inequality, measured using the distribution of wealth (the amount of wealth people own). Besides economic inequality between countries or states, there are important types of economic inequality between different groups of people. Important economic measurements focus on wealth, income, and consumption. There are many methods for measuring economic inequality, the Gini coefficient being a widely used one. Another is the Inequality-adjusted Human Development Index, a composite statistic that takes inequality into account. Important concepts of equality include equity, equality of outcome, and equality of opportunity. Whereas globalization has reduced global inequality (between nations), it has increased inequality within nations. Income inequality between nat ...
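
The Gini coefficient can be sketched from its mean-absolute-difference form, $G = \frac{\sum_{i}\sum_{j} |x_i - x_j|}{2N^2\mu}$, assuming a list of non-negative incomes with positive mean $\mu$. The $O(N^2)$ double loop is chosen for readability rather than speed, and the function name is hypothetical.

```python
def gini(incomes):
    """Gini coefficient: sum of |x_i - x_j| over all ordered pairs, divided by 2 * n^2 * mean."""
    n = len(incomes)
    mean = sum(incomes) / n
    abs_diff_total = sum(abs(xi - xj) for xi in incomes for xj in incomes)
    return abs_diff_total / (2 * n * n * mean)

print(gini([3, 3, 3]))       # 0.0: everyone earns the same
print(gini([0, 0, 0, 10]))   # 0.75, i.e. (n - 1) / n when one case holds all income
```

Sorting the incomes first allows an $O(N \log N)$ formula, but the pairwise version mirrors the definition most directly.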

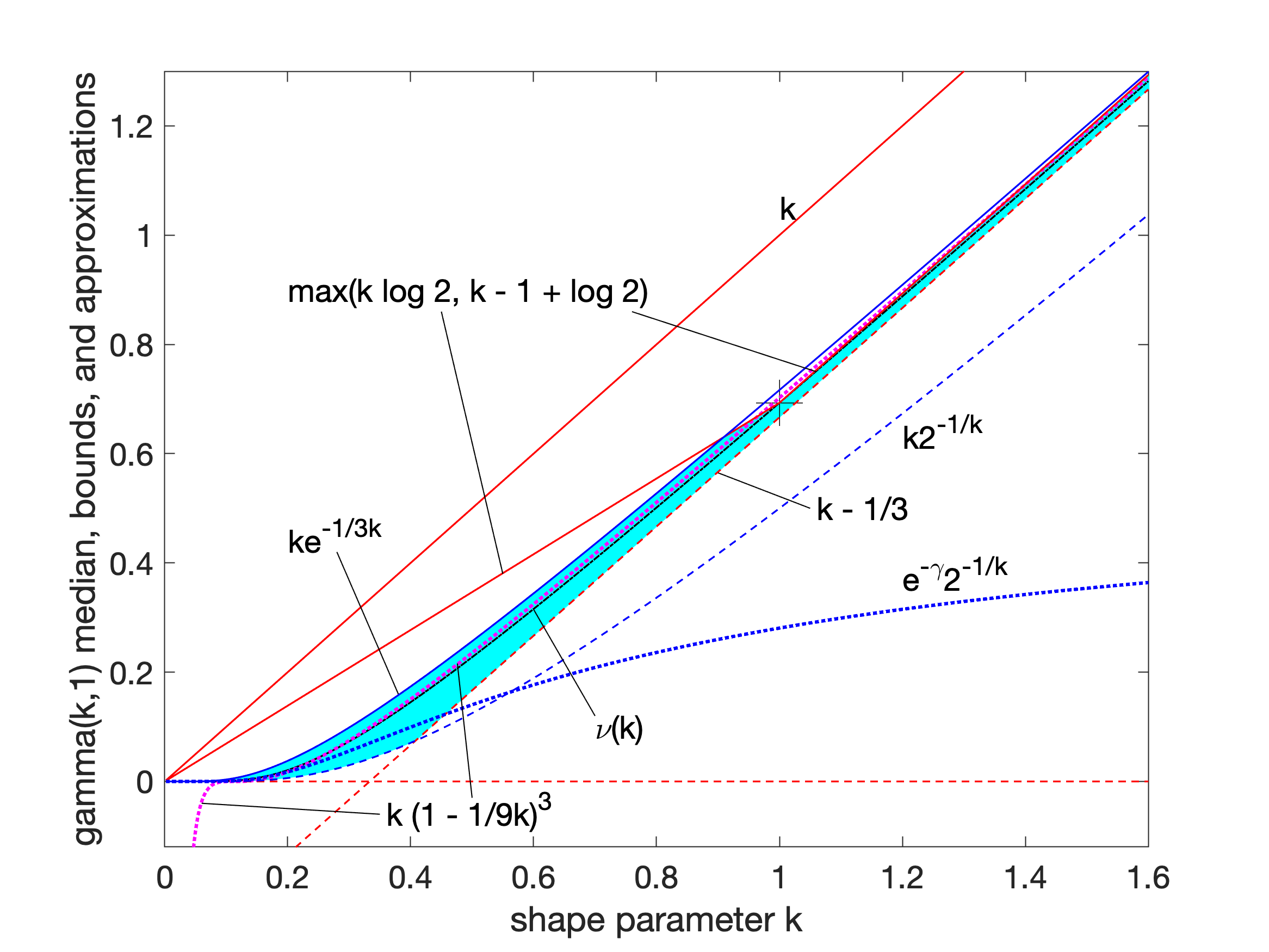

Gamma Distribution

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: (1) with a shape parameter $k$ and a scale parameter $\theta$; (2) with a shape parameter $\alpha = k$ and an inverse scale parameter $\beta = 1/\theta$, called a rate parameter. In each of these forms, both parameters are positive real numbers. The gamma distribution is the maximum entropy probability distribution (both with respect to a uniform base measure and a $1/x$ base measure) for a random variable $X$ for which $\mathbb{E}[X] = k\theta = \alpha/\beta$ is fixed and greater than zero, and $\mathbb{E}[\ln X] = \psi(k) + \ln\theta = \psi(\alpha) - \ln\beta$ is fixed ($\psi$ is the digamma function). The parameterization with $k$ and $\theta$ appears to be more common ...
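
A minimal sketch of the density in both parameterizations, assuming the standard forms $f(x; k, \theta) = \frac{x^{k-1}e^{-x/\theta}}{\Gamma(k)\,\theta^k}$ and $f(x; \alpha, \beta) = \frac{\beta^\alpha x^{\alpha-1}e^{-\beta x}}{\Gamma(\alpha)}$ (these are not spelled out in the text above). It shows that the two agree when $\beta = 1/\theta$ and checks $\mathbb{E}[X] = k\theta$ by midpoint-rule integration. The function names and the integration cutoff are illustrative choices.

```python
import math

def gamma_pdf_shape_scale(x, k, theta):
    """Density with shape k and scale theta: x^(k-1) e^(-x/theta) / (Gamma(k) theta^k)."""
    if x <= 0:
        return 0.0
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)

def gamma_pdf_shape_rate(x, alpha, beta):
    """Density with shape alpha and rate beta: beta^alpha x^(alpha-1) e^(-beta x) / Gamma(alpha)."""
    if x <= 0:
        return 0.0
    return beta ** alpha * x ** (alpha - 1) * math.exp(-beta * x) / math.gamma(alpha)

def numeric_mean(pdf, upper=200.0, steps=200_000):
    """Approximate E[X] = integral of x * pdf(x) over (0, upper) with the midpoint rule.

    The cutoff `upper` is an assumption: it must sit far out in the right tail.
    """
    h = upper / steps
    return h * sum((i + 0.5) * h * pdf((i + 0.5) * h) for i in range(steps))

k, theta = 3.0, 2.0
# The two parameterizations describe the same density when alpha = k and beta = 1/theta.
print(gamma_pdf_shape_scale(1.7, k, theta), gamma_pdf_shape_rate(1.7, k, 1 / theta))
# The mean should come out close to k * theta = 6.
print(numeric_mean(lambda x: gamma_pdf_shape_scale(x, k, theta)))
```

When sampling with Python's standard library, note that `random.gammavariate(alpha, beta)` uses the shape/scale form despite naming its second argument `beta`, so it expects $\theta$, not the rate.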

Price Signal

A price signal is information conveyed to consumers and producers, via the prices offered or requested and the quantities requested or offered of a product or service, that signals an increase or decrease in quantity supplied or quantity demanded. It also points to potential business opportunities. When a certain kind of product is in short supply and its price rises, people pay more attention to it and produce more of it. The information carried by prices is an essential function in the fundamental coordination of an economic system, coordinating things such as what has to be produced, how to produce it and what resources to use in its production. In mainstream (neoclassical) economics, under perfect competition, relative prices signal to producers and consumers which production or consumption decisions will contribute to allocative efficiency. According to Friedrich Hayek, in a system in which the knowledge of the relevant facts is dispersed among ma ...

Shannon Index

A diversity index is a quantitative measure that reflects how many different types (such as species) there are in a dataset (a community), and that can simultaneously take into account the phylogenetic relations among the individuals distributed among those types, such as richness, divergence or evenness. These indices are statistical representations of biodiversity in different aspects (richness, evenness, and dominance). When diversity indices are used in ecology, the types of interest are usually species, but they can also be other categories, such as genera, families, functional types, or haplotypes. The entities of interest are usually individual plants or animals, and the measure of abundance can be, for example, number of individuals, biomass or coverage. In demography, the entities of interest can be people, and the types of interest various demographic groups. In information science, the entities can be charac ...
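
For the Shannon index itself, $H' = -\sum_i p_i \ln p_i$, where $p_i$ is the proportion of individuals belonging to type $i$; its effective-number form is $\exp(H')$. The same idea generalizes to Hill numbers $^{q}D = \left(\sum_i p_i^{q}\right)^{1/(1-q)}$: $q = 0$ gives richness, the limit $q \to 1$ gives $\exp(H')$, and $q = 2$ gives the inverse Simpson index. A sketch, assuming abundances are raw counts; the function names and the example community are hypothetical.

```python
import math

def proportions(counts):
    """Relative abundances p_i of the types actually present (zero counts dropped)."""
    total = sum(counts)
    return [c / total for c in counts if c > 0]

def shannon_index(counts):
    """Shannon index H' = -sum p_i ln p_i, in nats."""
    return -sum(p * math.log(p) for p in proportions(counts))

def hill_number(counts, q):
    """Effective number of types of order q; q = 1 uses the limit exp(H')."""
    ps = proportions(counts)
    if q == 1:
        return math.exp(shannon_index(counts))
    return sum(p ** q for p in ps) ** (1 / (1 - q))

community = [50, 30, 15, 5]   # hypothetical counts of four species
print(shannon_index(community))
print([hill_number(community, q) for q in (0, 1, 2)])
```

Hill numbers never increase with $q$, because higher orders weight common types more heavily; a perfectly even community gives the same value (its richness) for every $q$.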

e (Mathematical Constant)

The number $e$, also known as Euler's number, is a mathematical constant approximately equal to 2.71828 that can be characterized in many ways. It is the base of the natural logarithms. It is the limit of $(1 + 1/n)^n$ as $n$ approaches infinity, an expression that arises in the study of compound interest. It can also be calculated as the sum of the infinite series $e = \sum_{n=0}^{\infty} \frac{1}{n!} = 1 + \frac{1}{1} + \frac{1}{1 \cdot 2} + \frac{1}{1 \cdot 2 \cdot 3} + \cdots$. It is also the unique positive number $a$ such that the graph of the function $y = a^x$ has a slope of 1 at $x = 0$. The (natural) exponential function $f(x) = e^x$ is the unique function $f$ that equals its own derivative and satisfies the equation $f(0) = 1$; hence one can also define $e$ as $f(1)$. The natural logarithm, or logarithm to base $e$, is the inverse function to the natural exponential function. The natural logarithm of a number $k > 1$ can be defined directly as the area under the curve $y = 1/x$ between $x = 1$ and $x = k$, in which case $e$ is the value of $k$ for which this area equals one. There are various other character ...
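
Two of these characterizations translate directly into code: the compound-interest limit $(1 + 1/n)^n$, which converges slowly (its error shrinks roughly like $e/(2n)$), and the factorial series, which converges very quickly. A sketch with hypothetical function names; each series term is derived from the previous one so no factorial is ever computed explicitly.

```python
import math

def e_by_limit(n):
    """(1 + 1/n)**n, which approaches e from below as n grows."""
    return (1 + 1 / n) ** n

def e_by_series(terms=20):
    """Partial sum of sum_{k>=0} 1/k!."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term      # add 1/k!
        term /= k + 1      # turn 1/k! into 1/(k+1)!
    return total

for n in (10, 1_000, 1_000_000):
    print(n, e_by_limit(n), abs(e_by_limit(n) - math.e))
print(e_by_series(), math.e)
```

Twenty series terms already exhaust double precision, while the limit still differs from $e$ in the sixth decimal place at $n = 10^6$.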

Natural Logarithm

The natural logarithm of a number is its logarithm to the base of the mathematical constant $e$, which is an irrational and transcendental number approximately equal to 2.718281828459. The natural logarithm of $x$ is generally written as $\ln x$, $\log_e x$, or sometimes, if the base $e$ is implicit, simply $\log x$. Parentheses are sometimes added for clarity, giving $\ln(x)$, $\log_e(x)$, or $\log(x)$. This is done particularly when the argument to the logarithm is not a single symbol, so as to prevent ambiguity. The natural logarithm of $x$ is the power to which $e$ would have to be raised to equal $x$. For example, $\ln 7.5$ is $2.0149\ldots$, because $e^{2.0149\ldots} = 7.5$. The natural logarithm of $e$ itself, $\ln e$, is 1, because $e^1 = e$, while the natural logarithm of 1 is 0, since $e^0 = 1$. The natural logarithm can be defined for any positive real number $a$ as the area under the curve $y = 1/x$ from 1 to $a$ (with the area being negative when $0 < a < 1$). The simplicity of this definition, which is matched in many other formulas involving the natural logarithm, leads to the term "natural". The definition of the natural logarithm can the ...
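
The area definition can be checked numerically. This is a midpoint-rule sketch of the signed integral $\int_1^a \frac{dx}{x}$, which turns negative for $0 < a < 1$, compared against Python's `math.log`. The function name and step count are illustrative assumptions; this is not how `math.log` is actually computed.

```python
import math

def ln_by_area(a, steps=100_000):
    """Signed area under y = 1/x from 1 to a, approximated with the midpoint rule.

    For 0 < a < 1 the step h is negative, so the result is negative, matching
    the definition of ln a as an oriented area.
    """
    if a <= 0:
        raise ValueError("ln is defined here only for positive a")
    h = (a - 1) / steps
    return h * sum(1 / (1 + (i + 0.5) * h) for i in range(steps))

for a in (0.5, 1.0, math.e, 7.5):
    print(a, ln_by_area(a), math.log(a))
```

The value at $a = e$ comes out as 1 (to within the integration error), which is the area characterization of $e$.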

Binary Logarithm

In mathematics, the binary logarithm ($\log_2 n$) is the power to which the number 2 must be raised to obtain the value $n$. That is, for any real number $x$, $x = \log_2 n \iff 2^x = n$. For example, the binary logarithm of 1 is 0, the binary logarithm of 2 is 1, the binary logarithm of 4 is 2, and the binary logarithm of 32 is 5. The binary logarithm is the logarithm to the base 2 and is the inverse function of the power of two function. As well as $\log_2$, an alternative notation for the binary logarithm is $\operatorname{lb}$ (the notation preferred by ISO 31-11 and ISO 80000-2). Historically, the first application of binary logarithms was in music theory, by Leonhard Euler: the binary logarithm of a frequency ratio of two musical tones gives the number of octaves by which the tones differ. Binary logarithms can be used to calculate the length of the representation of a number in the binary numeral system, or the number of bits needed to encode a message in information theory. In compute ...
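
Both applications fit in a few lines, assuming positive integers for the bit count and positive frequencies for the octave count. For $n \ge 1$ the binary representation of $n$ has $\lfloor \log_2 n \rfloor + 1$ digits, which Python's `int.bit_length()` returns exactly, and $\log_2(f_1/f_2)$ counts octaves between two tones. The helper names are hypothetical.

```python
import math

def bits_needed(n):
    """Digits in the binary representation of a positive integer: floor(log2 n) + 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n.bit_length()  # exact integer arithmetic, no floating-point rounding

def octaves_between(f_high, f_low):
    """Number of octaves separating two tones: log2 of their frequency ratio."""
    return math.log2(f_high / f_low)

print(bits_needed(255), bits_needed(256))   # 8 9
print(octaves_between(880.0, 220.0))        # 2.0
```

`bit_length()` is preferred over `math.floor(math.log2(n)) + 1` because the floating-point version can round the wrong way for very large integers just below a power of two.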

Logarithmic Function

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a number $x$ to the base $b$ is the exponent to which $b$ must be raised to produce $x$. For example, since $1000 = 10^3$, the logarithm base 10 of 1000 is 3, or $\log_{10}(1000) = 3$. The logarithm of $x$ to base $b$ is denoted as $\log_b(x)$, or without parentheses, $\log_b x$, or even without the explicit base, $\log x$, when no confusion is possible, or when the base does not matter such as in big O notation. The logarithm base 10 is called the decimal or common logarithm and is commonly used in science and engineering. The natural logarithm has the number $e \approx 2.718$ as its base; its use is widespread in mathematics and physics because of its very simple derivative. The binary logarithm uses base 2 and is frequently used in computer science. Logarithms were introduced by John Napier in 1614 as a means of simplifying calculations. They were rapidly adopted by navigators, scientists, engineers, surveyors and others to perform high-accu ...
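
Because $b^{\log_b x} = x$, any base can be reached from the natural logarithm through the change-of-base identity $\log_b x = \ln x / \ln b$. A short sketch with a hypothetical helper name; floating-point rounding means the computed value for $\log_{10} 1000$ may land a hair away from 3, which is one reason Python also provides a dedicated `math.log10`.

```python
import math

def log_base(x, b):
    """log_b(x) via change of base: ln(x) / ln(b). Requires x > 0, b > 0, b != 1."""
    return math.log(x) / math.log(b)

print(log_base(1000, 10))    # close to 3, but possibly not exactly 3.0
print(math.log10(1000))      # dedicated base-10 routine
print(2 ** log_base(32, 2))  # inverting the logarithm recovers (approximately) 32
```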

Boltzmann Constant

The Boltzmann constant ($k_\mathrm{B}$ or $k$) is the proportionality factor that relates the average relative kinetic energy of particles in a gas with the thermodynamic temperature of the gas. It occurs in the definitions of the kelvin and the gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors. The Boltzmann constant has dimensions of energy divided by temperature, the same as entropy. It is named after the Austrian scientist Ludwig Boltzmann. As part of the 2019 redefinition of SI base units, the Boltzmann constant is one of the seven "defining constants" that have been given exact definitions. They are used in various combinations to define the seven SI base units. The Boltzmann constant is defined to be exactly $1.380649 \times 10^{-23}\ \mathrm{J \cdot K^{-1}}$. Macroscopically, the ideal gas law states that, for an ideal gas, the product of pressure and volume is proportional to the product of ...
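
A sketch of a few of the constant's roles, using the exact 2019 SI values of $k_\mathrm{B}$ and of the Avogadro constant $N_\mathrm{A} = 6.02214076 \times 10^{23}\ \mathrm{mol^{-1}}$ (the latter is not given in the text above). The gas constant is $R = N_\mathrm{A} k_\mathrm{B}$, the mean translational kinetic energy of an ideal-gas particle is $\tfrac{3}{2} k_\mathrm{B} T$, and the ideal gas law in particle form is $pV = N k_\mathrm{B} T$. The function names are hypothetical.

```python
K_B = 1.380649e-23    # Boltzmann constant in J/K (exact by definition since 2019)
N_A = 6.02214076e23   # Avogadro constant in 1/mol (also exact since 2019)

def gas_constant():
    """Molar gas constant R = N_A * k_B, in J/(mol K)."""
    return N_A * K_B

def mean_translational_ke(temperature_k):
    """Average translational kinetic energy per ideal-gas particle: (3/2) k_B T, in joules."""
    return 1.5 * K_B * temperature_k

def ideal_gas_pressure(n_particles, volume_m3, temperature_k):
    """Ideal gas law in particle form, p = N k_B T / V, in pascals."""
    return n_particles * K_B * temperature_k / volume_m3

print(gas_constant())                           # about 8.314 J/(mol K)
print(mean_translational_ke(300.0))             # about 6.2e-21 J at 300 K
print(ideal_gas_pressure(N_A, 0.0224, 273.15))  # about 1.01e5 Pa for one mole in 22.4 L
```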

Information Entropy

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$, the entropy is $\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)]$, where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base $e$ gives "natural units" (nats), and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication" and is also referred to as Shannon entropy. Shannon's theory ...
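
A direct transcription of the definition, assuming the distribution is given as a list of probabilities summing to 1. The `base` argument selects the unit (2 for bits, $e$ for nats, 10 for hartleys), entropy is computed as the expected self-information, and zero-probability outcomes are skipped, following the usual convention $0 \log 0 = 0$. The function names are hypothetical.

```python
import math

def self_information(p, base=2.0):
    """Surprise of an outcome with probability p: -log_b p."""
    return -math.log(p, base)

def entropy(probs, base=2.0):
    """Expected self-information; terms with p == 0 are dropped (0 log 0 := 0)."""
    return sum(p * self_information(p, base) for p in probs if p > 0)

print(entropy([0.5, 0.5]))          # fair coin: 1 bit
print(entropy([0.25] * 4))          # four equally likely outcomes: 2 bits
print(entropy([1 / 6] * 6, math.e)) # fair die, in nats: ln 6
print(entropy([0.9, 0.1]))          # biased coin: less than 1 bit
```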

Claude Shannon

Claude Elwood Shannon (April 30, 1916 – February 24, 2001) was an American mathematician, electrical engineer, and cryptographer known as a "father of information theory". As a 21-year-old master's degree student at the Massachusetts Institute of Technology (MIT), he wrote his thesis demonstrating that electrical applications of Boolean algebra could construct any logical numerical relationship. Shannon contributed to the field of cryptanalysis for national defense of the United States during World War II, including his fundamental work on codebreaking and secure telecommunications. The Shannon family lived in Gaylord, Michigan, and Claude was born in a hospital in nearby Petoskey. His father, Claude Sr. (1862–1934), was a businessman and, for a while, a judge of probate in Gaylord. His mother, Mabel Wolf Shannon (1890–1945), was a language teacher who also served as the principal of Gaylord High School. Claude Sr. was a descendant of New ...

Generalized Entropy Index

The generalized entropy index has been proposed as a measure of income inequality in a population. It is derived from information theory as a measure of redundancy in data. In information theory, a measure of redundancy can be interpreted as non-randomness or data compression; this interpretation therefore also applies to this index. In addition, interpreting biodiversity as entropy has also been proposed, leading to uses of generalized entropy to quantify biodiversity. For real values of $\alpha$, the generalized entropy index is

$$GE(\alpha) = \begin{cases} \frac{1}{N\alpha(\alpha-1)}\sum_{i=1}^{N}\left[\left(\frac{y_i}{\overline{y}}\right)^{\alpha} - 1\right], & \alpha \ne 0, 1,\\ \frac{1}{N}\sum_{i=1}^{N}\frac{y_i}{\overline{y}}\ln\frac{y_i}{\overline{y}}, & \alpha = 1,\\ -\frac{1}{N}\sum_{i=1}^{N}\ln\frac{y_i}{\overline{y}}, & \alpha = 0, \end{cases}$$

where $N$ is the number of cases (e.g., households or families), $y_i$ is the income for case $i$, $\overline{y}$ is the mean income, and $\alpha$ is a parameter which regulates the weight given to distances between incomes at different parts of the income distribution. For large $\alpha$ the index is especially sensit ...
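
A sketch of the three-case formula, assuming strictly positive incomes (the $\alpha = 0$ branch takes $\ln(y_i/\overline{y})$, which is undefined at zero income). $GE(1)$ is the Theil T index and $GE(0)$ is the mean log deviation; for $\alpha = 2$ the index equals half the squared coefficient of variation. The function name is hypothetical.

```python
import math

def generalized_entropy(incomes, alpha):
    """GE(alpha) for strictly positive incomes, following the three cases of the formula."""
    n = len(incomes)
    mean = sum(incomes) / n
    ratios = [y / mean for y in incomes]
    if alpha == 1:
        # Theil T index: (1/N) sum r ln r
        return sum(r * math.log(r) for r in ratios) / n
    if alpha == 0:
        # Mean log deviation: -(1/N) sum ln r
        return -sum(math.log(r) for r in ratios) / n
    return sum(r ** alpha - 1 for r in ratios) / (n * alpha * (alpha - 1))

incomes = [1, 2, 3, 10]
for a in (0, 1, 2):
    print(a, generalized_entropy(incomes, a))
```

The special cases for $\alpha = 0$ and $\alpha = 1$ are the limits of the general expression, so evaluating it at $\alpha$ very close to 0 or 1 gives nearly the same values.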