
The Lexer Hack

In computer programming, the lexer hack is a common solution to the problems in parsing ANSI C, due to the reference grammar being context-sensitive. In C, classifying a sequence of characters as a variable name or a type name requires contextual information of the phrase structure, which prevents one from having a context-free lexer.

The problem is that in the following code, the lexical class of A cannot be determined without further contextual information:

    (A)*B

This code could be multiplication of two variables, in which case A is a variable; written unambiguously:

    A * B

Alternatively, it could be casting the dereferenced value of B to the type A, in which case A is a typedef name; written unambiguously:

    (A) (*B)

In more detail, in a compiler, the lexer performs one of the earliest stages of converting the source code to a program. It scans the text to extract meaningful "tokens", such as words, numbers, and strings. The parser analyzes sequences of tokens at ...
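The idea can be illustrated with a minimal Python sketch, in which the lexer consults a table of known typedef names before classifying an identifier. The names `tokenize`, `classify`, and `typedef_names` are hypothetical and chosen for illustration only; a real C front end would have the parser update that table as it processes typedef declarations, and would handle far more token kinds than this toy does.

```python
import re

# Toy token pattern: identifiers plus the few punctuators needed for "(A)*B".
TOKEN_RE = re.compile(r"\s*(?:(?P<ident>[A-Za-z_]\w*)|(?P<punct>[()*;]))")


def tokenize(source, typedef_names):
    """Return (kind, text) pairs. Identifiers listed in typedef_names are
    classified as TYPE_NAME, all other identifiers as IDENTIFIER."""
    tokens = []
    source = source.rstrip()
    pos = 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at offset {pos}")
        pos = match.end()
        if match.group("ident"):
            text = match.group("ident")
            # The "hack": lexical class depends on the symbol table.
            kind = "TYPE_NAME" if text in typedef_names else "IDENTIFIER"
        else:
            text = match.group("punct")
            kind = text
        tokens.append((kind, text))
    return tokens


def classify(source, typedef_names):
    """Report how the toy parser would read an expression such as (A)*B."""
    kinds = [kind for kind, _ in tokenize(source, typedef_names)]
    if kinds[:3] == ["(", "TYPE_NAME", ")"]:
        return "cast"
    return "multiplication"
```

With `typedef_names={"A"}`, `classify("(A)*B", ...)` reads the expression as a cast; with an empty set it reads the same characters as a multiplication.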

Computer Programming

Computer programming is the process of performing a particular computation (or more generally, accomplishing a specific computing result), usually by designing and building an executable computer program. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms (usually in a chosen programming language, commonly referred to as coding). The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task (which can be as complex as an operating system) on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algori ...

Lexerless Parsing

In computer science, scannerless parsing (also called lexerless parsing) performs tokenization (breaking a stream of characters into words) and parsing (arranging the words into phrases) in a single step, rather than breaking it up into a pipeline of a lexer followed by a parser, executing concurrently. A language grammar is scannerless if it uses a single formalism to express both the lexical (word level) and phrase level structure of the language. Dividing processing into a lexer followed by a parser is more modular; scannerless parsing is primarily used when a clear lexer–parser distinction is unneeded or unwanted. Examples of when this is appropriate include TeX, most wiki grammars, makefiles, simple application-specific scripting languages, and Raku.

Advantages:

* Only one metalanguage is needed
* Non-regular lexical structure is handled easily
* "Token classification" is unneeded, which removes the need for design accommodations such as "the lexer hack" and language r ...
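As a rough illustration of the single-step approach, the following Python sketch parses and evaluates sums of non-negative integers directly from characters, with no separate token stream. The function name `parse_sum` and the tiny grammar (digits, spaces, and `+`) are assumptions made for this example; it evaluates the input rather than building a parse tree, to stay short.

```python
def parse_sum(text):
    """Parse 'number (+ number)*' straight from characters and return the sum.

    Whitespace skipping and digit recognition, normally a lexer's job,
    are handled by the same routine that recognizes the phrase structure."""
    pos = 0

    def skip_spaces():
        nonlocal pos
        while pos < len(text) and text[pos] == " ":
            pos += 1

    def parse_number():
        nonlocal pos
        start = pos
        while pos < len(text) and text[pos] in "0123456789":
            pos += 1
        if start == pos:
            raise SyntaxError(f"expected a digit at offset {start}")
        return int(text[start:pos])

    skip_spaces()
    total = parse_number()
    skip_spaces()
    while pos < len(text) and text[pos] == "+":
        pos += 1  # consume '+'
        skip_spaces()
        total += parse_number()
        skip_spaces()
    if pos != len(text):
        raise SyntaxError(f"unexpected character at offset {pos}")
    return total
```

For example, `parse_sum("12 + 30+4")` returns 46, while `parse_sum("12 +")` raises `SyntaxError`.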

C (programming Language)

C (pronounced like the letter c) is a general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie, and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, and protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers avail ...

Dangling Else

The dangling else is a problem in programming of parser generators in which an optional else clause in an if–then(–else) statement results in nested conditionals being ambiguous. Formally, the reference context-free grammar of the language is ambiguous, meaning there is more than one correct parse tree. In many programming languages one may write conditionally executed code in two forms, the if-then form and the if-then-else form, where the else clause is optional:

    if a then s
    if b then s1 else s2

This gives rise to an ambiguity in interpretation when there are nested statements, specifically whenever an if-then form appears as s1 in an if-then-else form:

    if a then if b then s else s2

In this example, s is unambiguously executed when a is true and b is true, but one may interpret s2 as being executed when a is false (thus attaching the else to the first if) or when a is true and b is false (thus attaching the else to the second if). In other words, one may see the previou ...
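One common way to resolve the ambiguity, used by C among other languages, is to attach each else to the nearest unmatched if. The Python sketch below shows how a small recursive-descent parser gets that behaviour by consuming an else greedily. The function name `parse_statement` and the tuple-based tree shape are hypothetical choices for this illustration, and conditions and statements are assumed to be single tokens.

```python
def parse_statement(tokens, pos=0):
    """Parse one statement starting at tokens[pos].

    Returns (tree, next_pos). An if statement becomes the tuple
    ("if", condition, then_branch, else_branch_or_None)."""
    if tokens[pos] == "if":
        condition = tokens[pos + 1]
        if tokens[pos + 2] != "then":
            raise SyntaxError("expected 'then'")
        then_branch, pos = parse_statement(tokens, pos + 3)
        else_branch = None
        # Greedy rule: an 'else' seen here is taken by the innermost
        # 'if' that is still open, i.e. the one being parsed right now.
        if pos < len(tokens) and tokens[pos] == "else":
            else_branch, pos = parse_statement(tokens, pos + 1)
        return ("if", condition, then_branch, else_branch), pos
    # Anything else is treated as a simple one-token statement.
    return tokens[pos], pos + 1
```

Parsing `"if a then if b then s else s2".split()` with this sketch yields `("if", "a", ("if", "b", "s", "s2"), None)`: the else is attached to the second if, so s2 runs when a is true and b is false.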

Encapsulation (computer Programming)

In software systems, encapsulation refers to the bundling of data with the mechanisms or methods that operate on the data, or the limiting of direct access to some data, such as an object's components. Encapsulation allows developers to present a consistent and usable interface which is independent of how a system is implemented internally. As one example, encapsulation can be used to hide the values or state of a structured data object inside a class, preventing direct access to them by clients in a way that could expose hidden implementation details or violate state invariance maintained by the methods. All object-oriented programming (OOP) systems support encapsulation, but encapsulation is not unique to OOP. Implementations of abstract data types, modules, and libraries, among other systems, also offer encapsulation. The similarity has been explained by programming language theorists in terms of existential types.

Meaning: In object-oriented programming languages, and other r ...
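A small Python sketch of the idea follows: state is kept behind methods that maintain an invariant (here, a balance that never goes negative). The class name `Account` and its methods are hypothetical, and Python enforces privacy only by convention (the leading underscore), so this illustrates the principle rather than a strict access-control mechanism.

```python
class Account:
    """Bundles a balance with the only operations allowed to change it."""

    def __init__(self, opening_balance=0):
        if opening_balance < 0:
            raise ValueError("opening balance must not be negative")
        self._balance = opening_balance  # internal state, not public API

    @property
    def balance(self):
        """Read-only view of the balance; there is no setter."""
        return self._balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def withdraw(self, amount):
        # The invariant (balance >= 0) is checked in one place only.
        if amount <= 0 or amount > self._balance:
            raise ValueError("invalid withdrawal amount")
        self._balance -= amount
```

Clients call `deposit` and `withdraw` and read `balance`; assigning to `balance` directly raises `AttributeError`, so the invariant cannot be bypassed through the public interface.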

Separation Of Concerns

In computer science, separation of concerns (SoC) is a design principle for separating a computer program into distinct sections. Each section addresses a separate concern, a set of information that affects the code of a computer program. A concern can be as general as "the details of the hardware for an application", or as specific as "the name of which class to instantiate". A program that embodies SoC well is called a modular program. Modularity, and hence separation of concerns, is achieved by encapsulating information inside a section of code that has a well-defined interface. Encapsulation is a means of information hiding. Layered designs in information systems are another embodiment of separation of concerns (e.g., presentation layer, business logic layer, data access layer, persistence layer). Separation of concerns results in more degrees of freedom for some aspect of the program's design, deployment, or usage. Common among these is increased freedom for simplification and ...

Semantic Analysis (compilers)

Semantic analysis, or context-sensitive analysis, is a process in compiler construction, usually after parsing, to gather necessary semantic information from the source code. It usually includes type checking, or makes sure a variable is declared before use, which is impossible to describe in the extended Backus–Naur form and thus not easily detected during parsing.
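A declared-before-use check can be sketched in a few lines of Python. The input format here, a list of `("declare", name)` and `("use", name)` pairs, is a made-up stand-in for what a real compiler would obtain by walking its syntax tree, and the sketch ignores scopes entirely.

```python
def check_declared_before_use(statements):
    """Return error messages for names used before any declaration.

    statements is a list of (action, name) pairs, where action is
    "declare" or "use"; positions are reported as 1-based line numbers."""
    declared = set()
    errors = []
    for line_no, (action, name) in enumerate(statements, start=1):
        if action == "declare":
            declared.add(name)
        elif action == "use" and name not in declared:
            errors.append(f"line {line_no}: '{name}' used before declaration")
    return errors
```

For instance, the program `[("declare", "x"), ("use", "x"), ("use", "y"), ("declare", "y")]` produces a single error for `y` on line 3, even though every statement is syntactically well-formed.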

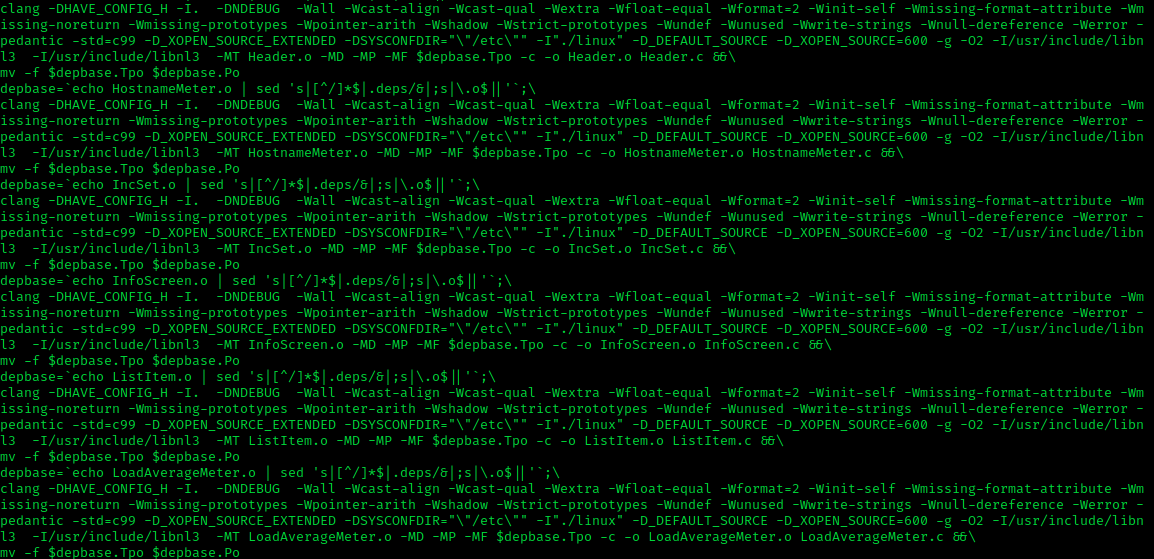

Clang

Clang is a compiler front end for the C, C++, Objective-C, and Objective-C++ programming languages, as well as the OpenMP, OpenCL, RenderScript, CUDA, and HIP frameworks. It acts as a drop-in replacement for the GNU Compiler Collection (GCC), supporting most of its compilation flags and unofficial language extensions. It includes a static analyzer, and several code analysis tools. Clang operates in tandem with the LLVM compiler back end and has been a subproject of LLVM 2.6 and later. As with LLVM, it is free and open-source software under the Apache License 2.0 software license. Its contributors include Apple, Microsoft, Google, ARM, Sony, Intel, and AMD. Clang 14, the latest major version of Clang as of March 2022, has full support for all published C++ standards up to C++17, implements most features of C++20, and has initial support for the upcoming C++23 standard. Since v6.0.0, Clang compiles C++ using the GNU++14 dialect by default, which includes features from the C+ ...

Berkeley Yacc

Berkeley Yacc (byacc) is a Unix parser generator designed to be compatible with Yacc. It was originally written by Robert Corbett and released in 1989. Due to its liberal license and because it was faster than the AT&T Yacc, it quickly became the most popular version of Yacc. It has the advantages of being written in ANSI C89 and being public domain software. It contains features not available in Yacc, such as reentrancy, which is implemented in a way that is broadly compatible with GNU Bison.

History: In 1985, Robert Corbett developed an original LALR parser generator based on a 1982 paper by DeRemer and Pennello. Corbett wrote it as part of his research towards the Ph.D. he received from University of California, Berkeley in June 1985. It was originally named Byson and was incompatible with Yacc, but it was subsequently renamed Bison and became the basis of GNU Bison. Later in 1985, Corbett developed his LALR parser generator, making it Yacc-compatible and naming it Zeus but s ...

Concurrent Computation

Concurrent computing is a form of computing in which several computations are executed concurrently (during overlapping time periods) instead of sequentially (with one completing before the next starts). This is a property of a system (whether a program, computer, or a network) where there is a separate execution point or "thread of control" for each process. A concurrent system is one where a computation can advance without waiting for all other computations to complete. Concurrent computing is a form of modular programming. In its paradigm an overall computation is factored into subcomputations that may be executed concurrently. Pioneers in the field of concurrent computing include Edsger Dijkstra, Per Brinch Hansen, and C.A.R. Hoare.

Introduction: The concept of concurrent computing is frequently confused with the related but distinct concept of parallel computing (Pike, Rob (2012-01-11). "Concurrency is not Parallelism". Waza conference, 11 January ...)

Hack (computer Science)

A kludge or kluge is a workaround or quick-and-dirty solution that is clumsy, inelegant, inefficient, difficult to extend and hard to maintain. This term is used in diverse fields such as computer science, aerospace engineering, Internet slang, evolutionary neuroscience, and government. It is similar in meaning to the naval term "jury rig".

Etymology: The word has alternate spellings (kludge and kluge), pronunciations (rhyming with judge and stooge, respectively), and several proposed etymologies.

Jackson W. Granholm: The Oxford English Dictionary (2nd ed., 1989) cites Jackson W. Granholm's 1962 "How to Design a Kludge" article in the American computer magazine Datamation. kludge. Also kluge. [J. W. Granholm's jocular invention: see first quot.; cf. also bodge v., fudge v.] 'An ill-assorted collection of poorly-matching parts, forming a distressing whole' (Granholm); esp. in Computing, a machine, system, or program that has b ...

Parsing

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term "parsing" comes from Latin pars (orationis), meaning part (of speech). The term has slightly different meanings in different branches of linguistics and computer science. Traditional sentence parsing is often performed as a method of understanding the exact meaning of a sentence or word, sometimes with the aid of devices such as sentence diagrams. It usually emphasizes the importance of grammatical divisions such as subject and predicate. Within computational linguistics the term is used to refer to the formal analysis by a computer of a sentence or other string of words into its constituents, resulting in a parse tree showing their syntactic relation to each other, which may also contain semantic and other information (p-values). Some parsing algor ...