|

TI Advanced Scientific Computer

The Advanced Scientific Computer (ASC) is a supercomputer designed and manufactured by Texas Instruments (TI) between 1966 and 1973. The ASC's central processing unit (CPU) supported vector processing, a performance-enhancing technique which was key to its high-performance. The ASC, along with the Control Data Corporation STAR-100 supercomputer (which was introduced in the same year), were the first computers to feature vector processing. However, this technique's potential was not fully realized by either the ASC or STAR-100 due to an insufficient understanding of the technique; it was the Cray Research Cray-1 supercomputer, announced in 1975 that would fully realize and popularize vector processing. The more successful implementation of vector processing in the Cray-1 would demarcate the ASC (and STAR-100) as first-generation vector processors, with the Cray-1 belonging in the second. History TI began as a division of Geophysical Service Incorporated (GSI), a company that per ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Supercomputer

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2022, supercomputers have existed which can perform over 1018 FLOPS, so called Exascale computing, exascale supercomputers. For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (1011) to tens of teraFLOPS (1013). Since November 2017, all of the TOP500, world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

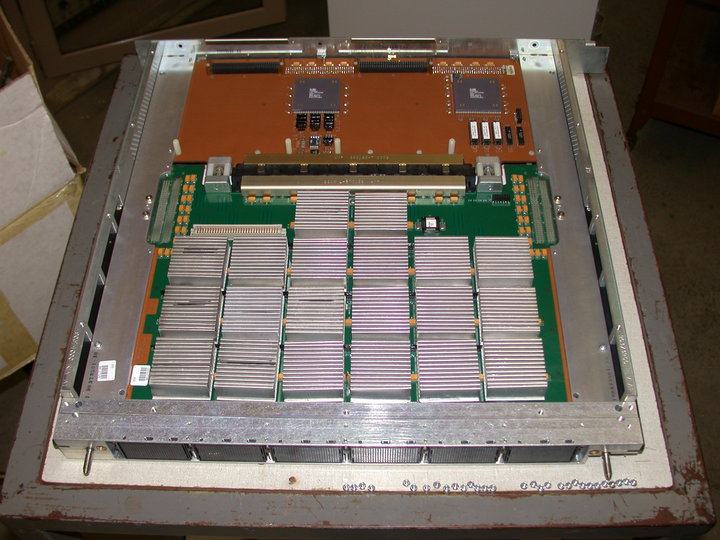

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels (of I- or D-cache), or even any level, sometimes some latter or all levels are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Taylor & Francis

Taylor & Francis Group is an international company originating in the United Kingdom that publishes books and academic journals. Its parts include Taylor & Francis, CRC Press, Routledge, F1000 (publisher), F1000 Research and Dovepress. It is a division of Informa, a United Kingdom-based publisher and conference company. Overview Founding The company was founded in 1852 when William Francis (chemist), William Francis joined Richard Taylor (editor), Richard Taylor in his publishing business. Taylor had founded his company in 1798. Their subjects covered agriculture, chemistry, education, engineering, geography, law, mathematics, medicine, and social sciences. Publications included the ''Philosophical Magazine''. Francis's son, Richard Taunton Francis (1883–1930), was sole partner in the firm from 1917 to 1930. Acquisitions and mergers In 1965, Taylor & Francis launched Wykeham Publications and began book publishing. T&F acquired Hemisphere Publishing in 1988, and the compa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Fast Fourier Transform

A fast Fourier transform (FFT) is an algorithm that computes the discrete Fourier transform (DFT) of a sequence, or its inverse (IDFT). A Fourier transform converts a signal from its original domain (often time or space) to a representation in the frequency domain and vice versa. The DFT is obtained by decomposing a sequence of values into components of different frequencies. This operation is useful in many fields, but computing it directly from the definition is often too slow to be practical. An FFT rapidly computes such transformations by Matrix decomposition, factorizing the DFT matrix into a product of Sparse matrix, sparse (mostly zero) factors. As a result, it manages to reduce the Computational complexity theory, complexity of computing the DFT from O(n^2), which arises if one simply applies the definition of DFT, to O(n \log n), where is the data size. The difference in speed can be enormous, especially for long data sets where may be in the thousands or millions. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Channel Controller

In computing, channel I/O is a high-performance input/output (I/O) architecture that is implemented in various forms on a number of computer architectures, especially on mainframe computers. In the past, channels were generally implemented with custom devices, variously named channel, I/O processor, I/O controller, I/O synchronizer, or '' DMA controller''. Overview Many I/O tasks can be complex and require logic to be applied to the data to convert formats and other similar duties. In these situations, the simplest solution is to ask the CPU to handle the logic, but because I/O devices are relatively slow, a CPU could waste time waiting for the data from the device. This situation is called 'I/O bound'. Channel architecture avoids this problem by processing some or all of the I/O task without the aid of the CPU by offloading the work to dedicated logic. Channels are logically self-contained, with sufficient logic and working storage to handle I/O tasks. Some are powerful or fle ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

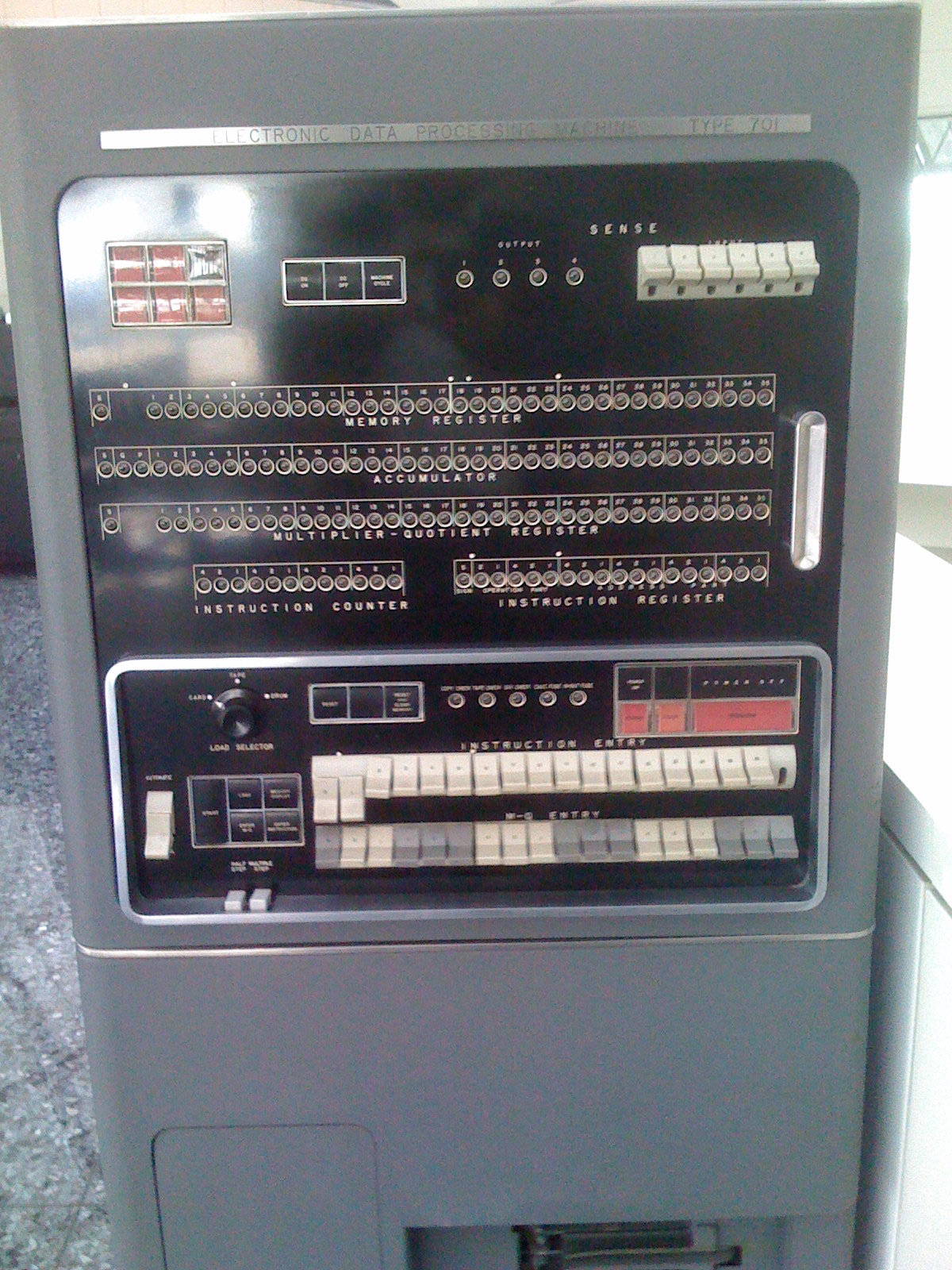

Program Counter

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence. Usually, the PC is incremented after fetching an instruction, and holds the memory address of (" points to") the next instruction that would be executed. Processors usually fetch instructions sequentially from memory, but ''control transfer'' instructions change the sequence by placing a new value in the PC. These include branches (sometimes called jumps), subroutine calls, and returns. A transfer that is conditional on the truth of some assertion lets the computer follow a different sequence under different conditions. A branch provides that the next instruction is fetched from elsewhere in memory. A subroutine call not only branches but saves the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Vector Processor

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor de ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Microcode

In processor design, microcode serves as an intermediary layer situated between the central processing unit (CPU) hardware and the programmer-visible instruction set architecture of a computer. It consists of a set of hardware-level instructions that implement the higher-level machine code instructions or control internal finite-state machine sequencing in many digital processing components. While microcode is utilized in Intel and AMD general-purpose CPUs in contemporary desktops and laptops, it functions only as a fallback path for scenarios that the faster hardwired control unit is unable to manage. Housed in special high-speed memory, microcode translates machine instructions, state machine data, or other input into sequences of detailed circuit-level operations. It separates the machine instructions from the underlying electronics, thereby enabling greater flexibility in designing and altering instructions. Moreover, it facilitates the construction of complex multi-step inst ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

ILLIAC IV

The ILLIAC IV was the first massively parallel computer. The system was originally designed to have 256 64-bit floating-point units (FPUs) and four central processing units (CPUs) able to process 1 billion operations per second. Due to budget constraints, only a single "quadrant" with 64 FPUs and a single CPU was built. Since the FPUs all processed the same instruction — etc. — in modern terminology, the design would be considered to be single instruction, multiple data, or SIMD. The concept of building a computer using an array of processors came to Daniel Slotnick while working as a programmer on the IAS machine in 1952. A formal design did not start until 1960, when Slotnick was working at Westinghouse Electric Corporation and arranged development funding under a United States Air Force contract. When that funding ended in 1964, Slotnick moved to the University of Illinois Urbana-Champaign and joined the Illinois Automatic Computer (ILLIAC) team. With funding f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Integrated Circuit

An integrated circuit (IC), also known as a microchip or simply chip, is a set of electronic circuits, consisting of various electronic components (such as transistors, resistors, and capacitors) and their interconnections. These components are etched onto a small, flat piece ("chip") of semiconductor material, usually silicon. Integrated circuits are used in a wide range of electronic devices, including computers, smartphones, and televisions, to perform various functions such as processing and storing information. They have greatly impacted the field of electronics by enabling device miniaturization and enhanced functionality. Integrated circuits are orders of magnitude smaller, faster, and less expensive than those constructed of discrete components, allowing a large transistor count. The IC's mass production capability, reliability, and building-block approach to integrated circuit design have ensured the rapid adoption of standardized ICs in place of designs using discre ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Emitter-coupled Logic

In electronics, emitter-coupled logic (ECL) is a high-speed integrated circuit bipolar transistor logic family. ECL uses a bipolar junction transistor (BJT) differential amplifier with single-ended input and limited emitter current to avoid the saturated (fully on) region of operation and the resulting slow turn-off behavior. As the current is steered between two legs of an emitter-coupled pair, ECL is sometimes called ''current-steering logic'' (CSL), ''current-mode logic'' (CML) or ''current-switch emitter-follower'' (CSEF) logic. In ECL, the transistors are never in saturation, the input and output voltages have a small swing (0.8 V), the input impedance is high and the output impedance is low. As a result, the transistors change states quickly, gate delays are low, and the fanout capability is high. In addition, the essentially constant current draw of the differential amplifiers minimizes delays and glitches due to supply-line inductance and capacitance, and the compl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |