
Two-factor Theory Of Intelligence

Charles Spearman developed his two-factor theory of intelligence using factor analysis. His research led him to develop not only the concept of the ''g factor'' of general intelligence, but also the ''s'' factor of specific intellectual abilities. L. L. Thurstone, Howard Gardner, and Robert Sternberg also researched the structure of intelligence, and in analyzing their data, concluded that a single underlying factor was influencing the general intelligence of individuals. However, Spearman was criticized in 1916 by Godfrey Thomson, who claimed that the evidence was not as crucial as it seemed. Modern research is still expanding this theory by investigating Spearman's law of diminishing returns and adding connected concepts to the research.

Spearman's two-factor theory of intelligence

In 1904, Charles Spearman developed a statistical procedure called factor analysis (Kalat, J.W. (2014). ''Introduction to Psychology, 10th Edition.'' Cengage Learning. pg. 295). In factor anal ...

Charles Spearman

Charles Edward Spearman, FRS (10 September 1863 – 17 September 1945) was an English psychologist known for work in statistics, as a pioneer of factor analysis, and for Spearman's rank correlation coefficient. He also did seminal work on models for human intelligence, including his theory that disparate cognitive test scores reflect a single general intelligence factor, and coined the term ''g'' factor.

Biography

Spearman had an unusual background for a psychologist. In his childhood he was ambitious to follow an academic career. He first joined the army as a regular officer of engineers in August 1883, and was promoted to captain on 8 July 1893, serving in the Munster Fusiliers. After 15 years he resigned in 1897 to study for a PhD in experimental psychology. In Britain, psychology was generally seen as a branch of philosophy, and Spearman chose to study in Leipzig under Wilhelm Wundt, because it was a center of the "new psychology", one that used the scientific method inst ...

Robert Plomin

Robert Joseph Plomin (born 1948) is an American/British psychologist and geneticist best known for his work in twin studies and behavior genetics. A ''Review of General Psychology'' survey, published in 2002, ranked Plomin as the 71st most cited psychologist of the 20th century. He is the author of several books on genetics and psychology.

Biography

Plomin was born in Chicago to a family of Polish-German extraction. He graduated from high school at DePaul University Academy in Chicago, then earned a B.A. in psychology from DePaul University in 1970 and a Ph.D. in psychology in 1974 from the University of Texas at Austin under personality psychologist Arnold H. Buss. He then worked at the Institute for Behavioral Genetics at the University of Colorado Boulder. From 1986 until 1994 he worked at Pennsylvania State University, studying elderly twins reared apart and twins reared together to study aging, and since 1994 he has been at the Institute of Psychiatry (King's College London). ...

Personality Theories

Personality is the characteristic set of behaviors, cognitions, and emotional patterns that are formed from biological and environmental factors and that change over time. While there is no generally agreed-upon definition of personality, most theories focus on motivation and psychological interactions with one's environment. Trait-based personality theories, such as those defined by Raymond Cattell, define personality as traits that predict an individual's behavior. On the other hand, more behaviorally-based approaches define personality through learning and habits. Nevertheless, most theories view personality as relatively stable. The study of the psychology of personality, called personality psychology, attempts to explain the tendencies that underlie differences in behavior. Psychologists have taken many different approaches to the study of personality, including biological, cognitive, learning, and trait-based theories, as well as psychodynamic, and hum ...

Environmental Factor

An environmental factor, ecological factor or eco factor is any factor, abiotic or biotic, that influences living organisms. Abiotic factors include ambient temperature, amount of sunlight, and pH of the water and soil in which an organism lives. Biotic factors would include the availability of food organisms and the presence of biological specificity, competitors, predators, and parasites.

Overview

An organism's genotype (e.g., in the zygote) is translated into the adult phenotype through development during an organism's ontogeny, and is subject to influence by many environmental effects. In this context, a phenotype (or phenotypic trait) can be viewed as any definable and measurable characteristic of an organism, such as its body mass or skin color. Apart from the true monogenic genetic disorders, environmental factors may determine the development of disease in those genetically predisposed to a particular condition. Stress, physical and mental abuse, diet, exposure to toxins, ...

Nature Versus Nurture

Nature versus nurture is a long-standing debate in biology and society about the balance between two competing factors which determine fate: genetics (nature) and environment (nurture). The alliterative expression "nature and nurture" in English has been in use since at least the Elizabethan period and goes back to medieval French. The complementary combination of the two concepts is an ancient concept (Ancient Greek: ἁπό φύσεως καὶ εὐτροφίας). Nature is what people think of as pre-wiring and is influenced by genetic inheritance and other biological factors. Nurture is generally taken as the influence of external factors after conception, e.g., the product of exposure, experience and learning on an individual. The phrase in its modern sense was popularized by the Victorian polymath Francis Galton, the modern founder of eugenics and behavioral genetics, when he was discussing the influence of heredity and environment on social advancement. Galton was influenced by ''O ...

Wechsler Intelligence Scale For Children

The Wechsler Intelligence Scale for Children (WISC) is an individually administered intelligence test for children between the ages of 6 and 16. The Fifth Edition (WISC-V; Wechsler, 2014) is the most recent version. The WISC-V takes 45 to 65 minutes to administer. It generates a Full Scale IQ (formerly known as an intelligence quotient or IQ score) that represents a child's general intellectual ability. It also provides five primary index scores, namely Verbal Comprehension Index, Visual Spatial Index, Fluid Reasoning Index, Working Memory Index, and Processing Speed Index. These indices represent a child's abilities in discrete cognitive domains. Five ancillary composite scores can be derived from various combinations of primary or primary and secondary subtests. Five complementary subtests yield three complementary composite scores to measure related cognitive abilities. Technical papers by the publishers support other indices such as VECI, EFI, and GAI (Raiford et al., 2015). Va ...

Confirmatory Factor Analysis

In statistics, confirmatory factor analysis (CFA) is a special form of factor analysis, most commonly used in social science research (Kline, R. B. (2010). ''Principles and practice of structural equation modeling (3rd ed.).'' New York, New York: Guilford Press). It is used to test whether measures of a construct are consistent with a researcher's understanding of the nature of that construct (or factor). As such, the objective of confirmatory factor analysis is to test whether the data fit a hypothesized measurement model. This hypothesized model is based on theory and/or previous analytic research. CFA was first developed by Jöreskog (1969) and has built upon and replaced older methods of analyzing construct validity such as the MTMM Matrix as described in Campbell & Fiske (1959). In confirmatory factor analysis, the researcher first develops a hypothesis about what factors they believe are underlying the measures used (e.g., "Depression" being the factor underlying the Beck Dep ...

Differential Ability Scales

The Differential Ability Scales (DAS) is a nationally normed (in the US) and individually administered battery of cognitive and achievement tests. Now in its second edition (DAS-II), the test can be administered to children ages 2 years 6 months to 17 years 11 months across a range of developmental levels. The diagnostic subtests measure a variety of cognitive abilities including verbal and visual working memory, immediate and delayed recall, visual recognition and matching, processing and naming speed, phonological processing, and understanding of basic number concepts. The original DAS was developed from the ''British Ability Scales'' (BAS), both by Colin D. Elliot, and published by Harcourt Assessment in 1990.

Test Structure

The DAS-II consists of 20 cognitive subtests which include 17 subtests from the original DAS. The subtests are grouped into the Early Years and School-Age cognitive batteries with subtests that are common to both batteries and those that are unique to each b ...

Fluid Intelligence

The concepts of fluid intelligence (''g''f) and crystallized intelligence (''g''c) were introduced in 1963 by the psychologist Raymond Cattell. According to Cattell's psychometrically-based theory, general intelligence (''g'') is subdivided into ''g''f and ''g''c. Fluid intelligence is the ability to solve novel reasoning problems and is correlated with a number of important skills such as comprehension, problem-solving, and learning. Crystallized intelligence, on the other hand, involves the ability to deduce secondary relational abstractions by applying previously learned primary relational abstractions.

History

Fluid and crystallized intelligence are constructs originally conceptualized by Raymond Cattell. The concepts of fluid and crystallized intelligence were further developed by Cattell and his former student John L. Horn.

Fluid versus crystallized intelligence

Fluid intelligence (''g''f) refers to basic processes of reasoning and other mental activities that depend on ...

Working Memory

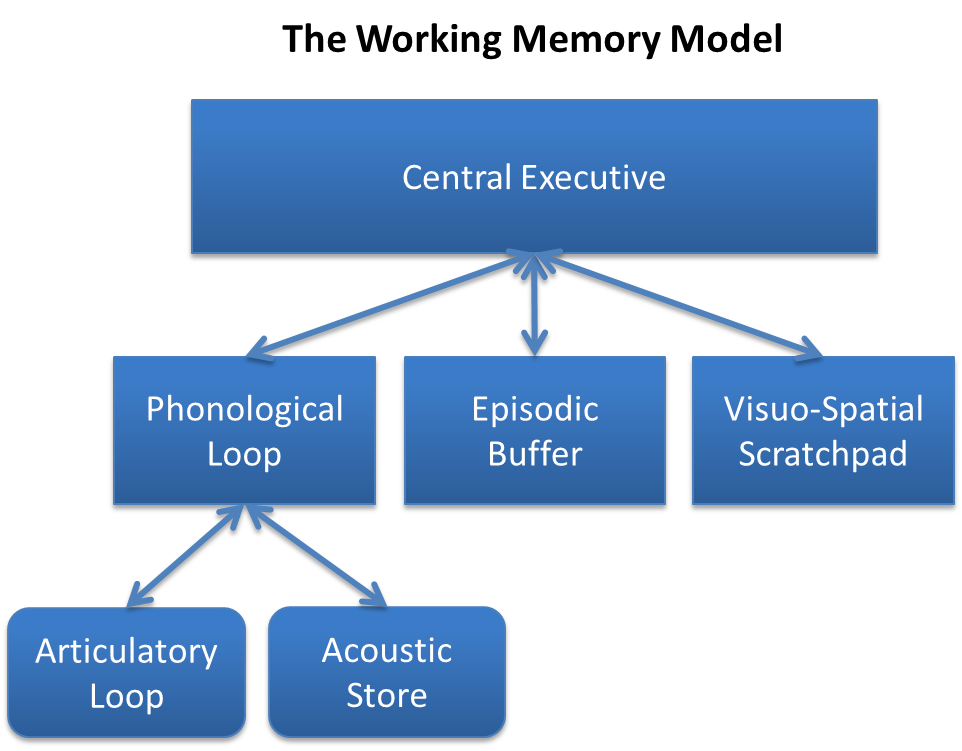

Working memory is a cognitive system with a limited capacity that can hold information temporarily. It is important for reasoning and the guidance of decision-making and behavior. Working memory is often used synonymously with short-term memory, but some theorists consider the two forms of memory distinct, assuming that working memory allows for the manipulation of stored information, whereas short-term memory only refers to the short-term storage of information. Working memory is a theoretical concept central to cognitive psychology, neuropsychology, and neuroscience.

History

The term "working memory" was coined by Miller, Galanter, and Pribram, and was used in the 1960s in the context of theories that likened the mind to a computer. In 1968, Atkinson and Shiffrin used the term to describe their "short-term store". What we now call working memory was formerly referred to variously as a "short-term store" or short-term memory, primary memory, immediate memory, operant memo ...

Venn Diagram

A Venn diagram is a widely used diagram style that shows the logical relation between sets, popularized by John Venn (1834–1923) in the 1880s. The diagrams are used to teach elementary set theory, and to illustrate simple set relationships in probability, logic, statistics, linguistics and computer science. A Venn diagram uses simple closed curves drawn on a plane to represent sets. Very often, these curves are circles or ellipses. Similar ideas had been proposed before Venn, for instance by Christian Weise in 1712 (''Nucleus Logicoe Wiesianoe'') and Leonhard Euler in 1768 (''Letters to a German Princess''). The idea was popularised by Venn in ''Symbolic Logic'', Chapter V "Diagrammatic Representation", 1881.

Details

A Venn diagram may also be called a ''set diagram'' or ''logic diagram''. It is a diagram that shows ''all'' possible logical relations between a finite collection of different sets. These diagrams depict element ...
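
The statement that a Venn diagram shows all possible logical relations between a finite collection of sets can be illustrated with a short Python sketch. It is only an illustration: the function name `venn_regions`, the set labels, and the sample data are hypothetical and not taken from the source. For n sets the sketch lists every one of the 2^n inside/outside combinations, including the region outside all sets.

```python
from itertools import product


def venn_regions(named_sets, universe):
    """Map each inside/outside combination to the elements that fall in it.

    named_sets: dict of label -> set (hypothetical example input).
    universe:   every element under consideration.
    Returns a dict keyed by the frozenset of labels an element belongs to.
    """
    labels = list(named_sets)
    regions = {}
    # Each tuple of booleans is one region of the diagram:
    # True means "inside this set", False means "outside it".
    for membership in product([False, True], repeat=len(labels)):
        inside = frozenset(
            label for label, is_in in zip(labels, membership) if is_in
        )
        regions[inside] = {
            element
            for element in universe
            if all(
                (element in named_sets[label]) == is_in
                for label, is_in in zip(labels, membership)
            )
        }
    return regions


if __name__ == "__main__":
    sets = {"A": {1, 2}, "B": {2, 3}}
    for region, elements in venn_regions(sets, {1, 2, 3, 4}).items():
        print(sorted(region), sorted(elements))
```

A region may be empty for a given data set; a Venn diagram still draws it, which is what distinguishes it from diagrams that show only the relations that actually occur.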

Pearson Product-moment Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC), also known as Pearson's ''r'', the Pearson product-moment correlation coefficient (PPMCC), the bivariate correlation, or colloquially simply as the correlation coefficient, is a measure of linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of teenagers from a high school to have a Pearson correlation coefficient significantly greater than 0, but less than 1 (as 1 would represent an unrealistically perfect correlation).

Naming and history

It was developed by Karl ...
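
The definition given above, covariance divided by the product of the standard deviations, can be written out directly. The following is a minimal Python sketch, not a reference implementation: the function name `pearson_r` and the age and height figures are made up for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: covariance over the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length, at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The 1/n factors of the covariance and of the two standard deviations
    # cancel in the ratio, so plain sums of deviations are enough.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(ss_x * ss_y)


if __name__ == "__main__":
    # Hypothetical ages (years) and heights (cm) of five teenagers.
    ages = [13, 14, 15, 16, 17]
    heights = [155, 160, 168, 170, 175]
    print(pearson_r(ages, heights))
```

Because the coefficient only captures linear association, a perfectly symmetric nonlinear relationship such as y = x² over x = −2..2 gives a value of 0 with this function even though y is fully determined by x.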
