
Triangular Matrix

In mathematics, a triangular matrix is a special kind of square matrix. A square matrix is called lower triangular if all the entries ''above'' the main diagonal are zero. Similarly, a square matrix is called upper triangular if all the entries ''below'' the main diagonal are zero. Because matrix equations with triangular matrices are easier to solve, they are very important in numerical analysis. By the LU decomposition algorithm, an invertible matrix may be written as the product of a lower triangular matrix ''L'' and an upper triangular matrix ''U'' if and only if all its leading principal minors are non-zero.

Description

A matrix of the form
:L = \begin{bmatrix} \ell_{1,1} & & & & 0 \\ \ell_{2,1} & \ell_{2,2} & & & \\ \ell_{3,1} & \ell_{3,2} & \ddots & & \\ \vdots & \vdots & \ddots & \ddots & \\ \ell_{n,1} & \ell_{n,2} & \ldots & \ell_{n,n-1} & \ell_{n,n} \end{bmatrix}
is called a lower trian ...
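The claim that triangular systems are easier to solve can be illustrated with forward substitution. The following is a minimal sketch, not a production solver; the function name `forward_substitution` and the sample matrix are assumptions made for illustration, and exact rational arithmetic is used to avoid rounding.

```python
from fractions import Fraction

def forward_substitution(L, b):
    """Solve L x = b, assuming L is lower triangular with a non-zero diagonal."""
    n = len(L)
    x = [Fraction(0)] * n
    for i in range(n):
        # Entries above the diagonal are zero, so row i only involves x[0..i].
        known = sum(Fraction(L[i][j]) * x[j] for j in range(i))
        x[i] = (Fraction(b[i]) - known) / Fraction(L[i][i])
    return x

# Hypothetical example system (not taken from the text above).
L = [[2, 0, 0],
     [1, 3, 0],
     [4, 5, 6]]
b = [2, 7, 32]
x = forward_substitution(L, b)
print(x)
```

Each unknown is obtained from the previous ones in a single pass, so no elimination step is required.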

Triangular Array

In mathematics and computing, a triangular array of numbers, polynomials, or the like, is a doubly indexed sequence in which each row is only as long as the row's own index. That is, the ''i''th row contains only ''i'' elements.

Examples

Notable particular examples include these:
* The Bell triangle, whose numbers count the partitions of a set in which a given element is the largest singleton
* Catalan's triangle, which counts strings of matched parentheses
* Euler's triangle, which counts permutations with a given number of ascents
* Floyd's triangle, whose entries are all of the integers in order
* Hosoya's triangle, based on the Fibonacci numbers
* Lozanić's triangle, used in the mathematics of chemical compounds
* Narayana triangle, counting strings of balanced parentheses with a given number of distinct nestings
* Pascal's triangle, whose entries are the binomial coefficients

Triangular arrays of integers in which each row is symm ...
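As a small illustration of the "''i''th row contains ''i'' elements" property, the sketch below builds the first rows of Pascal's triangle as a list of lists. The helper name `pascal_rows` is an assumption for this example.

```python
def pascal_rows(n):
    """Return the first n rows of Pascal's triangle as a triangular array."""
    rows = []
    row = [1]
    for _ in range(n):
        rows.append(row)
        # Each interior entry is the sum of the two entries above it.
        row = [1] + [row[k] + row[k + 1] for k in range(len(row) - 1)] + [1]
    return rows

rows = pascal_rows(5)
for r in rows:
    print(r)
```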

Determinant

In mathematics, the determinant is a scalar-valued function of the entries of a square matrix. The determinant of a matrix ''A'' is commonly denoted \det(A), \det A, or |A|. Its value characterizes some properties of the matrix and the linear map represented, on a given basis, by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism. If the determinant is zero, the matrix is called singular, meaning it does not have an inverse. The determinant is completely determined by the two following properties: the determinant of a product of matrices is the product of their determinants, and the determinant of a triangular matrix is the product of its diagonal entries. The determinant of a 2 × 2 matrix is
:\begin{vmatrix} a & b\\ c & d \end{vmatrix} = ad - bc,
and the determinant of a 3 × 3 matrix is
: \begin{vmatrix} a & b & c \\ d & e ...
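The two characterizing properties and the 2 × 2 formula can be checked numerically. This is a minimal sketch with hypothetical helper names (`det2`, `det_triangular`, `matmul`) and sample matrices chosen only for illustration.

```python
def det2(m):
    """Determinant of a 2x2 matrix via ad - bc."""
    (a, b), (c, d) = m
    return a * d - b * c

def det_triangular(t):
    """Determinant of a triangular matrix: the product of its diagonal entries."""
    product = 1
    for i in range(len(t)):
        product *= t[i][i]
    return product

def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

A = [[1, 2], [3, 4]]
B = [[0, 1], [5, 2]]
T = [[2, 7, 1], [0, 3, 5], [0, 0, 4]]

print(det2(A), det2(B), det2(matmul(A, B)))  # det(AB) equals det(A) * det(B)
print(det_triangular(T))
```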

Similar Matrix

In linear algebra, two ''n''-by-''n'' matrices ''A'' and ''B'' are called similar if there exists an invertible ''n''-by-''n'' matrix ''P'' such that B = P^{-1} A P. Similar matrices represent the same linear map under two possibly different bases, with ''P'' being the change-of-basis matrix. A transformation A \mapsto P^{-1} A P is called a similarity transformation or conjugation of the matrix ''A''. In the general linear group, similarity is therefore the same as conjugacy, and similar matrices are also called conjugate; however, in a given subgroup ''H'' of the general linear group, the notion of conjugacy may be more restrictive than similarity, since it requires that ''P'' be chosen to lie in ''H''.

Motivating example

When defining a linear transformation, it can be the case that a change of basis can result in a simpler form of the same transformation. For example, the matrix representing a rotation in \mathbb{R}^3 when the axis of rotation is not aligned with the coordinate axis can be complicated to compute. If the axis of rotation were ...
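To make the definition B = P^{-1} A P concrete, the sketch below conjugates a 2 × 2 matrix by an invertible matrix and shows that the result happens to be diagonal. The helpers `matmul` and `inverse2` and the particular matrices are assumptions for this example; exact fractions are used so the comparison is exact.

```python
from fractions import Fraction

def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def inverse2(p):
    """Inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = p
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("P must be invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

A = [[2, 1], [0, 3]]
P = [[1, 1], [0, 1]]          # hypothetical change-of-basis matrix
B = matmul(inverse2(P), matmul(A, P))
print(B)

# Trace and determinant are unchanged by the similarity transformation.
trace = lambda m: m[0][0] + m[1][1]
det = lambda m: m[0][0] * m[1][1] - m[0][1] * m[1][0]
print(trace(A) == trace(B), det(A) == det(B))
```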

Block Matrix

In mathematics, a block matrix or a partitioned matrix is a matrix that is interpreted as having been broken into sections called blocks or submatrices. Intuitively, a matrix interpreted as a block matrix can be visualized as the original matrix with a collection of horizontal and vertical lines, which break it up, or partition it, into a collection of smaller matrices. For example, the 3×4 matrix presented below is divided by horizontal and vertical lines into four blocks: the top-left 2×3 block, the top-right 2×1 block, the bottom-left 1×3 block, and the bottom-right 1×1 block.
: \left[ \begin{array}{ccc|c} a_{11} & a_{12} & a_{13} & b_1 \\ a_{21} & a_{22} & a_{23} & b_2 \\ \hline c_1 & c_2 & c_3 & d \end{array} \right]
Any matrix may be interpreted as a block matrix in one or more ways, with each interpretation defined by how its rows and columns are partitioned. This notion can be made more precise for an n by m matrix M by partitioning n into a collection of row groups, and then partitioning m into a collection of column groups. The original m ...
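The 3×4 example can be mirrored in code by cutting a matrix after a chosen row and column. This is a sketch only; `split_blocks`, `join_blocks`, and the numeric entries are hypothetical names and values introduced for illustration.

```python
def split_blocks(m, r, c):
    """Split m into four blocks: cut after row r and after column c."""
    top, bottom = m[:r], m[r:]
    return ([row[:c] for row in top], [row[c:] for row in top],
            [row[:c] for row in bottom], [row[c:] for row in bottom])

def join_blocks(a, b, c, d):
    """Reassemble the matrix from its four blocks."""
    return ([ra + rb for ra, rb in zip(a, b)] +
            [rc + rd for rc, rd in zip(c, d)])

M = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12]]

# Top-left 2x3, top-right 2x1, bottom-left 1x3, bottom-right 1x1.
A, B, C, D = split_blocks(M, 2, 3)
print(A, B, C, D)
```

Joining the blocks again with `join_blocks(A, B, C, D)` recovers the original matrix, which is what makes the partition an interpretation of the same data rather than a new object.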

Off-diagonal Element

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is \begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}, while an example of a 3×3 diagonal matrix is \begin{bmatrix} 6 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 4 \end{bmatrix}. An identity matrix of any size, or any multiple of it, is a diagonal matrix called a ''scalar matrix'', for example, \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix}. In geometry, a diagonal matrix may be used as a ''scaling matrix'', since matrix multiplication with it results in changing scale (size) and possibly also shape; only a scalar matrix results in uniform change in scale.

Definition

As stated above, a diagonal matrix is a matrix in which all off-diagonal entries are zero. That is, the matrix with n columns and n rows is diagonal if \forall i,j \in \{1, 2, \ldots, n\}, i \ne j \im ...
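The definition translates directly into a check over all off-diagonal positions. The sketch below uses the example matrices from the text; the function names `is_diagonal` and `is_scalar` are assumptions for illustration.

```python
def is_diagonal(m):
    """True if every entry with row index != column index is zero."""
    return all(m[i][j] == 0
               for i in range(len(m))
               for j in range(len(m[i]))
               if i != j)

def is_scalar(m):
    """True if m is diagonal and all diagonal entries are equal."""
    return is_diagonal(m) and len({m[i][i] for i in range(len(m))}) <= 1

print(is_diagonal([[3, 0], [0, 2]]))                   # diagonal, not scalar
print(is_scalar([[0.5, 0], [0, 0.5]]))                 # scalar matrix
print(is_diagonal([[6, 0, 0], [0, 5, 0], [0, 1, 4]]))  # off-diagonal entry present
```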

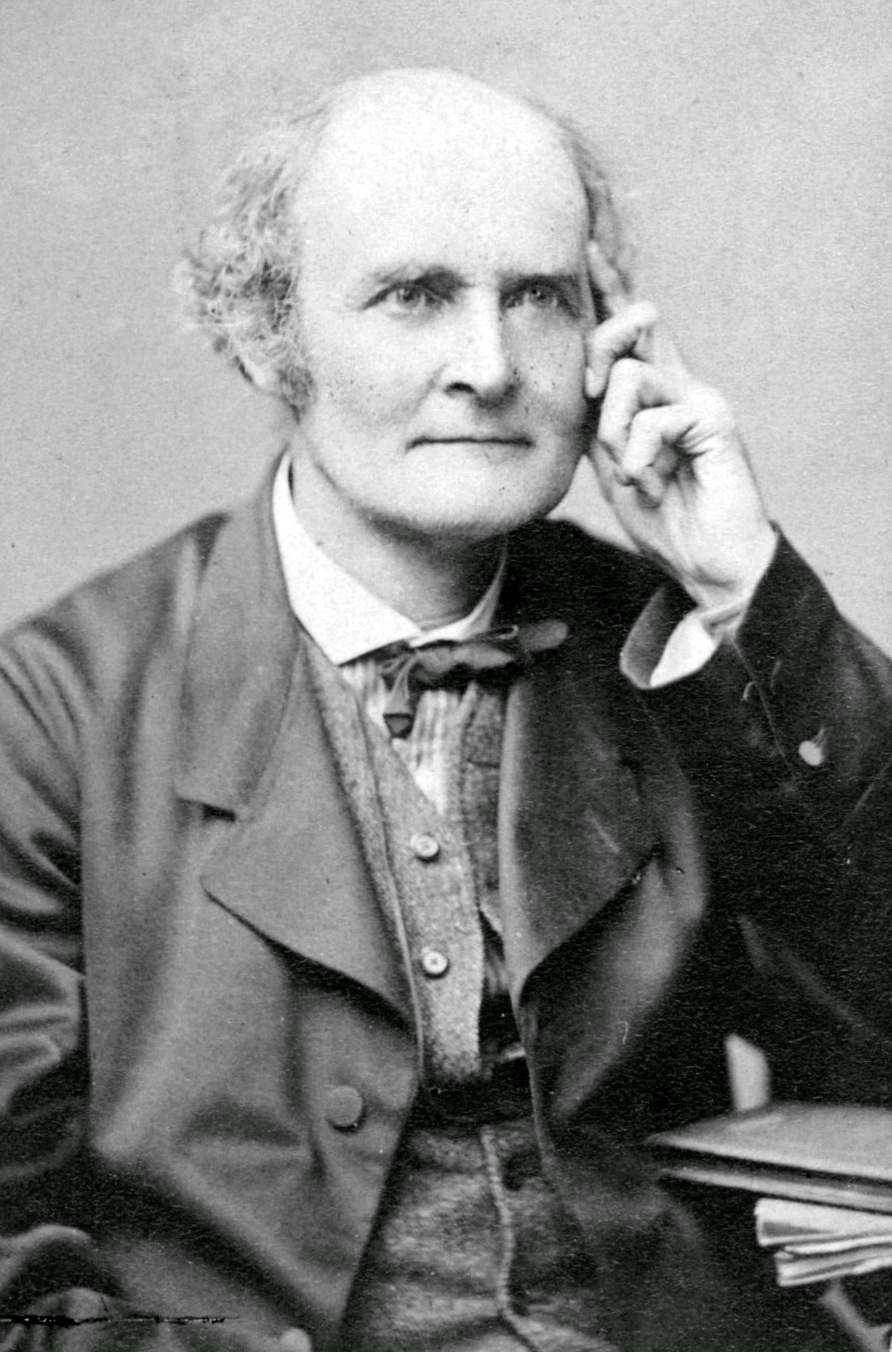

Cayley–Hamilton Theorem

In linear algebra, the Cayley–Hamilton theorem (named after the mathematicians Arthur Cayley and William Rowan Hamilton) states that every square matrix over a commutative ring (such as the real or complex numbers or the integers) satisfies its own characteristic equation. The characteristic polynomial of an n \times n matrix A is defined as p_A(\lambda)=\det(\lambda I_n-A), where \det is the determinant operation, \lambda is a variable scalar element of the base ring, and I_n is the n \times n identity matrix. Since each entry of the matrix (\lambda I_n-A) is either constant or linear in \lambda, the determinant of (\lambda I_n-A) is a degree-n monic polynomial in \lambda, so it can be written as
:p_A(\lambda) = \lambda^n + c_{n-1}\lambda^{n-1} + \cdots + c_1\lambda + c_0.
By replacing the scalar variable \lambda with the matrix A, one can define an analogous matrix polynomial expression,
:p_A(A) = A^n + c_{n-1}A^{n-1} + \cdots + c_1A + c_0I_n.
(Here, A is the given matrix, not a variable, unlike \lambda, so p_A(A) is a constant rather than ...
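For a 2 × 2 matrix the characteristic polynomial is \lambda^2 - \operatorname{tr}(A)\lambda + \det(A), so the theorem can be verified by direct substitution. The following sketch does this for one sample matrix; the helper names and the matrix are assumptions, and the check is an illustration rather than a proof.

```python
def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def cayley_hamilton_residual_2x2(a):
    """Evaluate p_A(A) = A^2 - tr(A) A + det(A) I for a 2x2 matrix."""
    trace = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    a2 = matmul(a, a)
    return [[a2[i][j] - trace * a[i][j] + (det if i == j else 0)
             for j in range(2)] for i in range(2)]

A = [[1, 2], [3, 4]]
residual = cayley_hamilton_residual_2x2(A)
print(residual)  # the zero matrix, as the theorem predicts
```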

Nilpotent Matrix

In linear algebra, a nilpotent matrix is a square matrix ''N'' such that
:N^k = 0
for some positive integer k. The smallest such k is called the index of N, sometimes the degree of N. More generally, a nilpotent transformation is a linear transformation L of a vector space such that L^k = 0 for some positive integer k (and thus, L^j = 0 for all j \geq k). Both of these concepts are special cases of a more general concept of nilpotence that applies to elements of rings.

Examples

Example 1

The matrix
: A = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}
is nilpotent with index 2, since A^2 = 0.

Example 2

More generally, any n-dimensional triangular matrix with zeros along the main diagonal is nilpotent, with index \le n. For example, the matrix
: B=\begin{bmatrix} 0 & 2 & 1 & 6\\ 0 & 0 & 1 & 2\\ 0 & 0 & 0 & 3\\ 0 & 0 & 0 & 0 \end{bmatrix}
is nilpotent, with
: B^2=\begin{bmatrix} 0 & 0 & 2 & 7\\ 0 & 0 & 0 & 3\\ 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 \end{bmatrix} ;\ B^3=\begin{bmatrix} 0 & 0 & 0 & 6\\ 0 & 0 & 0 & ...
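The index of the two example matrices can be computed by taking successive powers until the zero matrix appears. In this sketch the function name `nilpotency_index` is an assumption; it relies on the fact that an n × n nilpotent matrix already vanishes by the n-th power, so the search stops there.

```python
def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def nilpotency_index(m):
    """Smallest k with m^k = 0, or None if m is not nilpotent."""
    n = len(m)
    power = m  # holds m^k at the start of iteration k
    for k in range(1, n + 1):
        if all(v == 0 for row in power for v in row):
            return k
        power = matmul(power, m)
    return None

A = [[0, 1], [0, 0]]
B = [[0, 2, 1, 6],
     [0, 0, 1, 2],
     [0, 0, 0, 3],
     [0, 0, 0, 0]]

print(nilpotency_index(A), nilpotency_index(B))
print(matmul(B, B))  # matches B^2 as given in Example 2
```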

Unipotent

In mathematics, a unipotent element ''r'' of a ring ''R'' is one such that ''r'' − 1 is a nilpotent element; in other words, (''r'' − 1)^''n'' is zero for some ''n''. In particular, a square matrix ''M'' is a unipotent matrix if and only if its characteristic polynomial ''P''(''t'') is a power of ''t'' − 1. Thus all the eigenvalues of a unipotent matrix are 1. The term quasi-unipotent means that some power is unipotent, for example for a diagonalizable matrix with eigenvalues that are all roots of unity. In the theory of algebraic groups, a group element is unipotent if it acts unipotently in a certain natural group representation. A unipotent affine algebraic group is then a group with all elements unipotent.

Definition

Definition with matrices

Consider the group \mathbb{U}_n of upper-triangular matrices with 1's along the diagonal, so they are the group of matrices
:\mathbb{U}_n = \left\{ \begin{bmatrix} 1 & * & \cdots & * \\ 0 & 1 & \cdots & * \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix} \right\}.
Then, a unipotent group can be define ...
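A matrix ''M'' is unipotent exactly when ''M'' − ''I'' is nilpotent, which suggests a direct test. The sketch below is an illustration under that definition; the function name `is_unipotent` and the sample matrices are assumptions.

```python
def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def is_unipotent(m):
    """True if (m - I)^n = 0, where n is the size of the square matrix m."""
    n = len(m)
    shifted = [[m[i][j] - (1 if i == j else 0) for j in range(n)]
               for i in range(n)]
    power = shifted
    for _ in range(n - 1):
        power = matmul(power, shifted)  # after the loop, power = shifted^n
    return all(v == 0 for row in power for v in row)

U = [[1, 2, 3],
     [0, 1, 4],
     [0, 0, 1]]          # upper triangular with 1's on the diagonal
R = [[1, 0],
     [0, -1]]            # eigenvalues 1 and -1, both roots of unity

print(is_unipotent(U))             # unipotent
print(is_unipotent(R))             # not unipotent itself
print(is_unipotent(matmul(R, R)))  # but its square is, so R is quasi-unipotent
```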

Matrix Norm

In the field of mathematics, norms are defined for elements within a vector space. Specifically, when the vector space comprises matrices, such norms are referred to as matrix norms. Matrix norms differ from vector norms in that they must also interact with matrix multiplication.

Preliminaries

Given a field K of either real or complex numbers (or any complete subset thereof), let K^{m \times n} be the K-vector space of matrices with m rows and n columns and entries in the field K. A matrix norm is a norm on K^{m \times n}. Norms are often expressed with double vertical bars (like so: \|A\|). Thus, the matrix norm is a function \|\cdot\| : K^{m \times n} \to \R^{0+} that must satisfy the following properties. For all scalars \alpha \in K and matrices A, B \in K^{m \times n},
* \|A\| \ge 0 (''positive-valued'')
* \|A\| = 0 \iff A = 0_{m,n} (''definite'')
* \|\alpha A\| = |\alpha| \|A\| (''absolutely homogeneous'')
* \|A + B\| \le \|A\| + \| ...
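The listed properties can be spot-checked for a concrete norm. The sketch below uses the Frobenius norm (the square root of the sum of squared entries), which is a choice made here for illustration and is not named in the text above; it assumes real entries, and the sample matrices are hypothetical.

```python
import math

def frobenius(m):
    """Frobenius norm of a real matrix given as nested lists."""
    return math.sqrt(sum(v * v for row in m for v in row))

def add(x, y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]

def scale(alpha, x):
    return [[alpha * v for v in row] for row in x]

A = [[1, 2], [3, 4]]
B = [[0, -1], [2, 5]]
Z = [[0, 0], [0, 0]]
alpha = -3

print(frobenius(A) >= 0)                                              # positive-valued
print(frobenius(Z) == 0)                                              # definite (zero case)
print(math.isclose(frobenius(scale(alpha, A)), abs(alpha) * frobenius(A)))  # homogeneous
print(frobenius(add(A, B)) <= frobenius(A) + frobenius(B))            # triangle inequality
```

A numerical check on a few matrices only illustrates the axioms; it does not establish them for all inputs.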

Identity Matrix

In linear algebra, the identity matrix of size n is the n\times n square matrix with ones on the main diagonal and zeros elsewhere. It has unique properties: for example, when the identity matrix represents a geometric transformation, the object remains unchanged by the transformation. In other contexts, it is analogous to multiplying by the number 1.

Terminology and notation

The identity matrix is often denoted by I_n, or simply by I if the size is immaterial or can be trivially determined by the context.
: I_1 = \begin{bmatrix} 1 \end{bmatrix} ,\ I_2 = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} ,\ I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} ,\ \dots ,\ I_n = \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end{bmatrix}.
The term unit matrix has also been widely used, but the term ''identity matrix'' is now standard. The term ''unit matrix'' is ambiguous, because it is also used for a matrix of on ...
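The analogy with multiplying by the number 1 can be seen by building I_n and multiplying it with an arbitrary matrix. This is a minimal sketch; `identity` and `matmul` are hypothetical helper names and the matrix `A` is an arbitrary sample.

```python
def identity(n):
    """n x n matrix with ones on the main diagonal and zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

A = [[2, -1, 0],
     [4, 3, 7],
     [1, 5, 9]]
I3 = identity(3)

print(I3)
print(matmul(I3, A) == A and matmul(A, I3) == A)  # multiplying by I leaves A unchanged
```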

Characteristic Polynomial

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism over any basis (that is, the characteristic polynomial does not depend on the choice of a basis). The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero. In spectral graph theory, the characteristic polynomial of a graph is the characteristic polynomial of its adjacency matrix.

Motivation

In linear algebra, eigenvalues and eigenvectors play a fundamental role, since, given a linear transformation, an eigenvector is a vector whose direction is not changed by the transformation, and the correspondi ...
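One way to compute the coefficients of \det(\lambda I - A) without symbolic algebra is the Faddeev–LeVerrier recurrence, which is swapped in here as an illustrative technique and is not mentioned in the text above. The sketch assumes exact rational entries; the function name `char_poly` and the sample matrix are hypothetical.

```python
from fractions import Fraction

def matmul(x, y):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]

def char_poly(a):
    """Coefficients [1, c_{n-1}, ..., c_0] of det(lambda*I - a), highest degree first."""
    n = len(a)
    A = [[Fraction(v) for v in row] for row in a]
    coeffs = [Fraction(1)]
    M = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        # M_k = A M_{k-1} + c_{n-k+1} I, using the most recent coefficient.
        AM = matmul(A, M)
        M = [[AM[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        # c_{n-k} = -tr(A M_k) / k
        AMk = matmul(A, M)
        coeffs.append(-sum(AMk[i][i] for i in range(n)) / k)
    return coeffs

T = [[2, 1, 0],
     [0, 3, 1],
     [0, 0, 4]]   # triangular, so the eigenvalues are 2, 3 and 4
p = char_poly(T)
print(p)

# Each eigenvalue is a root of the characteristic polynomial.
evaluate = lambda coeffs, x: sum(c * x ** (len(coeffs) - 1 - i) for i, c in enumerate(coeffs))
print([evaluate(p, ev) for ev in (2, 3, 4)])
```

In the result, the coefficient of \lambda^{n-1} is the negated trace and the constant term is (-1)^n times the determinant, in line with the statement that both appear among the coefficients.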

Multiplicity Of A Root Of A Polynomial

In mathematics, the multiplicity of a member of a multiset is the number of times it appears in the multiset. For example, the number of times a given polynomial has a root at a given point is the multiplicity of that root. The notion of multiplicity is important to be able to count correctly without specifying exceptions (for example, ''double roots'' counted twice). Hence the expression, "counted with multiplicity". If multiplicity is ignored, this may be emphasized by counting the number of ''distinct'' elements, as in "the number of distinct roots". However, whenever a set (as opposed to multiset) is formed, multiplicity is automatically ignored, without requiring use of the term "distinct".

Multiplicity of a prime factor

In prime factorization, the multiplicity of a prime factor is its p-adic valuation. For example, the prime factorization of the integer is : the multiplicity of the prime factor is , while the multiplicity of each of the prime factors and is . T ...
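The multiplicity of a prime factor can be computed by repeated division. The sketch below is an illustration only; the function name `multiplicity` and the integer 60 are assumptions chosen for this example and are not taken from the text above.

```python
def multiplicity(n, p):
    """Number of times the prime p divides the positive integer n (its p-adic valuation)."""
    if n <= 0 or p < 2:
        raise ValueError("expected a positive integer n and a prime p >= 2")
    count = 0
    while n % p == 0:
        n //= p
        count += 1
    return count

# 60 = 2 * 2 * 3 * 5 (example value chosen for this sketch)
for prime in (2, 3, 5, 7):
    print(prime, multiplicity(60, prime))
```

Counting "with multiplicity" gives four prime factors of 60, whereas the number of distinct prime factors is three.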