|

Stochastic Computing

Stochastic computing is a collection of techniques that represent continuous values by streams of random bits. Complex computations can then be computed by simple bit-wise operations on the streams. Stochastic computing is distinct from the study of randomized algorithms. Motivation and a simple example Suppose that p,q \in ,1/math> is given, and we wish to compute p \times q. Stochastic computing performs this operation using probability instead of arithmetic. Specifically, suppose that there are two random, independent bit streams called ''stochastic number''s (i.e. Bernoulli processes), where the probability of a 1 in the first stream is p, and the probability in the second stream is q. We can take the logical AND of the two streams. The probability of a 1 in the output stream is pq. By observing enough output bits and measuring the frequency of 1s, it is possible to estimate pq to arbitrary accuracy. The operation above converts a fairly complicated computation (multipli ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Randomized Algorithm

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic or procedure. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of random determined by the random bits; thus either the running time, or the output (or both) are random variables. There is a distinction between algorithms that use the random input so that they always terminate with the correct answer, but where the expected running time is finite (Las Vegas algorithms, for example Quicksort), and algorithms which have a chance of producing an incorrect result ( Monte Carlo algorithms, for example the Monte Carlo algorithm for the MFAS problem) or fail to produce a result either by signaling a failure or failing to terminate. In some cases, probabilistic algorithms are the only practical means of solving a problem. In common practice, randomized alg ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

And Gate

The AND gate is a basic digital logic gate that implements the logical conjunction (∧) from mathematical logic AND gates behave according to their truth table. A HIGH output (1) results only if all the inputs to the AND gate are HIGH (1). If any of the inputs to the AND gate are not HIGH, a LOW (0) is outputted. The function can be extended to any number of inputs by multiple gates up in a chain. Symbols There are three symbols for AND gates: the American (ANSI or 'military') symbol and the IEC ('European' or 'rectangular') symbol, as well as the deprecated DIN symbol. Additional inputs can be added as needed. For more information see the Logic gate symbols article. It can also be denoted as symbol "^" or "&". The AND gate with inputs ''A'' and ''B'' and output ''C'' implements the logical expression C = A \cdot B. This expression also may be denoted as C=A \wedge B or C=A \And B. As of Unicode 16.0.0, the AND gate is also encoded in the Symbols for Legacy Computing Su ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

History Of Computing Hardware

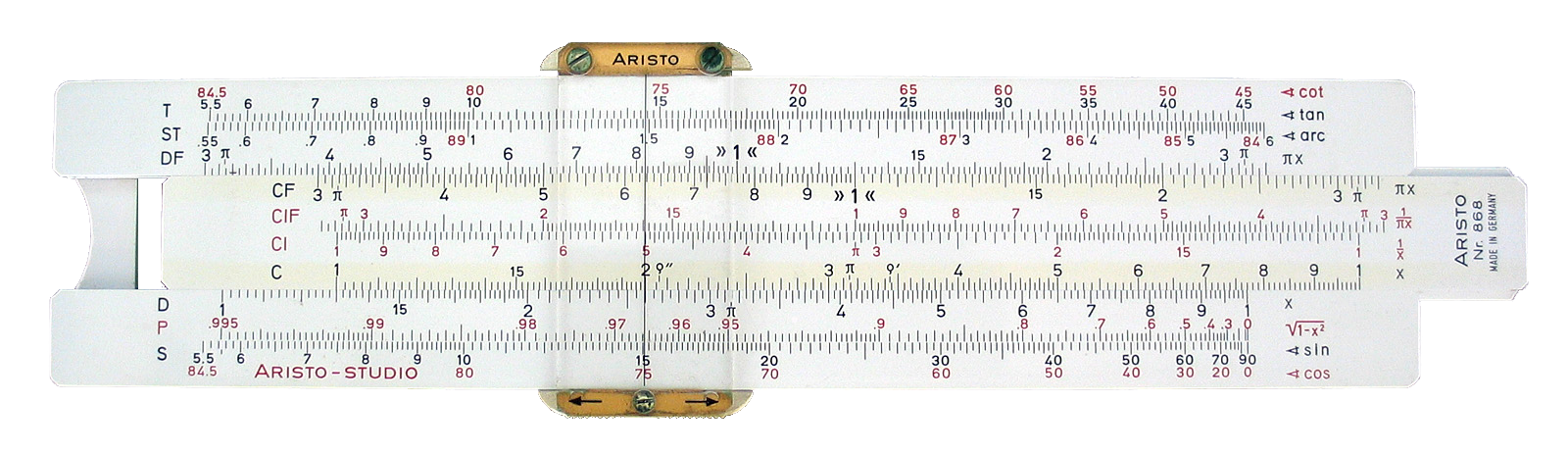

The history of computing hardware spans the developments from early devices used for simple calculations to today's complex computers, encompassing advancements in both analog and digital technology. The first aids to computation were purely mechanical devices which required the operator to set up the initial values of an elementary arithmetic operation, then manipulate the device to obtain the result. In later stages, computing devices began representing numbers in continuous forms, such as by distance along a scale, rotation of a shaft, or a specific voltage level. Numbers could also be represented in the form of digits, automatically manipulated by a mechanism. Although this approach generally required more complex mechanisms, it greatly increased the precision of results. The development of transistor technology, followed by the invention of integrated circuit chips, led to revolutionary breakthroughs. Transistor-based computers and, later, integrated circuit-based computers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Unconventional Computing

Unconventional computing (also known as alternative computing or nonstandard computation) is computing by any of a wide range of new or unusual methods. The term ''unconventional computation'' was coined by Cristian S. Calude and John Casti and used at the First International Conference on Unconventional Models of Computation in 1998. Background The general theory of computation allows for a variety of methods of computation. Computing technology was first developed using mechanical systems and then evolved into the use of electronic devices. Other fields of modern physics provide additional avenues for development. Models of Computation A model of computation describes how the output of a mathematical function is computed given its input. The model describes how units of computations, memories, and communications are organized. The computational complexity of an algorithm can be measured given a model of computation. Using a model allows studying the performance of algorit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Field-programmable Gate Array

A field-programmable gate array (FPGA) is a type of configurable integrated circuit that can be repeatedly programmed after manufacturing. FPGAs are a subset of logic devices referred to as programmable logic devices (PLDs). They consist of an array of programmable logic device, programmable logic block, logic blocks with a connecting grid, that can be configured "in the field" to interconnect with other logic blocks to perform various digital functions. FPGAs are often used in limited (low) quantity production of custom-made products, and in research and development, where the higher cost of individual FPGAs is not as important, and where creating and manufacturing a custom circuit would not be feasible. Other applications for FPGAs include the telecommunications, automotive, aerospace, and industrial sectors, which benefit from their flexibility, high signal processing speed, and parallel processing abilities. A FPGA configuration is generally written using a hardware descr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Belief Propagation

Belief propagation, also known as sum–product message passing, is a message-passing algorithm for performing inference on graphical models, such as Bayesian networks and Markov random fields. It calculates the marginal distribution for each unobserved node (or variable), conditional on any observed nodes (or variables). Belief propagation is commonly used in artificial intelligence and information theory, and has demonstrated empirical success in numerous applications, including low-density parity-check codes, turbo codes, free energy approximation, and satisfiability. The algorithm was first proposed by Judea Pearl in 1982, who formulated it as an exact inference algorithm on trees, later extended to polytrees. While the algorithm is not exact on general graphs, it has been shown to be a useful approximate algorithm. Motivation Given a finite set of discrete random variables X_1, \ldots, X_n with joint probability mass function p, a common task is to compute ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Low-density Parity-check Code

Low-density parity-check (LDPC) codes are a class of error correction codes which (together with the closely-related turbo codes) have gained prominence in coding theory and information theory since the late 1990s. The codes today are widely used in applications ranging from wireless communications to flash-memory storage. Together with turbo codes, they sparked a revolution in coding theory, achieving order-of-magnitude improvements in performance compared to traditional error correction codes. Central to the performance of LDPC codes is their adaptability to the iterative belief propagation decoding algorithm. Under this algorithm, they can be designed to approach theoretical limits (Channel capacity, capacities) of many channels at low computation costs. Theoretically, analysis of LDPC codes focuses on sequences of codes of fixed code rate and increasing block length. These sequences are typically tailored to a set of channels. For appropriately designed sequences, the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pseudorandom Number Generator

A pseudorandom number generator (PRNG), also known as a deterministic random bit generator (DRBG), is an algorithm for generating a sequence of numbers whose properties approximate the properties of sequences of random number generation, random numbers. The PRNG-generated sequence is not truly random, because it is completely determined by an initial value, called the PRNG's ''random seed, seed'' (which may include truly random values). Although sequences that are closer to truly random can be generated using hardware random number generators, ''pseudorandom number generators'' are important in practice for their speed in number generation and their reproducibility. PRNGs are central in applications such as simulations (e.g. for the Monte Carlo method), electronic games (e.g. for procedural generation), and cryptography. Cryptographic applications require the output not to be predictable from earlier outputs, and more cryptographically-secure pseudorandom number generator, elabora ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Arithmetic Logic Unit

In computing, an arithmetic logic unit (ALU) is a Combinational logic, combinational digital circuit that performs arithmetic and bitwise operations on integer binary numbers. This is in contrast to a floating-point unit (FPU), which operates on floating point numbers. It is a fundamental building block of many types of computing circuits, including the central processing unit (CPU) of computers, FPUs, and graphics processing units (GPUs). The inputs to an ALU are the data to be operated on, called operands, and a code indicating the operation to be performed (opcode); the ALU's output is the result of the performed operation. In many designs, the ALU also has status inputs or outputs, or both, which convey information about a previous operation or the current operation, respectively, between the ALU and external status registers. Signals An ALU has a variety of input and output net (electronics), nets, which are the electrical conductors used to convey Digital signal (electroni ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Least Significant Bit

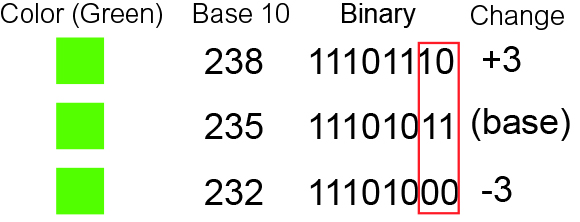

In computing, bit numbering is the convention used to identify the bit positions in a binary number. Bit significance and indexing In computing, the least significant bit (LSb) is the bit position in a binary integer representing the lowest-order place of the integer. Similarly, the most significant bit (MSb) represents the highest-order place of the binary integer. The LSb is sometimes referred to as the ''low-order bit''. Due to the convention in positional notation of writing less significant digits further to the right, the LSb also might be referred to as the ''right-most bit''. The MSb is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSb and MSb correlate directly to the least significant digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit index is not affected by how the value is stored on the device, such as the value's byte ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Most Significant Bit

In computing, bit numbering is the convention used to identify the bit positions in a binary numeral system, binary number. Bit significance and indexing In computing, the least significant bit (LSb) is the bit position in a Binary numeral system, binary Integer (computer science), integer representing the lowest-order place of the integer. Similarly, the most significant bit (MSb) represents the highest-order place of the binary integer. The LSb is sometimes referred to as the ''low-order bit''. Due to the convention in positional notation of writing less significant digits further to the right, the LSb also might be referred to as the ''right-most bit''. The MSb is similarly referred to as the ''high-order bit'' or ''left-most bit''. In both cases, the LSb and MSb correlate directly to the least significant Numerical digit, digit and most significant digit of a decimal integer. Bit indexing correlates to the positional notation of the value in base 2. For this reason, bit in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Adder (electronics)

An adder, or summer, is a digital circuit that performs addition of numbers. In many computers and other kinds of microprocessor, processors, adders are used in the arithmetic logic units (ALUs). They are also used in other parts of the processor, where they are used to calculate address space, addresses, database index, table indices, increment and decrement operators and similar operations. Although adders can be constructed for many number representations, such as binary-coded decimal or excess-3, the most common adders operate on binary numbers. In cases where two's complement or ones' complement is being used to represent negative numbers, it is trivial to modify an adder into an adder–subtractor. Other signed number representations require more logic around the basic adder. History George Stibitz invented the 2-bit binary adder (the Model K (calculator), Model K) in 1937. Binary adders Half adder The half adder adds two single binary digits A and B. It has two outputs, s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |