Steele (supercomputer)

Steele is a supercomputer that was installed at Purdue University on May 5, 2008. The high-performance computing cluster is operated by Information Technology at Purdue (ITaP), the university's central information technology organization. ITaP also operates clusters named Coates, built in 2009; Rossmann, built in 2010; and Hansen and Carter, built in 2011. When built, Steele was the largest campus supercomputer in the Big Ten outside a national center. It ranked 104th on the November 2008 TOP500 Supercomputer Sites list. Hardware: Steele consisted of 893 64-bit, 8-core Dell PowerEdge 1950 systems and nine 64-bit, 8-core Dell PowerEdge 2950 systems, with various combinations of 16 to 32 gigabytes of RAM, 160 GB to 2 terabytes of disk, and Gigabit Ethernet and SDR InfiniBand to each node. The cluster had a theoretical peak performance of more than 60 teraflops. Steele and its 7,216 cores replaced Purdue's Lear cluster supercomputer, which had 1,024 cores but was substantially slower. Steele is prim ...
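The core count and peak figure above can be cross-checked with simple arithmetic. The Python sketch below is illustrative only: it uses just the node counts and the "more than 60 teraflops" figure from the text, so the per-core value it prints is a rough lower bound, not a published specification.

```python
# Figures taken from the Steele description; everything derived is approximate.
POWEREDGE_1950_NODES = 893
POWEREDGE_2950_NODES = 9
CORES_PER_NODE = 8   # all systems are 8-core
LEAR_CORES = 1_024   # the cluster Steele replaced
PEAK_FLOPS = 60e12   # "more than 60 teraflops", treated here as a lower bound

total_cores = (POWEREDGE_1950_NODES + POWEREDGE_2950_NODES) * CORES_PER_NODE
per_core_gflops = PEAK_FLOPS / total_cores / 1e9

print(total_cores)                                          # prints 7216
print(f"{per_core_gflops:.1f} GFLOPS per core (at least)")  # prints 8.3 GFLOPS per core (at least)
print(f"{total_cores / LEAR_CORES:.1f}x Lear's core count")  # prints 7.0x Lear's core count
```

The 902 systems at 8 cores each reproduce the 7,216 cores quoted above, roughly seven times Lear's 1,024.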

Supercomputer

A supercomputer is a computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have been supercomputers that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's 500 fastest supercomputers have run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers. Supercomputers play an important role in the field of computational science and are used for a wide range of computationally intensive tasks in var ...
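The powers of ten quoted above map onto SI prefixes in steps of 1,000 (giga = 10⁹, tera = 10¹², peta = 10¹⁵, exa = 10¹⁸). A minimal Python sketch of that conversion follows; the function name `format_flops` is hypothetical, and the prefix table stops at exa, the scale targeted by the exascale efforts mentioned above.

```python
# SI prefix symbols for successive powers of 1,000 (10^0, 10^3, ..., 10^18).
SI_SYMBOLS = ["", "k", "M", "G", "T", "P", "E"]


def format_flops(flops: float) -> str:
    """Render a FLOPS figure with the largest SI prefix that keeps the value >= 1.

    Values are assumed to be at least 1 FLOPS; anything beyond exa stays in EFLOPS.
    """
    if flops <= 0:
        raise ValueError("flops must be positive")
    index = 0
    value = flops
    # Step up one prefix per factor of 1,000 until the value drops below 1,000.
    while value >= 1000 and index < len(SI_SYMBOLS) - 1:
        value /= 1000
        index += 1
    return f"{value:g} {SI_SYMBOLS[index]}FLOPS"


print(format_flops(1e17))  # 100 PFLOPS
print(format_flops(1e11))  # 100 GFLOPS
print(format_flops(1e13))  # 10 TFLOPS
```

Repeated division is used instead of a logarithm so that exact powers of ten never land on the wrong side of a prefix boundary through floating-point rounding.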

HP Performance Optimized Datacenter

The HP Performance Optimized Datacenter (POD) is a range of three modular data centers manufactured by HP. Housed in purpose-built modules with a standard shipping-container form factor, either 20 feet or 40 feet long, the data centers are shipped preconfigured with racks, cabling, and equipment for power and cooling. They can support technologies from HP or third parties. The claimed capacity varies by model, up to the equivalent of 10,000 square feet of typical data center capacity. Depending on the model, they use either chilled water cooling or a combination of direct expansion air cooling. HP POD 20c and 40c: The POD 40c was launched in 2008. This 40-foot modular data center has a maximum power capacity of up to 27 kW per rack. The POD 40c supports 3,500 compute nodes or 12,000 LFF hard drives. HP has claimed this offers the computing equivalent of 4,000 square feet of traditional data center space. The POD 20c was launched in 2010. This modular data cent ...

Rossmann (supercomputer)

Rossmann is a supercomputer at Purdue University that went into production on September 1, 2010. The high-performance computing cluster is operated by Information Technology at Purdue (ITaP), the university's central information technology organization. ITaP also operates clusters named Steele, built in 2008; Coates, built in 2009; Hansen, built in the summer of 2011; and Carter, built in the fall of 2011 in partnership with Intel. Rossmann ranked 126th on the November 2010 TOP500 list. Hardware: The Rossmann cluster consists of HP ProLiant DL165 G7 compute nodes with dual 64-bit, 12-core AMD Opteron 6172 processors (24 cores per node), either 48 or 96 GB of memory, and 250 GB of local disk for system software and scratch storage. Nodes with 192 GB of memory and either 1 or 2 terabytes of local scratch disk are also available. Rossmann consists of five logical sub-clusters, each with a different memory and storage configuration. All nodes have 10 Gigabit Ethernet interconnects. Software ...
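Because every Rossmann node has the same 24 cores, the memory options listed above translate directly into memory per core, which is one way to compare the configurations. The short Python sketch below performs only that division, assuming a node's RAM is split evenly across its cores; the function name `memory_per_core_gb` is hypothetical.

```python
# Rossmann node: two 12-core AMD Opteron 6172 processors.
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 12
CORES_PER_NODE = SOCKETS_PER_NODE * CORES_PER_SOCKET  # 24


def memory_per_core_gb(node_memory_gb: int) -> float:
    """Memory per core if a node's RAM is split evenly across all of its cores."""
    return node_memory_gb / CORES_PER_NODE


for memory_gb in (48, 96, 192):  # node memory sizes listed in the text
    print(f"{memory_gb} GB node -> {memory_per_core_gb(memory_gb):g} GB per core")
# 48 GB node -> 2 GB per core
# 96 GB node -> 4 GB per core
# 192 GB node -> 8 GB per core
```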

DiaGrid (Distributed Computing Network)

DiaGrid is a large, multicampus distributed research computing network that uses the HTCondor system and is centered at Purdue University in West Lafayette, Indiana. In 2012, it included nearly 43,000 processors representing 301 teraflops of computing power. DiaGrid received a Campus Technology Innovators Award from Campus Technology magazine and an IDG InfoWorld 100 Award in 2009, and it was used at the SC09 supercomputing conference in Portland, Ore., to capture nearly 150 days of compute time for science jobs. Partners: DiaGrid is a partnership among Purdue, Indiana University, Indiana State University, the University of Notre Dame, the University of Louisville, the University of Nebraska, the University of Wisconsin, Purdue's Calumet and North Central campuses, and Indiana University-Purdue University Fort Wayne. It is designed to accommodate computers at other campuses as new members join. The Purdue portion of the pool, named BoilerGrid, is the largest academic system of its kind. ...

Condor High-Throughput Computing System

HTCondor is an open-source high-throughput computing software framework for coarse-grained distributed parallelization of computationally intensive tasks. It can be used to manage workload on a dedicated cluster of computers, or to farm out work to idle desktop computers, a practice known as cycle scavenging or CPU scavenging. HTCondor runs on Linux, Unix, Mac OS X, FreeBSD, and Microsoft Windows operating systems. It can integrate both dedicated resources (rack-mounted clusters) and non-dedicated desktop machines (cycle scavenging) into one computing environment. HTCondor is developed by the HTCondor team at the University of Wisconsin–Madison and is freely available for use. It follows an open-source philosophy and is licensed under the Apache License 2.0. While HTCondor makes use of unused computing time, leaving computers turned on for use with HTCondor will increase energy consumption and associated costs. Star ...
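The idea of combining dedicated nodes with desktops that are only borrowed while idle can be illustrated with a toy scheduler. This is a hypothetical Python model, not HTCondor's API or its actual matchmaking algorithm: the `Machine` class, the `owner_active` flag, and the `schedule` and `owner_returns` functions are invented for illustration. In the sketch, a dedicated node accepts work whenever it is free, while a desktop accepts work only when its owner is away and hands the job back to the queue when the owner returns.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Optional


@dataclass
class Machine:
    name: str
    dedicated: bool             # True for a rack-mounted cluster node
    owner_active: bool = False  # hypothetical flag: a desktop's owner is using it
    job: Optional[str] = None   # guest job currently running, if any

    def available(self) -> bool:
        # Dedicated nodes take work whenever free; desktops only while idle.
        return self.job is None and (self.dedicated or not self.owner_active)


def schedule(queue: Deque[str], machines: List[Machine]) -> Dict[str, str]:
    """Pop jobs from the queue onto available machines; return job -> machine name."""
    placed: Dict[str, str] = {}
    for machine in machines:
        if not queue:
            break
        if machine.available():
            job = queue.popleft()
            machine.job = job
            placed[job] = machine.name
    return placed


def owner_returns(machine: Machine, queue: Deque[str]) -> None:
    """Desktop owner is back: vacate any guest job and put it at the front of the queue."""
    machine.owner_active = True
    if not machine.dedicated and machine.job is not None:
        queue.appendleft(machine.job)
        machine.job = None


# Usage: one cluster node, one idle desktop, one desktop whose owner is present.
jobs = deque(["job0", "job1", "job2", "job3"])
pool = [
    Machine("node01", dedicated=True),
    Machine("desk01", dedicated=False),
    Machine("desk02", dedicated=False, owner_active=True),
]
print(schedule(jobs, pool))  # {'job0': 'node01', 'job1': 'desk01'}
owner_returns(pool[1], jobs)
print(list(jobs))            # ['job1', 'job2', 'job3']
```

Requeuing a vacated job at the front keeps it from waiting behind work that was submitted later. The real system expresses such policies through its own configuration and matchmaking, which this sketch does not attempt to reproduce.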

Large Hadron Collider

The Large Hadron Collider (LHC) is the world's largest and highest-energy particle collider. It was built by the European Organization for Nuclear Research (CERN) between 1998 and 2008 in collaboration with over 10,000 scientists and hundreds of universities and laboratories, as well as more than 100 countries. It lies in a tunnel 27 kilometres in circumference and as deep as 175 metres beneath the France–Switzerland border near Geneva. The first collisions were achieved in 2010 at an energy of 3.5 teraelectronvolts (TeV) per beam, about four times the previous world record. After upgrades, it reached 6.5 TeV per beam (13 TeV total collision energy). At the end of 2018, it was shut down for three years for further upgrades. The collider has four crossing points where the accelerated particles collide. Seven detectors, each designed to detect different phenomena, are positioned around the crossing points. The LHC primarily collides proton beams, but it can also accelerate beams of heavy ion ...
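The "13 TeV total collision energy" follows from the centre-of-mass energy of two beams meeting head-on. In natural units (c = 1), s = (E₁ + E₂)² − |p₁ + p₂|²; for two equal beams travelling in opposite directions the momenta cancel, so √s = 2E_beam. The Python sketch below is illustrative; the proton rest energy of about 0.938 GeV is an added constant, not a figure from the text, and it does not affect the symmetric case.

```python
import math

PROTON_REST_ENERGY_GEV = 0.938272  # m_p c^2, added for illustration


def momentum_gev(energy_gev: float, rest_energy_gev: float = PROTON_REST_ENERGY_GEV) -> float:
    """|p| from E^2 = p^2 + m^2 in natural units (c = 1)."""
    return math.sqrt(energy_gev ** 2 - rest_energy_gev ** 2)


def centre_of_mass_energy_gev(e1_gev: float, e2_gev: float) -> float:
    """sqrt(s) for two proton beams colliding head-on along a single axis."""
    # The beams travel in opposite directions, so their momenta subtract.
    p_total = momentum_gev(e1_gev) - momentum_gev(e2_gev)
    s = (e1_gev + e2_gev) ** 2 - p_total ** 2
    return math.sqrt(s)


print(centre_of_mass_energy_gev(3500, 3500))  # 7000.0  (3.5 TeV per beam)
print(centre_of_mass_energy_gev(6500, 6500))  # 13000.0 (6.5 TeV per beam)
```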

Compact Muon Solenoid

The Compact Muon Solenoid (CMS) experiment is one of two large general-purpose particle physics detectors built on the Large Hadron Collider (LHC) at CERN in Switzerland and France. The goal of the CMS experiment is to investigate a wide range of physics, including the search for the Higgs boson, extra dimensions, and particles that could make up dark matter. CMS is 21 metres long and 15 m in diameter, and weighs about 14,000 tonnes. Over 4,000 people, representing 206 scientific institutes and 47 countries, form the CMS collaboration, which built and now operates the detector. It is located in a cavern at Cessy in France, just across the border from Geneva. In July 2012, along with ATLAS, CMS tentatively discovered the Higgs boson. By March 2013, its existence was confirmed. Background: Recent collider experiments such as the now-dismantled Large Electron-Positron Collider and the newly renovated Large Hadron Collider (LHC) at CERN, as well as the recently closed ...

Effects Of Global Warming

The effects of climate change impact the physical environment, ecosystems and human societies. The environmental effects of climate change are broad and far-reaching. They affect the water cycle, oceans, sea and land ice (glaciers), sea level, as well as weather and climate extreme events. The changes in climate are not uniform across the Earth. In particular, most land areas have warmed faster than most ocean areas, and the Arctic is warming faster than most other regions. The regional changes vary: at high latitudes it is the average temperature that is increasing, while for the oceans and tropics the changes are observed in particular in rainfall and the water cycle. The magnitude of future impacts of climate change can be reduced by climate change mitigation and adaptation. Climate change has l ...

Open Science Grid Consortium

The Open Science Grid Consortium is an organization that administers a worldwide grid of technological resources called the Open Science Grid, which facilitates distributed computing for scientific research. Founded in 2004, the consortium is composed of service and resource providers, researchers from universities and national laboratories, as well as computing centers across the United States. Members independently own and manage the resources which make up the distributed facility, and consortium agreements provide the framework for technological and organizational integration. Use: The OSG is used by scientists and researchers for data analysis tasks which are too computationally intensive for a single data center or supercomputer. While most of the grid's resources are used for particle physics, research teams from disciplines like biology, chemistry, astronomy, and geographic information systems are currently using the grid to analyze data. Research using the grid's resource ...

Extreme Science And Engineering Discovery Environment

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011. TeraGrid integrated high-performance computers, data resources and tools, and experimental facilities. Resources included more than a petaflops of computing capability and more than 30 petabytes of online and archival data storage, with rapid access and retrieval over high-performance computer network connections. Researchers could also access more than 100 discipline-specific databases. TeraGrid was coordinated through the Grid Infrastructure Group (GIG) at the University of Chicago, working in partnership with the resource provider sites in the United States. History: The US National Science Foundation (NSF) issued a solicitation, from program director Richard L. Hilderbrandt, asking for a "distributed terascale facility". The TeraGrid project was launched in August 2001 with $53 million in funding to four sites: ...

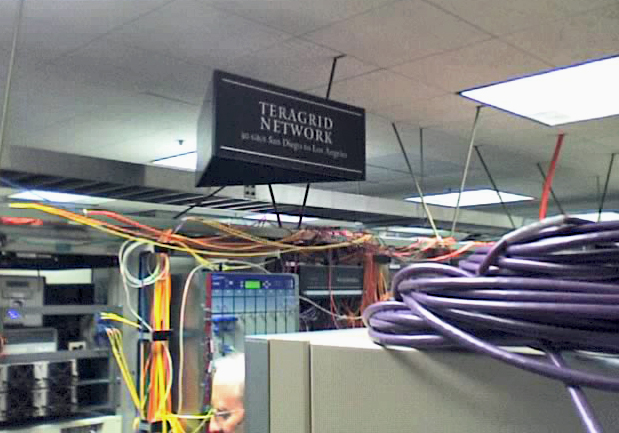

National Science Foundation

The National Science Foundation (NSF) is an independent agency of the United States government that supports fundamental research and education in all the non-medical fields of science and engineering. Its medical counterpart is the National Institutes of Health. With an annual budget of about $8.3 billion (fiscal year 2020), the NSF funds approximately 25% of all federally supported basic research conducted by the United States' colleges and universities. In some fields, such as mathematics, computer science, economics, and the social sciences, the NSF is the major source of federal backing. The NSF's director and deputy director are appointed by the President of the United States and confirmed by the United States Senate, whereas the 24 president-appointed members of the National Science Board (NSB) do not require Senate confirmation. The director and deputy director are responsible for administration, planning, budgeting and day-to-day operations of the foundation, while t ...