
Spoken Dialog Systems

A spoken dialog system (SDS) is a computer system able to converse with a human by voice. It has two essential components that do not exist in a written text dialog system: a speech recognizer and a text-to-speech module (written text dialog systems usually use other input systems provided by an OS). It can be further distinguished from command and control speech systems, which can respond to requests but do not attempt to maintain continuity over time.

Components

* An automatic speech recognizer (ASR) decodes speech into text. Domain-specific recognizers can be configured for language designed for a given application. A "cloud" recognizer will be suitable for domains that do not depend on very specific vocabularies.
* Natural language understanding transforms a recognition into a concept structure that can drive system behavior. Some approaches will combine recognition and understanding processing but are thought to be less flexible since interpretation has to be coded into the gr ...
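The components described above can be pictured as a small pipeline. The following is a minimal sketch, not a real recognizer or any particular system's API: `recognize`, `understand`, `Concept`, `DialogState`, and the `CITIES` vocabulary are all hypothetical names invented for illustration, and the "audio" is simply UTF-8 text standing in for speech.

```python
from dataclasses import dataclass, field

# Hypothetical domain vocabulary; a real system would use a far richer model.
CITIES = ("paris", "rome", "turin")


@dataclass
class Concept:
    """Illustrative concept structure produced by the understanding step."""
    intent: str
    slots: dict = field(default_factory=dict)


def recognize(audio: bytes) -> str:
    """Stand-in for an ASR component: the 'audio' here is just UTF-8 text."""
    return audio.decode("utf-8").lower().strip()


def understand(text: str) -> Concept:
    """Toy rule-based understanding: map recognized text to intent and slots."""
    words = text.split()
    destination = next((w for w in words if w in CITIES), None)
    slots = {"destination": destination} if destination else {}
    if "flight" in words or "fly" in words:
        return Concept("book_flight", slots)
    if slots:
        return Concept("inform", slots)
    return Concept("unknown")


class DialogState:
    """Keeps intent and slots across turns, giving the dialog continuity."""

    def __init__(self):
        self.intent = None
        self.slots = {}

    def update(self, concept: Concept) -> str:
        # Only a task intent replaces the current one; 'inform' just adds slots.
        if concept.intent not in ("inform", "unknown"):
            self.intent = concept.intent
        self.slots.update(concept.slots)
        if self.intent != "book_flight":
            return "Sorry, I did not understand."
        if "destination" not in self.slots:
            return "Where would you like to fly to?"
        return f"Booking a flight to {self.slots['destination']}."


def respond(state: DialogState, audio: bytes) -> str:
    """One dialog turn: recognition, then understanding, then state update."""
    return state.update(understand(recognize(audio)))
```

Because the state object persists between calls to `respond`, a second utterance such as "Paris please" can complete a request started in an earlier turn, which is the continuity that separates a dialog system from a command and control system.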

Dialog System

A dialogue system, or conversational agent (CA), is a computer system intended to converse with a human. Dialogue systems employ one or more of text, speech, graphics, haptics, gestures, and other modes for communication on both the input and output channels. The elements of a dialogue system are not precisely defined, because the idea is still under research; however, a dialogue system is distinct from a chatbot. The typical GUI wizard engages in a sort of dialogue, but it includes very few of the common dialogue system components, and the dialogue state is trivial.

Background

After dialogue systems based only on written text processing, which date from the early 1960s, the first speaking dialogue system was produced by the DARPA Project in the USA in 1977. After the end of this 5-year project, some European projects produced the first dialogue system able to speak many languages (including French, German and Italian). Alberto Ciaramella, A prototype performance evaluation report, Sundial work package 8000 ( ...

CSELT

Centro Studi e Laboratori Telecomunicazioni (CSELT) was an Italian research center for telecommunications based in Torino, the biggest in Italy and one of the most important in Europe. It played a major role internationally, especially in the standardization of protocols and technologies in telecommunications: perhaps the best known is the standardization of mp3. CSELT was active from 1964 to 2001, initially as part of the Istituto per la Ricostruzione Industriale-STET – Società Finanziaria Telefonica group, the major conglomerate of Italian public industries in the 1960s and 1970s; it later became part of Telecom Italia Group. In 2001 it was renamed TILAB as part of Telecom Italia Group.

Research areas

Transmission technology and fiber optics

CSELT became internationally known at the end of the 1960s thanks to a cooperation with the US-based company COMSAT for a pilot project of a TDMA (and PCM) satellite communication system. Furthermore, in 1971 it started a jo ...

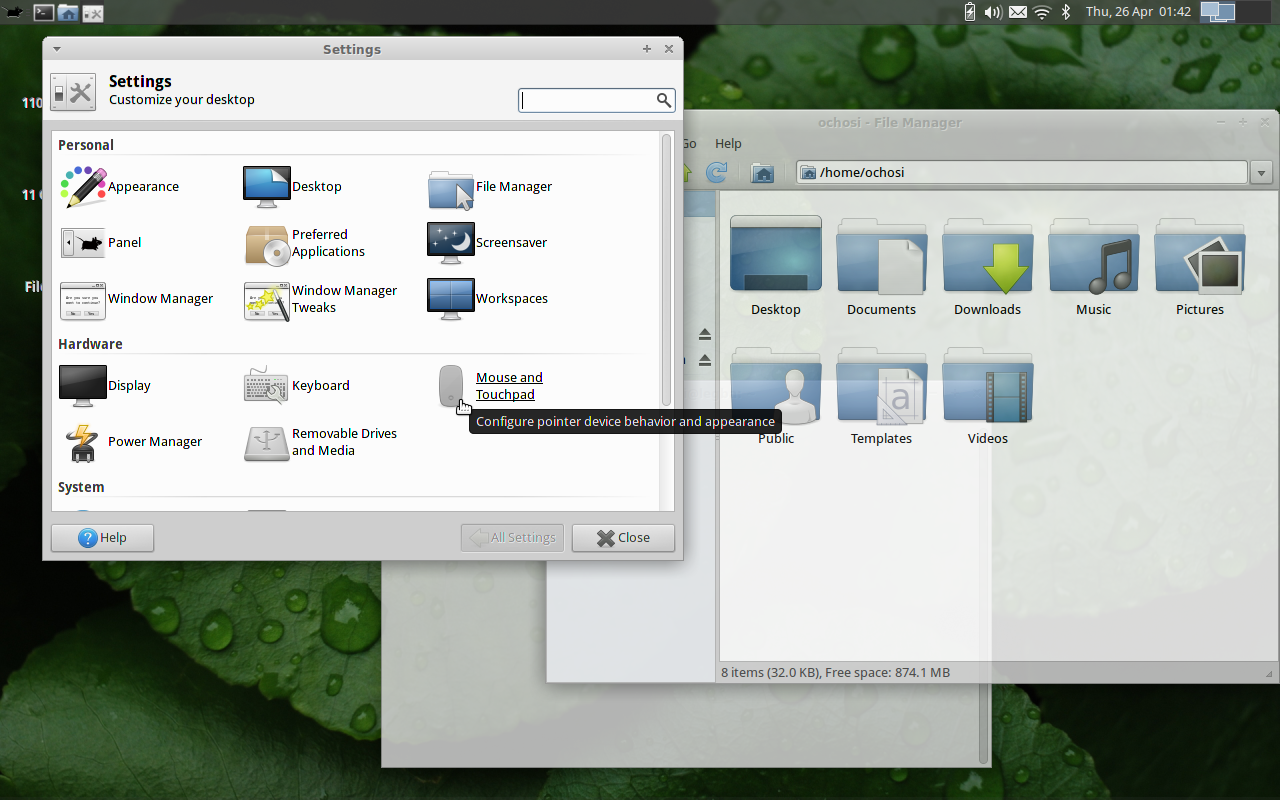

User Interfaces

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology. Generally, the goal of user interface design is to produce a user interface that makes it easy, efficient, and enjoyable (user-friendly) to operate a machine in the way which produces the desired result (i.e. maximum usability). This generally means that the operator needs to provide minimal input t ...

Multimodal Interaction

Multimodal interaction provides the user with multiple modes of interacting with a system. A multimodal interface provides several distinct tools for input and output of data.

Introduction

Multimodal human-computer interaction refers to the "interaction with the virtual and physical environment through natural modes of communication". This implies that multimodal interaction enables freer and more natural communication, interfacing users with automated systems in both input and output. Specifically, multimodal systems can offer a flexible, efficient and usable environment allowing users to interact through input modalities, such as speech, handwriting, hand gesture and gaze, and to receive information from the system through output modalities, such as speech synthesis, smart graphics and other modalities, suitably combined. A multimodal system then has to recognize the inputs from the different modalities, combining them according to temporal and contextual constraints Casche ...

Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers with the main benefit of searchability. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker dependent". Speech recognition ...

AVIOS

International Consolidated Airlines Group S.A., trading as International Airlines Group and usually shortened to IAG, is an Anglo-Spanish multinational airline holding company with its registered office in Madrid, Spain, and its global headquarters in London, England. It was formed in January 2011 after a merger agreement between British Airways and Iberia, the flag carriers of the United Kingdom and Spain respectively, when British Airways and Iberia became wholly owned subsidiaries of IAG. British Airways shareholders were given 55% of the shares in the new company. Since its creation, IAG has expanded its portfolio of operations and brands by purchasing other airlines – BMI (2011), Vueling (2012) and Aer Lingus (2015). The Group also owns the Level brand and Avios, the IAG rewards programme. The company is listed on the London Stock Exchange and the Madrid Stock Exchange. It is a constituent of the FTSE 100 Index and IBEX 35 Index.

History

Creation of IAG as BA/Iberi ...

AT&T

AT&T Inc. is an American multinational telecommunications holding company headquartered at Whitacre Tower in Downtown Dallas, Texas. It is the world's largest telecommunications company by revenue and the third largest provider of mobile telephone services in the U.S. AT&T was ranked 13th on the Fortune 500 rankings of the largest United States corporations, with revenues of $168.8 billion. During most of the 20th century, AT&T had a monopoly on phone service in the United States. The company began its history as the American District Telegraph Company, formed in St. Louis in 1878. After expanding services to Arkansas, Kansas, Oklahoma and Texas, through a series of mergers, it became Southwestern Bell Telephone Company in 1920, which was then a subsidiary of American Telephone and Telegraph Company. The latter was a successor of the original Bell Telephone Company founded by Alexander Graham Bell in 1877. The American Bell Telephone Company formed the American Teleph ...

Speech Recognizer

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers with the main benefit of searchability. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker dependent". Speech recognition appl ...

Text-to-speech

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition. Synthesized speech can be created by concatenating pieces of recorded speech that are stored in a database. Systems differ in the size of the stored speech units; a system that stores phones or diphones provides the largest output range, but may lack clarity. For specific usage domains, the storage of entire words or sentences allows for high-quality output. Alternatively, a synthesizer can incorporate a model of the vocal tract and other human voice characteristics to create a completely "synthetic" voice output. The quality of a speech synthesizer is judged by its similarity to ...
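The concatenative approach described above can be illustrated with a toy example. This is only a sketch under stated assumptions: the unit database is a plain dictionary mapping hypothetical diphone labels to short lists of sample values, `"_"` is an invented marker for silence, and the function names `to_diphones` and `synthesize` are made up for illustration. A practical synthesizer would typically also smooth the joins between units, which is omitted here.

```python
def to_diphones(phones):
    """Split a phone sequence into diphone labels, padded with silence ('_')."""
    padded = ["_"] + list(phones) + ["_"]
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]


def synthesize(phones, unit_db):
    """Concatenate the stored samples of each diphone, in order."""
    samples = []
    for unit in to_diphones(phones):
        if unit not in unit_db:
            # Small units cover many utterances, but only if every unit exists.
            raise KeyError(f"no recording for unit {unit!r}")
        samples.extend(unit_db[unit])
    return samples


# Hypothetical database: each diphone maps to a few made-up sample values.
unit_db = {
    "_-h": [0.0, 0.1],
    "h-i": [0.2],
    "i-_": [0.3, 0.0],
}
waveform = synthesize(["h", "i"], unit_db)
```

Storing whole words or sentences instead of diphones would amount to using larger keys in `unit_db`: fewer joins per utterance, at the cost of covering far fewer possible utterances.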