
Software Brittleness

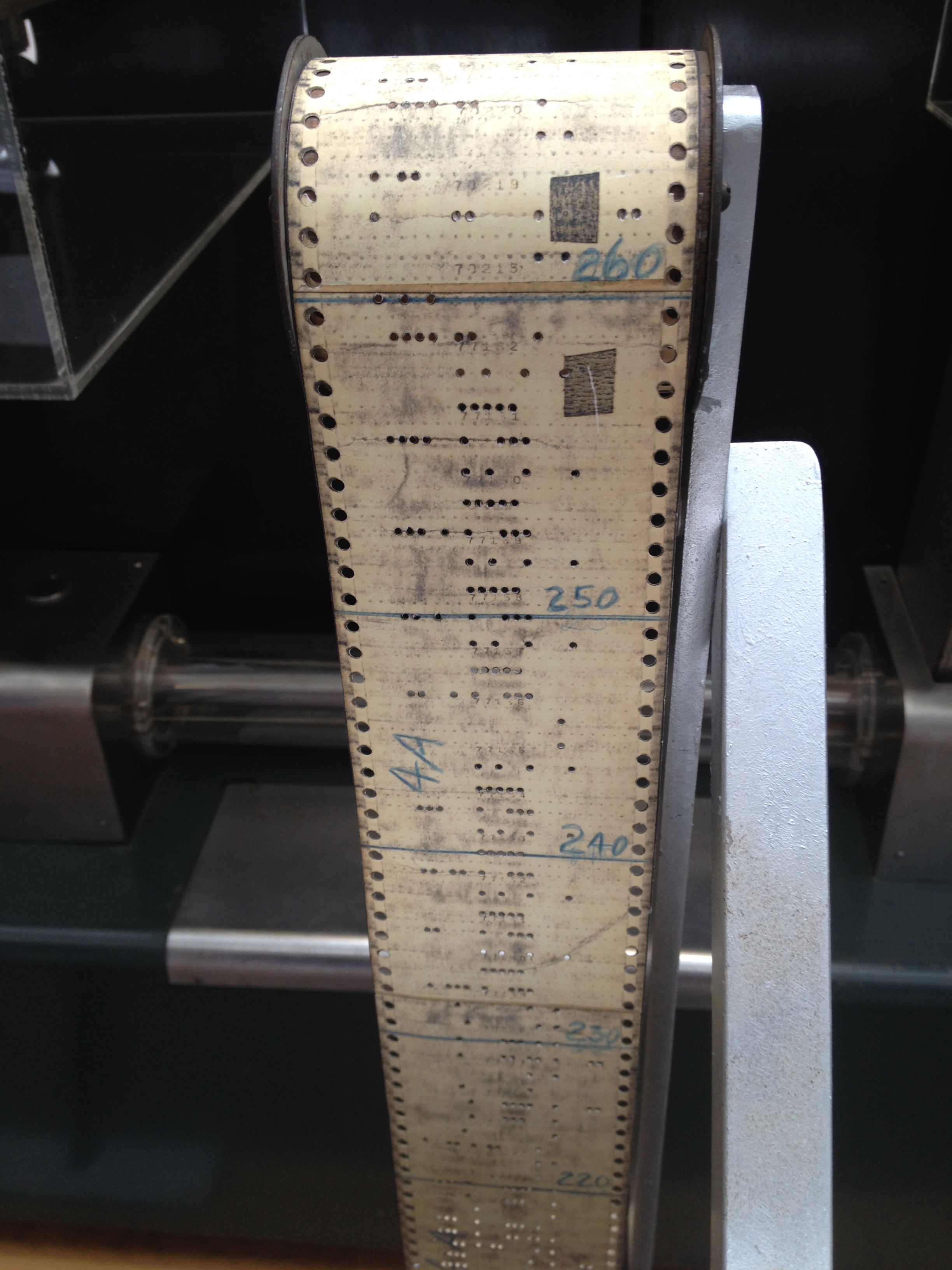

In computer programming and software engineering, software brittleness is the increased difficulty in fixing older software that may appear reliable, but actually fails badly when presented with unusual data or altered in a seemingly minor way. The phrase is derived from analogies to brittleness in metalworking.

Causes

When software is new, it is very malleable; it can be formed to be whatever is wanted by the implementers. But as the software in a given project grows larger and larger, and develops a larger base of users with long experience with the software, it becomes less and less malleable. Like a metal that has been work-hardened, the software becomes a legacy system, brittle and unable to be easily maintained without fracturing the entire system. Brittleness in software can be caused by algorithms that do not work well for the full range of input data. A good example is an algorithm that allows a divide by zero to occur, or a curve-fitting equation that is used to extrap ...
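The divide-by-zero case mentioned above can be sketched in a few lines. This is an illustrative sketch only: the function names `brittle_mean` and `robust_mean` are hypothetical and do not come from the source.

```python
from typing import Optional, Sequence


def brittle_mean(values: Sequence[float]) -> float:
    # Works for typical input, but an empty sequence makes len(values) zero
    # and the division raises ZeroDivisionError.
    return sum(values) / len(values)


def robust_mean(values: Sequence[float]) -> Optional[float]:
    # Handles the unusual (empty) input explicitly instead of failing.
    if not values:
        return None
    return sum(values) / len(values)
```

Both functions agree on ordinary input; they differ only on the edge case, which is where brittle code tends to reveal itself.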

Computer Programming

Computer programming is the process of performing a particular computation (or more generally, accomplishing a specific computing result), usually by designing and building an executable computer program. Programming involves tasks such as analysis, generating algorithms, profiling algorithms' accuracy and resource consumption, and the implementation of algorithms (usually in a chosen programming language, commonly referred to as coding). The source code of a program is written in one or more languages that are intelligible to programmers, rather than machine code, which is directly executed by the central processing unit. The purpose of programming is to find a sequence of instructions that will automate the performance of a task (which can be as complex as an operating system) on a computer, often for solving a given problem. Proficient programming thus usually requires expertise in several different subjects, including knowledge of the application domain, specialized algori ...

Display Device

A display device is an output device for presentation of information in visual or tactile form (the latter used, for example, in tactile electronic displays for blind people). When the input information is supplied as an electrical signal, the display is called an *electronic display*. Common applications for *electronic visual displays* are television sets or computer monitors.

Types of electronic displays

In use

These are the technologies used to create the various displays in use today.

* Liquid crystal display (LCD)
  * Light-emitting diode (LED) backlit LCD
  * Thin-film transistor (TFT) LCD
  * Quantum dot (QLED) display
* Light-emitting diode (LED) display
  * OLED display
  * AMOLED display
  * Super AMOLED display

Segment displays

Some displays can show only digits or alphanumeric characters. They are called segment displays, because they are composed of several segments that switch on and off to give the appearance of the desired glyph. The segments are us ...

Brittle System

Brittle systems theory creates an analogy between communication theory and mechanical systems. A brittle system is a system characterized by a sudden and steep decline in performance as the system state changes. This can be due to input parameters that exceed a specified input, or environmental conditions that exceed specified operating boundaries. This is the opposite of a gracefully degrading system. Brittle system analysis develops an analogy with materials science in order to analyze system brittleness (Stephen F. Bush, John Hershey and Kirby Vosburgh, Brittle System Analysis). A system that is brittle (but initially robust enough to gain at least some foothold in the marketplace) will tend to operate with acceptable performance until it reaches a limit and then degrade suddenly and catastrophically. The table below illustrates the concept behind the analysis using an example of a communication system.

See also

* Reliability engineering
* Catastrophe theory

In mathematic ...
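The contrast between a brittle system and a gracefully degrading one can be illustrated with a toy model. The functions, the load limit of 100, and the linear fall-off are all assumptions chosen for illustration; they are not taken from the brittle system analysis cited above.

```python
def brittle_performance(load: float, limit: float = 100.0) -> float:
    # Full performance up to the limit, then a sudden collapse to zero.
    return 1.0 if load <= limit else 0.0


def graceful_performance(load: float, limit: float = 100.0) -> float:
    # Full performance up to the limit, then a gradual (here linear) decline
    # that reaches zero only at twice the limit.
    if load <= limit:
        return 1.0
    return max(0.0, 1.0 - (load - limit) / limit)
```

Just past the limit, the brittle model drops from 1.0 to 0.0, while the graceful model loses performance in proportion to the overload.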

Systems Development Life Cycle

In systems engineering, information systems and software engineering, the systems development life cycle (SDLC), also referred to as the application development life cycle, is a process for planning, creating, testing, and deploying an information system. The systems development life cycle concept applies to a range of hardware and software configurations, as a system can be composed of hardware only, software only, or a combination of both. There are usually six stages in this cycle: requirement analysis, design, development and testing, implementation, documentation, and evaluation.

Overview

A systems development life cycle is composed of a number of clearly defined and distinct work phases which are used by systems engineers and systems developers to plan for, design, build, test, and deliver information systems. Like anything that is manufactured on an assembly line, an SDLC aims to produce high-quality systems that meet or exceed customer expectations, based on customer ...

Run Time (program Lifecycle Phase)

In computer science, runtime, run time, or execution time is the final phase of a computer program's life cycle, in which the code is being executed on the computer's central processing unit (CPU) as machine code. In other words, "runtime" is the running phase of a program. A runtime error is detected after or during the execution (running state) of a program, whereas a compile-time error is detected by the compiler before the program is ever executed. Type checking, register allocation, code generation, and code optimization are typically done at compile time, but may be done at runtime depending on the particular language and compiler. Many other runtime errors exist and are handled differently by different programming languages, such as division by zero errors, domain errors, array subscript out of bounds errors, arithmetic underflow errors, several types of underflow and overflow errors, and many other runtime errors generally considered as software bugs which may or may ...
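A short Python sketch can illustrate the distinction. Python is interpreted, so the built-in `compile()` step is used here only as a rough stand-in for compile time; the helper names `rejected_before_execution` and `classify_runtime_error` are hypothetical.

```python
import math
from typing import Callable


def rejected_before_execution(source: str) -> bool:
    # Malformed source is rejected by compile() before any of it runs.
    try:
        compile(source, "<demo>", "exec")
    except SyntaxError:
        return True
    return False


def classify_runtime_error(operation: Callable[[], object]) -> str:
    # These errors surface only while the code is actually executing.
    try:
        operation()
    except ZeroDivisionError:
        return "division by zero"
    except IndexError:
        return "subscript out of bounds"
    except OverflowError:
        return "overflow"
    return "no error"
```

For instance, the source text `1 / 0` compiles without complaint and fails only when executed, whereas `x = (` never reaches execution at all.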

Coupling (computer Programming)

In software engineering, coupling is the degree of interdependence between software modules; a measure of how closely connected two routines or modules are; the strength of the relationships between modules. Coupling is usually contrasted with cohesion. Low coupling often correlates with high cohesion, and vice versa. Low coupling is often thought to be a sign of a well-structured computer system and a good design, and when combined with high cohesion, supports the general goals of high readability and maintainability.

History

The software quality metrics of coupling and cohesion were invented by Larry Constantine in the late 1960s as part of a structured design, based on characteristics of "good" programming practices that reduced maintenance and modification costs. Structured design, including cohesion and coupling, was published in the article *Stevens, Myers & Constantine* (1974) and the book *Yourdon & Constantine* (1979), and the latter subsequently became stan ...
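As a rough illustration of the difference between tight and loose coupling, consider the sketch below. All class names (`Storage`, `DictStorage`, `TightReport`, `LooseReport`) are hypothetical and exist only for this example.

```python
from typing import Dict, Protocol


class Storage(Protocol):
    # The only thing a report needs to know about its data source.
    def load(self, key: str) -> str: ...


class DictStorage:
    def __init__(self, data: Dict[str, str]) -> None:
        self._data = data

    def load(self, key: str) -> str:
        return self._data[key]


class TightReport:
    # Tightly coupled: builds its own concrete dependency, so changing
    # the storage mechanism means editing this class.
    def __init__(self) -> None:
        self._storage = DictStorage({"title": "q1"})

    def title(self) -> str:
        return self._storage.load("title").upper()


class LooseReport:
    # Loosely coupled: depends only on the Storage interface, so any
    # object with a matching load() method can be supplied.
    def __init__(self, storage: Storage) -> None:
        self._storage = storage

    def title(self) -> str:
        return self._storage.load("title").upper()
```

`LooseReport` can be exercised with a stub storage object in a test, while `TightReport` can only ever read from the dictionary it constructs itself.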

Dependency Hell

Dependency hell is a colloquial term for the frustration of some software users who have installed software packages which have dependencies on specific versions of other software packages. The dependency issue arises when several packages have dependencies on the same *shared* packages or libraries, but they depend on different and incompatible versions of the shared packages. If the shared package or library can only be installed in a single version, the user may need to address the problem by obtaining newer or older versions of the dependent packages. This, in turn, may break other dependencies and push the problem to another set of packages.

Problems

Dependency hell takes several forms:

* Many dependencies: An application depends on many libraries, requiring lengthy downloads, large amounts of disk space, and being very portable (all libraries are already ported enabling the application itself to be ported easily). It can also be difficult to locate all the dependencies ...
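The core conflict described above, several packages pinning different versions of one shared library, can be detected with a small check. This is a simplified sketch: the package names are invented, and it assumes exact version pins rather than the version ranges that real package managers resolve.

```python
from typing import Dict, Set


def find_conflicts(requirements: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    # Map each shared library to the set of versions demanded of it.
    wanted: Dict[str, Set[str]] = {}
    for deps in requirements.values():
        for shared, version in deps.items():
            wanted.setdefault(shared, set()).add(version)
    # A library is in conflict if more than one distinct version is required.
    return {lib: versions for lib, versions in wanted.items() if len(versions) > 1}


# Hypothetical installed packages and their pinned dependencies.
installed = {
    "app_a": {"libshared": "1.0"},
    "app_b": {"libshared": "2.0", "libother": "3.1"},
    "app_c": {"libother": "3.1"},
}
conflicts = find_conflicts(installed)
```

Here `libshared` is reported because `app_a` and `app_b` disagree on its version, while `libother` is not, since both of its dependents pin the same version.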

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The *Oxford English Dictionary* of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Regression Testing

Regression testing (rarely, *non-regression testing*) is re-running functional and non-functional tests to ensure that previously developed and tested software still performs as expected after a change. If not, that would be called a *regression*. Changes that may require regression testing include bug fixes, software enhancements, configuration changes, and even substitution of electronic components. As regression test suites tend to grow with each found defect, test automation is frequently involved. Sometimes a change impact analysis is performed to determine an appropriate subset of tests (*non-regression analysis*).

Background

As software is updated or changed, or reused on a modified target, emergence of new faults and/or re-emergence of old faults is quite common. Sometimes re-emergence occurs because a fix gets lost through poor revision control practices (or simple human error in revision control). Often, a fix for a problem will be "fragile" in that it fi ...
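A minimal sketch of an automated regression suite, using Python's standard `unittest` module, might look like the following. The function `parse_price` and the defect it is assumed to have once had (failing on surrounding whitespace) are hypothetical.

```python
import unittest


def parse_price(text: str) -> float:
    # Hypothetical function under test. An earlier version is assumed to
    # have failed on surrounding whitespace; strip() is the fix.
    return float(text.strip().lstrip("$"))


class PriceRegressionTests(unittest.TestCase):
    def test_plain_number(self) -> None:
        self.assertEqual(parse_price("4.50"), 4.5)

    def test_dollar_sign(self) -> None:
        self.assertEqual(parse_price("$4.50"), 4.5)

    def test_whitespace_regression(self) -> None:
        # Added when the whitespace defect was found; kept so that the
        # fault cannot silently re-emerge after later changes.
        self.assertEqual(parse_price("  $4.50 "), 4.5)


def run_suite() -> bool:
    # Re-run the whole suite after every change; True means no regression.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PriceRegressionTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Each defect that is found contributes one more test case, which is why such suites tend to grow and why running them automatically is common.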

Patch (computing)

A patch is a set of changes to a computer program or its supporting data designed to update, fix, or improve it. This includes fixing security vulnerabilities and other bugs, with such patches usually being called bugfixes or bug fixes. Patches are often written to improve the functionality, usability, or performance of a program. The majority of patches are provided by software vendors for operating system and application updates. Patches may be installed either under programmed control or by a human programmer using an editing tool or a debugger. They may be applied to program files on a storage device, or in computer memory. Patches may be permanent (until patched again) or temporary. Patching makes possible the modification of compiled and machine language object programs when the source code is unavailable. This demands a thorough understanding of the inner workings of the object code by the person creating the patch, which is difficult without close study of the sourc ...

Software Archaeology

Software archaeology or source code archeology is the study of poorly documented or undocumented legacy software implementations, as part of software maintenance. Software archaeology, named by analogy with archaeology, includes the reverse engineering of software modules, and the application of a variety of tools and processes for extracting and understanding program structure and recovering design information. Software archaeology may reveal dysfunctional team processes which have produced poorly designed or even unused software modules, and in some cases deliberately obfuscatory code may be found. The term has been in use for decades. Software archaeology has continued to be a topic of discussion at more recent software engineering conferences.

Techniques

A workshop on Software Archaeology at the 2001 OOPSLA (Object-Oriented Programming, Systems, Languages & Applications) conference identified the following software archaeology techniques, some of which are specific to object-or ...

Baby Duck Syndrome

In psychology and ethology, imprinting is any kind of phase-sensitive learning (learning occurring at a particular age or a particular life stage) that is rapid and apparently independent of the consequences of behaviour. It was first used to describe situations in which an animal or person learns the characteristics of some stimulus, which is therefore said to be "imprinted" onto the subject. Imprinting is hypothesized to have a critical period.

Filial imprinting

The best-known form of imprinting is *filial imprinting*, in which a young animal narrows its social preferences to an object (typically a parent) as a result of exposure to that object. It is most obvious in nidifugous birds, which imprint on their parents and then follow them around. It was first reported in domestic chickens, by Sir Thomas More in 1516 as described in his treatise *Utopia*, 350 years earlier than by the 19th-century amateur biologist Douglas Spalding. It was rediscovered by the early ethologist O ...