
Soft-in Soft-out Decoder

A soft-in soft-out (SISO) decoder is a type of soft-decision decoder used with error-correcting codes. "Soft-in" means that the incoming data may take on values other than 0 or 1 in order to indicate reliability. "Soft-out" means that each bit in the decoded output also takes on a value indicating its reliability. Typically, the soft output is used as the soft input to an outer decoder in a system using concatenated codes, or to modify the input to a further decoding iteration, as in the decoding of turbo codes. Examples include the BCJR algorithm and the soft output Viterbi algorithm.

See also:
* Decoding methods
* Error detection and correction
* Forward error correction
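To make the soft-in, soft-out idea concrete, here is a minimal sketch of a SISO decoder for a single parity-check code. The choice of code, the function name `spc_siso`, and the log-likelihood-ratio (LLR) convention (positive means "0 is more likely") are assumptions made for this illustration and do not come from the article.

```python
import math

def spc_siso(llr_in):
    """Soft-in soft-out decoding of one even-parity codeword.

    Assumed convention: llr_in[i] = log(P(bit i = 0) / P(bit i = 1)),
    so the sign is the hard decision and the magnitude is the reliability.
    Returns one output LLR per bit under the same convention.
    """
    llr_out = []
    for i, own in enumerate(llr_in):
        # Combine what all the *other* bits say about bit i via the parity check.
        prod = 1.0
        for j, other in enumerate(llr_in):
            if j != i:
                prod *= math.tanh(other / 2.0)
        # Keep the product strictly inside (-1, 1) so atanh stays finite.
        prod = max(min(prod, 1.0 - 1e-12), -(1.0 - 1e-12))
        extrinsic = 2.0 * math.atanh(prod)
        llr_out.append(own + extrinsic)
    return llr_out

# The middle bit is weakly "1", which would violate even parity.
soft_in = [2.0, -0.5, 3.0]
soft_out = spc_siso(soft_in)
```

In this sketch the two reliable neighbours outweigh the weak middle sample, so its output LLR changes sign. The output values are still soft, so they could be passed on to another decoder or to another iteration.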

Soft-decision Decoder

In information theory, a soft-decision decoder is a kind of decoding method: a class of algorithm used to decode data that has been encoded with an error-correcting code. Whereas a hard-decision decoder operates on data that take on a fixed set of possible values (typically 0 or 1 in a binary code), the inputs to a soft-decision decoder may take on a whole range of values in between. This extra information indicates the reliability of each input data point and is used to form better estimates of the original data. A soft-decision decoder will therefore typically perform better in the presence of corrupted data than its hard-decision counterpart. Soft-decision decoding is often used in Viterbi decoders and turbo code decoders.

See also:
* Forward error correction
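The difference between the two decoder types can be illustrated with a toy example. The sketch below assumes a length-3 repetition code and a mapping of bit 0 to +1 and bit 1 to -1; both the mapping and the function names are illustrative assumptions.

```python
def hard_decision_decode(samples):
    """Threshold every sample to a bit first, then take a majority vote."""
    bits = [1 if s < 0 else 0 for s in samples]
    return 1 if sum(bits) > len(bits) / 2 else 0

def soft_decision_decode(samples):
    """Add up the raw samples so that confident samples outweigh weak ones."""
    return 1 if sum(samples) < 0 else 0

# Bit 0 was sent as (+1, +1, +1); noise pushed two samples just below zero,
# while the third sample is still strongly positive.
received = [-0.1, -0.2, 1.5]

hard_result = hard_decision_decode(received)
soft_result = soft_decision_decode(received)
```

Here the hard-decision vote sees two "1" samples and decodes the wrong bit, while the soft-decision sum is dominated by the one reliable sample and recovers the transmitted 0.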

Error Correcting Code

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding is a technique used for controlling errors in data transmission over unreliable or noisy communication channels. The central idea is that the sender encodes the message in a redundant way, most often by using an error correction code, or error correcting code (ECC). The redundancy allows the receiver not only to detect errors that may occur anywhere in the message, but often to correct a limited number of errors. Therefore, a reverse channel to request re-transmission may not be needed. The cost is a fixed, higher forward channel bandwidth.

The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communication links or when transmitting to multiple receivers in m ...
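As an illustration of how redundancy lets the receiver correct an error without a reverse channel, here is a minimal sketch of the Hamming (7,4) code mentioned above. The bit ordering (parity bits at positions 1, 2 and 4) is one common convention and the function names are assumptions of this example.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(codeword):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome, read as a binary number, is the 1-based error position.
    position = s1 + 2 * s2 + 4 * s3
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

message = [1, 0, 1, 1]
sent = hamming74_encode(message)
corrupted = list(sent)
corrupted[4] ^= 1  # a single bit error introduced by the channel
recovered = hamming74_decode(corrupted)
```

Three parity bits are sent for every four data bits, which is the fixed bandwidth cost the text refers to. In exchange, any single bit error per codeword is corrected at the receiver.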

Concatenated Code

In coding theory, concatenated codes form a class of error-correcting codes that are derived by combining an inner code and an outer code. They were conceived in 1966 by Dave Forney as a solution to the problem of finding a code that has both exponentially decreasing error probability with increasing block length and polynomial-time decoding complexity. Concatenated codes became widely used in space communications in the 1970s.

Background
The field of channel coding is concerned with sending a stream of data at the highest possible rate over a given communications channel, and then decoding the original data reliably at the receiver, using encoding and decoding algorithms that are feasible to implement in a given technology. Shannon's channel coding theorem shows that over many common channels there exist channel coding schemes that are able to transmit data reliably at all rates R less than a certain threshold C, called the channel capacity of the given channel. In fact, the p ...

BCJR Algorithm

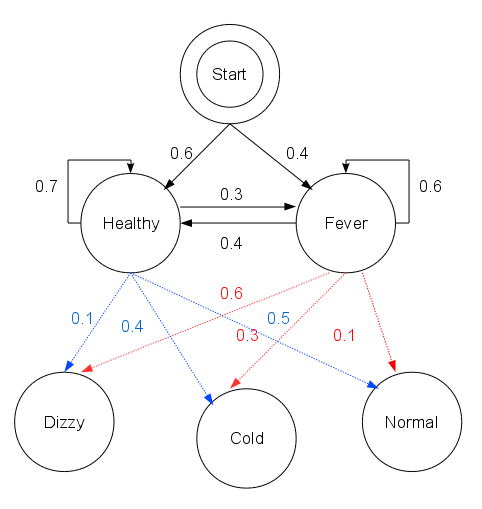

The Bahl-Cocke-Jelinek-Raviv (BCJR) algorithm is an algorithm for maximum a posteriori decoding of error-correcting codes defined on trellises (principally convolutional codes). It is named after its inventors: Bahl, Cocke, Jelinek and Raviv. The algorithm is critical to modern iteratively decoded error-correcting codes, including turbo codes and low-density parity-check codes.

Steps involved
Based on the trellis:
* Compute forward probabilities \alpha
* Compute backward probabilities \beta
* Compute smoothed probabilities based on other information (e.g. noise variance for AWGN, bit crossover probability for a binary symmetric channel)

Variations
* SBGT BCJR: Berrou, Glavieux and Thitimajshima simplification
* Log-MAP BCJR

Implementations
* Susaframework implements the BCJR algorithm for forward error correction codes and channel equalization in C++.

See also:
* Forward-backward algorithm
* Maximum a posteriori (MAP) estimation
* Hidden Markov model
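The forward, backward and smoothing steps of BCJR can be sketched on a deliberately tiny trellis. The setup below is an assumption chosen for brevity, not a practical code: a two-state accumulator (state s_t = s_{t-1} XOR u_t, starting in state 0) whose state bit is sent over a binary symmetric channel with crossover probability p, with equally likely input bits. The function name `bcjr_accumulator` is hypothetical.

```python
def bcjr_accumulator(received, p):
    """Return P(u_t = 1 | received) for every input bit u_t of the toy trellis."""
    n = len(received)
    states = (0, 1)

    def gamma(t, s_prev, s_next):
        # Branch metric: a priori 1/2 times the channel likelihood of received[t].
        # In this toy trellis every state pair is connected by exactly one branch.
        likelihood = (1.0 - p) if received[t] == s_next else p
        return 0.5 * likelihood

    # Forward probabilities alpha, normalised at each step for numerical stability.
    alpha = [[1.0, 0.0]]  # the encoder is assumed to start in state 0
    for t in range(n):
        row = [sum(alpha[t][sp] * gamma(t, sp, sn) for sp in states) for sn in states]
        total = sum(row)
        alpha.append([a / total for a in row])

    # Backward probabilities beta; the final state is unknown, so both are allowed.
    beta = [[1.0, 1.0] for _ in range(n + 1)]
    for t in range(n - 1, -1, -1):
        row = [sum(gamma(t, sp, sn) * beta[t + 1][sn] for sn in states) for sp in states]
        total = sum(row)
        beta[t] = [b / total for b in row]

    # Smoothed probabilities: sum alpha * gamma * beta over branches with each input.
    posteriors = []
    for t in range(n):
        mass = [0.0, 0.0]
        for sp in states:
            for sn in states:
                u = sp ^ sn  # input bit that causes the transition sp -> sn
                mass[u] += alpha[t][sp] * gamma(t, sp, sn) * beta[t + 1][sn]
        posteriors.append(mass[1] / (mass[0] + mass[1]))
    return posteriors

bit_posteriors = bcjr_accumulator([1, 1, 0], p=0.1)
```

The output is one probability per input bit rather than a hard decision, which is what makes the algorithm usable as a component of an iterative decoder. A practical implementation would typically work in the log domain, as the Log-MAP variation suggests.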

Soft Output Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states (called the Viterbi path) that results in a sequence of observed events. This is done especially in the context of Markov information sources and hidden Markov models (HMM).

The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal.

Hist ...
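The dynamic programming idea can be sketched for a small hidden Markov model. This is the plain (hard-output) Viterbi algorithm, not the soft output variant; the state names, observation names and all probabilities are made-up illustrative values.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden state sequence and its joint probability."""
    # best[t][s]: probability of the best path that ends in state s at time t.
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda r: best[-1][r] * trans_p[r][s])
            scores[s] = best[-1][prev] * trans_p[prev][s] * emit_p[s][obs]
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Trace the survivor path backwards from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for pointers in reversed(back[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[-1][last]

states = ["Healthy", "Fever"]
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
emit_p = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}

path, probability = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
# path == ["Healthy", "Healthy", "Fever"]
```

Only one survivor path per state is kept at each step, so the work grows linearly with the sequence length instead of exponentially. For long sequences, log probabilities are usually preferred to avoid underflow.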

Decoding Methods

In coding theory, decoding is the process of translating received messages into codewords of a given code. There are many common methods of mapping received messages to codewords. These are often used to recover messages sent over a noisy channel, such as a binary symmetric channel.

Notation
C \subset \mathbb{F}_2^n is considered a binary code with the length n; x,y shall be elements of \mathbb{F}_2^n; and d(x,y) is the (Hamming) distance between those elements.

Ideal observer decoding
Given the received message x \in \mathbb{F}_2^n, ideal observer decoding generates the codeword y \in C that maximises:

\mathbb{P}(y \mbox{ sent} \mid x \mbox{ received})

For example, a person can choose the codeword y that is most likely to have been sent, given that the message x was received.

Decoding conventions
The decoded codeword need not be unique: there may be more than one codeword with an equal likelihood of mutating into the received message. In such a case, the s ...
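A small sketch can make the notation and the tie problem concrete. It assumes a binary symmetric channel with crossover probability below 1/2 and equally likely codewords, in which case picking the most probable codeword is the same as picking the codeword closest in Hamming distance. The function names and the two tiny codes are illustrative.

```python
def hamming_distance(x, y):
    """d(x, y): number of positions in which two equal-length words differ."""
    return sum(a != b for a, b in zip(x, y))

def nearest_codewords(received, code):
    """Return every codeword at minimum distance from the received word.

    More than one result means the decoder faces a tie and needs a convention
    to resolve it.
    """
    distances = {c: hamming_distance(received, c) for c in code}
    d_min = min(distances.values())
    return [c for c in code if distances[c] == d_min]

repetition_code = ["000", "111"]
unique = nearest_codewords("010", repetition_code)

short_code = ["00", "11"]
tied = nearest_codewords("01", short_code)
```

With the length-3 repetition code the received word "010" has a single nearest codeword, while "01" is equally far from both codewords of the length-2 code.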

Error Detection And Correction

In information theory and coding theory with applications in computer science and telecommunications, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

Definitions
Error detection is the detection of errors caused by noise or other impairments during transmission from the transmitter to the receiver. Error correction is the detection of errors and reconstruction of the original, error-free data.

History
In classical antiquity, copyists of the Hebrew Bible were paid for their work according to the number of stichs (lines of verse). As the prose books of the Bible were hardly ever w ...

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding is a technique used for controlling errors in data transmission over unreliable or noisy communication channels. The central idea is that the sender encodes the message in a redundant way, most often by using an error correction code, or error correcting code (ECC). The redundancy allows the receiver not only to detect errors that may occur anywhere in the message, but often to correct a limited number of errors. Therefore, a reverse channel to request re-transmission may not be needed. The cost is a fixed, higher forward channel bandwidth.

The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. FEC can be applied in situations where re-transmissions are costly or impossible, such as one-way communication links or when transmitting to multiple receivers in m ...