SimHash

In computer science, SimHash is a technique for quickly estimating how similar two sets are. The algorithm is used by the Google Crawler to find near-duplicate pages. It was created by Moses Charikar. In 2021 Google announced its intent to also use the algorithm in its newly created FLoC (Federated Learning of Cohorts) system.

Evaluation and benchmarks

In 2006 Google conducted a large-scale evaluation comparing the performance of the MinHash and SimHash algorithms. In 2007 Google reported using SimHash for duplicate detection in web crawling, and using MinHash and locality-sensitive hashing (LSH) for Google News personalization.

See also

* MinHash
* w-shingling
* Count–min sketch
* Locality-sensitive hashing

External links

* Simhash Princeton Paper

Federated Learning Of Cohorts

Federated Learning of Cohorts (FLoC) is a type of web tracking. It groups people into "cohorts" based on their browsing history for the purpose of interest-based advertising. FLoC was being developed as a part of Google's Privacy Sandbox initiative, which includes several other advertising-related technologies with bird-themed names. Despite "federated learning" in the name, FLoC does not utilize any federated learning. Google began testing the technology in Chrome 89, released in March 2021, as a replacement for third-party cookies. By April 2021, every major browser aside from Google Chrome that is based on Google's open-source Chromium platform had declined to implement FLoC. The technology was criticized on privacy grounds by groups including the Electronic Frontier Foundation and DuckDuckGo, and has been described as anti-competitive; it generated an antitrust response in mult ...

Moses Charikar

Moses Samson Charikar is an Indian-American computer scientist who works as a professor at Stanford University. He was previously a professor at Princeton University. The topics of his research include approximation algorithms, streaming algorithms, and metric embeddings. He is known for the creation of the SimHash algorithm used by Google for near-duplicate detection. Charikar was born in Bombay, India, and competed for India at the 1990 and 1991 International Mathematical Olympiads, winning bronze and silver medals respectively. He did his undergraduate studies at the Indian Institute of Technology Bombay. In 2000 he completed a doctorate at Stanford University under the supervision of Rajeev Motwani; he joined the Princeton faculty in 2001. In 2012 he was awarded the Paris Kanellakis Award along with Andrei Broder and Piotr Indyk for their research on locality-sensitive hashing.

MinHash

In computer science and data mining, MinHash (or the min-wise independent permutations locality-sensitive hashing scheme) is a technique for quickly estimating how similar two sets are. The scheme was published by Andrei Broder at a 1997 conference and was initially used in the AltaVista search engine to detect duplicate web pages and eliminate them from search results. It has also been applied in large-scale clustering problems, such as clustering documents by the similarity of their sets of words.

Jaccard similarity and minimum hash values

The Jaccard similarity coefficient is a commonly used indicator of the similarity between two sets. Let $U$ be a set and $A$ and $B$ be subsets of $U$; then the Jaccard index is defined to be the ratio of the number of elements of their intersection to the number of elements of their union:

$$J(A,B) = \frac{|A \cap B|}{|A \cup B|}.$$

This value is 0 when the two sets are disjoint, 1 when they are equal, and strictly between 0 and 1 otherwise. Two sets are more similar (i.e. have ...
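The Jaccard index defined in this entry, and the idea of estimating it from minimum hash values, can be sketched in a few lines. The example below is a minimal illustration, assuming the standard MinHash property that, for a well-behaved hash function, the probability that two sets share the same minimum hash value equals their Jaccard index. The seeded SHA-1 hashing, the signature length of 128, and all function names are assumptions made for this sketch rather than details from the source.

```python
import hashlib


def jaccard(a, b) -> float:
    """Exact Jaccard index: |A intersect B| / |A union B|."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # convention assumed here for two empty sets
    return len(a & b) / len(a | b)


def _seeded_hash(item, seed: int) -> int:
    """One member of a family of hash functions, selected by `seed`."""
    data = f"{seed}:{item}".encode("utf-8")
    return int.from_bytes(hashlib.sha1(data).digest()[:8], "big")


def minhash_signature(items, num_hashes: int = 128):
    """Signature = minimum hash value of the set under each hash function.

    `items` must be non-empty, since min() of an empty set is undefined.
    """
    items = set(items)
    return [min(_seeded_hash(x, seed) for x in items) for seed in range(num_hashes)]


def estimate_jaccard(sig_a, sig_b) -> float:
    """Fraction of signature positions on which the two sets agree."""
    matches = sum(1 for x, y in zip(sig_a, sig_b) if x == y)
    return matches / len(sig_a)


if __name__ == "__main__":
    a = {"apple", "banana", "cherry", "date"}
    b = {"banana", "cherry", "date", "elderberry"}
    print("exact:   ", jaccard(a, b))
    print("estimate:", estimate_jaccard(minhash_signature(a), minhash_signature(b)))
```

The estimate is a sample average, so its accuracy improves as the number of hash functions grows; comparing two fixed-length signatures is much cheaper than intersecting two large sets.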

Locality-sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest-neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Locality-preserving hashin ...
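The bucketing idea in this entry can be illustrated with bit sampling, one simple locality-sensitive family for binary vectors under Hamming distance. This is a sketch under stated assumptions: the entry does not name a particular hash family, and the helper names `make_bit_sampler` and `build_buckets`, the seed parameter, and the toy data are all hypothetical. Each hash function reads a fixed random subset of positions, so vectors that differ in only a few positions are likely to agree on the sampled ones and land in the same bucket.

```python
import random
from collections import defaultdict


def make_bit_sampler(dim: int, k: int, seed: int = 0):
    """Return a hash function that reads k randomly chosen positions of a vector."""
    rng = random.Random(seed)
    positions = rng.sample(range(dim), k)

    def h(vector):
        # The bucket key is the tuple of sampled bits.
        return tuple(vector[i] for i in positions)

    return h


def build_buckets(vectors: dict, h) -> dict:
    """Group named vectors by bucket key."""
    buckets = defaultdict(list)
    for name, vec in vectors.items():
        buckets[h(vec)].append(name)
    return dict(buckets)


if __name__ == "__main__":
    data = {
        "a": (1, 0, 1, 1, 0, 0, 1, 0),
        "b": (1, 0, 1, 1, 0, 0, 1, 1),  # differs from "a" in one position
        "c": (0, 1, 0, 0, 1, 1, 0, 1),  # differs from "a" in every position
    }
    h = make_bit_sampler(dim=8, k=3, seed=42)
    print(build_buckets(data, h))
```

A nearest-neighbor query then only needs to compare against items in the query's own bucket. In practice several independent hash functions are usually combined so that a near neighbor missed by one table is likely to be caught by another.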

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines (such as algorithms, theory of computation, and information theory) to applied disciplines (including the design and implementation of hardware and software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ...

Similarity Measure

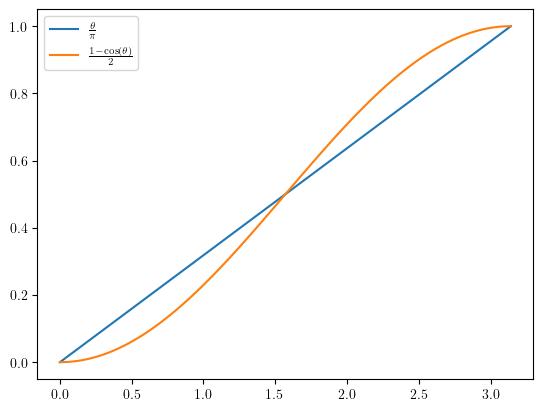

In statistics and related fields, a similarity measure or similarity function or similarity metric is a real-valued function that quantifies the similarity between two objects. Although no single definition of a similarity exists, usually such measures are in some sense the inverse of distance metrics: they take on large values for similar objects and either zero or a negative value for very dissimilar objects. Though, in more broad terms, a similarity function may also satisfy metric axioms. Cosine similarity is a commonly used similarity measure for real-valued vectors, used in (among other fields) information retrieval to score the similarity of documents in the vector space model. In machine learning, common kernel functions such as the RBF kernel can be viewed as similarity functions.

Use of different similarity measure formulas

Different types of similarity measures exist for various types of objects, depending on the objects being compared. For each type of object there ...
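Cosine similarity, mentioned in this entry as a common measure for real-valued vectors, is the dot product of two vectors divided by the product of their Euclidean norms. The following is a minimal sketch; the function name and the choice to raise an error for zero vectors are assumptions made for this example.

```python
import math


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two equal-length real-valued vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        # The angle is undefined when either vector has zero length.
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Toy term-count vectors for two documents over a shared vocabulary.
    doc_a = [2, 1, 0, 1]
    doc_b = [1, 1, 1, 0]
    print(cosine_similarity(doc_a, doc_b))
```

The result ranges from -1 (opposite directions) through 0 (orthogonal) to 1 (same direction), which matches the entry's description of large values for similar objects and zero or negative values for dissimilar ones.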

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, Information Retrieval: Algorithms and Heuristics, 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ...

Google

Google LLC is an American multinational corporation and technology company focusing on online advertising, search engine technology, cloud computing, computer software, quantum computing, e-commerce, consumer electronics, and artificial intelligence (AI). It has been referred to as "the most powerful company in the world" by the BBC and is one of the world's most valuable brands. Google's parent company, Alphabet Inc., is one of the five Big Tech companies alongside Amazon, Apple, Meta, and Microsoft. Google was founded on September 4, 1998, by American computer scientists Larry Page and Sergey Brin. Together, they own about 14% of its publicly listed shares and control 56% of its stockholder voting power through super-voting stock. The company went public via an initial public offering (IPO) in 2004. In 2015, Google was reorganized as a wholly owned subsidiary of Alphabet Inc. Go ...

Web Crawler

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (web spidering). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of In ...

Google News

Google News is a news aggregator service developed by Google. It presents a continuous flow of links to articles organized from thousands of publishers and magazines. Google News is available as an app on Android, iOS, and the Web. Google released a beta version in September 2002 and the official app in January 2006. The initial idea was developed by Krishna Bharat. The service has been described as the world's largest news aggregator. In 2020, Google announced they would be spending billion to work with publishers to create Showcases, "a new format for insightful feature stories".

History

As of 2014, Google News was watching more than 50,000 news sources worldwide. Versions for more than 60 regions in 28 languages were available in March 2012. The service is offered in the following 38 languages: Afrikaans, Arabic, Bengali, Bulgarian, Catalan, Cantonese, Chinese, Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Hungarian, Italian, Indonesian, Ja ...