
Sidi's Generalized Secant Method

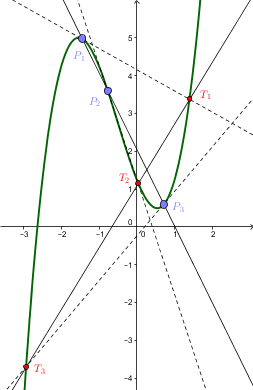

Sidi's generalized secant method is a root-finding algorithm, that is, a numerical method for solving equations of the form f(x)=0. The method was published by Avram Sidi (Sidi, Avram, "Generalization Of The Secant Method For Nonlinear Equations", Applied Mathematics E-notes 8 (2008), 115–123, http://www.math.nthu.edu.tw/~amen/2008/070227-1.pdf). It generalizes the secant method: like the secant method, it is iterative, requires one evaluation of f per iteration, and uses no derivatives of f. It can converge much faster, however, with an order that approaches 2, provided that f satisfies the regularity conditions described below.

Algorithm: Let \alpha denote the root of f, that is, f(\alpha)=0. Sidi's method is an iterative method which generates a sequence \{x_i\} of approximations of \alpha. Starting with ''k'' + 1 initial approximations x_1, \dots, x_{k+1}, the approximation x_{k+2} is calculated in the first iteration, the approximation x_{k+3} is calculated ...
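The excerpt above is cut off before the update rule is stated, so the following Python sketch rests on an assumption: that each step takes x_new = x_last - f(x_last) / p'(x_last), where p is the polynomial interpolating f at the last ''k'' + 1 approximations. The function name `sidi` and its parameters are hypothetical and chosen for illustration only.

```python
def sidi(f, initial, tol=1e-12, max_iter=50):
    """Sketch of a Sidi-type iteration (assumed update rule, see lead-in).

    `initial` holds k + 1 distinct starting approximations.
    """
    xs = list(initial)
    fs = [f(x) for x in xs]  # evaluations at the starting points
    for _ in range(max_iter):
        # Newton divided differences of the interpolant through the window.
        coef = list(fs)
        m = len(xs)
        for j in range(1, m):
            for i in range(m - 1, j - 1, -1):
                coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
        # Horner-style evaluation of p'(t) at the newest point t.
        t = xs[-1]
        p, dp = coef[-1], 0.0
        for j in range(m - 2, -1, -1):
            dp = p + (t - xs[j]) * dp
            p = coef[j] + (t - xs[j]) * p
        x_new = t - fs[-1] / dp
        f_new = f(x_new)  # the single new evaluation of f in this iteration
        if abs(f_new) <= tol or abs(x_new - t) <= tol:
            return x_new
        # Slide the window: drop the oldest point, append the newest.
        xs = xs[1:] + [x_new]
        fs = fs[1:] + [f_new]
    raise RuntimeError("no convergence within max_iter iterations")


if __name__ == "__main__":
    # k = 2: three starting points near the real root of x^3 - 2x - 5.
    print(sidi(lambda x: x**3 - 2 * x - 5, [2.0, 2.5, 3.0]))
```

With two starting points (''k'' = 1) the interpolant is a line and the sketch reduces to the ordinary secant method. The sketch does not guard against coincident points in the window, which would cause a division by zero.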

Root-finding Algorithm

In numerical analysis, a root-finding algorithm is an algorithm for finding zeros, also called "roots", of continuous functions. A zero of a function f is a number x such that f(x)=0. Since the zeros of a function generally cannot be computed exactly or expressed in closed form, root-finding algorithms provide approximations to zeros. For functions from the real numbers to the real numbers or from the complex numbers to the complex numbers, these are expressed either as floating-point numbers without error bounds or as floating-point values together with error bounds. The latter, approximations with error bounds, are equivalent to small isolating intervals for real roots or disks for complex roots. Solving an equation f(x)=g(x) is the same as finding the roots of the function h(x)=f(x)-g(x). Thus root-finding algorithms can be used to solve any equation of continuous functions. However, most root-finding algorithms do not guarantee that they will find all roots of a function, and if such an algorithm does not f ...

Derivative-free Optimization

Derivative-free optimization (sometimes referred to as blackbox optimization) is a discipline in mathematical optimization that does not use derivative information in the classical sense to find optimal solutions: sometimes information about the derivative of the objective function ''f'' is unavailable, unreliable or impractical to obtain. For example, ''f'' might be non-smooth, or time-consuming to evaluate, or in some way noisy, so that methods that rely on derivatives or approximate them via finite differences are of little use. The problem of finding optimal points in such situations is referred to as derivative-free optimization; algorithms that do not use derivatives or finite differences are called derivative-free algorithms.

Introduction: The problem to be solved is to numerically optimize an objective function f\colon A\to\mathbb{R} for some set A (usually A\subset\mathbb{R}^n), i.e. find x_0\in A such that, without loss of generality, f(x_0)\leq f(x) for all x\in A. When applicable, ...

Cubic Function

In mathematics, a cubic function is a function of the form f(x)=ax^3+bx^2+cx+d, that is, a polynomial function of degree three. In many texts, the ''coefficients'' a, b, c, and d are supposed to be real numbers, and the function is considered as a real function that maps real numbers to real numbers or as a complex function that maps complex numbers to complex numbers. In other cases, the coefficients may be complex numbers, and the function is a complex function that has the set of the complex numbers as its codomain, even when the domain is restricted to the real numbers. Setting f(x)=0 produces a cubic equation of the form ax^3+bx^2+cx+d=0, whose solutions are called roots of the function. The derivative of a cubic function is a quadratic function. A cubic function with real coefficients has either one or three real roots (which may not be distinct); all odd-degree polynomials with real coefficients have at least one real root. The graph of a cubic function always has a single ...

Newton's Method

In numerical analysis, the Newton–Raphson method, also known simply as Newton's method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a real-valued function f, its derivative f', and an initial guess x_0 for a root of f. If f satisfies certain assumptions and the initial guess is close, then x_1 = x_0 - \frac{f(x_0)}{f'(x_0)} is a better approximation of the root than x_0. Geometrically, (x_1, 0) is the x-intercept of the tangent of the graph of f at (x_0, f(x_0)): that is, the improved guess, x_1, is the unique root of the linear approximation of f at the initial guess, x_0. The process is repeated as x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} until a sufficiently precise value is reached. The number of correct digits roughly doubles with each step. This algorithm is first in the class of Householder's methods, and was succeeded by Halley's method. The method can also be extended t ...
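The repeated update x_{n+1} = x_n - f(x_n)/f'(x_n) can be sketched in a few lines of Python. The function name `newton`, the tolerance and the iteration cap are illustrative assumptions, not part of the description above.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Minimal Newton-Raphson sketch: iterate x <- x - f(x)/f'(x)."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) <= tol:  # residual small enough: accept x
            return x
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished at x = %r" % x)
        x = x - fx / dfx  # root of the tangent line at (x, f(x))
    raise RuntimeError("no convergence within max_iter iterations")


if __name__ == "__main__":
    # Approximate sqrt(2) as the positive root of x^2 - 2.
    print(newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0))
```

The stopping test here uses the residual |f(x)|; a test on the step size |x_{n+1} - x_n| is an equally common choice.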

Descartes's Rule Of Signs

In mathematics, Descartes' rule of signs, described by René Descartes in his ''La Géométrie'', counts the roots of a polynomial by examining sign changes in its coefficients. The number of positive real roots is at most the number of sign changes in the sequence of the polynomial's coefficients (omitting zero coefficients), and the difference between the root count and the sign change count is always even. In particular, when the number of sign changes is zero or one, then there are exactly zero or one positive roots. A linear fractional transformation of the variable makes it possible to use the rule of signs to count roots in any interval. This is the basic idea of Budan's theorem and the Budan–Fourier theorem. Repeated division of an interval in two results in a set of disjoint intervals, each containing one root, and together listing all the roots. This approach is used in the fastest algorithms today for computer computation of real roots of polynomials (see real-root is ...
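Counting sign changes is simple enough to sketch directly. The helper name `sign_changes` is hypothetical, and the coefficients are assumed to be real numbers listed from the highest power to the lowest.

```python
def sign_changes(coeffs):
    """Count sign changes in a coefficient sequence, skipping zeros."""
    nonzero = [c for c in coeffs if c != 0]
    return sum(1 for a, b in zip(nonzero, nonzero[1:]) if (a > 0) != (b > 0))


if __name__ == "__main__":
    # x^3 + x^2 - x - 1 has coefficients 1, 1, -1, -1: one sign change,
    # so by the rule it has exactly one positive real root.
    print(sign_changes([1, 1, -1, -1]))
```

The count is only an upper bound in general: the number of positive roots (with multiplicity) may fall short of it by an even number.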

Limit Of A Sequence

As the positive integer n becomes larger and larger, the value n \sin\left(\tfrac1n\right) becomes arbitrarily close to 1. We say that "the limit of the sequence n \sin\left(\tfrac1n\right) equals 1." In mathematics, the limit of a sequence is the value that the terms of a sequence "tend to", and is often denoted using the \lim symbol (e.g., \lim_{n\to\infty} a_n; see Courant (1961), p. 29). If such a limit exists and is finite, the sequence is called convergent. A sequence that does not converge is said to be divergent. The limit of a sequence is said to be the fundamental notion on which the whole of mathematical analysis ultimately rests. Limits can be defined in any metric or topological space, but are usually first encountered in the real numbers.

History: The Greek philosopher Zeno of Elea is famous for formulating paradoxes that involve limiting processes. Leucippus, Democritus, Antiphon, Eudoxus, a ...
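The behaviour of the example sequence can be checked numerically. The sketch below only illustrates the trend and proves nothing; the function name `term` is an assumption made for this example.

```python
import math


def term(n):
    """n-th term of the example sequence n * sin(1/n)."""
    return n * math.sin(1.0 / n)


if __name__ == "__main__":
    # The distance to 1 shrinks as n grows.
    for n in (1, 10, 100, 1000, 10**6):
        print(n, term(n), abs(term(n) - 1.0))
```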

Open Interval

In mathematics, a real interval is the set of all real numbers lying between two fixed endpoints with no "gaps". Each endpoint is either a real number or positive or negative infinity, indicating that the interval extends without a bound. A real interval can contain neither endpoint, either endpoint, or both endpoints, excluding any endpoint which is infinite. For example, the set of real numbers consisting of 0, 1, and all numbers in between is an interval, denoted [0, 1] and called the unit interval; the set of all positive real numbers is an interval, denoted (0, \infty); the set of all real numbers is an interval, denoted (-\infty, \infty); and any single real number a is an interval, denoted [a, a]. Intervals are ubiquitous in mathematical analysis. For example, they occur implicitly in the epsilon-delta definition of continuity; the intermediate value theorem asserts that the image of an interval by a continuous function is an interval; integrals of real functions are defined over an int ...

Smooth Function

In mathematical analysis, the smoothness of a function is a property measured by the number of continuous derivatives (''differentiability class'') it has over its domain. A function of class C^k is a function of smoothness at least k; that is, a function of class C^k is a function that has a kth derivative that is continuous in its domain. A function of class C^\infty or C^\infty-function (pronounced C-infinity function) is an infinitely differentiable function, that is, a function that has derivatives of all orders (this implies that all these derivatives are continuous). Generally, the term smooth function refers to a C^\infty-function. However, it may also mean "sufficiently differentiable" for the problem under consideration.

Differentiability classes: Differentiability class is a classification of functions according to the properties of their derivatives. It is a measure of the highest order of derivative that exists and is continuous for a function. Consider an ...

Newton Polynomial

In the mathematical field of numerical analysis, a Newton polynomial, named after its inventor Isaac Newton, is an interpolation polynomial for a given set of data points. The Newton polynomial is sometimes called Newton's divided differences interpolation polynomial because the coefficients of the polynomial are calculated using Newton's divided differences method.

Definition: Given a set of ''k'' + 1 data points (x_0, y_0),\ldots,(x_j, y_j),\ldots,(x_k, y_k) where no two x_j are the same, the Newton interpolation polynomial is a linear combination of Newton basis polynomials N(x) := \sum_{j=0}^{k} a_j n_j(x) with the Newton basis polynomials defined as n_j(x) := \prod_{i=0}^{j-1} (x - x_i) for ''j'' > 0 and n_0(x) \equiv 1. The coefficients are defined as a_j := [y_0,\ldots,y_j] where [y_0,\ldots,y_j] are the divided differences defined as [y_i] := y_i for i \in \{0,\ldots,k\} and [y_i,\ldots,y_{i+j}] := \frac{[y_{i+1},\ldots,y_{i+j}] - [y_i,\ldots,y_{i+j-1}]}{x_{i+j} - x_i} for i \in \{0,\ldots,k-j\}, j \in \{1,\ldots,k\}. Thus the Newton polynomi ...
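As a minimal sketch of the definition, the Python code below computes the coefficients a_j by the divided-difference recursion and evaluates N(x) with a Horner-like scheme. The function names `divided_differences` and `newton_eval` are hypothetical.

```python
def divided_differences(xs, ys):
    """Return the Newton coefficients a_0, ..., a_k for distinct nodes xs."""
    coef = list(ys)
    n = len(xs)
    for j in range(1, n):
        # Update in place from the back so lower-order values stay available.
        for i in range(n - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def newton_eval(xs, coef, x):
    """Evaluate N(x) = sum_j a_j * prod_{i<j} (x - x_i) by nested multiplication."""
    result = coef[-1]
    for j in range(len(coef) - 2, -1, -1):
        result = coef[j] + (x - xs[j]) * result
    return result


if __name__ == "__main__":
    # Three samples of x^2 + x + 1; the interpolant reproduces the polynomial.
    xs, ys = [0.0, 1.0, 2.0], [1.0, 3.0, 7.0]
    a = divided_differences(xs, ys)
    print(a, newton_eval(xs, a, 3.0))
```

Adding a further data point only appends one coefficient, which is a practical advantage of the Newton form.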

Degree Of A Polynomial

In mathematics, the degree of a polynomial is the highest of the degrees of the polynomial's monomials (individual terms) with non-zero coefficients. The degree of a term is the sum of the exponents of the variables that appear in it, and thus is a non-negative integer. For a univariate polynomial, the degree of the polynomial is simply the highest exponent occurring in the polynomial. The term order has been used as a synonym of ''degree'' but, nowadays, may refer to several other concepts (see Order of a polynomial). For example, the polynomial 7x^2y^3 + 4x - 9, which can also be written as 7x^2y^3 + 4x^1y^0 - 9x^0y^0, has three terms. The first term has a degree of 5 (the sum of the powers 2 and 3), the second term has a degree of 1, and the last term has a degree of 0. Therefore, the polynomial has a degree of 5, which is the highest degree of any term. To determine the degree of a polynomial that is not in standard form, such as (x+1)^2 - (x-1)^2, one c ...
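The worked example can be reproduced with a small Python sketch. It assumes a hypothetical representation in which a polynomial is a dictionary mapping exponent tuples to coefficients, so 7x^2y^3 is stored as the entry (2, 3) -> 7.

```python
def degree(poly):
    """Degree of a polynomial given as {exponent tuple: coefficient}."""
    degrees = [sum(exps) for exps, c in poly.items() if c != 0]
    if not degrees:
        raise ValueError("the zero polynomial has no non-zero terms")
    return max(degrees)


if __name__ == "__main__":
    # 7x^2y^3 + 4x - 9 from the text: term degrees 5, 1 and 0.
    p = {(2, 3): 7, (1, 0): 4, (0, 0): -9}
    print(degree(p))
```

Terms with a zero coefficient are skipped, matching the "non-zero coefficients" condition in the definition.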

Numerical Method

In numerical analysis, a numerical method is a mathematical tool designed to solve numerical problems. The implementation of a numerical method with an appropriate convergence check in a programming language is called a numerical algorithm.

Mathematical definition: Let F(x,y)=0 be a well-posed problem, i.e. F:X \times Y \rightarrow \mathbb{R} is a real or complex functional relationship, defined on the Cartesian product of an input data set X and an output data set Y, such that there exists a locally Lipschitz function g:X \rightarrow Y called the resolvent, which has the property that for every root (x,y) of F, y=g(x). We define a numerical method for the approximation of F(x,y)=0 as the sequence of problems \left\{ M_n \right\}_{n \in \mathbb{N}} = \left\{ F_n(x_n,y_n)=0 \right\}_{n \in \mathbb{N}}, with F_n:X_n ...