
Self-play (Reinforcement Learning Technique)

Self-play is a technique for improving the performance of reinforcement learning agents. Intuitively, agents learn to improve their performance by playing "against themselves".

Definition and motivation

In multi-agent reinforcement learning experiments, researchers try to optimize the performance of a learning agent on a given task, in cooperation or competition with one or more agents. These agents learn by trial and error, and researchers may choose to have the learning algorithm play the role of two or more of the different agents. When successfully executed, this technique has a double advantage:

1. It provides a straightforward way to determine the actions of the other agents, resulting in a meaningful challenge.
2. It increases the amount of experience that can be used to improve the policy, by a factor of two or more, since the viewpoints of each of the different agents can be used for learning.

Usage

Self-play is used by the AlphaZero program to improve its perform ...
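The two advantages described above can be illustrated with a minimal sketch. It assumes a toy game that is not part of the original text: a Nim variant in which players alternately remove one to three stones and whoever takes the last stone wins. A single tabular value function (the hypothetical `q` dictionary) chooses the moves for both seats, so the opponent is always the current policy, and every move from either seat produces an update. The function names, hyperparameters, and update rule are illustrative choices, not the method of any particular system.

```python
import random

def legal_actions(stones):
    """A player may remove 1, 2 or 3 stones, but never more than remain."""
    return range(1, min(3, stones) + 1)

def greedy_action(q, stones):
    """Best known move for whoever is to play with `stones` left."""
    return max(legal_actions(stones), key=lambda a: q.get((stones, a), 0.0))

def train_self_play(max_stones=10, episodes=20000, alpha=0.5, epsilon=0.2, seed=0):
    """Tabular self-play: one shared table plays both sides of every game."""
    rng = random.Random(seed)
    q = {}  # (stones, action) -> value from the mover's point of view
    for _ in range(episodes):
        stones = rng.randint(1, max_stones)  # random start to cover all states
        while stones > 0:
            # The same epsilon-greedy policy moves for both players.
            if rng.random() < epsilon:
                action = rng.choice(list(legal_actions(stones)))
            else:
                action = greedy_action(q, stones)
            remaining = stones - action
            if remaining == 0:
                target = 1.0  # the mover took the last stone and wins
            else:
                # The opponent moves next, so their best value is our loss.
                target = -max(q.get((remaining, a), 0.0)
                              for a in legal_actions(remaining))
            old = q.get((stones, action), 0.0)
            q[(stones, action)] = old + alpha * (target - old)
            stones = remaining  # hand the position to the "other" player
    return q

q_table = train_self_play()
best_from_five = greedy_action(q_table, 5)
```

In this toy game the learned policy should leave the opponent a multiple of four stones whenever it can. Because the table is shared, each game contributes experience from both players' viewpoints rather than from one.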

Reinforcement Learning

Reinforcement learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs from supervised learning in not needing labelled input/output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge). The environment is typically stated in the form of a Markov decision process (MDP), because many reinforcement learning algorithms for this context use dynamic programming techniques. The main difference between the classical dynamic programming methods and reinforcement learning algorithms is that the latter do not assume knowledge of an exact mathematica ...
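The balance between exploration and exploitation can be made concrete with a small sketch. It assumes a multi-armed bandit, which is a simplification of the MDP setting mentioned above (a single state, no transitions), and an epsilon-greedy rule, which is only one of several common strategies. The arm means, noise level, and the name `run_bandit` are hypothetical values chosen for illustration.

```python
import random

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a Gaussian bandit; returns estimates and pull counts."""
    rng = random.Random(seed)
    k = len(true_means)
    estimates = [0.0] * k  # running average reward per arm
    counts = [0] * k       # how often each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(k)  # explore: try a random arm
        else:
            arm = max(range(k), key=lambda i: estimates[i])  # exploit
        reward = rng.gauss(true_means[arm], 1.0)  # noisy reward, unit variance
        counts[arm] += 1
        # Incremental mean update: no labelled "correct action" is ever given.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = run_bandit([0.0, 1.0, 5.0])
most_pulled = counts.index(max(counts))
```

The agent is never told which arm is best. It only observes rewards, and the small exploration rate is what lets it discover the highest-paying arm before settling on it.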

Multi-agent Reinforcement Learning

Multi-agent reinforcement learning (MARL) is a sub-field of reinforcement learning. It focuses on studying the behavior of multiple learning agents that coexist in a shared environment. Each agent is motivated by its own rewards and takes actions to advance its own interests; in some environments these interests are opposed to the interests of other agents, resulting in complex group dynamics. Multi-agent reinforcement learning is closely related to game theory and especially repeated games, as well as multi-agent systems. Its study combines the pursuit of finding ideal algorithms that maximize rewards with a more sociological set of concepts. While research in single-agent reinforcement learning is concerned with finding the algorithm that earns the most points for one agent, research in multi-agent reinforcement learning evaluates and quantifies social metrics, such as cooperation, reciprocity, equity, social influence, language and discrimination.

Definition ...

AlphaZero

AlphaZero is a computer program developed by artificial intelligence research company DeepMind to master the games of chess, shogi and Go. This algorithm uses an approach similar to AlphaGo Zero. On December 5, 2017, the DeepMind team released a preprint introducing AlphaZero, which within 24 hours of training achieved a superhuman level of play in these three games by defeating world-champion programs Stockfish, elmo, and the three-day version of AlphaGo Zero. In each case it made use of custom tensor processing units (TPUs) that the Google programs were optimized to use. AlphaZero was trained solely via self-play using 5,000 first-generation TPUs to generate the games and 64 second-generation TPUs to train the neural networks, all in parallel, with no access to opening books or endgame tables. After four hours of training, DeepMind estimated AlphaZero was playing chess at a higher Elo rating than Stockfish 8; after nine hours of training, the algorithm defeated Stockf ...

Chess

Chess is a board game for two players, called White and Black, each controlling an army of chess pieces in their color, with the objective to checkmate the opponent's king. It is sometimes called international chess or Western chess to distinguish it from related games, such as xiangqi (Chinese chess) and shogi (Japanese chess). The recorded history of chess goes back at least to the emergence of a similar game, chaturanga, in seventh-century India. The rules of chess as we know them today emerged in Europe at the end of the 15th century, with standardization and universal acceptance by the end of the 19th century. Today, chess is one of the world's most popular games, played by millions of people worldwide. Chess is an abstract strategy game that involves no hidden information and no use of dice or cards. It is played on a chessboard with 64 squares arranged in an eight-by-eight grid. At the start, each player controls sixteen pieces: one king, one queen, two rooks, t ...

Shogi

Shogi, also known as Japanese chess, is a strategy board game for two players. It is one of the most popular board games in Japan and is in the same family of games as Western chess, chaturanga, xiangqi, Indian chess, and janggi. Shōgi means general's (shō) board game (gi). Western chess is sometimes called Seiyō Shōgi in Japan. Shogi was the earliest chess-related historical game to allow captured pieces to be returned to the board by the capturing player. This drop rule is speculated to have been invented in the 15th century and possibly connected to the practice of 15th-century mercenaries switching loyalties when captured instead of being killed. The earliest predecessor of the game, chaturanga, originated in India in the sixth century, and the game was likely transmitted to Japan via China or Korea sometime after the Nara period ("Shogi", Encyclopædia Britannica, 2002). Shogi in its present form was played as early as the 16th century, while ...

Go (game)

Go is an abstract strategy board game for two players in which the aim is to surround more territory than the opponent. The game was invented in China more than 2,500 years ago and is believed to be the oldest board game continuously played to the present day. A 2016 survey by the International Go Federation's 75 member nations found that there are over 46 million people worldwide who know how to play Go and over 20 million current players, the majority of whom live in East Asia. The playing pieces are called stones. One player uses the white stones and the other, black. The players take turns placing the stones on the vacant intersections (points) of a board. Once placed on the board, stones may not be moved, but stones are removed from the board if the stone (or group of stones) is surrounded by opposing stones on all orthogonally adjacent points, in which case the stone or group is captured. The game proceeds until neither player wishes to make another move. Wh ...
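The capture rule described above can be sketched as a flood fill over orthogonally connected stones. The board encoding (`"."` for an empty point, `"B"` and `"W"` for stones) and the function names are assumptions made for this illustration, and the sketch covers only the capture check, not the other rules of the game.

```python
def group_and_liberties(board, row, col):
    """Return the connected group containing (row, col) and its empty neighbours."""
    color = board[row][col]
    group, liberties = set(), set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in group:
            continue
        group.add((r, c))
        # Only orthogonally adjacent points count; diagonals do not.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < len(board) and 0 <= nc < len(board[0]):
                if board[nr][nc] == ".":
                    liberties.add((nr, nc))  # an adjacent vacant point
                elif board[nr][nc] == color:
                    stack.append((nr, nc))   # same colour: part of the group
    return group, liberties

def remove_if_captured(board, row, col):
    """Remove the group at (row, col) if it has no vacant neighbours; return stones removed."""
    group, liberties = group_and_liberties(board, row, col)
    if liberties:
        return 0
    for r, c in group:
        board[r][c] = "."
    return len(group)

# A lone white stone with black stones on all four adjacent points.
board = [list(line) for line in (
    ".B...",
    "BWB..",
    ".B...",
    ".....",
    ".....",
)]
captured = remove_if_captured(board, 1, 1)
```

Here the white stone at row 1, column 1 has no vacant adjacent point, so it is removed, while each surrounding black stone still touches empty points and stays on the board.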

Diplomacy (game)

Diplomacy is a strategic board game created by Allan B. Calhamer in 1954 and released commercially in the United States in 1959. Its main distinctions from most board wargames are its negotiation phases (players spend much of their time forming and betraying alliances with other players and forming beneficial strategies) and the absence of dice and other game elements that produce random effects (Parlett, David. The Oxford History of Board Games. Oxford University Press, UK, 1999. pp. 361–362). Set in Europe in the years leading to the Great War, Diplomacy is played by two to seven players, each controlling the armed forces of a major European power (or, with fewer players, multiple powers). Each player aims to move their few starting units and defeat those of others to win possession of a majority of strategic cities and provinces marked as "supply centers" on the map; these supply centers allow players who control them to produ ...

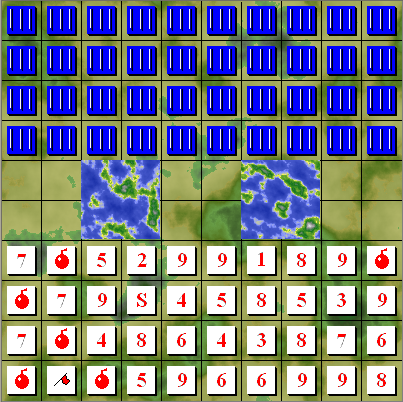

Stratego

Stratego is a strategy board game for two players on a board of 10×10 squares. Each player controls 40 pieces representing individual officer and soldier ranks in an army. The pieces have Napoleonic insignia. The objective of the game is to find and capture the opponent's Flag, or to capture so many enemy pieces that the opponent cannot make any further moves. Stratego has simple enough rules for young children to play but a depth of strategy that is also appealing to adults. The game is a slightly modified copy of an early 20th century French game named L'Attaque ("The Attack"). It has been in production in Europe since World War II and the United States since 1961. There are now two- and four-player versions, versions with 10, 30 or 40 pieces per player, and boards with smaller sizes (number of spaces). There are also variant pieces and different rulesets. The International Stratego Federation, the game's governing body, sponsors an annual Stratego World Championship ...

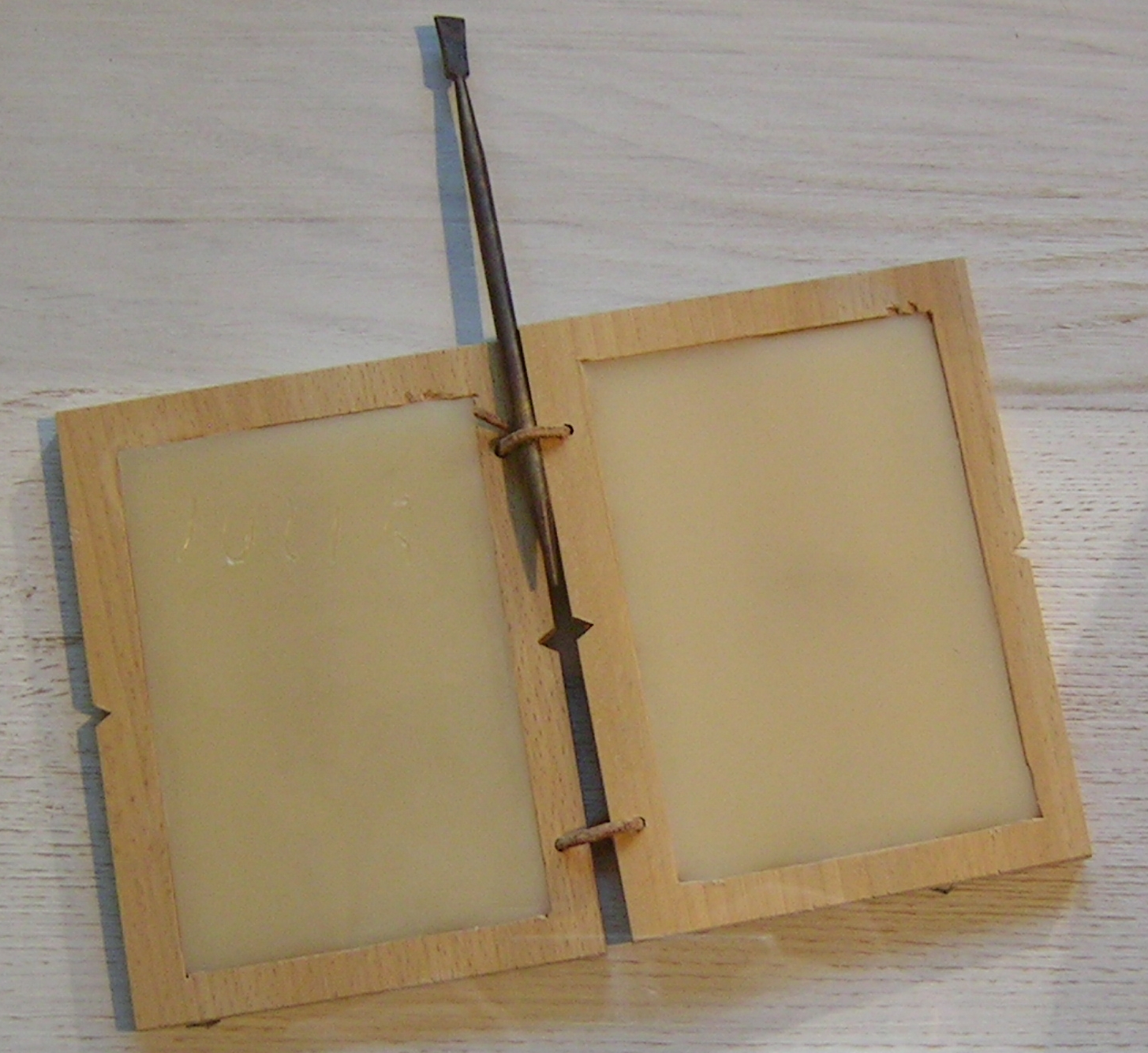

Tabula Rasa

Tabula rasa ("blank slate") is the theory that individuals are born without built-in mental content, and therefore all knowledge comes from experience or perception. Epistemological proponents of tabula rasa disagree with the doctrine of innatism, which holds that the mind is born already in possession of certain knowledge. Proponents of the tabula rasa theory also favour the "nurture" side of the nature versus nurture debate when it comes to aspects of one's personality, social and emotional behaviour, knowledge, and sapience.

Etymology

Tabula rasa is a Latin phrase often translated as clean slate in English and originates from the Roman tabula, a wax-covered tablet used for notes, which was blanked (rasa) by heating the wax and then smoothing it. This roughly equates to the English term "blank slate" (or, more literally, "erased slate") which refers to the emptiness of a slate prior to it being written on with chalk. Both may be renewed repe ...
