
Structured Prediction

Structured prediction or structured output learning is an umbrella term for supervised machine learning techniques that involve predicting structured objects, rather than discrete or real values. As with other commonly used supervised learning techniques, structured prediction models are typically trained on observed data: the predicted value is compared to the ground truth, and the comparison is used to adjust the model parameters. Because of the complexity of the model and the interrelations of the predicted variables, exact model training and inference are often computationally infeasible, so approximate inference and learning methods are used.

Applications

An example application is the problem of translating a natural language sentence into a syntactic representation such as a parse tree. This can be seen as a structured prediction problem in which the structured output domain is the set of all possible parse trees. Structured prediction is used in a wide variety ...

Umbrella Term

Hypernymy and hyponymy are the semantic relations between a generic term (''hypernym'') and a more specific term (''hyponym''). The hypernym is also called a ''supertype'', ''umbrella term'', or ''blanket term''. The hyponym names a subtype of the hypernym. The semantic field of the hyponym is included within that of the hypernym. For example, "pigeon", "crow", and "hen" are all hyponyms of "bird" and "animal"; "bird" and "animal" are both hypernyms of "pigeon", "crow", and "hen". A core concept of hyponymy is ''type of'', which is distinct from ''instance of''. For example, for the noun "city", a hyponym (naming a type of city) is "capital city" or "capital", whereas "Paris" and "London" are instances of a city, not types of city.

Discussion

In linguistics, semantics, general semantics, and ontologies, hyponymy shows the relationship between a generic term (hypernym) and a specific instance of it (hyponym ...

Verb

A verb is a word that generally conveys an action (''bring'', ''read'', ''walk'', ''run'', ''learn''), an occurrence (''happen'', ''become''), or a state of being (''be'', ''exist'', ''stand''). In the usual description of English, the basic form, with or without the particle ''to'', is the infinitive. In many languages, verbs are inflected (modified in form) to encode tense, aspect, mood, and voice. A verb may also agree with the person, gender or number of some of its arguments, such as its subject or object. In English, three tenses exist: present, to indicate that an action is being carried out; past, to indicate that an action has been done; and future, to indicate that an action will be done, expressed with the auxiliary verb ''will'' or ''shall''. For example:

* Lucy ''will go'' to school. ''(action, future)''
* Barack Obama ''became'' the President of the United States in 2009. ''(occurrence, past)''
* Mike Trout ''is'' a center fielder. ''(state of bein ...

Inductive Logic Programming

Inductive logic programming (ILP) is a subfield of symbolic artificial intelligence which uses logic programming as a uniform representation for examples, background knowledge and hypotheses. The term "''inductive''" here refers to philosophical (i.e. suggesting a theory to explain observed facts) rather than mathematical (i.e. proving a property for all members of a well-ordered set) induction. Given an encoding of the known background knowledge and a set of examples represented as a logical database of facts, an ILP system will derive a hypothesised logic program which entails all the positive and none of the negative examples.

* Schema: ''positive examples'' + ''negative examples'' + ''background knowledge'' ⇒ ''hypothesis''.

Inductive logic programming is particularly useful in bioinformatics and natural language processing.

History

Building on earlier work on inductive inference, Gordon Plotkin was the first to formalise induction in a clausal setting around 1970, ad ...
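The acceptance condition in the schema above (a hypothesis must entail every positive example and no negative example) can be sketched in Python. This is only an illustration of the check, not an ILP system: the `PARENT` facts, the `grandparent` candidate rule, the example sets, and the helper `is_consistent` are all hypothetical names and data invented for the sketch.

```python
# Background knowledge: hypothetical parent(X, Y) facts.
PARENT = {("ann", "bob"), ("bob", "cid"), ("bob", "dee")}
PEOPLE = {name for pair in PARENT for name in pair}


def grandparent(x, z):
    """Candidate hypothesis: grandparent(X, Z) :- parent(X, Y), parent(Y, Z)."""
    return any((x, y) in PARENT and (y, z) in PARENT for y in PEOPLE)


def is_consistent(hypothesis, positives, negatives):
    """True if the hypothesis covers all positives and none of the negatives."""
    covers_all_positives = all(hypothesis(*example) for example in positives)
    covers_no_negatives = not any(hypothesis(*example) for example in negatives)
    return covers_all_positives and covers_no_negatives


# Hypothetical labelled examples of the target relation.
POSITIVES = [("ann", "cid"), ("ann", "dee")]
NEGATIVES = [("ann", "bob"), ("bob", "cid"), ("cid", "ann")]

print(is_consistent(grandparent, POSITIVES, NEGATIVES))        # True
print(is_consistent(lambda x, z: True, POSITIVES, NEGATIVES))  # False: too general
```

A real ILP system searches a space of candidate clauses; the sketch only shows the test that each candidate has to pass.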

Random Field

In physics and mathematics, a random field is a random function over an arbitrary domain (usually a multi-dimensional space such as $\mathbb{R}^n$). That is, it is a function $f(x)$ that takes on a random value at each point $x \in \mathbb{R}^n$ (or some other domain). It is also sometimes thought of as a synonym for a stochastic process with some restriction on its index set. That is, by modern definitions, a random field is a generalization of a stochastic process where the underlying parameter need no longer be real or integer valued "time" but can instead take values that are multidimensional vectors or points on some manifold.

Formal definition

Given a probability space $(\Omega, \mathcal{F}, P)$, an ''X''-valued random field is a collection of ''X''-valued random variables indexed by elements in a topological space ''T''. That is, a random field ''F'' is a collection $\{F_t : t \in T\}$ where each $F_t$ is an ''X''-valued random variable.

Examples

In its discrete version, a random field is a list of ran ...

Bayesian Network

A Bayesian network (also known as a Bayes network, Bayes net, belief network, or decision network) is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). While it is one of several forms of causal notation, causal networks are special cases of Bayesian networks. Bayesian networks are well suited to taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms can perform inference and learning in Bayesian networks. Bayesian networks that model sequences of variables (''e.g.'' speech signals or protein sequences) are called dynamic Bayesian networks. Generalizations of Bayesian networks tha ...
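The disease and symptom example above can be made concrete with the smallest possible network, a single edge from a disease node to a symptom node. The sketch below computes the probability of the disease given that the symptom is observed by enumerating both cases. The function name `posterior_disease` and all the probabilities are assumptions chosen for illustration, not values from any real model.

```python
def posterior_disease(prior, p_symptom_given_disease, p_symptom_given_healthy):
    """P(disease | symptom) for a two-node network: disease -> symptom."""
    # Joint probability of (disease, symptom) and of (no disease, symptom).
    joint_disease = prior * p_symptom_given_disease
    joint_healthy = (1.0 - prior) * p_symptom_given_healthy
    # Normalise over the two ways the symptom can arise.
    return joint_disease / (joint_disease + joint_healthy)


# Hypothetical numbers: a rare disease with a fairly specific symptom.
posterior = posterior_disease(
    prior=0.01,
    p_symptom_given_disease=0.9,
    p_symptom_given_healthy=0.05,
)
print(round(posterior, 4))  # 0.1538
```

Even with a symptom that is 18 times more likely under the disease, the posterior stays near 15% because the prior is small. Larger networks need the efficient inference algorithms mentioned in the text rather than direct enumeration.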

Graphical Model

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics) and machine learning.

Types of graphical models

Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that they induce.

Undirected Graphical Model ...

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states, called the Viterbi path, that results in a sequence of observed events. This is done especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. His ...
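The dynamic programme described above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the function name `viterbi`, the dictionary-based model layout, and the two-state health model with its probabilities are all assumptions made for the example.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) of the most likely hidden-state sequence."""
    # best[t][s]: probability of the best path that ends in state s at time t.
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    # back[t][s]: predecessor of s on that best path.
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, prob = max(
                ((p, best[t - 1][p] * trans_p[p][s]) for p in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = prob * emit_p[s][observations[t]]
            back[t][s] = prev
    # Follow the back-pointers from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path


# Hypothetical toy model: hidden health state, observed symptoms.
STATES = ("Healthy", "Fever")
START_P = {"Healthy": 0.6, "Fever": 0.4}
TRANS_P = {
    "Healthy": {"Healthy": 0.7, "Fever": 0.3},
    "Fever": {"Healthy": 0.4, "Fever": 0.6},
}
EMIT_P = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}

prob, path = viterbi(("normal", "cold", "dizzy"), STATES, START_P, TRANS_P, EMIT_P)
print(path)  # ['Healthy', 'Healthy', 'Fever'], with probability about 0.01512
```

The sketch multiplies raw probabilities for readability. For long sequences, summing log-probabilities instead avoids numerical underflow.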

Conditional Random Field

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. The kind of graph used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions. Other examples where CRFs are used are: labeling or parsing of sequential data for natural language processing or biological sequences, part-of-speech tagging, shallow parsing, named entity recogn ...

Hidden Markov Model

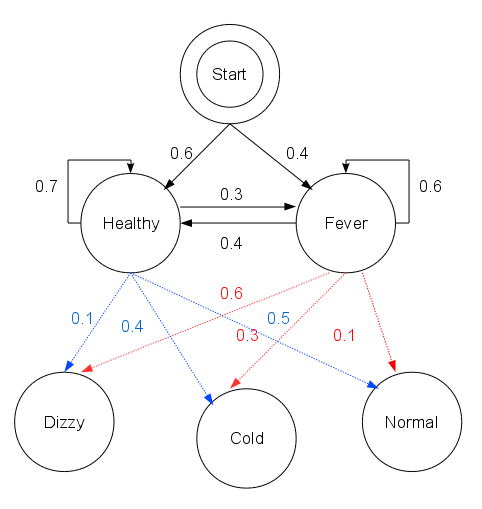

A hidden Markov model (HMM) is a Markov model in which the observations are dependent on a latent (or ''hidden'') Markov process (referred to as X). An HMM requires that there be an observable process Y whose outcomes depend on the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about the state of X by observing Y. By definition of being a Markov model, an HMM has an additional requirement that the outcome of Y at time $t = t_0$ must be "influenced" exclusively by the outcome of X at $t = t_0$ and that the outcomes of X and Y at $t < t_0$ must be conditionally independent of Y at $t = t_0$ given X at time $t = t_0$. Estimation of the parameters in an HMM can be performed using maximum likelihood estimation. For linear chain HMMs, the Baum–Welch algorithm can be used to estimate parameters. Hidden Markov models are known for their applications to thermodynamics, statistical mechanics, physics, chem ...
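The dependency structure described above can be seen by sampling from an HMM: each hidden state is drawn from the previous hidden state only, and each observation is drawn from the current hidden state only. The sketch below is illustrative. The function `sample_hmm`, the weather states, the activities, and every probability are hypothetical choices, not part of any particular model.

```python
import random


def sample_hmm(length, start_p, trans_p, emit_p, rng):
    """Draw `length` steps of a hidden sequence X and an observed sequence Y."""

    def draw(dist):
        # Sample one key of `dist` with probability proportional to its value.
        outcomes = list(dist)
        return rng.choices(outcomes, weights=[dist[o] for o in outcomes])[0]

    hidden, observed = [], []
    state = draw(start_p)
    for _ in range(length):
        hidden.append(state)
        observed.append(draw(emit_p[state]))  # Y_t depends only on X_t
        state = draw(trans_p[state])          # X_{t+1} depends only on X_t
    return hidden, observed


# Hypothetical model: hidden weather, observed activity.
START_P = {"Rainy": 0.6, "Sunny": 0.4}
TRANS_P = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
EMIT_P = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}

hidden, observed = sample_hmm(5, START_P, TRANS_P, EMIT_P, random.Random(0))
print(hidden)
print(observed)
```

In an inference setting only `observed` would be available, and the task is to reason about `hidden` or to estimate the three probability tables from data.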

Conditional Dependence

In probability theory, conditional dependence is a relationship between two or more events that are dependent when a third event occurs (Sebastian Thrun and Peter Norvig, Introduction to Artificial Intelligence, "Unit 3: Conditional Dependence"). For example, if A and B are two events that individually increase the probability of a third event C, and do not directly affect each other, then initially (when it has not been observed whether or not the event C occurs) $\operatorname{P}(A \mid B) = \operatorname{P}(A)$ and $\operatorname{P}(B \mid A) = \operatorname{P}(B)$ (A and B are independent). But suppose that now C is observed to occur. If event B occurs then the probability of occurrence of the event A will decrease because its positive relation to C is less necessary as an explanation for the occurrence of C (similarly, event A occurring will decrease the probability of occurrence of B). Hence, now the two events A and B are conditionally negatively dependent on each ...
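The effect described above can be checked numerically with a small enumeration. The sketch assumes a deliberately simple model that is not taken from the text: A and B are independent events with probability 1/2 each, and C occurs exactly when A or B occurs. The names `joint` and `prob` are hypothetical helpers.

```python
from fractions import Fraction
from itertools import product

# Assumed model: independent causes A and B, and C = (A or B).
P_A = Fraction(1, 2)
P_B = Fraction(1, 2)


def joint(a, b, c):
    """Joint probability of one truth assignment to (A, B, C)."""
    p = (P_A if a else 1 - P_A) * (P_B if b else 1 - P_B)
    return p if c == (a or b) else Fraction(0)


def prob(event, given=lambda a, b, c: True):
    """P(event | given), computed by enumerating all eight assignments."""
    worlds = list(product([False, True], repeat=3))
    numerator = sum(joint(*w) for w in worlds if event(*w) and given(*w))
    denominator = sum(joint(*w) for w in worlds if given(*w))
    return numerator / denominator


p_a = prob(lambda a, b, c: a)                                       # 1/2
p_a_given_b = prob(lambda a, b, c: a, lambda a, b, c: b)            # 1/2
p_a_given_c = prob(lambda a, b, c: a, lambda a, b, c: c)            # 2/3
p_a_given_b_c = prob(lambda a, b, c: a, lambda a, b, c: b and c)    # 1/2

print(p_a, p_a_given_b, p_a_given_c, p_a_given_b_c)
```

Before C is observed, learning B leaves the probability of A unchanged at 1/2. Once C is observed, the probability of A is 2/3, and additionally learning B lowers it back to 1/2, which is the negative conditional dependence the paragraph describes.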

Lexical Analysis

Lexical tokenization is the conversion of a text into (semantically or syntactically) meaningful ''lexical tokens'' belonging to categories defined by a "lexer" program. In the case of a natural language, those categories include nouns, verbs, adjectives, punctuation, etc. In the case of a programming language, the categories include identifiers, operators, grouping symbols, data types and language keywords. Lexical tokenization is related to the type of tokenization used in large language models (LLMs) but with two differences. First, lexical tokenization is usually based on a lexical grammar, whereas LLM tokenizers are usually probability-based. Second, LLM tokenizers perform a second step that converts the tokens into numerical values.

Rule-based programs

A rule-based program that performs lexical tokenization is called a ''tokenizer'' or ''scanner'', although ''scanner'' is also a term for the first stage of a lexer. A lexer forms the first phase of a compiler frontend in processing. ...
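A rule-based tokenizer of the kind described above can be sketched with Python's standard `re` module. The token categories, the keyword set, and the tiny expression language below are assumptions made for the example and do not correspond to any particular programming language.

```python
import re

# Hypothetical lexical grammar: one regular expression per token category.
KEYWORDS = {"if", "else", "while", "return"}
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("OPERATOR", r"[+\-*/=<>]"),
    ("GROUPING", r"[(){}]"),
    ("SKIP", r"\s+"),
    ("MISMATCH", r"."),
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)


def tokenize(text):
    """Convert text into a list of (category, lexeme) pairs."""
    tokens = []
    for match in TOKEN_RE.finditer(text):
        kind, value = match.lastgroup, match.group()
        if kind == "SKIP":
            continue  # whitespace separates tokens but is not one itself
        if kind == "MISMATCH":
            raise ValueError(f"unexpected character {value!r}")
        if kind == "IDENTIFIER" and value in KEYWORDS:
            kind = "KEYWORD"  # keywords are reserved identifiers
        tokens.append((kind, value))
    return tokens


print(tokenize("if (x1 < 42) return x1"))
# [('KEYWORD', 'if'), ('GROUPING', '('), ('IDENTIFIER', 'x1'),
#  ('OPERATOR', '<'), ('NUMBER', '42'), ('GROUPING', ')'),
#  ('KEYWORD', 'return'), ('IDENTIFIER', 'x1')]
```

The order of `TOKEN_SPEC` matters because the alternation tries patterns from left to right, so the catch-all `MISMATCH` rule has to come last.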