
Structural Estimation

Structural estimation is a technique for estimating the deep "structural" parameters of theoretical economic models. The term is inherited from the simultaneous equations model. Structural estimation makes extensive use of the equations of economic theory, and in this sense it is contrasted with "reduced form estimation" and other nonstructural estimations, which study the statistical relationships between observed variables while using economic theory only lightly (mostly to distinguish between exogenous and endogenous variables; so-called "descriptive models"). The idea of combining statistical and economic models dates to the mid-20th century and the work of the Cowles Commission. The difference between a structural parameter and a reduced-form parameter was formalized in the work of the Cowles Foundation. A structural parameter is also said to be "policy invariant", whereas the value of a reduced-form parameter can depend on exogenously determined parameters set by public po ...

Econometrics

Econometrics is the application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem Pesaran (1987), "Econometrics", ''The New Palgrave: A Dictionary of Economics'', v. 2, pp. 8–22; reprinted in J. Eatwell ''et al.'', eds. (1990), ''Econometrics: The New Palgrave'', pp. 1–34; 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran). More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference". An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships". Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today. A basic tool for econometrics is the multiple linear regression model. ''Econometric the ...

Dependent Variables

Dependent and independent variables are variables in mathematical modeling, statistical modeling and experimental sciences. Dependent variables receive this name because, in an experiment, their values are studied under the supposition or demand that they depend, by some law or rule (e.g., by a mathematical function), on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of some observed value of interest (e.g. human population size) to predict future values (the dependent variable). Of the two, it is always the dependent variable whose variation is being studied, by altering inputs, also known as regressors in a statistical context. In an experiment, any variable that can be attributed a value without attributing a value to any other variable is called an inde ...

Estimation Methods

Estimation (or estimating) is the process of finding an estimate or approximation, which is a value that is usable for some purpose even if input data may be incomplete, uncertain, or unstable. The value is nonetheless usable because it is derived from the best information available (C. Lon Enloe, Elizabeth Garnett, Jonathan Miles, ''Physical Science: What the Technology Professional Needs to Know'' (2000), p. 47). Typically, estimation involves "using the value of a statistic derived from a sample to estimate the value of a corresponding population parameter" (Raymond A. Kent, "Estimation", ''Data Construction and Data Analysis for Survey Research'' (2001), p. 157). The sample provides information that can be projected, through various formal or informal processes, to determine a range most likely to describe the missing information. An estimate that turns out to be incorrect will be an overestimate if the estimate exceeds the actual result and an underestimate if the estimate fal ...

Methodology Of Econometrics

The methodology of econometrics is the study of the range of differing approaches to undertaking econometric analysis. Commonly distinguished approaches that have been identified and studied include:
* the Cowles Commission approach
* the vector autoregression approach
* the LSE approach to econometrics - originated with Denis Sargan, now associated with David Hendry (and his general-to-specific modeling). Also associated with this approach is the work on integrated and cointegrated systems originating in the work of Engle and Granger and Johansen and Juselius (Juselius 1999)
* the use of calibration - Finn Kydland and Edward Prescott
* the ''experimentalist'' or difference in differences approach - Joshua Angrist and Jörn-Steffen Pischke.

In addition to these more clearly defined approaches, Hoover identifies a range of ''heterogeneous'' or ''textbook approaches'' that those less, or even un-, concerned with methodology, tend to follow.

Methods

Econometrics may use ...

Indirect Inference

Indirect inference is a simulation-based method for estimating the parameters of economic models. It is a computational method for determining acceptable macroeconomic model parameters in circumstances where the available data is too voluminous or unsuitable for formal modeling. Approximate Bayesian computation can be understood as a kind of Bayesian version of indirect inference (Drovandi, Christopher C. "ABC and indirect inference." Handbook of Approximate Bayesian Computation (2018): 179-209. https://arxiv.org/abs/1803.01999).

Core idea

Suppose we are given a dataset of real observations and a generative model with parameters \theta for which no likelihood function can easily be provided. We can then ask which choice of parameters \theta could have generated the observations. Since maximum likelihood estimation cannot be performed, indirect inference proposes to fit a (possibly misspecified) auxiliary model to the observations, which will result in a set of auxiliary mod ...
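The core idea can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and not part of the description above: the generative model is taken to be a moving-average process y_t = e_t + \theta e_{t-1}, the auxiliary statistic is the sample lag-1 autocorrelation, and the search over \theta is a plain grid search. The function names `simulate_ma1`, `lag1_autocorr` and `indirect_inference` are hypothetical.

```python
import random


def simulate_ma1(theta, n, seed):
    """Simulate n observations of the assumed model y_t = e_t + theta * e_{t-1}."""
    rng = random.Random(seed)
    shocks = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    return [shocks[t + 1] + theta * shocks[t] for t in range(n)]


def lag1_autocorr(series):
    """Auxiliary statistic (an assumption of this sketch): sample lag-1 autocorrelation."""
    n = len(series)
    mean = sum(series) / n
    dev = [v - mean for v in series]
    num = sum(dev[t] * dev[t - 1] for t in range(1, n))
    den = sum(d * d for d in dev)
    return num / den


def indirect_inference(observed, grid, n_sim, seed):
    """Pick the theta whose simulated auxiliary statistic is closest to the observed one."""
    target = lag1_autocorr(observed)
    best_theta, best_gap = None, float("inf")
    for theta in grid:
        # The same seed is reused for every theta, so candidates differ only through theta.
        simulated = simulate_ma1(theta, n_sim, seed)
        gap = (lag1_autocorr(simulated) - target) ** 2
        if gap < best_gap:
            best_theta, best_gap = theta, gap
    return best_theta


# Stand-in for real observations: data generated with theta = 0.5.
observed = simulate_ma1(0.5, 2000, seed=1)
grid = [i / 50 for i in range(0, 48)]  # candidate values 0.00, 0.02, ..., 0.94
theta_hat = indirect_inference(observed, grid, n_sim=10000, seed=2)
print("estimated theta:", theta_hat)
```

The likelihood of the generative model is never evaluated; only simulation and the auxiliary statistic are used. The grid is restricted to values below 1 because, for this particular assumed model, the lag-1 autocorrelation identifies \theta only on that range.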

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when all observed outcomes are assumed to have Normal distributions with the same variance. From the perspective of Bayesian in ...
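As a small illustration of first-order conditions that can be solved analytically, the sketch below assumes independent draws from a single Normal distribution (a simpler setting than the regression case mentioned above). The closed-form estimates are the sample mean and the variance with divisor n; the data values and function names are made up for the example.

```python
import math


def normal_log_likelihood(data, mu, sigma2):
    """Log-likelihood of i.i.d. Normal(mu, sigma2) observations."""
    n = len(data)
    squared_dev = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - squared_dev / (2 * sigma2)


def normal_mle(data):
    """Closed-form maximizers obtained from the first-order conditions."""
    n = len(data)
    mu_hat = sum(data) / n
    sigma2_hat = sum((x - mu_hat) ** 2 for x in data) / n  # divisor n, not n - 1
    return mu_hat, sigma2_hat


data = [2.1, 1.9, 3.2, 2.8, 2.5, 3.0]  # illustrative values only
mu_hat, sigma2_hat = normal_mle(data)
best = normal_log_likelihood(data, mu_hat, sigma2_hat)

# Moving away from the estimate in any direction should not raise the log-likelihood.
for d_mu, scale in [(0.1, 1.0), (-0.1, 1.0), (0.0, 1.2), (0.0, 0.8)]:
    nearby = normal_log_likelihood(data, mu_hat + d_mu, sigma2_hat * scale)
    print(d_mu, scale, nearby <= best)
```

Comparing the log-likelihood at nearby parameter values is only a numerical sanity check, not a proof that the closed form is the global maximizer.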

Regression Analysis

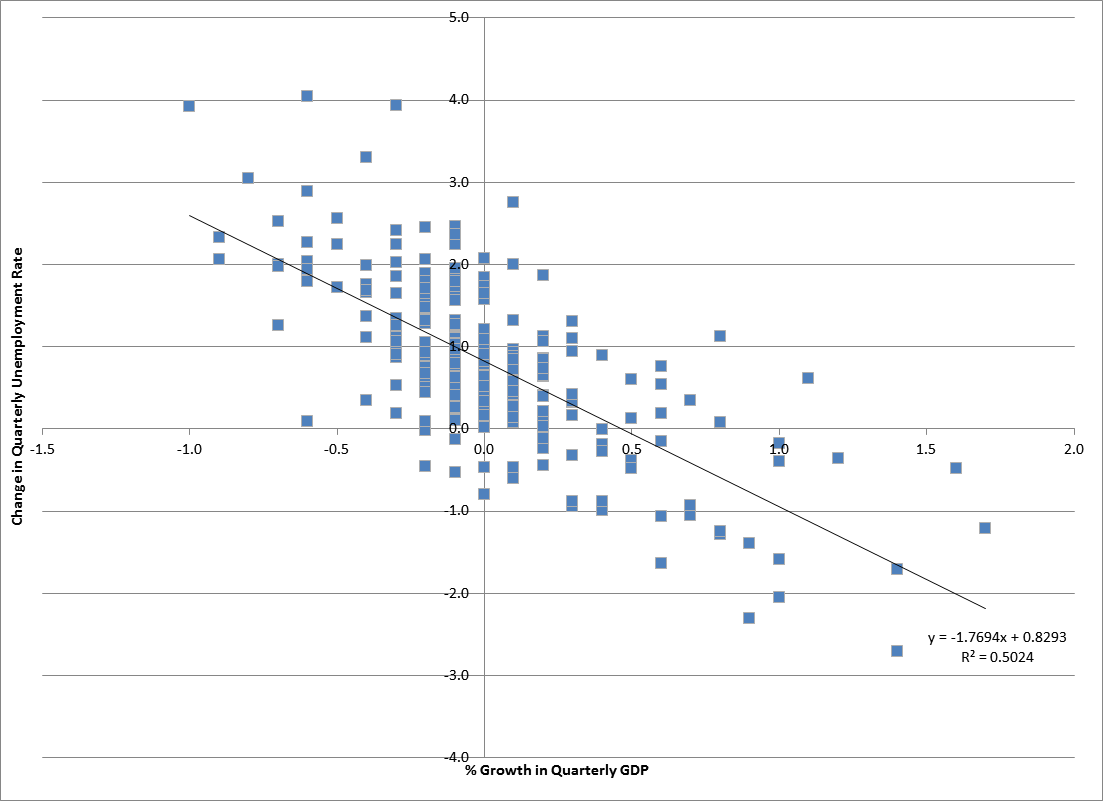

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
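For the single-predictor case, the ordinary least squares line has a well-known closed form: the slope is the sample covariance of x and y divided by the sample variance of x, and the intercept makes the line pass through the point of means. The sketch below assumes that one-variable setting; the data and the names `ols_line` and `sum_squared_residuals` are illustrative.

```python
def ols_line(xs, ys):
    """Return (intercept, slope) of the least-squares line for one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # the line passes through (mean_x, mean_y)
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """The criterion that ordinary least squares minimizes."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


xs = [1.0, 2.0, 3.0, 4.0, 5.0]   # illustrative predictor values
ys = [2.2, 4.1, 5.8, 8.3, 9.9]   # illustrative responses
intercept, slope = ols_line(xs, ys)
print("intercept:", intercept, "slope:", slope)
print("sum of squared residuals:", sum_squared_residuals(xs, ys, intercept, slope))
```

With more than one predictor the same criterion is minimized over a hyperplane, which is usually done with a linear-algebra routine and not with these scalar formulas.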

Simultaneous Equations

In mathematics, a set of simultaneous equations, also known as a system of equations or an equation system, is a finite set of equations for which common solutions are sought. An equation system is usually classified in the same manner as single equations, namely as a:
* System of linear equations,
* System of nonlinear equations,
* System of bilinear equations,
* System of polynomial equations (sometimes simply a polynomial system: a set of simultaneous equations given by polynomials in several variables over some field; a ''solution'' of a polynomial system is a set of values for the ...),
* System of differential equations, or a
* System of difference equations

See also
* Simultaneous equations model, a statistical model in the form of simultaneous linear equations
* Elementary algebra, for elementary methods

Lucas Critique

The Lucas critique, named for American economist Robert Lucas's work on macroeconomic policymaking, argues that it is naive to try to predict the effects of a change in economic policy entirely on the basis of relationships observed in historical data, especially highly aggregated historical data. More formally, it states that the decision rules of Keynesian models, such as the consumption function, cannot be considered structural in the sense of being invariant with respect to changes in government policy variables. The Lucas critique is significant in the history of economic thought as a representative of the paradigm shift that occurred in macroeconomic theory in the 1970s towards attempts at establishing micro-foundations.

Thesis

The basic idea pre-dates Lucas's contribution (related ideas are expressed as Campbell's law and Goodhart's law), but in a 1976 paper Lucas drove home the point that this simple notion invalidated policy advice based on conclusions drawn f ...

Parameter

A parameter, generally, is any characteristic that can help in defining or classifying a particular system (meaning an event, project, object, situation, etc.). That is, a parameter is an element of a system that is useful, or critical, when identifying the system, or when evaluating its performance, status, condition, etc. ''Parameter'' has more specific meanings within various disciplines, including mathematics, computer programming, engineering, statistics, logic, linguistics, and electronic musical composition. In addition to its technical uses, there are also extended uses, especially in non-scientific contexts, where it is used to mean defining characteristics or boundaries, as in the phrases 'test parameters' or 'game play parameters'.

Modelization

When a system is modeled by equations, the values that describe the system are called ''parameters''. For example, in mechanics, the masses, the dimensions and shapes (for solid bodies), the densities and the viscosit ...

Cowles Foundation

The Cowles Foundation for Research in Economics is an economic research institute at Yale University. It was created as the Cowles Commission for Research in Economics at Colorado Springs in 1932 by businessman and economist Alfred Cowles. In 1939, the Cowles Commission moved to the University of Chicago under Theodore O. Yntema. Jacob Marschak directed it from 1943 until 1948, when Tjalling C. Koopmans assumed leadership. Increasing opposition to the Cowles Commission from the department of economics of the University of Chicago during the 1950s impelled Koopmans to persuade the Cowles family to move the commission to Yale University in 1955 where it became the Cowles Foundation. As its motto ''Theory and Measurement'' implies, the Cowles Commission focuses on linking economic theory to mathematics and statistics. Its advances in economics involved the creation and integration of general equilibrium theory and econometrics. The thrust of the Cowles approach was a specific, pr ...