|

Statistical Semantics

In linguistics, statistical semantics applies the methods of statistics to the problem of determining the meaning of words or phrases, ideally through unsupervised learning, to a degree of precision at least sufficient for the purpose of information retrieval. History The term ''statistical semantics'' was first used by Warren Weaver in his well-known paper on machine translation. He argued that word-sense disambiguation for machine translation should be based on the co-occurrence frequency of the context words near a given target word. The underlying assumption that "a word is characterized by the company it keeps" was advocated by J. R. Firth. This assumption is known in linguistics as the distributional hypothesis. Emile Delavenay defined ''statistical semantics'' as the "statistical study of the meanings of words and their frequency and order of recurrence". " Furnas et al. 1983" is frequently cited as a foundational contribution to statistical semantics. An early success i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Linguistics

Linguistics is the scientific study of language. The areas of linguistic analysis are syntax (rules governing the structure of sentences), semantics (meaning), Morphology (linguistics), morphology (structure of words), phonetics (speech sounds and equivalent gestures in sign languages), phonology (the abstract sound system of a particular language, and analogous systems of sign languages), and pragmatics (how the context of use contributes to meaning). Subdisciplines such as biolinguistics (the study of the biological variables and evolution of language) and psycholinguistics (the study of psychological factors in human language) bridge many of these divisions. Linguistics encompasses Outline of linguistics, many branches and subfields that span both theoretical and practical applications. Theoretical linguistics is concerned with understanding the universal grammar, universal and Philosophy of language#Nature of language, fundamental nature of language and developing a general ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Text Mining

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), there are three perspectives of text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and interpretation of the output. 'High quality' in text mining usually ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistical Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language. Symbolic NLP (1950s – ea ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Semantic Analytics

Semantic analytics, also termed ''semantic relatedness'', is the use of ontologies to analyze content in web resources. This field of research combines text analytics and Semantic Web technologies like RDF. Semantic analytics measures the relatedness of different ontological concepts. Some academic research groups that have active project in this area include Kno.e.sis Center at Wright State University among others. History An important milestone in the beginning of semantic analytics occurred in 1996, although the historical progression of these algorithms is largely subjective. In his seminal study publication, Philip Resnik established that computers have the capacity to emulate human judgement. Spanning the publications of multiple journals, improvements to the accuracy of general semantic analytic computations all claimed to revolutionize the field. However, the lack of a standard terminology throughout the late 1990s was the cause of much miscommunication. This prompted Bu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Latent Semantic Indexing

Latent semantic analysis (LSA) is a technique in natural language processing, in particular distributional semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the documents and terms. LSA assumes that words that are close in meaning will occur in similar pieces of text (the distributional hypothesis). A matrix containing word counts per document (rows represent unique words and columns represent each document) is constructed from a large piece of text and a mathematical technique called singular value decomposition (SVD) is used to reduce the number of rows while preserving the similarity structure among columns. Documents are then compared by cosine similarity between any two columns. Values close to 1 represent very similar documents while values close to 0 represent very dissimilar documents. An information retrieval technique using latent semantic structure was patented in 1988 by Scott De ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Information Retrieval

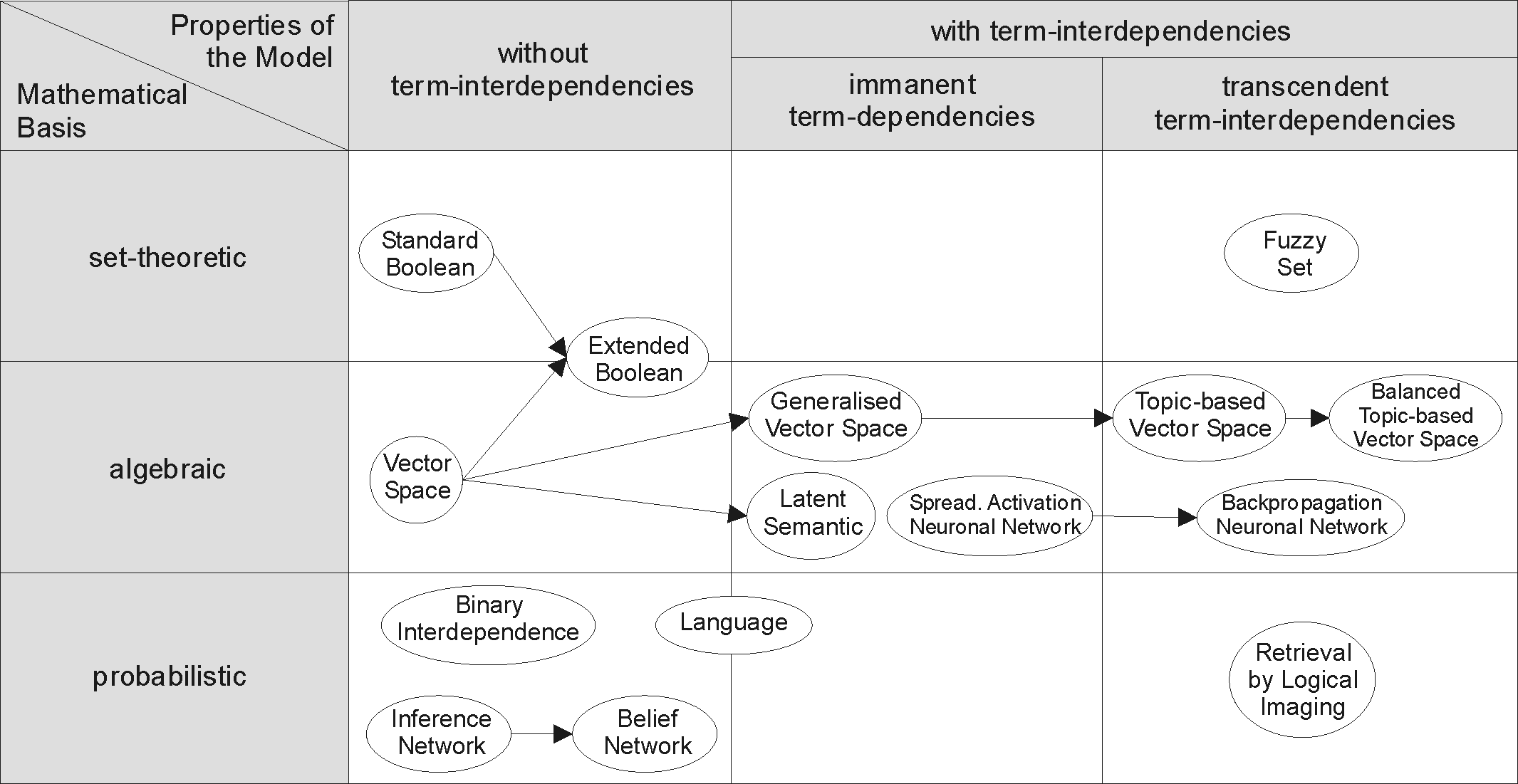

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an Information needs, information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text search, full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications. Overview An information retrieval process begins when a user enters a query into the sys ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Computational Linguistics

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others. Computational linguistics is closely related to mathematical linguistics. Origins The field overlapped with artificial intelligence since the efforts in the United States in the 1950s to use computers to automatically translate texts from foreign languages, particularly Russian scientific journals, into English. Since rule-based approaches were able to make arithmetic (systematic) calculations much faster and more accurately than humans, it was expected that lexicon, morphology, syntax and semantics can be learned using explicit rules, a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Co-occurrence

In linguistics, co-occurrence or cooccurrence is an above-chance frequency of ordered occurrence of two adjacent terms in a text corpus. Co-occurrence in this linguistic sense can be interpreted as an indicator of semantic proximity or an idiomatic expression. Corpus linguistics and its statistic analyses reveal patterns of co-occurrences within a language and enable to work out typical collocations for its lexical items. A ''co-occurrence restriction'' is identified when linguistic elements never occur together. Analysis of these restrictions can lead to discoveries about the structure and development of a language. Co-occurrence can be seen an extension of word counting in higher dimensions. Co-occurrence can be quantitatively described using measures like a massive correlation or mutual information. See also * Distributional hypothesis * Statistical semantics * Idiom (language structure) * Co-occurrence matrix * Co-occurrence networks * Similarity measure * Dice ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Text Corpus

In linguistics and natural language processing, a corpus (: corpora) or text corpus is a dataset, consisting of natively digital and older, digitalized, language resources, either annotated or unannotated. Annotated, they have been used in corpus linguistics for statistical statistical hypothesis testing, hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory. Overview A corpus may contain texts in a single language (''monolingual corpus'') or text data in multiple languages (''multilingual corpus''). In order to make the corpora more useful for doing linguistic research, they are often subjected to a process known as annotation. An example of annotating a corpus is part-of-speech tagging, or ''POS-tagging'', in which information about each word's part of speech (verb, noun, adjective, etc.) is added to the corpus in the form of ''tags''. Another example is indicating the Lemma (morphology), lemma (base) form of each word ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lexicon

A lexicon (plural: lexicons, rarely lexica) is the vocabulary of a language or branch of knowledge (such as nautical or medical). In linguistics, a lexicon is a language's inventory of lexemes. The word ''lexicon'' derives from Greek word (), neuter of () meaning 'of or for words'. Linguistic theories generally regard human languages as consisting of two parts: a lexicon, essentially a catalogue of a language's words (its wordstock); and a grammar, a system of rules which allow for the combination of those words into meaningful sentences. The lexicon is also thought to include bound morphemes, which cannot stand alone as words (such as most affixes). In some analyses, compound words and certain classes of idiomatic expressions, collocations and other phrasemes are also considered to be part of the lexicon. Dictionaries are lists of the lexicon, in alphabetical order, of a given language; usually, however, bound morphemes are not included. Size and organization Items ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |