
Software Brittleness

In computer programming and software engineering, software brittleness is the increased difficulty in fixing older software that may appear reliable but fails when presented with unusual data or data that is altered in a seemingly minor way. The phrase is derived from analogies to brittleness in metalworking. Causes: When software is new, it is very malleable; it can be formed to be whatever is wanted by the implementers. But as the software in a given project grows larger and develops a larger base of users with long experience with the software, it becomes less and less malleable. Like a metal that has been work-hardened, the software becomes a legacy system, brittle and unable to be easily maintained without fracturing the entire system. Brittleness in software can be caused by algorithms that do not work well for the full range of input data. Some examples follow: * A good example is an algorithm that allows a divide by zero to occur, or a cur ...
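The divide-by-zero case mentioned above can be illustrated with a minimal Python sketch. The function names `brittle_mean` and `robust_mean` are hypothetical and chosen only for this illustration; returning `None` for empty input is one possible policy, not the only reasonable one.

```python
def brittle_mean(values):
    # Works for typical input, but raises ZeroDivisionError
    # when `values` is empty: a seemingly minor change in the data.
    return sum(values) / len(values)


def robust_mean(values):
    # Handles the empty case explicitly instead of dividing by zero.
    # Returning None is an assumption made for this sketch.
    if not values:
        return None
    return sum(values) / len(values)
```

The first version appears reliable until an empty list arrives; the second states what happens for the full range of input.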

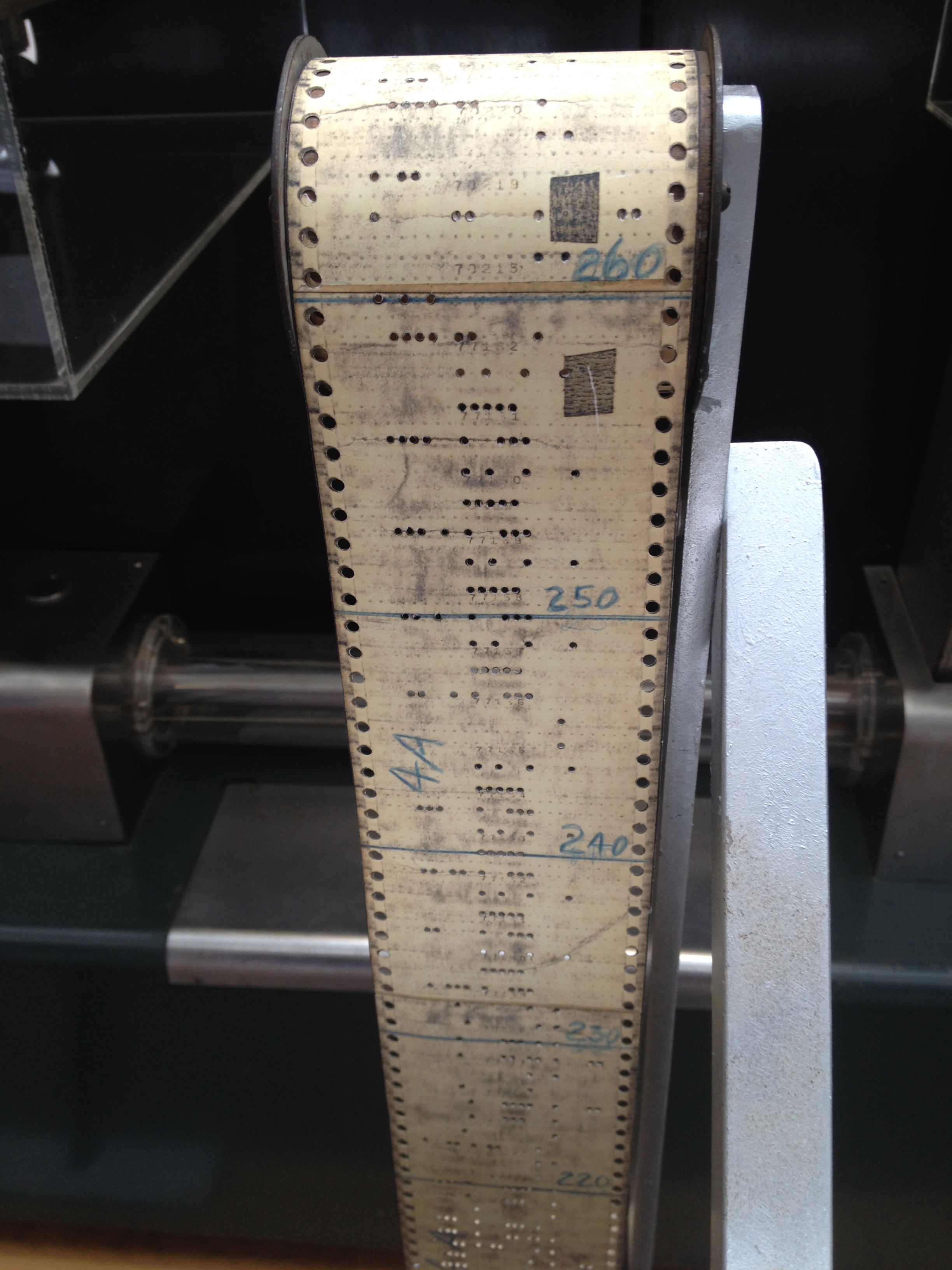

Computer Programming

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic. Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), imple ...

Display Device

A display device is an output device for presentation of information in visual or tactile form (the latter used for example in tactile electronic displays for blind people). When the input information that is supplied has an electrical signal, the display is called an *electronic display*. Common applications for *electronic visual displays* are television sets or computer monitors. Types of electronic displays in use: These are the technologies used to create the various displays in use today.

* Liquid-crystal display (LCD)
  * Light-emitting diode (LED) backlit LCD
  * Thin-film transistor (TFT) LCD
  * Quantum dot (QLED) display
* Light-emitting diode (LED) display
  * OLED display
  * AMOLED display
  * Super AMOLED display

Segment displays: Some displays can show only digits or alphanumeric characters. They are called segment displays, because they are composed of several segments that switch on and off to give the appearance of the desired glyph. The segments ...

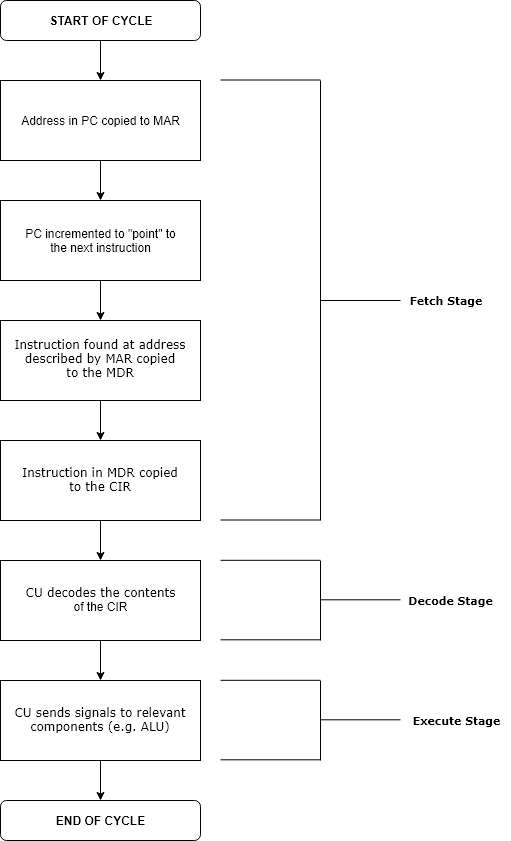

Run Time (program Lifecycle Phase)

Execution in computer and software engineering is the process by which a computer or virtual machine interprets and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out in order for a specific problem to be solved. Execution involves repeatedly following a "fetch–decode–execute" cycle for each instruction done by the control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions. Programs for a computer may be executed in a batch process without human interaction, or a user may type commands in an interactive session of an interpreter. In this case, the "commands" are simply program instructions, whose execution is chained together. The term run is used almost synonymously. A related meaning of both "to run" and "to execute" refers to the specific action of a user starting (or *launching* o ...
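The fetch–decode–execute cycle described above can be sketched with a toy accumulator machine in Python. The instruction set (`LOAD`, `ADD`, `HALT`) and the function name `run` are hypothetical, invented for this illustration, and do not correspond to any real processor.

```python
def run(program):
    """Execute a list of (opcode, operand) pairs on a toy accumulator machine."""
    acc = 0  # accumulator register
    pc = 0   # program counter
    while pc < len(program):
        instruction = program[pc]      # fetch the next instruction
        opcode, operand = instruction  # decode it into opcode and operand
        pc += 1
        # execute: produce the effect defined by the opcode's semantics
        if opcode == "LOAD":
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return acc
```

For example, `run([("LOAD", 2), ("ADD", 3), ("HALT", 0)])` loads 2, adds 3, and stops with 5 in the accumulator.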

Compiling

In computing, a compiler is a computer program that translates computer code written in one programming language (the *source* language) into another language (the *target* language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program (Compilers: Principles, Techniques, and Tools by Alfred V. Aho, Ravi Sethi, Jeffrey D. Ullman, Second Edition, 2007). There are many different types of compilers which produce output in different useful forms. A *cross-compiler* produces code for a different CPU or operating system than the one on which the cross-compiler itself runs. A *bootstrap compiler* is often a temporary compiler, used for compiling a more permanent or better optimised compiler for a language. Related software includes *decompilers*, programs that translate from low-level la ...

Coupling (computer Programming)

A coupling is a device used to connect two shafts together at their ends for the purpose of transmitting power. The primary purpose of couplings is to join two pieces of rotating equipment while permitting some degree of misalignment or end movement or both. In a more general context, a coupling can also be a mechanical device that serves to connect the ends of adjacent parts or objects. Couplings do not normally allow disconnection of shafts during operation; however, there are torque-limiting couplings which can slip or disconnect when some torque limit is exceeded. Selection, installation and maintenance of couplings can lead to reduced maintenance time and maintenance cost. Uses: Shaft couplings are used in machinery for several purposes. A primary function is to transfer power from one end to another (e.g., a motor transfers power to a pump through a coupling). Other common uses: * To alter the vibration characteristics of rotating units * To connect the driving and the driven ...

Dependency Hell

Dependency hell is a colloquial term for the frustration of some software users who have installed software packages which have dependencies on specific versions of other software packages. The dependency issue arises when several packages have dependencies on the same *shared* packages or libraries, but they depend on different and incompatible versions of the shared packages. If the shared package or library can only be installed in a single version, the user may need to address the problem by obtaining newer or older versions of the dependent packages. This, in turn, may break other dependencies and push the problem to another set of packages. Problems: Dependency hell takes several forms. Many dependencies: An application depends on many libraries, requiring lengthy downloads, large amounts of disk space, and being very portable (all libraries are already ported enabling the application itself to be ported easily). It can also be difficult to locate all the depende ...
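The shared-library conflict described above can be sketched in Python. The function `find_conflicts` and the package names in the usage note are hypothetical; the sketch also assumes that each package pins exactly one version of each shared library, which real package managers relax with version ranges.

```python
def find_conflicts(requirements):
    """Return shared libraries that installed packages need in different versions.

    `requirements` maps a package name to a dict of
    {shared library name: required version}.
    """
    needed = {}
    for package, deps in requirements.items():
        for lib, version in deps.items():
            # Group the requesting packages by library and by version.
            needed.setdefault(lib, {}).setdefault(version, []).append(package)
    # A library is in conflict when more than one version is requested.
    return {lib: versions for lib, versions in needed.items() if len(versions) > 1}
```

Given `{"app_a": {"libfoo": "1.0"}, "app_b": {"libfoo": "2.0", "libbar": "3.1"}}`, the function reports `libfoo` as conflicting, because `app_a` and `app_b` require different versions of it, while `libbar` is not reported.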

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

Regression Testing

Regression testing (rarely, *non-regression testing*) is re-running functional and non-functional tests to ensure that previously developed and tested software still performs as expected after a change. If not, that would be called a *regression*. Changes that may require regression testing include bug fixes, software enhancements, configuration changes, and even substitution of electronic components (hardware). As regression test suites tend to grow with each found defect, test automation is frequently involved. Sometimes a change impact analysis is performed to determine an appropriate subset of tests (*non-regression analysis*). Background: As software is updated or changed, or reused on a modified target, emergence of new faults and/or re-emergence of old faults is quite common. Sometimes re-emergence occurs because a fix gets lost through poor revision control practices (or simple human error in revision control). Often, a fix for a problem will be " fragil ...
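Re-running recorded cases after a change can be sketched in Python as follows. The function under test (`slugify`), the recorded cases, and the runner `run_regression_suite` are all hypothetical examples; real projects would normally use a test framework rather than a hand-written runner.

```python
def slugify(title):
    # Function under test: lower-case the title and join its words with hyphens.
    return "-".join(title.lower().split())


# Inputs paired with the outputs that were verified as correct earlier.
# Cases like the second one are typically added when a defect is found and fixed.
REGRESSION_CASES = [
    ("Hello World", "hello-world"),
    ("  Extra   Spaces ", "extra-spaces"),
    ("", ""),
]


def run_regression_suite(func, cases):
    """Re-run every recorded case and collect (input, expected, actual) failures."""
    failures = []
    for argument, expected in cases:
        actual = func(argument)
        if actual != expected:
            failures.append((argument, expected, actual))
    return failures
```

An empty result from `run_regression_suite(slugify, REGRESSION_CASES)` means no recorded behaviour has regressed; a changed implementation that mishandles repeated spaces would be caught by the second case.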

Patch (computing)

A patch is data that is intended to be used to modify an existing software resource such as a program or a file, often to fix bugs and security vulnerabilities. A patch may be created to improve functionality, usability, or performance. A patch is typically provided by a vendor for updating the software that they provide. A patch may be created manually, but commonly it is created via a tool that compares two versions of the resource and generates data that can be used to transform one to the other. Typically, a patch needs to be applied to the specific version of the resource it is intended to modify, although there are exceptions. Some patching tools can detect the version of the existing resource and apply the appropriate patch, even if it supports multiple versions. As more patches are released, their cumulative size can grow significantly, sometimes exceeding the size of the resource ...
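Creating a patch by comparing two versions of a text resource can be sketched with Python's standard `difflib` module. The helper name `make_patch` and the default file name `config.txt` are assumptions made for this illustration; the output is a unified diff, which is one common patch format among several.

```python
import difflib


def make_patch(old_text, new_text, name="config.txt"):
    """Return a unified diff that describes how to turn old_text into new_text."""
    old_lines = old_text.splitlines(keepends=True)
    new_lines = new_text.splitlines(keepends=True)
    diff = difflib.unified_diff(
        old_lines,
        new_lines,
        fromfile=f"a/{name}",  # label for the original version
        tofile=f"b/{name}",    # label for the modified version
    )
    return "".join(diff)
```

Calling `make_patch("timeout = 30\nretries = 3\n", "timeout = 60\nretries = 3\n")` yields a diff in which the old `timeout` line is marked with `-` and the new one with `+`, while the unchanged `retries` line appears only as context.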

Software Archaeology

Software archaeology or source code archeology is the study of poorly documented or undocumented legacy software implementations, as part of software maintenance. Software archaeology, named by analogy with archaeology, includes the reverse engineering of software modules, and the application of a variety of tools and processes for extracting and understanding program structure and recovering design information. Software archaeology may reveal dysfunctional team processes which have produced poorly designed or even unused software modules, and in some cases deliberately obfuscatory code may be found. The term has been in use for decades. Software archaeology has continued to be a topic of discussion at more recent software engineering conferences. Techniques: A workshop on Software Archaeology at the 2001 OOPSLA (Object-Oriented Programming, Systems, Languages & Applications) conference identified the following software archaeology techniques, some of which are specific to object- ...

Baby Duck Syndrome

In psychology and ethology, imprinting is a relatively rapid learning process that occurs during a particular developmental phase or stage of life and leads to corresponding behavioural adaptations. Originally, the term was used to describe situations in which an animal or human internalises (learns) the characteristics of a perceived object, independent of a theory of psychological development occurring in phases (critical period). Even ancient philosophers speculated about the material nature of the memory that would be necessary for the learning process, assuming a kind of tabula rasa in the brain, as if consisting of clay or wax and empty until an experience was mechanically "imprinted" on it. More recently, the founder of psychoanalysis developed the thesis that the brain can store experiences in its neural network through "a permanent change after an event", providing the first scientific explanation of how imprinting works. Filial imprinting: The best ...

Rewrite (programming)

A rewrite in computer programming is the act or result of re-implementing a large portion of existing functionality without re-use of its source code. When the rewrite uses no existing code at all, it is common to speak of a rewrite from scratch. Motivations: A piece of software is typically rewritten when one or more of the following apply:

* its source code is not available or is only available under an incompatible license
* its code cannot be adapted to a new target platform
* its existing code has become too difficult to handle and extend
* the task of debugging it seems too complicated
* the programmer finds it difficult to understand its source code
* developers learn new techniques or wish to do a big feature overhaul which requires much change
* the programming language of the source code has to be changed

Risks: Several software engineers, such as Joel Spolsky, have warned against total rewrites, especially under schedule constraints or competitive pressures. While developers ...