
Serial Concatenated Convolutional Codes

Serial concatenated convolutional codes (SCCC) are a class of forward error correction (FEC) codes highly suitable for turbo (iterative) decoding. Data to be transmitted over a noisy channel may first be encoded using an SCCC. Upon reception, the coding may be used to remove errors introduced during transmission. The decoding is performed by repeated decoding and (de)interleaving of the received symbols. SCCCs typically include an inner code, an outer code, and a linking interleaver. A distinguishing feature of SCCCs is the use of a recursive convolutional code as the inner code. The recursive inner code provides the 'interleaver gain' for the SCCC, which is the source of the excellent performance of these codes. The analysis of SCCCs was spawned in part by the earlier discovery of turbo codes in 1993. This analysis took place in the 1990s in a series of publications from NASA's Jet Propulsion Laboratory (JPL). The research offered SCCCs as a form of turbo-like ...

Forward Error Correction

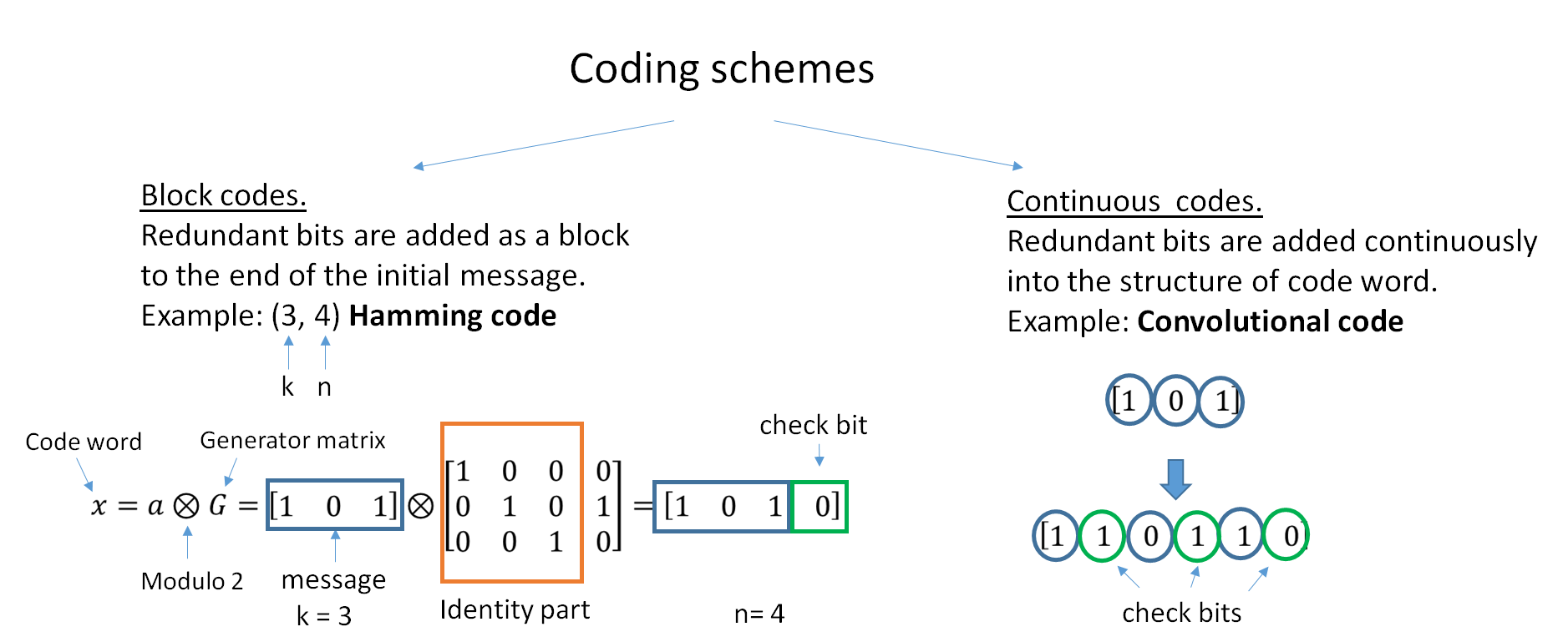

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a hi ...
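As an illustration of correcting an error without retransmission, here is a minimal sketch of the Hamming (7,4) code mentioned above. The function names and the bit layout (parity bits at positions 1, 2 and 4) are assumptions made for this example; other equivalent layouts exist.

```python
from typing import List, Sequence


def hamming74_encode(data: Sequence[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit codeword (parity at positions 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(received: Sequence[int]) -> List[int]:
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome, read as a binary number, is the 1-based error position
    # (0 means no single-bit error was detected).
    position = s1 + 2 * s2 + 4 * s3
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]
```

Three of the seven transmitted bits are redundancy, which is the fixed overhead the text refers to; in exchange, any single flipped bit in a codeword is repaired by the receiver on its own.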

Maximum A Posteriori

In Bayesian statistics, a maximum a posteriori probability (MAP) estimate is an estimate of an unknown quantity that equals the mode of the posterior distribution. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior distribution (that quantifies the additional information available through prior knowledge of a related event) over the quantity one wants to estimate. MAP estimation can therefore be seen as a regularization of maximum likelihood estimation. Description: Assume that we want to estimate an unobserved population parameter \theta on the basis of observations x. Let f be the sampling distribution of x, so that f(x \mid \theta) is the probability of x when the underlying population parameter is \theta. Then the function \theta \mapsto f(x \mid \theta) is known a ...
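The relationship between the two estimators can be written compactly. In the sketch below, g denotes the prior density over \theta; that symbol is an assumption introduced here, since the excerpt stops before naming it.

```latex
\hat{\theta}_{\mathrm{ML}}(x) = \arg\max_{\theta} f(x \mid \theta),
\qquad
\hat{\theta}_{\mathrm{MAP}}(x) = \arg\max_{\theta} f(x \mid \theta)\, g(\theta)
```

The normalizing constant of the posterior does not depend on \theta, so it can be dropped from the maximization. With a flat prior g, the two estimates coincide.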

Data

In the pursuit of knowledge, data is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted. A datum is an individual value in a collection of data. Data is usually organized into structures such as tables that provide additional context and meaning, and which may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data is commonly used in scientific research, economics, and in virtually every other form of human organizational activity. Examples of data sets include price indices (such as consumer price index), unemployment rates, literacy rates, and census data. In this context, data represents the raw facts and figures which can be used in such a manner in order to capture the useful information out of i ...

Turbo Equalizer

In digital communications, a turbo equalizer is a type of receiver used to receive a message corrupted by a communication channel with intersymbol interference (ISI). It approaches the performance of a maximum a posteriori (MAP) receiver via iterative message passing between a soft-in soft-out (SISO) equalizer and a SISO decoder. It is related to turbo codes in that a turbo equalizer may be considered a type of iterative decoder if the channel is viewed as a non-redundant convolutional code. The turbo equalizer is different from a classic turbo-like code, however, in that the 'channel code' adds no redundancy and therefore can only be used to remove non-Gaussian noise. History: Turbo codes were invented by Claude Berrou in 1990–1991. In 1993, turbo codes were introduced publicly via a paper listing authors Berrou, Glavieux, and Thitimajshima. In 1995 a novel extension of the turbo principle was applied to an equalizer by Douillard, Jézéquel, and Berrou. In parti ...

Repeat-accumulate Code

In computer science, repeat-accumulate codes (RA codes) are a low complexity class of error-correcting codes. They were devised so that their ensemble weight distributions are easy to derive. RA codes were introduced by Divsalar et al. In an RA code, an information block of length N is repeated q times, scrambled by an interleaver of size qN, and then encoded by a rate 1 accumulator. The accumulator can be viewed as a truncated rate 1 recursive convolutional encoder with transfer function 1/(1 + D), but Divsalar et al. prefer to think of it as a block code whose input block (z_1, \ldots, z_n) and output block (x_1, \ldots, x_n) are related by the formula x_1 = z_1 and x_i = x_{i-1} + z_i for i > 1. The encoding time for RA codes is linear and their rate is 1/q. They are nonsystemati ...
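The three encoding stages (repeat, interleave, accumulate) can be sketched in a few lines. This is an illustrative sketch only: the function name `ra_encode` and the representation of the interleaver as an explicit permutation list are assumptions, and addition is taken modulo 2.

```python
from typing import List, Sequence


def ra_encode(bits: Sequence[int], q: int, perm: Sequence[int]) -> List[int]:
    """Repeat each bit q times, permute, then accumulate (running XOR)."""
    # Stage 1: repetition, which fixes the code rate at 1/q.
    repeated = [b & 1 for b in bits for _ in range(q)]
    if sorted(perm) != list(range(len(repeated))):
        raise ValueError("perm must be a permutation of range(q * len(bits))")
    # Stage 2: interleaving. perm[i] is the index read into output slot i.
    scrambled = [repeated[p] for p in perm]
    # Stage 3: rate 1 accumulator; each output is the XOR of all inputs so far.
    out: List[int] = []
    acc = 0
    for z in scrambled:
        acc ^= z
        out.append(acc)
    return out
```

Each stage makes a single pass over the block, which is why the encoding time is linear in the block length.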

Low-density Parity-check Code

In information theory, a low-density parity-check (LDPC) code is a linear error correcting code, a method of transmitting a message over a noisy transmission channel. An LDPC code is constructed using a sparse Tanner graph (subclass of the bipartite graph). LDPC codes are capacity-approaching codes, which means that practical constructions exist that allow the noise threshold to be set very close to the theoretical maximum (the Shannon limit) for a symmetric memoryless channel. The noise threshold defines an upper bound for the channel noise, up to which the probability of lost information can be made as small as desired. Using iterative belief propagation techniques, LDPC codes can be decoded in time linear to their block length. LDPC codes are finding increasing use in applications requiring reliable and highly efficient information transfer over bandwidth-constrained or return-channel-constrained links in the presence of corrupting noise. Implementation of LDPC codes ha ...

BCJR Algorithm

The BCJR algorithm is an algorithm for maximum a posteriori decoding of error correcting codes defined on trellises (principally convolutional codes). The algorithm is named after its inventors: Bahl, Cocke, Jelinek and Raviv (L. Bahl, J. Cocke, F. Jelinek, and J. Raviv, "Optimal Decoding of Linear Codes for minimizing symbol error rate", IEEE Transactions on Information Theory, vol. IT-20(2), pp. 284-287, March 1974). This algorithm is critical to modern iteratively-decoded error-correcting codes, including turbo codes and low-density parity-check codes.

Steps involved, based on the trellis:

* Compute forward probabilities \alpha
* Compute backward probabilities \beta
* Compute smoothed probabilities based on other information (e.g. noise variance for AWGN, bit crossover probability for binary symmetric channel)

Variations: SBGT BCJR: Berrou, Glavieux and Thitimajshima simplification. Log-MAP BCJR: P. Robertson, P. Hoeher and E. Villebrun, "Optimal and Sub-Optimal Maximum A Poster ...
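The forward and backward passes listed above can be sketched on a generic state trellis. This is a simplified stand-in, not the full BCJR decoder for a convolutional code: it uses hidden-Markov-style tables (`start_p`, `trans_p`, `emit_p`, all hypothetical names) in place of code-trellis branch metrics, and it works in the probability domain rather than the log domain.

```python
from typing import Dict, List, Sequence


def forward_backward(
    obs: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> List[Dict[str, float]]:
    """Return the posterior probability of each state at each time step."""
    n = len(obs)
    if n == 0:
        return []
    # Forward pass: alpha[t][s] = P(obs[0..t], state at time t = s).
    alpha = [{s: start_p[s] * emit_p[s][obs[0]] for s in states}]
    for t in range(1, n):
        alpha.append({
            s: emit_p[s][obs[t]]
            * sum(alpha[t - 1][r] * trans_p[r][s] for r in states)
            for s in states
        })
    # Backward pass: beta[t][s] = P(obs[t+1..] | state at time t = s).
    beta = [{s: 1.0 for s in states} for _ in range(n)]
    for t in range(n - 2, -1, -1):
        for s in states:
            beta[t][s] = sum(
                trans_p[s][r] * emit_p[r][obs[t + 1]] * beta[t + 1][r]
                for r in states
            )
    # Smoothed posteriors: normalize alpha * beta at every time step.
    posteriors = []
    for t in range(n):
        joint = {s: alpha[t][s] * beta[t][s] for s in states}
        total = sum(joint.values())
        posteriors.append({s: joint[s] / total for s in states})
    return posteriors
```

Practical decoders usually run these recursions in the log domain (as in the Log-MAP variation) to avoid numerical underflow on long blocks.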

Interleaver

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a h ...

Soft-decision Decoding

In information theory, a soft-decision decoder is a kind of decoding method, one of a class of algorithms used to decode data that has been encoded with an error correcting code. Whereas a hard-decision decoder operates on data that take on a fixed set of possible values (typically 0 or 1 in a binary code), the inputs to a soft-decision decoder may take on a whole range of values in between. This extra information indicates the reliability of each input data point, and is used to form better estimates of the original data. Therefore, a soft-decision decoder will typically perform better in the presence of corrupted data than its hard-decision counterpart. Soft-decision decoders are often used in Viterbi decoders and turbo code decoders. See also: Forward error correction.
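The difference shows up even for a simple repetition code. The sketch below assumes a mapping in which bit 0 is sent as +1.0 and bit 1 as -1.0, and that each received sample is that value plus noise; the function names and this mapping are illustrative assumptions.

```python
from typing import Sequence


def hard_decode(samples: Sequence[float]) -> int:
    """Threshold each sample to a bit first, then take a majority vote."""
    ones = sum(1 for y in samples if y < 0)
    return 1 if ones > len(samples) / 2 else 0


def soft_decode(samples: Sequence[float]) -> int:
    """Add up the raw samples so that reliable samples carry more weight."""
    return 1 if sum(samples) < 0 else 0
```

For the received samples `[-0.1, -0.2, 1.5]`, the hard decoder sees two weak negative samples and votes for 1, while the soft decoder lets the single confident positive sample outweigh them and decides 0.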

Viterbi Algorithm

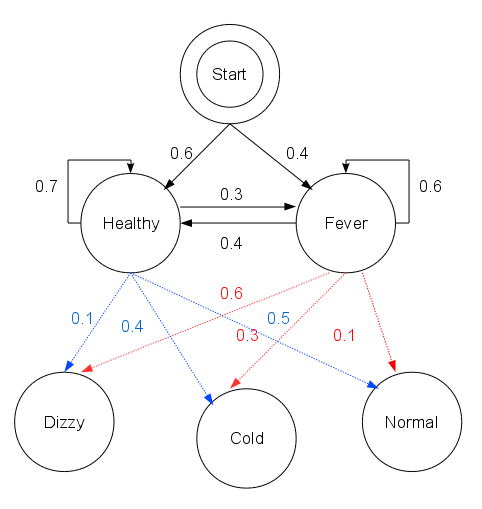

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states, called the Viterbi path, that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. History The Viterb ...
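A minimal sketch of the dynamic program for a small hidden Markov model follows. The table names (`start_p`, `trans_p`, `emit_p`) and the dictionary-based model layout are assumptions chosen for readability; scores are kept as log-probabilities to avoid underflow on long sequences.

```python
import math
from typing import Dict, List, Sequence


def viterbi(
    obs: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> List[str]:
    """Return the most likely hidden-state sequence for the observations."""
    if not obs:
        return []

    def log(p: float) -> float:
        return math.log(p) if p > 0 else float("-inf")

    # score[t][s]: best log-probability of any path that ends in s at time t.
    score = [{s: log(start_p[s]) + log(emit_p[s][obs[0]]) for s in states}]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(obs)):
        score.append({})
        back.append({})
        for s in states:
            # Keep only the best predecessor of s (the surviving path).
            prev = max(states, key=lambda r: score[t - 1][r] + log(trans_p[r][s]))
            score[t][s] = (
                score[t - 1][prev] + log(trans_p[prev][s]) + log(emit_p[s][obs[t]])
            )
            back[t][s] = prev
    # Trace the surviving path back from the best final state.
    path = [max(states, key=lambda s: score[-1][s])]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path
```

Because only one surviving path per state is kept at each step, the cost grows linearly with the sequence length and quadratically with the number of states, rather than exponentially with the sequence length.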

Convolutional Code

In telecommunication, a convolutional code is a type of error-correcting code that generates parity symbols via the sliding application of a boolean polynomial function to a data stream. The sliding application represents the 'convolution' of the encoder over the data, which gives rise to the term 'convolutional coding'. The sliding nature of the convolutional codes facilitates trellis decoding using a time-invariant trellis. Time invariant trellis decoding allows convolutional codes to be maximum-likelihood soft-decision decoded with reasonable complexity. The ability to perform economical maximum likelihood soft decision decoding is one of the major benefits of convolutional codes. This is in contrast to classic block codes, which are generally represented by a time-variant trellis and therefore are typically hard-decision decoded. Convolutional codes are often characterized by the base code rate and the depth (or memory) of the encoder [n,k,K]. The base code rate is ty ...
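The "sliding application of a boolean polynomial" can be made concrete with a small non-recursive encoder. The sketch below assumes a rate 1/2 code with constraint length 3 and generator polynomials 7 and 5 (octal), a common textbook choice that is not taken from the text; the function name and parameters are likewise illustrative.

```python
from typing import List, Sequence


def conv_encode(
    bits: Sequence[int],
    generators: Sequence[int] = (0b111, 0b101),
    constraint_length: int = 3,
) -> List[int]:
    """Emit one parity bit per generator polynomial for every input bit."""
    mask = (1 << constraint_length) - 1
    window = 0  # shift register holding the most recent input bits
    out: List[int] = []
    for b in bits:
        # Slide the window forward by one input bit.
        window = ((window << 1) | (b & 1)) & mask
        for g in generators:
            # Parity (XOR) of the window bits selected by the polynomial.
            out.append(bin(window & g).count("1") % 2)
    return out
```

The same window and polynomials are used at every step, which is what makes the decoding trellis time-invariant.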
