
RuleML Symposium

The annual International Web Rule Symposium (RuleML) is an international academic conference on research, applications, languages and standards for rule technologies. Since 2017 it has been organised as the International Joint Conference on Rules and Reasoning (RuleML+RR). It is a conference in the field of rule-based programming and rule-based systems, including production rules systems, logic programming rule engines, and business rules engines/business rules management systems; Semantic Web rule languages and rule standards (e.g., RuleML, LegalRuleML, Reaction RuleML, SWRL, RIF, Common Logic, PRR, Decision Model and Notation (DMN), SBVR); rule-based event processing languages (EPLs) and technologies; and research on inference rules, constraint handling rules, transformation rules, decision rules, production rules, and ECA rules. RuleML+RR is the leading conference to build bridges between academia and industry in the field of Web rules and their applications, especially as part of t ...

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

Common Logic

Common Logic (CL) is a framework for a family of logic languages, based on first-order logic, intended to facilitate the exchange and transmission of knowledge in computer-based systems. The CL definition permits and encourages the development of a variety of different syntactic forms, called ''dialects''. A dialect may use any desired syntax, but it must be possible to demonstrate precisely how the concrete syntax of a dialect conforms to the abstract CL semantics, which are based on a model theoretic interpretation. Each dialect may be then treated as a formal language. Once syntactic conformance is established, a dialect gets the CL semantics for free, as they are specified relative to the abstract syntax only, and hence are inherited by any conformant dialect. In addition, all CL dialects are comparable (i.e., can be automatically translated to a common language), although some may be more expressive than others. In general, a less expressive subset of CL may be translate ...

Artificial Intelligence Conferences

Artificiality (the state of being artificial, anthropogenic, or man-made) is the state of being the product of intentional human manufacture, rather than occurring naturally through processes not involving or requiring human activity. Connotations: Artificiality often carries with it the implication of being false, counterfeit, or deceptive. The philosopher Aristotle wrote in his ''Rhetoric'': However, artificiality does not necessarily have a negative connotation, as it may also reflect the ability of humans to replicate forms or functions arising in nature, as with an artificial heart or artificial intelligence. Political scientist and artificial intelligence expert Herbert A. Simon observes that "some artificial things are imitations of things in nature, and the imitation may use either the same basic materials as those in the natural object or quite different materials." (Herbert A. Simon, ''The Sciences of the Artificial'' (1996), p. 4.) Simon distinguishes between the artific ...

DBLP

DBLP is a computer science bibliography website. Starting in 1993 at Universität Trier in Germany, it grew from a small collection of HTML files and became an organization hosting a database and logic programming bibliography site. Since November 2018, DBLP has been a branch of Schloss Dagstuhl – Leibniz-Zentrum für Informatik (LZI). DBLP listed more than 5.4 million journal articles, conference papers, and other publications on computer science in December 2020, up from about 14,000 in 1995 and 3.66 million in July 2016. All important journals on computer science are tracked. Proceedings papers of many conferences are also tracked. It is mirrored at three sites across the Internet. For his work on maintaining DBLP, Michael Ley received an award from the Association for Computing Machinery (ACM) and the VLDB Endowment Special Recognition Award in 1997. Furthermore, he was awarded the ACM Distinguished Service Award for "creating, developing, and curating DBLP" in 2019. ''DBLP'' ...

Event Condition Action

Event condition action (ECA) is shorthand for the structure of active rules in event-driven architecture and active database systems. Such a rule traditionally consisted of three parts:

* The ''event'' part specifies the signal that triggers the invocation of the rule
* The ''condition'' part is a logical test that, if it is satisfied (evaluates to true), causes the action to be carried out
* The ''action'' part consists of updates or invocations on the local data

This structure was used by the early research in active databases, which started to use the term ECA. Current state-of-the-art ECA rule engines use many variations on rule structure. Other features not considered by the early research have also been introduced, such as strategies for event selection into the event part. In a memory-based rule engine, the condition could be some tests on local data and actions could be updates to object attributes. In a database system, the condition could simply be a query to the database ...
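The three-part rule structure described above can be sketched in a few lines of Python. This is a minimal illustration for a memory-based setting, not the design of any particular rule engine; the names `EcaRule`, `dispatch`, and the `stock_changed` event are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

State = Dict[str, Any]  # the "local data" the rules read and update


@dataclass
class EcaRule:
    event: str                          # signal that triggers the rule
    condition: Callable[[State], bool]  # logical test on local data
    action: Callable[[State], None]     # update carried out if the test holds


def dispatch(event: str, state: State, rules: List[EcaRule]) -> int:
    """Fire every rule registered for `event` whose condition holds.

    Returns the number of rules that fired.
    """
    fired = 0
    for rule in rules:
        if rule.event == event and rule.condition(state):
            rule.action(state)
            fired += 1
    return fired


def request_reorder(state: State) -> None:
    # Hypothetical action: flag that stock should be reordered.
    state["reorder"] = True


rules = [EcaRule("stock_changed", lambda s: s["stock"] < 10, request_reorder)]
state = {"stock": 5, "reorder": False}
fired_count = dispatch("stock_changed", state, rules)
```

Here the event is matched by name only; the event-selection strategies mentioned above are outside the scope of this sketch.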

Production (computer Science)

In computer science, a production or production rule is a rewrite rule that replaces some symbols with other symbols. A finite set of productions $P$ is the main component in the specification of a formal grammar (specifically a generative grammar). The other components are a finite set $N$ of nonterminal symbols, a finite set (known as an alphabet) $\Sigma$ of terminal symbols that is disjoint from $N$, and a distinguished symbol $S \in N$ that is the ''start symbol''. In an unrestricted grammar, a production is of the form $u \to v$, where $u$ and $v$ are arbitrary strings of terminals and nonterminals, and $u$ may not be the empty string. If $v$ is the empty string, this is denoted by the symbol $\epsilon$ or $\lambda$ (rather than leaving the right-hand side blank). So productions are members of the cartesian product $V^*NV^* \times V^* = (V^*\setminus\Sigma^*) \times V^*$, where $V := N \cup \Sigma$ is the ''vocabulary'', ${}^*$ is the Kleene star operator, $V^*NV^*$ indicates concatenation, $\cup$ deno ...
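As a small illustration of productions as rewrite rules, the following Python sketch applies productions $u \to v$ to a string, one step at a time. It assumes symbols are single characters and models the empty string $\epsilon$ as `""`. The helper names `apply_production` and `derive`, and the example grammar $S \to aSb \mid \epsilon$, are chosen here for illustration. For brevity it only checks that $u$ is non-empty, not that it contains a nonterminal.

```python
from typing import List, Tuple

Production = Tuple[str, str]  # (u, v): rewrite u to v; v == "" stands for epsilon


def apply_production(production: Production, form: str) -> str:
    """Rewrite the leftmost occurrence of u in `form` with v."""
    u, v = production
    if not u:
        raise ValueError("the left-hand side u may not be the empty string")
    if u not in form:
        raise ValueError(f"{u!r} does not occur in {form!r}")
    return form.replace(u, v, 1)


def derive(start: str, steps: List[Production]) -> List[str]:
    """Apply the given productions in order, recording each sentential form."""
    forms = [start]
    for production in steps:
        forms.append(apply_production(production, forms[-1]))
    return forms


GROW: Production = ("S", "aSb")  # S -> aSb
STOP: Production = ("S", "")     # S -> epsilon

derivation = derive("S", [GROW, GROW, STOP])
# derivation == ["S", "aSb", "aaSbb", "aabb"]
```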

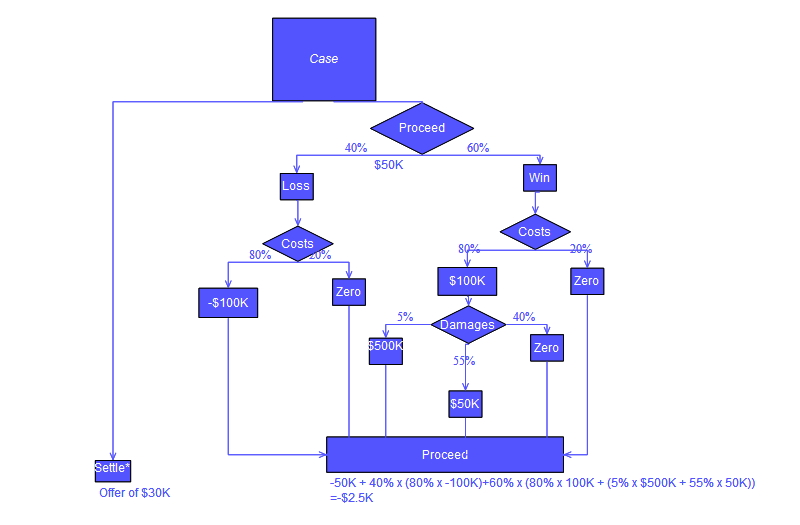

Decision Rules

A decision tree is a decision support recursive partitioning structure that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning. Overview A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a decision tree and the closely related influence diagram are used as a visual and analytical decis ...
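The statement that root-to-leaf paths represent classification rules can be made concrete with a short Python sketch. The tree encoding (a leaf is a string label, an internal node is an `(attribute, branches)` pair), the function name `extract_rules`, and the toy weather attributes are all assumptions made for this example.

```python
from typing import Dict, Iterator, Tuple, Union

# A leaf is a class label; an internal node tests one attribute and
# maps each outcome of the test to a subtree.
Tree = Union[str, Tuple[str, Dict[str, "Tree"]]]
Path = Tuple[Tuple[str, str], ...]  # sequence of (attribute, outcome) tests


def extract_rules(node: Tree, path: Path = ()) -> Iterator[Tuple[Path, str]]:
    """Yield one (tests, label) rule for every root-to-leaf path."""
    if isinstance(node, str):
        yield path, node
        return
    attribute, branches = node
    for outcome, child in branches.items():
        yield from extract_rules(child, path + ((attribute, outcome),))


tree: Tree = (
    "outlook",
    {
        "sunny": ("windy", {"yes": "stay in", "no": "play"}),
        "rainy": "stay in",
    },
)

decision_rules = list(extract_rules(tree))
for tests, label in decision_rules:
    conditions = " and ".join(f"{a} = {o}" for a, o in tests)
    print(f"if {conditions} then {label}")
```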

Rule Of Inference

Rules of inference are ways of deriving conclusions from premises. They are integral parts of formal logic, serving as norms of the logical structure of valid arguments. If an argument with true premises follows a rule of inference then the conclusion cannot be false. ''Modus ponens'', an influential rule of inference, connects two premises of the form "if P then Q" and "P" to the conclusion "Q", as in the argument "If it rains, then the ground is wet. It rains. Therefore, the ground is wet." There are many other rules of inference for different patterns of valid arguments, such as ''modus tollens'', disjunctive syllogism, constructive dilemma, and existential generalization. Rules of inference include rules of implication, which operate only in one direction from premises to conclusions, and rules of replacement, which state that two expressions are equivalent and can be freely swapped. Rules of inference contrast with formal fallacies, invalid argu ...
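As an illustration of ''modus ponens'', the sketch below repeatedly applies the rule to a set of atomic facts and "if P then Q" implications until nothing new can be concluded. Representing propositions as plain strings and naming the function `modus_ponens_closure` are assumptions of this example; it covers only this one rule, not the others listed above.

```python
from typing import Iterable, Set, Tuple


def modus_ponens_closure(
    facts: Iterable[str], implications: Iterable[Tuple[str, str]]
) -> Set[str]:
    """Return every proposition derivable from `facts` by modus ponens.

    Each implication (p, q) stands for the premise "if p then q".
    """
    known = set(facts)
    rules = list(implications)
    changed = True
    while changed:
        changed = False
        for p, q in rules:
            # From "if p then q" and "p", conclude "q".
            if p in known and q not in known:
                known.add(q)
                changed = True
    return known


derived = modus_ponens_closure(
    {"it rains"},
    [("it rains", "the ground is wet")],
)
```

With the example from the text, `derived` contains both "it rains" and "the ground is wet"; without the premise "it rains", nothing is concluded.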

Constraint Handling Rules

Constraint Handling Rules (CHR) is a declarative, rule-based programming language, introduced in 1991 by Thom Frühwirth, at the time with the European Computer-Industry Research Centre (ECRC) in Munich, Germany (Thom Frühwirth, ''Theory and Practice of Constraint Handling Rules'', Special Issue on Constraint Logic Programming (P. Stuckey and K. Marriott, Eds.), Journal of Logic Programming, Vol 37(1-3), October 1998). Originally intended for constraint programming, CHR finds applications in grammar induction, type systems, abductive reasoning, multi-agent systems, natural language processing, compilation, scheduling, spatial-temporal reasoning, testing, and verification. A CHR program, sometimes called a ''constraint handler'', is a set of rules that maintain a ''constraint store'', a multi-set of logical formulas. Execution of rules may add or remove formulas from the store, thus changing the state of the program. The order in which rules "fire" on a given constraint store is ...
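To give a feel for rules that add and remove constraints from a store, here is a rough Python emulation of a small greatest-common-divisor handler in the style of a CHR program. It is not CHR itself: the store is a plain list standing in for a multiset of `gcd(n)` constraints with non-negative integer arguments, the two rules are hard-coded, and the function name `gcd_handler` is hypothetical.

```python
from typing import List


def gcd_handler(constraints: List[int]) -> List[int]:
    """Run two hard-coded rules on a store of gcd(n) constraints until none fires.

    Assumes every n is a non-negative integer.
    """
    # Rule 1 (simplification):  gcd(0) <=> true
    #   A gcd(0) constraint is removed from the store.
    # Rule 2 (simpagation):     gcd(n) \ gcd(m) <=> 0 < n <= m | gcd(m - n)
    #   gcd(n) is kept, gcd(m) is removed and gcd(m - n) is added.
    store = list(constraints)
    while True:
        if 0 in store:
            store.remove(0)  # rule 1 fires
            continue
        fired = False
        for i, n in enumerate(store):
            for j, m in enumerate(store):
                if i != j and 0 < n <= m:
                    store[j] = m - n  # rule 2 fires
                    fired = True
                    break
            if fired:
                break
        if not fired:
            return store  # no rule applies: final store


final_store = gcd_handler([12, 8])
# final_store == [4]
```

Each firing of rule 2 strictly decreases the sum of the stored values, so the loop terminates for non-negative inputs.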

Inference

Inferences are steps in logical reasoning, moving from premises to logical consequences; etymologically, the word ''infer'' means to "carry forward". Inference is traditionally divided into deduction and induction, a distinction that in Europe dates at least to Aristotle (300s BC). Deduction is inference deriving logical conclusions from premises known or assumed to be true, with the laws of valid inference being studied in logic. Induction is inference from particular evidence to a universal conclusion. A third type of inference is sometimes distinguished, notably by Charles Sanders Peirce, contradistinguishing abduction from induction. Various fields study how inference is done in practice. Human inference (i.e. how humans draw conclusions) is traditionally studied within the fields of logic, argumentation studies, and cognitive psychology; artificial intelligence researchers develop automated inference systems to emulate human inference. Statist ...

Complex Event Processing

Event processing is a method of tracking and analyzing (processing) streams of information (data) about things that happen (events), and deriving a conclusion from them. Complex event processing (CEP) consists of a set of concepts and techniques developed in the early 1990s for processing real-time events and extracting information from event streams as they arrive. The goal of complex event processing is to identify meaningful events (such as opportunities or threats) in real-time situations and respond to them as quickly as possible. These events may be happening across the various layers of an organization as sales leads, orders or customer service calls. Or, they may be news items, text messages, social media posts, business processes (such as supply chain), traffic reports, weather reports, or other kinds of data. An event may also be defined as a "change of state," when a measurement exceeds a predefined threshold of time, temperature, or other value. Analysts have suggest ...
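The "change of state" definition above, where an event occurs when a measurement exceeds a predefined threshold, can be sketched as a simple stream filter in Python. The function name `threshold_crossings`, the `(timestamp, value)` reading format, and the sample temperatures are assumptions for illustration; real CEP engines offer far richer pattern matching.

```python
from typing import Iterable, Iterator, Tuple

Reading = Tuple[int, float]  # (timestamp, measured value)


def threshold_crossings(
    readings: Iterable[Reading], threshold: float
) -> Iterator[Reading]:
    """Yield a reading each time the value rises from at-or-below to above `threshold`.

    Readings are processed one at a time, as they arrive.
    """
    above = False
    for timestamp, value in readings:
        now_above = value > threshold
        if now_above and not above:
            yield timestamp, value  # state changed: emit an event
        above = now_above


stream = [(0, 20.5), (1, 31.0), (2, 32.5), (3, 29.0), (4, 35.0)]
alerts = list(threshold_crossings(stream, threshold=30.0))
# alerts == [(1, 31.0), (4, 35.0)]
```

Only the transitions are reported: the reading at timestamp 2 is above the threshold but does not represent a new change of state.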