
Random Indexing

Random indexing is a dimensionality reduction method and computational framework for distributional semantics, based on the insight that very-high-dimensional vector space model implementations are impractical, that models need not grow in dimensionality when new items (e.g. new terminology) are encountered, and that a high-dimensional model can be projected into a space of lower dimensionality without compromising L2 distance metrics if the resulting dimensions are chosen appropriately. This is the original point of the random projection approach to dimension reduction, first formulated as the Johnson–Lindenstrauss lemma, and locality-sensitive hashing has some of the same starting points. Random indexing, as used in the representation of language, originates from the work of Pentti Kanerva on sparse distributed memory, and can be described as an incremental formulation of a random projection. It can also be verified that random indexing is a random projection technique for the constr ...
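
The incremental idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the formulation of any particular paper: each word is given a fixed sparse ternary index vector, and a word's context vector is the running sum of the index vectors of its neighbours. The names `DIM`, `NONZERO`, `make_index_vector`, `train` and the `window` parameter are hypothetical choices made for this example.

```python
import random
from collections import defaultdict

DIM = 64       # dimensionality of the reduced space (assumed value)
NONZERO = 4    # number of +1/-1 entries per index vector (assumed value)


def make_index_vector(rng):
    """Return a sparse ternary vector: NONZERO entries are +1 or -1, the rest 0."""
    vec = [0] * DIM
    for pos in rng.sample(range(DIM), NONZERO):
        vec[pos] = rng.choice((-1, 1))
    return vec


def train(tokens, window=2, seed=0):
    """Accumulate context vectors incrementally over a token sequence."""
    rng = random.Random(seed)
    index = {}                                 # word -> fixed random index vector
    context = defaultdict(lambda: [0] * DIM)   # word -> accumulated context vector
    for i, word in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j == i:
                continue
            neighbour = tokens[j]
            # A new word only needs a new index vector; DIM never grows.
            if neighbour not in index:
                index[neighbour] = make_index_vector(rng)
            ctx = context[word]
            for k, value in enumerate(index[neighbour]):
                ctx[k] += value
    return index, dict(context)
```

Calling `train("the cat sat on the mat".split())` returns one `DIM`-dimensional context vector per word, however large the vocabulary becomes.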

Dimensionality Reduction

Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data, ideally close to its intrinsic dimension. Working in high-dimensional spaces can be undesirable for many reasons; raw data are often sparse as a consequence of the curse of dimensionality, and analyzing the data is usually computationally intractable (hard to control or deal with). Dimensionality reduction is common in fields that deal with large numbers of observations and/or large numbers of variables, such as signal processing, speech recognition, neuroinformatics, and bioinformatics. Methods are commonly divided into linear and nonlinear approaches. Approaches can also be divided into feature selection and feature extraction. Dimensionality reduction can be used for noise reduction, data visualization, cluster analysis, or as an intermediat ...

Distributional Semantics

Distributional semantics is a research area that develops and studies theories and methods for quantifying and categorizing semantic similarities between linguistic items based on their distributional properties in large samples of language data. The basic idea of distributional semantics can be summed up in the so-called distributional hypothesis: *linguistic items with similar distributions have similar meanings.*

Distributional hypothesis

The distributional hypothesis in linguistics is derived from the semantic theory of language usage, i.e. words that are used and occur in the same contexts tend to purport similar meanings. The underlying idea that "a word is characterized by the company it keeps" was popularized by Firth in the 1950s. The distributional hypothesis is the basis for statistical semantics. Although the distributional hypothesis originated in linguistics, it is now receiving attention in cognitive science, especially regarding the context of word use. In rec ...

Vector Space Model

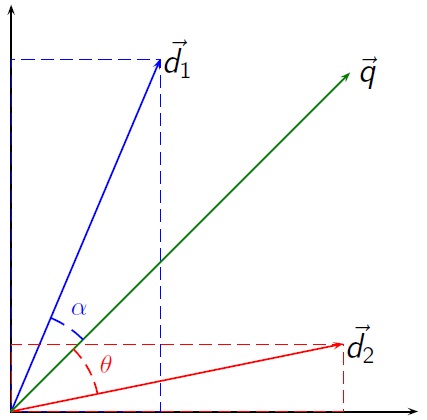

Vector space model or term vector model is an algebraic model for representing text documents (and any objects, in general) as vectors of identifiers (such as index terms). It is used in information filtering, information retrieval, indexing and relevancy rankings. Its first use was in the SMART Information Retrieval System.

Definitions

Documents and queries are represented as vectors.

$$d_j = (w_{1,j}, w_{2,j}, \dotsc, w_{n,j})$$

$$q = (w_{1,q}, w_{2,q}, \dotsc, w_{n,q})$$

Each dimension corresponds to a separate term. If a term occurs in the document, its value in the vector is non-zero. Several different ways of computing these values, also known as (term) weights, have been developed. One of the best known schemes is tf-idf weighting. The definition of *term* depends on the application. Typically terms are single words, keywords, or longer phrases. If words are chosen to be the terms, the dimensionality of the vector is the number of words in the vocabulary (the number of dist ...
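
As a rough illustration of the definitions above, the following sketch builds one weight vector per document and compares documents by cosine similarity. The weighting used here (raw term frequency times `log(N / df)`) is only one of several tf-idf variants and is an assumption of this example, as are the function names `tfidf_vectors` and `cosine` and the whitespace tokenization.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return (vocabulary, vectors); each dimension corresponds to one term."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({term for tokens in tokenized for term in tokens})
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # With this variant, a term present in every document gets weight 0.
        vectors.append([tf[term] * math.log(n_docs / df[term]) for term in vocab])
    return vocab, vectors


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0
```

For example, with the documents `"cat sat mat"`, `"cat sat hat"` and `"dog ran far"`, the first two share terms and therefore have a positive cosine similarity, while the first and third share none and score zero.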

Random Projection

In mathematics and statistics, random projection is a technique used to reduce the dimensionality of a set of points which lie in Euclidean space. Random projection methods are known for their power, simplicity, and low error rates when compared to other methods. According to experimental results, random projection preserves distances well, but empirical results are sparse. They have been applied to many natural language tasks under the name random indexing.

Dimensionality reduction

Dimensionality reduction, as the name suggests, is reducing the number of random variables using various mathematical methods from statistics and machine learning. Dimensionality reduction is often used to reduce the problem of managing and manipulating large data sets. Dimensionality reduction techniques generally use linear transformations in determining the intrinsic dimensionality of the manifold as well as extracting its principal directions. For this purpose there are various related techniques, ...

Johnson–Lindenstrauss Lemma

In mathematics, the Johnson–Lindenstrauss lemma is a result named after William B. Johnson and Joram Lindenstrauss concerning low-distortion embeddings of points from high-dimensional into low-dimensional Euclidean space. The lemma states that a set of points in a high-dimensional space can be embedded into a space of much lower dimension in such a way that distances between the points are nearly preserved. The map used for the embedding is at least Lipschitz, and can even be taken to be an orthogonal projection. The lemma has applications in compressed sensing, manifold learning, dimensionality reduction, and graph embedding. Much of the data stored and manipulated on computers, including text and images, can be represented as points in a high-dimensional space (see vector space model for the case of text). However, the essential algorithms for working with such data tend to become bogged down very quickly as dimension increases. It is therefore desirable to reduce the dimensi ...
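
The near-preservation of distances can be observed empirically with a small experiment. The sketch below is an illustration only: it uses a dense Gaussian random matrix with entries drawn from N(0, 1/k), a common alternative to the orthogonal projection mentioned above, and the dimensions `d = 500`, `k = 200` and all function names are assumptions chosen for this example.

```python
import math
import random


def random_projection_matrix(k, d, rng):
    """Return a k x d matrix with independent N(0, 1/k) entries."""
    scale = 1.0 / math.sqrt(k)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(d)] for _ in range(k)]


def project(matrix, x):
    """Map a d-dimensional point to k dimensions (matrix-vector product)."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in matrix]


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


rng = random.Random(0)
d, k = 500, 200
points = [[rng.random() for _ in range(d)] for _ in range(5)]
R = random_projection_matrix(k, d, rng)
projected = [project(R, p) for p in points]

# Ratio of projected distance to original distance for every pair of points.
ratios = [
    euclidean(projected[i], projected[j]) / euclidean(points[i], points[j])
    for i in range(len(points))
    for j in range(i + 1, len(points))
]
```

With these settings the ratios should cluster around 1; a smaller `k` saves more space at the cost of larger distortion.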

Locality-sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is an algorithmic technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH).

Definitions

An *LSH family* ...
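
One well-known data-independent scheme, random hyperplane hashing for cosine similarity, gives a feel for how bucketing works. It is not taken from the text above and is shown only as a sketch; `make_hyperplanes`, `signature` and `bucketize` are hypothetical helper names. Each bit of the signature records on which side of a random hyperplane the input falls, so vectors separated by a small angle tend to agree on most bits.

```python
import random
from collections import defaultdict


def make_hyperplanes(n_bits, dim, rng):
    """Draw n_bits random hyperplane normals with Gaussian components."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def signature(planes, x):
    """One bit per hyperplane: 1 if x has a non-negative dot product with its normal."""
    return tuple(
        1 if sum(p * xi for p, xi in zip(plane, x)) >= 0 else 0
        for plane in planes
    )


def bucketize(planes, items):
    """Group named vectors by signature; items sharing a bucket are candidate neighbours."""
    buckets = defaultdict(list)
    for name, vec in items.items():
        buckets[signature(planes, vec)].append(name)
    return dict(buckets)
```

Because the signature depends only on direction, a vector and any positive multiple of it always share a bucket, while a vector and its negation differ in every bit.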

Pentti Kanerva

Pentti Kanerva is a research affiliate at the Redwood Neuroscience Institute, and is the originator of the sparse distributed memory model (Kanerva, Pentti. Sparse Distributed Memory. MIT Press, 1988). He is responsible for relating the properties of long-term memory to mathematical properties of high-dimensional spaces, and compares artificial neural-net associative memory to conventional computer random-access memory and to the neurons in the brain. This theory has been applied to design and implement the random indexing approach to learning semantic relations from linguistic data (Joshi, Aditya, Johan Halseth, and Pentti Kanerva. "Language Recognition using Random Indexing." arXiv preprint arXiv:1412.7026, 2014).

Education

Kanerva has an A.A. from Warren Wilson College, an M.S. in forestry with a minor in mathematics and statistics from the University of Helsinki, and a Ph.D. in Philosophy from Stanford University.

Employment

After earning his Ph.D. at Stanford in 1984, Kaner ...

Sparse Distributed Memory

Sparse distributed memory (SDM) is a mathematical model of human long-term memory introduced by Pentti Kanerva in 1988 while he was at NASA Ames Research Center. It is a generalized random-access memory (RAM) for long (e.g., 1,000 bit) binary words. These words serve as both addresses to and data for the memory. The main attribute of the memory is sensitivity to similarity, meaning that a word can be read back not only by giving the original write address but also by giving one close to it, as measured by the number of mismatched bits (i.e., the Hamming distance between memory addresses). SDM implements transformation from logical space to physical space using distributed data representation and storage, similarly to encoding processes in human memory. A value corresponding to a logical address is stored into many physical addresses. This way of storing is robust and not deterministic. A memory cell is not addressed directly. If input data (logical addresses) are partially damaged at ...
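
The read and write behaviour described above can be imitated with a toy model. The following is a simplified sketch under assumptions of this example, not Kanerva's full design: words are 64 bits rather than 1,000, there are 1,000 randomly addressed physical locations, a location is activated when its address lies within Hamming distance 28 of the logical address, and each location keeps one signed counter per bit. All constants and names are hypothetical.

```python
import random

N_BITS = 64          # word length (assumed; the text mentions e.g. 1,000 bits)
N_LOCATIONS = 1000   # number of physical locations (assumed)
RADIUS = 28          # activation radius in Hamming distance (assumed)


def hamming(a, b):
    """Number of mismatched bits between two integers."""
    return bin(a ^ b).count("1")


class SparseDistributedMemory:
    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Physical locations have fixed random addresses.
        self.addresses = [rng.getrandbits(N_BITS) for _ in range(N_LOCATIONS)]
        self.counters = [[0] * N_BITS for _ in range(N_LOCATIONS)]

    def _active(self, address):
        """Indices of physical locations within RADIUS of the logical address."""
        return [i for i, a in enumerate(self.addresses)
                if hamming(a, address) <= RADIUS]

    def write(self, address, word):
        """Store the word in every activated location: +1 for a 1 bit, -1 for a 0 bit."""
        for i in self._active(address):
            row = self.counters[i]
            for bit in range(N_BITS):
                row[bit] += 1 if (word >> bit) & 1 else -1

    def read(self, address):
        """Sum the counters of the activated locations and threshold each bit at zero."""
        sums = [0] * N_BITS
        for i in self._active(address):
            for bit, count in enumerate(self.counters[i]):
                sums[bit] += count
        return sum(1 << bit for bit, total in enumerate(sums) if total > 0)
```

Writing a word at its own address and then reading from an address that differs in a few bits should, with very high probability for these settings, return the original word, because the two activation sets overlap.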

Bit Vector

A bit array (also known as bitmask, bit map, bit set, bit string, or bit vector) is an array data structure that compactly stores bits. It can be used to implement a simple set data structure. A bit array is effective at exploiting bit-level parallelism in hardware to perform operations quickly. A typical bit array stores *kw* bits, where *w* is the number of bits in the unit of storage, such as a byte or word, and *k* is some nonnegative integer. If *w* does not divide the number of bits to be stored, some space is wasted due to internal fragmentation.

Definition

A bit array is a mapping from some domain (almost always a range of integers) to values in the set {0, 1}. The values can be interpreted as dark/light, absent/present, locked/unlocked, valid/invalid, et cetera. The point is that there are only two possible values, so they can be stored in one bit. As with other arrays, the access to a single bit can be managed by applying an index to the array. Assuming its size (o ...
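
A minimal sketch of such a structure, assuming a storage unit of w = 8 bits (one byte) and hypothetical method names `set`, `clear` and `test`, might look like this:

```python
class BitArray:
    """Fixed-size bit array packed into bytes (w = 8 bits per storage unit)."""

    def __init__(self, size):
        self.size = size
        # Round up to whole bytes; unused bits in the last byte are wasted space.
        self._bytes = bytearray((size + 7) // 8)

    def _check(self, i):
        if not 0 <= i < self.size:
            raise IndexError("bit index out of range")

    def set(self, i):
        """Set bit i to 1."""
        self._check(i)
        self._bytes[i >> 3] |= 1 << (i & 7)

    def clear(self, i):
        """Set bit i to 0."""
        self._check(i)
        self._bytes[i >> 3] &= ~(1 << (i & 7)) & 0xFF

    def test(self, i):
        """Return True if bit i is 1."""
        self._check(i)
        return bool((self._bytes[i >> 3] >> (i & 7)) & 1)
```

Here `i >> 3` selects the byte and `i & 7` the bit within it. A 10-bit array occupies two bytes, so six bits are lost to internal fragmentation.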

Hamming Distance

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of *substitutions* required to change one string into the other, or the minimum number of *errors* that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming. A major application is in coding theory, more specifically to block codes, in which the equal-length strings are vectors over a finite field.

Definition

The Hamming distance between two equal-length strings of symbols is the number of positions at which the corresponding symbols are different.

Examples

The symbols may be letters, bits, or decimal digits, among other possibilities. For example, the Hamming distance between: ...
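
The definition translates directly into code. The sketch below gives one version for arbitrary equal-length sequences and one for bit patterns stored as integers; the function names and the sample inputs in the usage note are illustrative choices made for this example.

```python
def hamming_distance(a, b):
    """Count the positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def hamming_distance_bits(a, b):
    """Hamming distance between two non-negative integers viewed as bit strings."""
    # XOR leaves a 1 exactly where the two bit patterns disagree.
    return bin(a ^ b).count("1")
```

For instance, `hamming_distance("karolin", "kathrin")` is 3, and `hamming_distance_bits(0b1011101, 0b1001001)` is 2.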

Information Retrieval

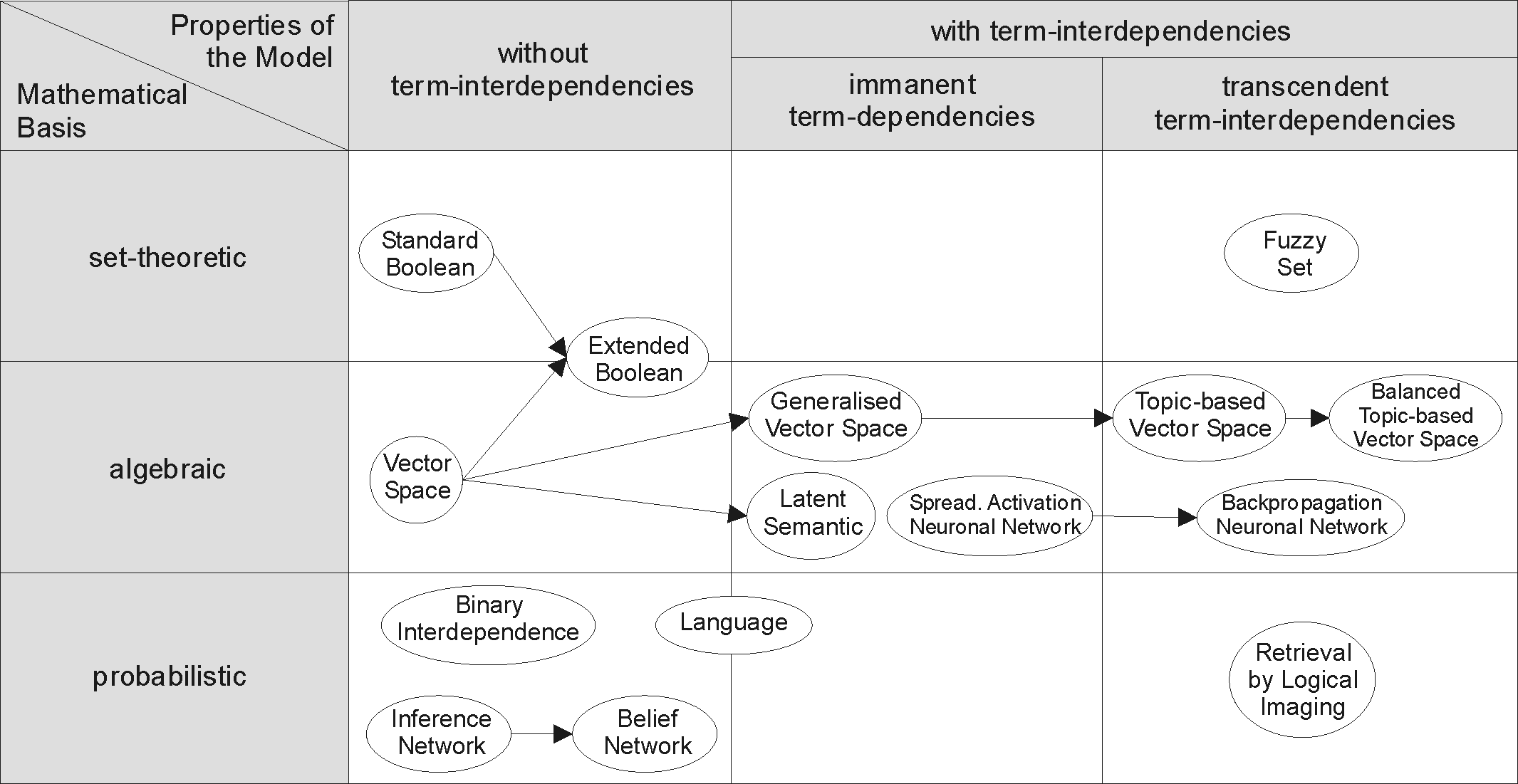

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents, and that stores and manages those documents. Web search engines are the most visible IR applications.

Overview

An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In inf ...

Document Clustering

Document clustering (or text clustering) is the application of cluster analysis to textual documents. It has applications in automatic document organization, topic extraction and fast information retrieval or filtering.

Overview

Document clustering involves the use of descriptors and descriptor extraction. Descriptors are sets of words that describe the contents within the cluster. Document clustering is generally considered to be a centralized process. Examples of document clustering include web document clustering for search users. Applications of document clustering fall into two types, online and offline. Online applications are usually constrained by efficiency problems when compared to offline applications. Text clustering may be used for different tasks, such as grouping similar documents (news, tweets, etc.), analyzing customer or employee feedback, and discovering meaningful implicit subjects across all documents. In general, there are two common algorit ...