RV Coefficient

In statistics, the RV coefficient is a multivariate generalization of the ''squared'' Pearson correlation coefficient (squared because the RV coefficient takes values between 0 and 1). It measures the closeness of two sets of points, each of which may be represented as a matrix. The major approaches within statistical multivariate data analysis can all be brought into a common framework in which the RV coefficient is maximised subject to relevant constraints. Specifically, these methodologies include principal component analysis, canonical correlation analysis, multivariate regression, and statistical classification (linear discrimination). One application of the RV coefficient is in functional neuroimaging, where it can measure the similarity between two subjects' series of brain scans or between different scans of the same subject. Definitions The definition of the RV-coefficient makes use of ideas concerning the definition of scalar-valued quantities which are called the "v ...
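
The snippet above is cut off before the definition, so the following is only a minimal sketch. It assumes the commonly used matrix form, RV(X, Y) = tr(XX'YY') / sqrt(tr((XX')^2) tr((YY')^2)), applied to column-centred matrices with the same number of rows. The function name `rv_coefficient` and the use of NumPy are illustrative choices, not something the source specifies.

```python
import numpy as np

def rv_coefficient(X, Y):
    """RV coefficient of two data matrices that share the same rows (observations)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    # Column-centre each matrix (assumed convention).
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    # n x n cross-product matrices describing each set of points.
    Sx = Xc @ Xc.T
    Sy = Yc @ Yc.T
    numerator = np.trace(Sx @ Sy)
    denominator = np.sqrt(np.trace(Sx @ Sx) * np.trace(Sy @ Sy))
    return numerator / denominator
```

Under this form, a matrix compared with a rescaled or rotated copy of itself gives a value of 1, which is consistent with the coefficient measuring the closeness of two point configurations rather than of individual variables.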

Multivariate Statistics

Multivariate statistics is a subdivision of statistics encompassing the simultaneous observation and analysis of more than one outcome variable. Multivariate statistics concerns understanding the different aims and background of each of the different forms of multivariate analysis, and how they relate to each other. The practical application of multivariate statistics to a particular problem may involve several types of univariate and multivariate analyses in order to understand the relationships between variables and their relevance to the problem being studied. In addition, multivariate statistics is concerned with multivariate probability distributions, in terms of both how these can be used to represent the distributions of observed data and how they can be used as part of statistical inference, particularly where several different quantities are of interest to the same analysis. Certain types of problems involving multivariate data, for example simple linear regression a ...

Pearson Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC), also known as Pearson's ''r'', the Pearson product-moment correlation coefficient (PPMCC), the bivariate correlation, or colloquially simply as the correlation coefficient, is a measure of linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of teenagers from a high school to have a Pearson correlation coefficient significantly greater than 0, but less than 1 (as 1 would represent an unrealistically perfect correlation). Naming and history It was developed by ...
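
The ratio described above can be sketched in a few lines of plain Python. The function name `pearson_r` and the age/height figures are made up for illustration. The 1/(n − 1) factors of the covariance and the standard deviations cancel, so they are omitted.

```python
from math import sqrt

def pearson_r(x, y):
    """Covariance of x and y divided by the product of their standard deviations."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of centred products; the common 1/(n - 1) factor cancels in the ratio.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical ages (years) and heights (cm) of six teenagers.
ages = [13, 14, 15, 16, 17, 18]
heights = [155, 162, 160, 170, 168, 175]
print(pearson_r(ages, heights))
```

For data like these, the result lies strictly between 0 and 1, matching the age and height example in the text.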

Matrix (mathematics)

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object. For example, \begin{bmatrix}1 & 9 & -13 \\ 20 & 5 & -6\end{bmatrix} is a matrix with two rows and three columns. This is often referred to as a "two by three matrix", a "2 × 3 matrix", or a matrix of dimension 2 × 3. Without further specifications, matrices represent linear maps, and allow explicit computations in linear algebra. Therefore, the study of matrices is a large part of linear algebra, and most properties and operations of abstract linear algebra can be expressed in terms of matrices. For example, matrix multiplication represents composition of linear maps. Not all matrices are related to linear algebra. This is the case, in particular, for incidence matrices and adjacency matrices in graph theory. ''This article focuses on matrices related to linear algebra, an ...
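
To illustrate the remark that matrix multiplication represents composition of linear maps, here is a small NumPy sketch. `A` is the two-by-three example from the text; the matrix `B` and the vector `v` are hypothetical values chosen for the demonstration.

```python
import numpy as np

# The two-by-three matrix from the text: a linear map from R^3 to R^2.
A = np.array([[1, 9, -13],
              [20, 5, -6]])
# Hypothetical 2 x 2 matrix that swaps the two coordinates (a map from R^2 to R^2).
B = np.array([[0, 1],
              [1, 0]])
v = np.array([1, 0, 2])

# Apply A first and then B ...
step_by_step = B @ (A @ v)
# ... or multiply the matrices once and apply the product.
composed = (B @ A) @ v

print(A.shape)                  # (2, 3)
print(step_by_step, composed)   # [  8 -25] [  8 -25]
```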

Principal Component Analysis

Principal component analysis (PCA) is a popular technique for analyzing large datasets containing a high number of dimensions/features per observation, increasing the interpretability of data while preserving the maximum amount of information, and enabling the visualization of multidimensional data. Formally, PCA is a statistical technique for reducing the dimensionality of a dataset. This is accomplished by linearly transforming the data into a new coordinate system where (most of) the variation in the data can be described with fewer dimensions than the initial data. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identify clusters of closely related data points. Principal component analysis has applications in many fields such as population genetics, microbiome studies, and atmospheric science. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the ...
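
The linear transformation described above can be sketched with a singular value decomposition of the centred data. This is one common way to compute PCA, not necessarily the one the full article presents; the function name `pca` and its return values are illustrative.

```python
import numpy as np

def pca(X, n_components):
    """Return principal directions, projected scores, and explained variances."""
    X = np.asarray(X, dtype=float)
    # Centre each feature so the directions describe variation about the mean.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are orthonormal unit vectors, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]
    # Coordinates of each observation in the new coordinate system.
    scores = Xc @ components.T
    explained_variance = s[:n_components] ** 2 / (X.shape[0] - 1)
    return components, scores, explained_variance
```

Calling `pca(X, 2)` and plotting the two score columns against each other gives the kind of two-dimensional view the text mentions.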

Canonical Correlation Analysis

In statistics, canonical-correlation analysis (CCA), also called canonical variates analysis, is a way of inferring information from cross-covariance matrices. If we have two vectors X = (X_1, \dots, X_n) and Y = (Y_1, \dots, Y_m) of random variables, and there are correlations among the variables, then canonical-correlation analysis will find linear combinations of ''X'' and ''Y'' which have maximum correlation with each other. T. R. Knapp notes that "virtually all of the commonly encountered parametric tests of significance can be treated as special cases of canonical-correlation analysis, which is the general procedure for investigating the relationships between two sets of variables." The method was first introduced by Harold Hotelling in 1936, although in the context of angles between flats the mathematical concept was published by Jordan in 1875. Definition Given two column vectors X = (x_1, \dots, x_n)^T and Y ...
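
As a rough sketch of the idea, the sample canonical correlations can be computed as the cosines of the principal angles between the column spaces of the two centred data matrices, which ties in with the "angles between flats" remark above. The code assumes both centred matrices have full column rank and more rows than columns. It returns only the correlations, not the weight vectors of the linear combinations, and the function name is illustrative.

```python
import numpy as np

def canonical_correlations(X, Y):
    """Sample canonical correlations between the columns of X and of Y."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    # Orthonormal bases for the two column spaces (assumes full column rank).
    Qx, _ = np.linalg.qr(Xc)
    Qy, _ = np.linalg.qr(Yc)
    # Singular values of Qx^T Qy are the cosines of the principal angles,
    # returned in decreasing order.
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    # Clip tiny floating-point overshoot outside [0, 1].
    return np.clip(s, 0.0, 1.0)
```

If some column of `Y` is an exact linear combination of the columns of `X`, the largest value returned is 1 up to rounding.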

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
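
The ordinary least squares criterion mentioned above can be sketched with NumPy's least-squares solver. The helper name `ols_fit` and the convention of returning the intercept first are choices made for this example.

```python
import numpy as np

def ols_fit(X, y):
    """Coefficients (intercept first) minimising the sum of squared residuals."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # a single predictor becomes one column
    # Prepend a column of ones so the fitted line or hyperplane has an intercept.
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

# Points lying exactly on y = 1 + 2x recover intercept 1 and slope 2 (up to rounding).
print(ols_fit([0, 1, 2, 3], [1, 3, 5, 7]))
```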

Statistical Classification

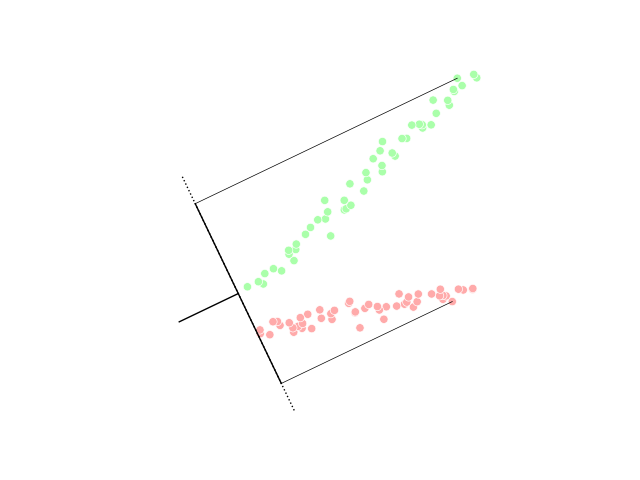

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a ...
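
A classifier that compares a new observation with previous observations through a distance function can be sketched as a one-nearest-neighbour rule. The feature values and labels below are invented for illustration, and Euclidean distance is only one possible choice of distance function.

```python
from math import dist

def nearest_neighbour_classify(train_points, train_labels, query):
    """Return the label of the training observation closest to the query."""
    closest = min(range(len(train_points)),
                  key=lambda i: dist(train_points[i], query))
    return train_labels[closest]

# Hypothetical integer-valued features per email:
# (occurrences of a particular word, number of links).
emails = [(0, 0), (1, 0), (8, 5), (9, 6)]
labels = ["non-spam", "non-spam", "spam", "spam"]
print(nearest_neighbour_classify(emails, labels, (7, 4)))  # spam
```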

Linear Discriminant Analysis

Linear discriminant analysis (LDA), normal discriminant analysis (NDA), or discriminant function analysis is a generalization of Fisher's linear discriminant, a method used in statistics and other fields, to find a linear combination of features that characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification. LDA is closely related to analysis of variance (ANOVA) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements. However, ANOVA uses categorical independent variables and a continuous dependent variable, whereas discriminant analysis has continuous independent variables and a categorical dependent variable (''i.e.'' the class label). Logistic regression and probit regression are more similar to LDA than ANOVA is, as they also explain a categorica ...
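
For the two-class case, the linear combination that separates the classes can be sketched with Fisher's linear discriminant: the direction is proportional to the inverse within-class scatter matrix applied to the difference of the class means. This covers only the two-class special case and assumes the within-class scatter matrix is invertible; the function name is illustrative.

```python
import numpy as np

def fisher_direction(X0, X1):
    """Unit vector w such that projections onto w separate class 0 from class 1."""
    X0 = np.asarray(X0, dtype=float)
    X1 = np.asarray(X1, dtype=float)
    m0 = X0.mean(axis=0)
    m1 = X1.mean(axis=0)
    # Within-class scatter: summed outer products of the centred observations.
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    # Solve Sw w = (m1 - m0) rather than forming the inverse explicitly.
    w = np.linalg.solve(Sw, m1 - m0)
    return w / np.linalg.norm(w)
```

Projecting observations with `X @ w` reduces them to one dimension; a threshold on that value then gives a simple linear classifier.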

Functional Neuroimaging

Functional neuroimaging is the use of neuroimaging technology to measure an aspect of brain function, often with a view to understanding the relationship between activity in certain brain areas and specific mental functions. It is primarily used as a research tool in cognitive neuroscience, cognitive psychology, neuropsychology, and social neuroscience. Overview Common methods of functional neuroimaging include positron emission tomography (PET), functional magnetic resonance imaging (fMRI), electroencephalography (EEG), magnetoencephalography (MEG), functional near-infrared spectroscopy (fNIRS), single-photon emission computed tomography (SPECT), and functional ultrasound imaging (fUS). PET, fMRI, fNIRS and fUS can measure localized changes in cerebral blood flow related to neural activity. These changes are referred to as ''activations''. Regions of the brain which are activated when a subject performs a particular task may play a role in the neural computations which ...

Random Variables

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. It is a mapping or a function from possible outcomes (e.g., the possible upper sides of a flipped coin such as heads H and tails T) in a sample space (e.g., the set \{H, T\}) to a measurable space, often the real numbers (e.g., \{-1, 1\}, in which 1 corresponds to H and -1 corresponds to T). Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error. However, the interpretation of probability is philosophically complicated, and even in specific cases is not always straightforward. The purely mathematical analysis of random variables is independent of such interpretational difficulties, and can be based upon a rigorous axiomatic setup. In the formal mathematical language of measure theory, a rando ...
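
The coin example can be made concrete with a short sketch in which the random variable is literally a function from the sample space to the real numbers. The fair coin and the seeded generator are assumptions of this example, not part of the definition.

```python
import random

SAMPLE_SPACE = ("H", "T")

def X(outcome):
    """The random variable from the text: maps heads to 1 and tails to -1."""
    return {"H": 1, "T": -1}[outcome]

def sample_mean(n, seed=0):
    """Average of X over n simulated flips of a fair coin (assumed fair)."""
    rng = random.Random(seed)
    return sum(X(rng.choice(SAMPLE_SPACE)) for _ in range(n)) / n

print(X("H"), X("T"))       # 1 -1
print(sample_mean(10_000))  # close to 0 for a fair coin
```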

Congruence Coefficient

In multivariate statistics, the congruence coefficient is an index of the similarity between factors that have been derived in a factor analysis. It was introduced in 1948 by Cyril Burt who referred to it as ''unadjusted correlation''. It is also called ''Tucker's congruence coefficient'' after Ledyard Tucker who popularized the technique. Its values range between -1 and +1. It can be used to study the similarity of extracted factors across different samples of, for example, test takers who have taken the same test. Lorenzo-Seva, U. & ten Berge, J.M.F. (2006). Tucker’s Congruence Coefficient as a Meaningful Index of Factor Similarity. ''Methodology, 2,'' 57–64. Jensen, A.R. (1998). ''The ''g'' factor: The science of mental ability''. Westport, CT: Praeger, pp. 99–100. Abdi, H. (2007). RV Coefficient and Congruence Coefficient. In Neil Salkind (Ed.), ''Encyclopedia of Measurement and Statistics.'' Thousand Oaks (CA): Sage. Definition Let ''X'' and ''Y'' be column vectors of factor lo ...
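
The definition is truncated in the snippet above, so this sketch assumes the usual form of Tucker's coefficient: the sum of products of the two loading vectors divided by the square root of the product of their sums of squares. Unlike the Pearson correlation, the vectors are not mean-centred, which matches the name ''unadjusted correlation''. The function name and the loadings are illustrative.

```python
from math import sqrt

def congruence_coefficient(x, y):
    """Uncentred cosine similarity between two vectors of factor loadings."""
    if len(x) != len(y):
        raise ValueError("loading vectors must have the same length")
    numerator = sum(a * b for a, b in zip(x, y))
    denominator = sqrt(sum(a * a for a in x) * sum(b * b for b in y))
    return numerator / denominator

# Hypothetical loadings of the same three variables in two samples.
print(congruence_coefficient([1, 2, 3], [3, 2, 1]))  # 10/14, roughly 0.714
```

The same two vectors have a Pearson correlation of −1, which shows how much omitting the centring step can change the result.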

Distance Correlation

In statistics and in probability theory, distance correlation or distance covariance is a measure of dependence between two paired random vectors of arbitrary, not necessarily equal, dimension. The population distance correlation coefficient is zero if and only if the random vectors are independent. Thus, distance correlation measures both linear and nonlinear association between two random variables or random vectors. This is in contrast to Pearson's correlation, which can only detect linear association between two random variables. Distance correlation can be used to perform a statistical test of dependence with a permutation test. One first computes the distance correlation (involving the re-centering of Euclidean distance matrices) between two random vectors, and then compares this value to the distance correlations of many shuffles of the data. Background The classical measure of dependence, the Pearson correlation coefficient, is mainly sensitive to a linear relation ...
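
The two steps described above, re-centring the Euclidean distance matrices and comparing the observed value with values from shuffled data, can be sketched as follows. The function names, the number of permutations and the add-one p-value convention are choices made for this example.

```python
import numpy as np

def _centred_distances(A):
    """Pairwise Euclidean distance matrix with row, column and grand means removed."""
    A = np.asarray(A, dtype=float)
    if A.ndim == 1:
        A = A[:, None]
    D = np.linalg.norm(A[:, None, :] - A[None, :, :], axis=-1)
    return D - D.mean(axis=0) - D.mean(axis=1)[:, None] + D.mean()

def distance_correlation(X, Y):
    """Sample distance correlation between paired observations X and Y."""
    A = _centred_distances(X)
    B = _centred_distances(Y)
    dcov2 = (A * B).mean()                             # squared distance covariance
    denom = np.sqrt((A * A).mean() * (B * B).mean())   # product of distance variances
    return 0.0 if denom == 0 else float(np.sqrt(max(dcov2, 0.0) / denom))

def permutation_p_value(X, Y, n_permutations=200, seed=0):
    """Share of shuffles of Y whose distance correlation reaches the observed one."""
    rng = np.random.default_rng(seed)
    Y = np.asarray(Y)
    observed = distance_correlation(X, Y)
    hits = sum(
        distance_correlation(X, Y[rng.permutation(len(Y))]) >= observed
        for _ in range(n_permutations)
    )
    return (hits + 1) / (n_permutations + 1)
```

For example, with `x = np.linspace(-1, 1, 50)` and `y = x ** 2`, the Pearson correlation is essentially zero, while the distance correlation is clearly positive and the permutation test flags the dependence.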