
Richardson Extrapolation

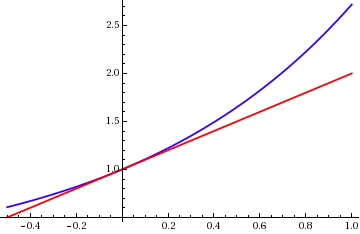

In numerical analysis, Richardson extrapolation is a sequence acceleration method used to improve the rate of convergence of a sequence of estimates of some value $A^\ast = \lim_{h \to 0} A(h)$. In essence, given the value of $A(h)$ for several values of $h$, we can estimate $A^\ast$ by extrapolating the estimates to $h = 0$. It is named after Lewis Fry Richardson, who introduced the technique in the early 20th century, though the idea was already known to Christiaan Huygens in his calculation of π. In the words of Birkhoff and Rota, "its usefulness for practical computations can hardly be overestimated." Practical applications of Richardson extrapolation include Romberg integration, which applies Richardson extrapolation to the trapezoid rule, and the Bulirsch–Stoer algorithm for solving ordinary differential equations.

Example of Richardson extrapolation

Suppose that we wish to approximate $A^\ast$, and we have a method $A(h)$ that depends on a small parameter $h$ in such a way that A( …
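As a minimal sketch, assume the error of $A(h)$ has the form $A(h) = A^\ast + C h^n + O(h^{n+1})$ with a known exponent $n$ (an assumption of this sketch). Combining two estimates as $\frac{t^n A(h/t) - A(h)}{t^n - 1}$ then cancels the $C h^n$ term exactly. The Python below applies one such step to a central-difference derivative, whose leading error is proportional to $h^2$; the helper names `richardson`, `central_difference` and `estimate` are illustrative, not from any library.

```python
import math
from typing import Callable


def richardson(A: Callable[[float], float], h: float, n: int, t: float = 2.0) -> float:
    """One Richardson step, assuming A(h) = A* + C*h**n + O(h**(n+1))."""
    coarse = A(h)        # estimate with step h
    fine = A(h / t)      # estimate with the smaller step h/t
    # Weighted combination that cancels the assumed C*h**n error term.
    return (t**n * fine - coarse) / (t**n - 1)


def central_difference(f: Callable[[float], float], x: float, h: float) -> float:
    """Approximate f'(x); for smooth f the error is proportional to h**2."""
    return (f(x + h) - f(x - h)) / (2 * h)


def estimate(h: float) -> float:
    """A(h): central-difference estimate of d/dx sin(x) at x = 1."""
    return central_difference(math.sin, 1.0, h)


h = 0.1
plain = estimate(h)
improved = richardson(estimate, h, n=2)
exact = math.cos(1.0)  # the true derivative of sin at x = 1
print(f"plain error:        {abs(plain - exact):.2e}")
print(f"extrapolated error: {abs(improved - exact):.2e}")
```

With $t = 2$ and $n = 2$ the combination is $(4A(h/2) - A(h))/3$, the same weighting Romberg integration uses at its first level.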

Richardson Extra 2d

Richardson may refer to:

People
* Richardson (surname), an English and Scottish surname
* Richardson Gang, a London crime gang in the 1960s
* Richardson Dilworth, Mayor of Philadelphia (1956–1962)

Places

Australia
* Richardson, Australian Capital Territory

Canada
* Richardson Islands, Nunavut
* Richardson Mountains, a mountain range in northern Yukon

United States
* Richardson, Kentucky
* Richardson, Texas
* Richardson, West Virginia
* Richardson, Wisconsin
* Richardson Bay, California
* Richardson Beach, Hawaii
* Richardson County, Nebraska
* Richardson Township, Minnesota
* Richardson Township, Butler County, Nebraska

Other uses
* Richardson number, a dimensionless number that expresses the ratio of potential to kinetic energy
* Fort Richardson (Alaska), in Alaska, United States
* Richardson (1903 cyclecar), an early British car
* Richardson (1919 cyclecar), a car made in Sheffield, England
* "Richardson", a 2011 single by Diego's Umbrella, also released on their 2012 album Proper Cowboy
* R …

Approximation Error

The approximation error in a data value is the discrepancy between an exact value and some approximation to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can arise from limited machine precision or from measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition

One commonly distinguishes between the relative error and the absolute error. Given some value $v$ and its approximation $v_\text{approx}$, the absolute error is

$$\epsilon = |v - v_\text{approx}|,$$

where the vertical bars denote the absolute value. If $v \ne 0$, the relative error is

$$\eta = \frac{\epsilon}{|v|} = \left| \frac{v - v_\text{approx}}{v} \right|.$$
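Both definitions translate directly into code. This short Python sketch (the function names are illustrative) evaluates them for the ruler example, where the true length is 4.53 cm and the measurement is 4.5 cm.

```python
def absolute_error(v: float, v_approx: float) -> float:
    """epsilon = |v - v_approx|"""
    return abs(v - v_approx)


def relative_error(v: float, v_approx: float) -> float:
    """eta = |v - v_approx| / |v|, defined only for v != 0."""
    if v == 0:
        raise ValueError("relative error is undefined when v = 0")
    return abs(v - v_approx) / abs(v)


# Ruler example: true length 4.53 cm, measured as 4.5 cm.
eps = absolute_error(4.53, 4.5)  # about 0.03 cm
eta = relative_error(4.53, 4.5)  # about 0.0066, i.e. roughly 0.66 %
print(eps, eta)
```

The relative error is dimensionless, which is why it is the more useful figure when comparing errors in quantities of very different magnitudes.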

Numerical Analysis

Numerical analysis is the study of algorithms that use numerical approximation (as opposed to symbolic manipulation) for the problems of mathematical analysis (as distinguished from discrete mathematics). It is the study of numerical methods that attempt to find approximate solutions of problems rather than exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also in the life and social sciences, medicine, business and even the arts. The growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include ordinary differential equations as found in celestial mechanics (predicting the motions of planets, stars and galaxies), numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and …

Michela Redivo-Zaglia

Michela Redivo-Zaglia is an Italian numerical analyst known for her work on numerical linear algebra and on extrapolation-based acceleration of numerical methods. She is an associate professor in the department of mathematics at the University of Padua.

Education and career

Redivo-Zaglia earned a degree in mathematics at the University of Padua in 1975, and completed her Ph.D. in 1992 at the University of Lille in France. Her dissertation, Extrapolation, Méthodes de Lanczos et Polynômes Orthogonaux: Théorie et Conception de Logiciels (Extrapolation, Lanczos methods and orthogonal polynomials: theory and software design), was supervised by Claude Brezinski. She worked at the University of Padua, in the department of electronics and computer science, from 1984 to 1998, when she became an associate professor at the University of Calabria …

Richardson Iteration

Modified Richardson iteration is an iterative method for solving a system of linear equations. Richardson iteration was proposed by Lewis Fry Richardson in his work dated 1910. It is similar to the Jacobi and Gauss–Seidel methods. We seek the solution to a set of linear equations, expressed in matrix terms as

$$A x = b.$$

The Richardson iteration is

$$x^{(k+1)} = x^{(k)} + \omega \left( b - A x^{(k)} \right),$$

where $\omega$ is a scalar parameter that has to be chosen such that the sequence $x^{(k)}$ converges. It is easy to see that the method has the correct fixed points: if it converges, then $x^{(k+1)} \approx x^{(k)}$, so $\omega (b - A x^{(k)}) \approx 0$, and $x^{(k)}$ has to approximate a solution of $A x = b$.

Convergence

Subtracting the exact solution $x$ (and using $b = A x$), and introducing the notation $e^{(k)} = x^{(k)} - x$ for the error, we get the equality for the errors

$$e^{(k+1)} = e^{(k)} - \omega A e^{(k)} = (I - \omega A) e^{(k)}.$$

Thus,

$$\|e^{(k+1)}\| = \|(I - \omega A) e^{(k)}\| \leq \|I - \omega A\| \, \|e^{(k)}\|$$

for any vector norm and the corresponding induced matrix norm. Thus, if …
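A minimal pure-Python sketch of the iteration $x^{(k+1)} = x^{(k)} + \omega (b - A x^{(k)})$. The stopping rule (a small residual), the 2×2 test system and the value $\omega = 0.25$ are choices made for this illustration only. For a symmetric positive definite $A$ with eigenvalues $\lambda_i$, the matrix $I - \omega A$ has eigenvalues $1 - \omega \lambda_i$, so the iteration converges exactly when $0 < \omega < 2/\lambda_{\max}$.

```python
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product A x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def richardson_iteration(A: Matrix, b: Vector, omega: float,
                         x0: Optional[Vector] = None,
                         tol: float = 1e-10, max_iter: int = 10_000) -> Vector:
    """Iterate x <- x + omega * (b - A x) until the residual is small."""
    x = list(x0) if x0 is not None else [0.0] * len(b)
    for _ in range(max_iter):
        # Residual r = b - A x^(k)
        r = [b_i - ax_i for b_i, ax_i in zip(b, matvec(A, x))]
        if max(abs(r_i) for r_i in r) < tol:
            break
        # x^(k+1) = x^(k) + omega * r
        x = [x_i + omega * r_i for x_i, r_i in zip(x, r)]
    return x


# Symmetric positive definite example; its eigenvalues are about 2.38 and 4.62,
# so any 0 < omega < 2 / 4.62 (about 0.43) makes the iteration converge.
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x = richardson_iteration(A, b, omega=0.25)
print(x)  # the exact solution of this system is [1/11, 7/11]
```

Choosing $\omega$ outside that interval makes $\|I - \omega A\|_2 \ge 1$, and the errors then fail to shrink.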

Aitken's Delta-squared Process

In numerical analysis, Aitken's delta-squared process or Aitken extrapolation is a series acceleration method used for accelerating the rate of convergence of a sequence. It is named after Alexander Aitken, who introduced this method in 1926 (Alexander Aitken, "On Bernoulli's numerical solution of algebraic equations", Proceedings of the Royal Society of Edinburgh (1926) 46 pp. 289–305). Its early form was known to Seki Kōwa (end of the 17th century) and was found for the rectification of the circle, i.e. the calculation of π. It is most useful for accelerating the convergence of a sequence that is converging linearly.

Definition

Given a sequence $X = (x_n)_{n \in \mathbb{N}}$, one associates with this sequence the new sequence

$$A X = \left( \frac{x_n x_{n+2} - x_{n+1}^2}{x_n + x_{n+2} - 2 x_{n+1}} \right)_{n \in \mathbb{N}},$$

which can, with improved numerical stability, also be written as

$$(A X)_n = x_n - \frac{(\Delta x_n)^2}{\Delta^2 x_n},$$

or equivalently as

$$(A X)_n = x_{n+2} - \frac{(x_{n+2} - x_{n+1})^2}{(x_{n+2} - x_{n+1}) - (x_{n+1} - x_n)} = x_{n+2} - \frac{(\Delta x_{n+1})^2}{\Delta^2 x_n},$$

where

$$\Delta x_n = x_{n+1} - x_n, \qquad \Delta x_{n+1} = x_{n+2} - x_{n+1},$$

and

$$\Delta^2 x_n = x_n - 2 x_{n+1} + x_{n+2} = \Delta x_{n+1} - \Delta x_n,$$

for $n = 0, 1, 2, 3, \dots$ Obviously …
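A short Python sketch of the process in the form $(A X)_n = x_n - (\Delta x_n)^2 / \Delta^2 x_n$. It is applied to the fixed-point iteration $x_{k+1} = \cos x_k$ with $x_0 = 1$, a linearly convergent sequence chosen here as an illustration. The text does not say what to do when $\Delta^2 x_n = 0$; the fallback below is an assumption of this sketch.

```python
import math
from typing import List, Sequence


def aitken(xs: Sequence[float]) -> List[float]:
    """Aitken's delta-squared process: (AX)_n = x_n - (dx_n)^2 / d2x_n."""
    out: List[float] = []
    for n in range(len(xs) - 2):
        d1 = xs[n + 1] - xs[n]                  # Delta x_n
        d2 = xs[n + 2] - 2 * xs[n + 1] + xs[n]  # Delta^2 x_n
        if d2 == 0.0:
            # Assumed fallback for this sketch: keep the latest term.
            out.append(xs[n + 2])
        else:
            out.append(xs[n] - d1 * d1 / d2)
    return out


# Fixed-point iteration x_{k+1} = cos(x_k), which converges linearly.
iterates = [1.0]
for _ in range(9):
    iterates.append(math.cos(iterates[-1]))

accelerated = aitken(iterates)
print(iterates[-1], accelerated[-1])
```

As a sanity check, a sequence of the exact form $x_n = L + C q^n$ (with $q \ne 1$, $C \ne 0$) is mapped to the constant $L$, up to rounding.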

Trapezoidal Method

In calculus, the trapezoidal rule (also known as the trapezoid rule or trapezium rule; see Trapezoid for more information on terminology) is a technique for approximating the definite integral

$$\int_a^b f(x) \, dx.$$

The trapezoidal rule works by approximating the region under the graph of the function $f(x)$ as a trapezoid and calculating its area. It follows that

$$\int_a^b f(x) \, dx \approx (b - a) \cdot \tfrac{1}{2} \left( f(a) + f(b) \right).$$

The trapezoidal rule may be viewed as the result obtained by averaging the left and right Riemann sums, and is sometimes defined this way. The integral can be approximated even better by partitioning the integration interval, applying the trapezoidal rule to each subinterval, and summing the results. In practice, this "chained" (or "composite") trapezoidal rule is usually what is meant by "integrating with the trapezoidal rule". Let $\{x_k\}$ be a partition of $[a, b]$ such that $a = x_0 < x_1 < \cdots < x_{N-1} < x_N = b$, and let $\Delta x_k$ be the length of the …
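A minimal Python sketch of the composite rule, assuming a uniform partition into $N$ subintervals of width $h = (b - a)/N$ (the rule itself also allows non-uniform partitions). Each interior point is shared by two neighboring trapezoids, so it receives weight 1, while the two endpoints receive weight 1/2. The function name `composite_trapezoid` is illustrative.

```python
import math
from typing import Callable


def composite_trapezoid(f: Callable[[float], float], a: float, b: float, n: int) -> float:
    """Composite trapezoidal rule on n equal subintervals of [a, b]."""
    if n < 1:
        raise ValueError("n must be at least 1")
    h = (b - a) / n                  # uniform subinterval length
    total = 0.5 * (f(a) + f(b))      # endpoints get weight 1/2
    for k in range(1, n):
        total += f(a + k * h)        # interior points get weight 1
    return h * total


# The integral of sin over [0, pi] is exactly 2.
print(composite_trapezoid(math.sin, 0.0, math.pi, 100))
```

With `n = 1` the function reduces to the single-trapezoid formula $(b - a) \cdot \tfrac{1}{2}(f(a) + f(b))$; for smooth $f$, doubling `n` cuts the error by roughly a factor of four.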

Truncation Error

In numerical analysis and scientific computing, truncation error is an error caused by approximating a mathematical process.

Examples

Infinite series

The exponential function $e^x$ can be written as the infinite series

$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \frac{x^4}{4!} + \cdots$$

In reality, we can only use a finite number of these terms, as it would take an infinite amount of computational time to make use of all of them. So suppose we use only three terms of the series; then

$$e^x \approx 1 + x + \frac{x^2}{2!}.$$

In this case, the truncation error is

$$\frac{x^3}{3!} + \frac{x^4}{4!} + \cdots$$

Example A: Given the following infinite series, find the truncation error for $x = \ldots$ if only the first three terms of the series are used.

$$S = 1 + x + x^2 + x^3 + \cdots, \qquad |x| < 1.$$

Solution: Using only the first three terms of the series gives $S_3 = 1 + x + x^2$. The sum of an infinite geometric series …
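To make the truncation error concrete, this Python sketch compares each infinite sum with its three-term truncation at $x = 0.5$, a value chosen here purely for illustration. For the geometric series it uses the standard closed form $S = 1/(1 - x)$ for $|x| < 1$, so the discarded tail $x^3 + x^4 + \cdots$ equals $x^3/(1 - x)$ exactly.

```python
import math


def exp_partial_sum(x: float, terms: int) -> float:
    """Sum of the first `terms` terms of the Taylor series of e^x."""
    return sum(x**k / math.factorial(k) for k in range(terms))


def geometric_partial_sum(x: float, terms: int) -> float:
    """Sum of the first `terms` terms of 1 + x + x^2 + ..."""
    return sum(x**k for k in range(terms))


x = 0.5  # illustrative value, not taken from the text

# Truncation error of e^x ~ 1 + x + x^2/2!: the tail x^3/3! + x^4/4! + ...
exp_error = math.exp(x) - exp_partial_sum(x, 3)

# Truncation error of S_3 = 1 + x + x^2, using S = 1 / (1 - x) for |x| < 1.
geo_error = 1 / (1 - x) - geometric_partial_sum(x, 3)
geo_error_closed_form = x**3 / (1 - x)  # sum of the discarded tail

print(exp_error, geo_error, geo_error_closed_form)
```

For $0 < x$, the exponential tail lies between its first discarded term $x^3/3!$ and $e^x x^3/3!$, which gives a quick check on the computed value.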

Sequence Transformation

In mathematics, a sequence transformation is an operator acting on a given space of sequences (a sequence space). Sequence transformations include linear mappings such as convolution with another sequence, and resummation of a sequence; more generally, they are commonly used for series acceleration, that is, for improving the rate of convergence of a slowly convergent sequence or series. Sequence transformations are also commonly used to compute the antilimit of a divergent series numerically, and are used in conjunction with extrapolation methods.

Overview

Classical examples of sequence transformations include the binomial transform, Möbius transform, Stirling transform and others.

Definitions

For a given sequence

$$S = \{s_n\}_{n \in \mathbb{N}},$$

the transformed sequence is

$$\mathbf{T}(S) = S' = \{s'_n\}_{n \in \mathbb{N}},$$

where the members of the transformed sequence are usually computed from some finite number of members of the original sequence, i.e.

$$s'_n = T(s_n, s_{n+1}, \dots, s_{n+k})$$

for some $k$ which often depends on $n$ (c …
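The windowed definition $s'_n = T(s_n, s_{n+1}, \dots, s_{n+k})$ can be sketched as a higher-order function. In this Python sketch, $k$ is held fixed for simplicity, and the hypothetical helper `sequence_transform` applies a caller-supplied $T$ to each window. As one simple linear example, averaging consecutive partial sums of the alternating harmonic series, which converges slowly to $\ln 2$, already reduces the error noticeably.

```python
import math
from typing import Callable, List, Sequence


def sequence_transform(s: Sequence[float], k: int,
                       T: Callable[..., float]) -> List[float]:
    """Return [s'_0, s'_1, ...] with s'_n = T(s_n, s_{n+1}, ..., s_{n+k})."""
    return [T(*s[n:n + k + 1]) for n in range(len(s) - k)]


# Partial sums of 1 - 1/2 + 1/3 - 1/4 + ..., which converges slowly to ln 2.
partial_sums: List[float] = []
running = 0.0
for j in range(1, 21):
    running += (-1) ** (j + 1) / j
    partial_sums.append(running)

# A simple linear transformation with k = 1: the mean of consecutive terms.
averaged = sequence_transform(partial_sums, 1, lambda a, b: (a + b) / 2)

limit = math.log(2)
print(abs(partial_sums[-1] - limit), abs(averaged[-1] - limit))
```

Aitken's delta-squared process fits the same pattern as a nonlinear transformation with $k = 2$.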

Recurrence Relation

In mathematics, a recurrence relation is an equation according to which the $n$th term of a sequence of numbers is equal to some combination of the previous terms. Often, only $k$ previous terms of the sequence appear in the equation, for a parameter $k$ that is independent of $n$; this number $k$ is called the order of the relation. If the values of the first $k$ numbers in the sequence have been given, the rest of the sequence can be calculated by repeatedly applying the equation. In linear recurrences, the $n$th term is equated to a linear function of the $k$ previous terms. A famous example is the recurrence for the Fibonacci numbers,

$$F_n = F_{n-1} + F_{n-2},$$

where the order $k$ is two and the linear function merely adds the two previous terms. This example is a linear recurrence with constant coefficients, because the coefficients of the linear function (1 and 1) are constants that do not depend on $n$. For these recurrences, one can express the general term of the sequence as a closed-form expression …
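A short Python sketch of the Fibonacci recurrence, assuming the common initial values $F_0 = 0$ and $F_1 = 1$ (they are not stated in the text). It computes terms by repeatedly applying the recurrence and compares them with Binet's formula $F_n = (\varphi^n - \psi^n)/\sqrt{5}$, where $\varphi = (1 + \sqrt{5})/2$ and $\psi = (1 - \sqrt{5})/2$, a known closed-form expression for this recurrence.

```python
import math


def fibonacci(n: int) -> int:
    """F_n by repeatedly applying F_n = F_{n-1} + F_{n-2}, from F_0 = 0, F_1 = 1."""
    a, b = 0, 1          # F_0, F_1
    for _ in range(n):
        a, b = b, a + b  # shift the window of the two previous terms
    return a


def fibonacci_closed_form(n: int) -> int:
    """Binet's formula, rounded; floating point limits it to moderate n."""
    phi = (1 + math.sqrt(5)) / 2
    psi = (1 - math.sqrt(5)) / 2
    return round((phi**n - psi**n) / math.sqrt(5))


print([fibonacci(n) for n in range(10)])
```

The integer loop is exact for any $n$, whereas the floating-point closed form eventually loses precision, so the loop is the safer choice in practice.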

Big O Notation

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for Ordnung, meaning the order of approximation. In computer science, big O notation is used to classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetical function and a better understood approximation; a famous example of such a difference is the remainder term in the prime number theorem. Big O notation is also used in many other fields to provide similar estimates. Big O notation characterizes functions according to their growth rates: d …
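To make the growth-rate idea concrete, here is one common formal definition of big O as $n \to \infty$, followed by a worked example; the function $3n^2 + 5n$ and the witness constants $M = 4$, $n_0 = 5$ are chosen purely for illustration.

```latex
\begin{aligned}
&f(n) = O\bigl(g(n)\bigr) \iff \exists\, M > 0,\ n_0 \ \text{such that}\ |f(n)| \le M\,|g(n)| \ \text{for all}\ n \ge n_0, \\
&\text{for } n \ge 5:\quad 3n^2 + 5n \le 3n^2 + n \cdot n = 4n^2
\quad\Longrightarrow\quad 3n^2 + 5n = O(n^2) \ \text{with}\ M = 4,\ n_0 = 5.
\end{aligned}
```

Any larger $M$ or $n_0$ would also serve as witnesses; the definition only asks that some such pair exists.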