|

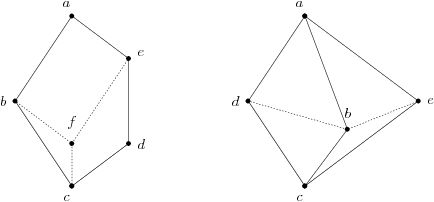

Residuated Lattice

In abstract algebra, a residuated lattice is an algebraic structure that is simultaneously a lattice x ≤ y and a monoid x•y which admits operations x\z and z/y, loosely analogous to division or implication when x•y is viewed as multiplication or conjunction, respectively. Called respectively right and left residuals, these operations coincide when the monoid is commutative. The general concept was introduced by Morgan Ward and Robert P. Dilworth in 1939. Examples, some of which existed prior to the general concept, include Boolean algebras, Heyting algebras, residuated Boolean algebras, relation algebras, and MV-algebras. Residuated semilattices omit the meet operation ∧; examples include Kleene algebras and action algebras. Definition. In mathematics, a residuated lattice is an algebraic structure such that: (i) (L, ≤) is a lattice ...
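The residuation law can be checked mechanically on a small example. The sketch below assumes the Łukasiewicz structure on a finite chain {0, 1/n, ..., 1}, a standard MV-algebra chosen here for illustration; the function names `chain`, `mult`, `residual` and `is_residuated` are hypothetical. The product is x•y = max(0, x + y − 1) with unit 1. Because it is commutative, the right and left residuals coincide, and both are given by min(1, 1 − x + z).

```python
from fractions import Fraction
from itertools import product


def chain(n):
    """Hypothetical finite chain 0, 1/n, ..., 1 (exact arithmetic via Fraction)."""
    return [Fraction(i, n) for i in range(n + 1)]


def mult(x, y):
    """Lukasiewicz t-norm, used here as the monoid product x•y (unit 1)."""
    return max(Fraction(0), x + y - 1)


def residual(x, z):
    """x\\z: the largest y with x•y <= z; commutativity makes z/x equal to it."""
    return min(Fraction(1), 1 - x + z)


def is_residuated(elements):
    """Check the residuation law  x•y <= z  <=>  y <= x\\z  for all triples."""
    return all((mult(x, y) <= z) == (y <= residual(x, z))
               for x, y, z in product(elements, repeat=3))


print(is_residuated(chain(4)))  # True
```

Fractions are used instead of floats so that the comparisons are exact.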

Abstract Algebra

In mathematics, more specifically algebra, abstract algebra or modern algebra is the study of algebraic structures, which are sets with specific operations acting on their elements. Algebraic structures include groups, rings, fields, modules, vector spaces, lattices, and algebras over a field. The term abstract algebra was coined in the early 20th century to distinguish it from older parts of algebra, and more specifically from elementary algebra, the use of variables to represent numbers in computation and reasoning. The abstract perspective on algebra has become so fundamental to advanced mathematics that it is simply called "algebra", while the term "abstract algebra" is seldom used except in pedagogy. Algebraic structures, with their associated homomorphisms, ...

Linear Logic

Linear logic is a substructural logic proposed by French logician Jean-Yves Girard as a refinement of classical and intuitionistic logic, joining the dualities of the former with many of the constructive properties of the latter. Although the logic has also been studied for its own sake, more broadly, ideas from linear logic have been influential in fields such as programming languages, game semantics, and quantum physics (because linear logic can be seen as the logic of quantum information theory), as well as linguistics, particularly because of its emphasis on resource-boundedness, duality, and interaction. Linear logic lends itself to many different presentations, explanations, and intuitions. Proof-theoretically, it derives from an analysis of classical sequent calculus in which uses of (the structural rules) contraction and weakening are carefully controlled. Operationally, this means that logical deduction is no longer merely about an ever-expanding collection of ...

Peirce's Law

In logic, Peirce's law is named after the philosopher and logician Charles Sanders Peirce. It was taken as an axiom in his first axiomatisation of propositional logic. It can be thought of as the law of excluded middle written in a form that involves only one sort of connective, namely implication. In propositional calculus, Peirce's law says that ((P→Q)→P)→P. Written out, this means that P must be true if there is a proposition Q such that the truth of P follows from the truth of "if P then Q". Peirce's law does not hold in intuitionistic logic or intermediate logics and cannot be deduced from the deduction theorem alone. Under the Curry–Howard isomorphism, Peirce's law is the type of continuation operators, e.g. call/cc in Scheme. Written in Peirce's own notation, with his implication sign rendered here as →, the law as a formula can be simplified to ((u → v) → u) → u ...
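The claim that Peirce's law holds classically can be confirmed with a truth table. The sketch below enumerates all four valuations of P and Q, using classical two-valued material implication. The helper names `implies` and `peirce` are introduced here for illustration only.

```python
from itertools import product


def implies(p, q):
    """Classical material implication: P -> Q is (not P) or Q."""
    return (not p) or q


def peirce(p, q):
    """Evaluate ((P -> Q) -> P) -> P under one valuation."""
    return implies(implies(implies(p, q), p), p)


# A tautology is true under every valuation of its variables.
print(all(peirce(p, q) for p, q in product([False, True], repeat=2)))  # True
```

This check says nothing about intuitionistic logic, because a two-valued truth table is exactly the classical semantics.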

Natural Language

A natural language or ordinary language is a language that occurs naturally in a human community by a process of use, repetition, and change. It can take different forms, typically either a spoken language or a sign language. Natural languages are distinguished from constructed and formal languages such as those used to program computers or to study logic. Defining natural language. Natural languages include ones that are associated with linguistic prescriptivism or language regulation. (Nonstandard dialects can be viewed as a wild type in comparison with standard languages.) An official language with a regulating academy, such as Standard French, is classified as a natural language (e.g. in the field of natural language processing), as its prescriptive aspects do not make it constructed enough to be a constructed language or controlled enough to be a controlled natural language. Natural languages are different from: artificial and constructed languages ...

Formal Language

In logic, mathematics, computer science, and linguistics, a formal language is a set of strings whose symbols are taken from a set called the "alphabet". The alphabet of a formal language consists of symbols that concatenate into strings (also called "words"). Words that belong to a particular formal language are sometimes called well-formed words. A formal language is often defined by means of a formal grammar such as a regular grammar or context-free grammar. In computer science, formal languages are used, among other things, as the basis for defining the grammar of programming languages and formalized versions of subsets of natural languages, in which the words of the language represent concepts that are associated with meanings or semantics. In computational complexity theory, decision problems are typically defined as formal languages, and complexity classes are defined as the sets of the formal languages that can be parsed by machines with limited computational power. ...
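To make "a set of strings defined by a grammar" concrete, the sketch below assumes an illustrative language L = { aⁿbⁿ : n ≥ 0 } over the alphabet {a, b}. This language is generated by the context-free grammar S → a S b | ε and is a standard example of a language that is not regular. The recognizer name `in_anbn` is hypothetical. Membership is decided by peeling off one outer a...b pair per step, which mirrors the grammar rule.

```python
def in_anbn(word: str) -> bool:
    """Decide membership in L = { a^n b^n : n >= 0 } over the alphabet {a, b}."""
    if any(ch not in "ab" for ch in word):
        return False  # contains a symbol outside the alphabet
    # Undo the rule S -> a S b one application at a time.
    while word:
        if word[0] != "a" or word[-1] != "b":
            return False
        word = word[1:-1]
    return True  # only the empty word is left, matching S -> ε


for w in ["", "ab", "aabb", "aab", "abab"]:
    print(repr(w), in_anbn(w))
```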

Binary Relations

In mathematics, a binary relation associates some elements of one set, called the domain, with some elements of another set, called the codomain. Precisely, a binary relation over sets X and Y is a set of ordered pairs (x, y), where x is an element of X and y is an element of Y. It encodes the common concept of relation: an element x is related to an element y if and only if the pair (x, y) belongs to the set of ordered pairs that defines the binary relation. An example of a binary relation is the "divides" relation over the set of prime numbers ℙ and the set of integers ℤ, in which each prime p is related to each integer z that is a multiple of p, but not to an integer that is not a multiple of p. In this relation, for instance, the prime number 2 is related to numbers such as −4, 0, 6, 10, but not to 1 or 9, just as the prime number 3 is related to 0, 6, and 9, but not to 4 or 13. Binary relations, and especially homogeneous relations, are used in ...
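Because a binary relation is literally a set of ordered pairs, the "divides" example can be built directly as one. The real relation is infinite, so the sketch below assumes finite stand-ins: the primes 2 and 3 and the integers −4 to 13. The helper `divides_relation` is a hypothetical name. The membership checks reproduce the examples in the text.

```python
def divides_relation(primes, integers):
    """Set of pairs (p, z) with p dividing z, i.e. z a multiple of p."""
    return {(p, z) for p in primes for z in integers if z % p == 0}


R = divides_relation([2, 3], range(-4, 14))

print(all((2, z) in R for z in (-4, 0, 6, 10)))  # True
print(any((2, z) in R for z in (1, 9)))          # False
print(all((3, z) in R for z in (0, 6, 9)))       # True
print(any((3, z) in R for z in (4, 13)))         # False
```

Python's `%` returns a result with the sign of the divisor, so `-4 % 2 == 0` holds and negative multiples are handled correctly.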

Monus

In mathematics, monus is an operator on certain commutative monoids that are not groups. A commutative monoid on which a monus operator is defined is called a commutative monoid with monus, or CMM. The monus operator may be denoted with the − symbol because the natural numbers are a CMM under truncated subtraction; it is also denoted with the ∸ symbol to distinguish it from the standard subtraction operator. Notation. A use of the monus symbol is seen in Dennis Ritchie's PhD thesis from 1968. Definition. Let (M, +, 0) be a commutative monoid. Define a binary relation ≤ on this monoid as follows: for any two elements a and b, define a ≤ b if there exists an element c such that a + c = b. It is easy to check that ≤ is reflexive and transitive. M is called naturally ordered if the ≤ relation is additionally antisymmetric and hence a partial order. Further, if for each pair of elements a and b, a unique smallest element c exists such that a ≤ ...
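On the natural numbers the monus is truncated subtraction, a ∸ b = max(a − b, 0). The definition above is cut off. The sketch below assumes it continues in the standard way: a ∸ b is the least c with a ≤ b + c. It checks that characterization by brute force over a small range. The function name `monus` and the search bounds are illustrative choices.

```python
def monus(a: int, b: int) -> int:
    """Truncated subtraction on the naturals: a ∸ b = max(a - b, 0)."""
    return max(a - b, 0)


# Assumed characterization: a ∸ b is the smallest c >= 0 with a <= b + c.
# For a < 10 the answer is at most 9, so searching c in range(20) suffices.
for a in range(10):
    for b in range(10):
        least = min(c for c in range(20) if a <= b + c)
        assert monus(a, b) == least

print(monus(7, 3), monus(3, 7))  # 4 0
```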

Total Order

In mathematics, a total order or linear order is a partial order in which any two elements are comparable. That is, a total order is a binary relation ≤ on some set X which satisfies the following for all a, b, and c in X:

1. a ≤ a (reflexive).
2. If a ≤ b and b ≤ c, then a ≤ c (transitive).
3. If a ≤ b and b ≤ a, then a = b (antisymmetric).
4. a ≤ b or b ≤ a (strongly connected, formerly called totality).

Requirements 1 to 3 make up the definition of a partial order. Reflexivity (1) already follows from strong connectedness (4), but is required explicitly by many authors nevertheless, to indicate the kinship to partial orders. Total orders are sometimes also called simple, connex, or full orders. A set equipped with a total order is a totally ordered set; the terms simply ordered set, linearly ordered set, toset, and loset are also used. The term chain is sometimes defined as a synonym of totally ordered set, but generally refers to ...
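On a finite set the four requirements can be tested exhaustively. The sketch below uses a hypothetical `is_total_order` helper that takes the relation as a Python predicate `leq(a, b)`. It contrasts the usual order on 1 to 12, which is total, with divisibility on the same set. Divisibility is a partial order but fails strong connectedness, because 2 and 3 are incomparable.

```python
from itertools import product


def is_total_order(X, leq):
    """Check requirements 1-4 for the relation leq(a, b) on the finite set X."""
    reflexive = all(leq(a, a) for a in X)
    transitive = all(leq(a, c) for a, b, c in product(X, repeat=3)
                     if leq(a, b) and leq(b, c))
    antisymmetric = all(a == b for a, b in product(X, repeat=2)
                        if leq(a, b) and leq(b, a))
    strongly_connected = all(leq(a, b) or leq(b, a)
                             for a, b in product(X, repeat=2))
    return reflexive and transitive and antisymmetric and strongly_connected


nums = range(1, 13)
print(is_total_order(nums, lambda a, b: a <= b))      # True
print(is_total_order(nums, lambda a, b: b % a == 0))  # False
```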

Distributive Lattice

In mathematics, a distributive lattice is a lattice in which the operations of join and meet distribute over each other. The prototypical examples of such structures are collections of sets for which the lattice operations can be given by set union and intersection. Indeed, these lattices of sets describe the scenery completely: every distributive lattice is, up to isomorphism, given as such a lattice of sets. Definition. As in the case of arbitrary lattices, one can choose to consider a distributive lattice L either as a structure of order theory or of universal algebra. Both views and their mutual correspondence are discussed in the article on lattices. In the present situation, the algebraic description appears to be more convenient. A lattice (L, ∨, ∧) is distributive if the following additional identity holds for all x, y, and z ...
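The distributive identity x ∨ (y ∧ z) = (x ∨ y) ∧ (x ∨ z) can be checked by brute force on finite lattices. The sketch below uses the form of the law familiar from sets; in any lattice, either distributive law implies the other. It confirms the law for the lattice of subsets of {1, 2, 3} under union and intersection. As a contrast, it adds the five-element "diamond" lattice M3, a standard non-distributive example that is not mentioned in the text above. The helper names are hypothetical.

```python
from itertools import chain, combinations, product


def powerset(s):
    """All subsets of s, as frozensets."""
    return [frozenset(c) for c in chain.from_iterable(
        combinations(s, r) for r in range(len(s) + 1))]


def is_distributive(elements, join, meet):
    """Check x ∨ (y ∧ z) == (x ∨ y) ∧ (x ∨ z) for every triple."""
    return all(join(x, meet(y, z)) == meet(join(x, y), join(x, z))
               for x, y, z in product(elements, repeat=3))


sets = powerset({1, 2, 3})
print(is_distributive(sets, frozenset.union, frozenset.intersection))  # True

# M3: bottom "0", three pairwise incomparable atoms, top "1".
M3 = ["0", "a", "b", "c", "1"]


def m3_join(x, y):
    if x == y or y == "0":
        return x
    if x == "0":
        return y
    return "1"  # distinct non-bottom elements always join to the top


def m3_meet(x, y):
    if x == y or y == "1":
        return x
    if x == "1":
        return y
    return "0"  # distinct non-top elements always meet at the bottom


print(is_distributive(M3, m3_join, m3_meet))  # False: a ∨ (b ∧ c) = a, not 1
```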

Heyting Algebras

In mathematics, a Heyting algebra (also known as pseudo-Boolean algebra) is a bounded lattice (with join and meet operations written ∨ and ∧ and with least element 0 and greatest element 1) equipped with a binary operation a → b called implication such that (c ∧ a) ≤ b is equivalent to c ≤ (a → b). From a logical standpoint, A → B is by this definition the weakest proposition for which modus ponens, the inference rule A → B, A ⊢ B, is sound. Like Boolean algebras, Heyting algebras form a variety axiomatizable with finitely many equations. Heyting algebras were introduced in 1930 by Arend Heyting to formalize intuitionistic logic. Heyting algebras are distributive lattices. Every Boolean algebra is a Heyting algebra when a → b is defined as ¬a ∨ b, as is every complete distributive lattice satisfying a one-sided infinite distributive law when a → b is taken to be ...
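A three-element chain 0 < 1/2 < 1 is a small Heyting algebra that is not Boolean. The sketch below is an illustrative choice; the names `CHAIN`, `implies` and `peirce` are hypothetical. On a chain, the largest c with c ∧ a ≤ b is 1 when a ≤ b and b otherwise. The code verifies the defining equivalence, checks that on {0, 1} the implication agrees with ¬a ∨ b, and shows that Peirce's law evaluates to 1/2 rather than 1. This illustrates why it fails in intuitionistic logic.

```python
from fractions import Fraction
from itertools import product

HALF = Fraction(1, 2)
CHAIN = [Fraction(0), HALF, Fraction(1)]  # the chain 0 < 1/2 < 1


def meet(a, b):
    return min(a, b)


def implies(a, b):
    """Heyting implication on a chain: the largest c with c ∧ a <= b."""
    return Fraction(1) if a <= b else b


# Defining property: (c ∧ a) <= b  iff  c <= (a -> b).
assert all((meet(c, a) <= b) == (c <= implies(a, b))
           for a, b, c in product(CHAIN, repeat=3))

# On the two-element Boolean algebra {0, 1}, a -> b equals ¬a ∨ b = max(1 - a, b).
assert all(implies(a, b) == max(1 - a, b) for a, b in product([0, 1], repeat=2))


def peirce(p, q):
    return implies(implies(implies(p, q), p), p)


print(peirce(HALF, Fraction(0)))  # 1/2, so ((P→Q)→P)→P is not valid here
```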

Commutative Algebra

Commutative algebra, first known as ideal theory, is the branch of algebra that studies commutative rings, their ideals, and modules over such rings. Both algebraic geometry and algebraic number theory build on commutative algebra. Prominent examples of commutative rings include polynomial rings; rings of algebraic integers, including the ordinary integers ℤ; and p-adic integers. Commutative algebra is the main technical tool of algebraic geometry, and many results and concepts of commutative algebra are closely related to geometrical concepts. The study of rings that are not necessarily commutative is known as noncommutative algebra; it includes ring theory, representation theory, and the theory of Banach algebras. Overview. Commutative algebra is essentially the study of the rings occurring in algebraic number theory and algebraic geometry. Several concepts of commutative algebras have been developed in ...

Conductor (Ring Theory)

In ring theory, a branch of mathematics, the conductor is a measurement of how far apart a commutative ring and an extension ring are. Most often, the larger ring is a domain integrally closed in its field of fractions, and then the conductor measures the failure of the smaller ring to be integrally closed. The conductor is of great importance in the study of non-maximal orders in the ring of integers of an algebraic number field. One interpretation of the conductor is that it measures the failure of unique factorization into prime ideals. Definition. Let A and B be commutative rings, and assume A ⊆ B. The conductor of A in B is the ideal 𝔣(B/A) = Ann_A(B/A). Here B/A is viewed as a quotient of A-modules, and Ann denotes the annihilator. More concretely, the conductor is the set 𝔣(B/A) = {a ∈ A : aB ⊆ A}. Because the conductor is defined as an annihilator, it is an ideal of A. If B is an integral domain, then the conductor may be rewritten ...
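The following example is chosen for illustration and does not come from the text. It takes B = k[t], which is integrally closed, and a monomial subring A = k[t^s : s ∈ S] for a numerical semigroup S, for instance A = k[t², t³]. Both rings are graded by degree, so the conductor {a ∈ A : aB ⊆ A} is spanned by monomials tⁿ with n + m ∈ S for every m ≥ 0. That set is tᶜ·k[t], where c is the least integer with every n ≥ c in S. The sketch below computes c, assuming the generators have gcd 1 and that `bound` exceeds the largest gap of S. The helper names are hypothetical.

```python
def semigroup_elements(generators, bound):
    """Elements <= bound of the numerical semigroup S generated by `generators`."""
    member = [False] * (bound + 1)
    member[0] = True  # 0 is the identity of (N, +)
    for n in range(1, bound + 1):
        # n lies in S iff n - g lies in S for some generator g <= n
        member[n] = any(g <= n and member[n - g] for g in generators)
    return {n for n in range(bound + 1) if member[n]}


def conductor_exponent(generators, bound=200):
    """Least c such that every n in [c, bound] lies in S.

    Under the stated assumptions (gcd 1, bound past the largest gap), the
    conductor of A = k[t^s : s in S] in B = k[t] is then t^c k[t].
    """
    S = semigroup_elements(generators, bound)
    c = bound + 1
    while c - 1 >= 0 and (c - 1) in S:
        c -= 1
    return c


print(conductor_exponent([2, 3]))  # 2: conductor of k[t^2, t^3] is t^2 k[t]
print(conductor_exponent([3, 5]))  # 8: the largest gap of <3, 5> is 7
```

A result of 0 would mean S contains every natural number, so A = B and the conductor is the whole ring.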