
Quadratic Programming

Quadratic programming (QP) is the process of solving certain mathematical optimization problems involving quadratic functions. Specifically, one seeks to optimize (minimize or maximize) a multivariate quadratic function subject to linear constraints on the variables. Quadratic programming is a type of nonlinear programming. "Programming" in this context refers to a formal procedure for solving mathematical problems. This usage dates to the 1940s and is not specifically tied to the more recent notion of "computer programming." To avoid confusion, some practitioners prefer the term "optimization" (e.g., "quadratic optimization").

Problem formulation: The quadratic programming problem with $n$ variables and $m$ constraints can be formulated as follows. Given:
* a real-valued, $n$-dimensional vector $\mathbf{c}$,
* an $n \times n$-dimensional real symmetric matrix $Q$,
* an $m \times n$-dimensional real matrix $A$, and
* an $m$-dimensional real vector $\mathbf{b}$,

the objective of quadratic programming is to find an $n$-dimensional vector $\mathbf{x}$ that ...
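
The ingredients listed above can be sketched in plain Python. The excerpt is cut off before it states the objective, so this sketch assumes the common form $\tfrac{1}{2}\mathbf{x}^\mathsf{T} Q \mathbf{x} + \mathbf{c}^\mathsf{T}\mathbf{x}$ with constraints $A\mathbf{x} \le \mathbf{b}$. The function names and the sample data are hypothetical and only illustrate how the pieces fit together; nothing here solves the problem.

```python
def qp_objective(Q, c, x):
    """Evaluate 0.5 * x^T Q x + c^T x (assumed standard QP objective)."""
    n = len(x)
    quad = sum(x[i] * Q[i][j] * x[j] for i in range(n) for j in range(n))
    lin = sum(c[i] * x[i] for i in range(n))
    return 0.5 * quad + lin


def is_feasible(A, b, x, tol=1e-9):
    """Check the assumed linear constraints A x <= b, one row at a time."""
    return all(
        sum(a_ij * x_j for a_ij, x_j in zip(row, x)) <= b_i + tol
        for row, b_i in zip(A, b)
    )


# Hypothetical data: n = 2 variables, m = 3 constraints.
Q = [[2.0, 0.0], [0.0, 2.0]]                 # symmetric n x n matrix
c = [-2.0, -5.0]                             # n-dimensional vector
A = [[1.0, 2.0], [-1.0, 0.0], [0.0, -1.0]]   # m x n matrix
b = [6.0, 0.0, 0.0]                          # m-dimensional vector

x = [1.0, 2.0]
print(qp_objective(Q, c, x), is_feasible(A, b, x))
```

A solver would search over feasible `x` for the smallest objective value; these two helpers only score and check a single candidate.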

Mathematical Optimization

Mathematical optimization (alternatively spelled optimisation) or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics.

Optimization problems: Opti ...

Active Set

In mathematical optimization, the active-set method is an algorithm used to identify the active constraints in a set of inequality constraints. The active constraints are then expressed as equality constraints, thereby transforming an inequality-constrained problem into a simpler equality-constrained subproblem. An optimization problem is defined using an objective function to minimize or maximize, and a set of constraints $g_1(x) \ge 0, \dots, g_k(x) \ge 0$ that define the feasible region, that is, the set of all $x$ to search for the optimal solution. Given a point $x_0$ in the feasible region, a constraint $g_i(x) \ge 0$ is called active at $x_0$ if $g_i(x_0) = 0$, and inactive at $x_0$ if $g_i(x_0) > 0$. Equality constraints are always active. The active set at $x_0$ is made up of those constraints $g_i(x_0)$ that are active at the current point. The active set is particularly important in optimization theory, as it determines which constraints will influence the final result of o ...
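
The definition of an active constraint can be illustrated with a short sketch. It only identifies which constraints are active at a given feasible point and is not the full active-set method; the helper name `active_set`, the tolerance, and the three sample constraints are assumptions made for illustration.

```python
def active_set(constraints, x0, tol=1e-9):
    """Return indices i with g_i(x0) = 0 (within tol) for constraints g_i(x) >= 0."""
    values = [g(x0) for g in constraints]
    if any(v < -tol for v in values):
        raise ValueError("x0 is not in the feasible region")
    return [i for i, v in enumerate(values) if abs(v) <= tol]


# Hypothetical feasible region: the triangle x >= 0, y >= 0, x + y <= 1.
constraints = [
    lambda x: x[0],                # g_0(x) = x >= 0
    lambda x: x[1],                # g_1(x) = y >= 0
    lambda x: 1.0 - x[0] - x[1],   # g_2(x) = 1 - x - y >= 0
]

print(active_set(constraints, (0.0, 1.0)))    # a vertex of the triangle
print(active_set(constraints, (0.25, 0.25)))  # an interior point
```

At a vertex two constraints hold with equality, while at an interior point none do, so the active set there is empty.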

Positive-definite Matrix

In mathematics, a symmetric matrix $M$ with real entries is positive-definite if the real number $\mathbf{x}^\mathsf{T} M \mathbf{x}$ is positive for every nonzero real column vector $\mathbf{x}$, where $\mathbf{x}^\mathsf{T}$ is the row vector transpose of $\mathbf{x}$. More generally, a Hermitian matrix (that is, a complex matrix equal to its conjugate transpose) is positive-definite if the real number $\mathbf{z}^* M \mathbf{z}$ is positive for every nonzero complex column vector $\mathbf{z}$, where $\mathbf{z}^*$ denotes the conjugate transpose of $\mathbf{z}$. Positive semi-definite matrices are defined similarly, except that the scalars $\mathbf{x}^\mathsf{T} M \mathbf{x}$ and $\mathbf{z}^* M \mathbf{z}$ are required to be positive or zero (that is, nonnegative). Negative-definite and negative semi-definite matrices are defined analogously. A matrix that is not positive semi-definite and not negative semi-definite is sometimes called indefinite. Some authors use more general definitions of definiteness, permitting the matrices to be ...
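
One common way to test the real symmetric case numerically is to attempt a Cholesky factorization, which succeeds exactly when the matrix is positive-definite. The sketch below is a minimal pure-Python version under the assumption that the input is already symmetric; the function name and the tolerance are illustrative choices, not part of the definition above.

```python
import math


def is_positive_definite(M, tol=1e-12):
    """Attempt a Cholesky factorization M = L L^T of a real symmetric matrix.

    Returns False as soon as a diagonal pivot is not strictly positive.
    Symmetry of M is assumed and not checked.
    """
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = M[i][i] - s
                if pivot <= tol:
                    return False
                L[i][j] = math.sqrt(pivot)
            else:
                L[i][j] = (M[i][j] - s) / L[j][j]
    return True


print(is_positive_definite([[2.0, -1.0], [-1.0, 2.0]]))  # eigenvalues 1 and 3
print(is_positive_definite([[1.0, 2.0], [2.0, 1.0]]))    # eigenvalues 3 and -1
print(is_positive_definite([[1.0, 1.0], [1.0, 1.0]]))    # eigenvalues 0 and 2
```

The third matrix is positive semi-definite but not positive-definite, so the strict test rejects it.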

Wolfe Duality

In mathematical optimization, Wolfe duality, named after Philip Wolfe, is a type of dual problem in which the objective function and constraints are all differentiable functions. Using this concept, a lower bound for a minimization problem can be found because of the weak duality principle.

Mathematical formulation: For a minimization problem with inequality constraints,

$$\begin{aligned} &\underset{x}{\operatorname{minimize}} & & f(x) \\ &\operatorname{subject\ to} & & g_i(x) \leq 0, \quad i = 1,\dots,m \end{aligned}$$

the Lagrangian dual problem is

$$\begin{aligned} &\underset{u}{\operatorname{maximize}} & & \inf_x \left(f(x) + \sum_{j=1}^m u_j g_j(x)\right) \\ &\operatorname{subject\ to} & & u_i \geq 0, \quad i = 1,\dots,m \end{aligned}$$

where the objective function is the Lagrange dual function. Provided that the functions $f$ and $g_1, \ldots, g_m$ are convex and continuously differentiable, the infimum ...

Infimum

In mathematics, the infimum (abbreviated inf; plural: infima) of a subset $S$ of a partially ordered set $P$ is the greatest element in $P$ that is less than or equal to each element of $S$, if such an element exists. If the infimum of $S$ exists, it is unique, and if $b$ is a lower bound of $S$, then $b$ is less than or equal to the infimum of $S$. Consequently, the term greatest lower bound (abbreviated as GLB) is also commonly used. The supremum (abbreviated sup; plural: suprema) of a subset $S$ of a partially ordered set $P$ is the least element in $P$ that is greater than or equal to each element of $S$, if such an element exists. If the supremum of $S$ exists, it is unique, and if $b$ is an upper bound of $S$, then the supremum of $S$ is less than or equal to $b$. Consequently, the supremum is also referred to as the least upper bound (or LUB). The infimum is, in a precise sense, dual to the concept of a supremum. Infima and suprema of real numbers are common special cases that are important in anal ...

Dual Problem

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). The objective value of any feasible solution to the primal (minimization) problem is at least as large as the objective value of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality.

Dual problem: Usually the term "dual problem" refers to the Lagrangian dual problem but o ...
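
Weak duality can be checked numerically on a small problem. The sketch below uses a hypothetical primal, minimize $x^2$ subject to $1 - x \le 0$, whose Lagrange dual function $q(u) = \inf_x \left(x^2 + u(1 - x)\right)$ is attained at $x = u/2$. The problem, the grids, and the function names are assumptions chosen for illustration.

```python
def primal_objective(x):
    """f(x) = x^2 for the hypothetical primal problem."""
    return x * x


def is_primal_feasible(x):
    """Constraint g(x) = 1 - x <= 0, i.e. x >= 1."""
    return 1.0 - x <= 0.0


def dual_function(u):
    """q(u) = inf over x of x^2 + u * (1 - x); the minimizer is x = u / 2."""
    x_star = u / 2.0
    return x_star * x_star + u * (1.0 - x_star)


# Sample primal-feasible points (x >= 1) and dual-feasible multipliers (u >= 0).
primal_points = [1.0 + 0.25 * k for k in range(9)]
dual_points = [0.5 * k for k in range(11)]

best_primal = min(primal_objective(x) for x in primal_points)
best_dual = max(dual_function(u) for u in dual_points)
weak_duality_holds = all(
    dual_function(u) <= primal_objective(x)
    for u in dual_points
    for x in primal_points
)
print(best_primal, best_dual, weak_duality_holds)
```

On this convex example the best sampled primal and dual values coincide, so the duality gap is zero, consistent with strong duality.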

Conjugate Gradient Method

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is symmetric and positive-definite. The conjugate gradient method is often implemented as an iterative algorithm, applicable to sparse systems that are too large to be handled by a direct implementation or other direct methods such as the Cholesky decomposition. Large sparse systems often arise when numerically solving partial differential equations or optimization problems. The conjugate gradient method can also be used to solve unconstrained optimization problems such as energy minimization. It is commonly attributed to Magnus Hestenes and Eduard Stiefel, who programmed it on the Z4 and researched it extensively. The biconjugate gradient method provides a generalization to non-symmetric matrices. Various nonlinear conjugate gradient methods seek minima of nonlinear optimization problems.

Description of the problem addres ...
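
A minimal sketch of the iterative form, written in plain Python with dense lists for readability. It assumes the matrix is symmetric positive-definite and starts from the zero vector; the function names, the stopping tolerance, and the iteration cap are illustrative assumptions, and a practical implementation would use sparse storage.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for a symmetric positive-definite A, starting from x = 0."""
    n = len(b)
    if max_iter is None:
        max_iter = 10 * n
    x = [0.0] * n
    r = list(b)          # residual b - A x, which equals b for x = 0
    p = list(r)          # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                    # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                         # keeps directions A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
print(conjugate_gradient(A, b))  # exact solution is (1/11, 7/11)
```

In exact arithmetic the method terminates in at most as many steps as there are unknowns, which is why the loop is capped at a small multiple of the dimension.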

Null Space

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the part of the domain which is mapped to the zero vector of the co-domain; the kernel is always a linear subspace of the domain. That is, given a linear map $L : V \to W$ between two vector spaces $V$ and $W$, the kernel of $L$ is the vector space of all elements $\mathbf{v}$ of $V$ such that $L(\mathbf{v}) = \mathbf{0}$, where $\mathbf{0}$ denotes the zero vector in $W$, or more symbolically: $\ker(L) = \left\{ \mathbf{v} \in V \mid L(\mathbf{v}) = \mathbf{0} \right\} = L^{-1}(\mathbf{0})$.

Properties: The kernel of $L$ is a linear subspace of the domain $V$. In the linear map $L : V \to W$, two elements of $V$ have the same image in $W$ if and only if their difference lies in the kernel of $L$, that is, $L(\mathbf{v}_1) = L(\mathbf{v}_2) \quad \text{if and only if} \quad L(\mathbf{v}_1 - \mathbf{v}_2) = \mathbf{0}$. From this, it follows ...
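
The property that two vectors share an image exactly when their difference lies in the kernel can be checked on a small example. The sketch below represents a hypothetical linear map by a 2 x 2 matrix of rank 1; the helper names and the sample vectors are assumptions made for illustration.

```python
def apply_map(A, v):
    """Apply the linear map represented by matrix A to vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def in_kernel(A, v, tol=1e-12):
    """True if A v is the zero vector (within tol)."""
    return all(abs(y) <= tol for y in apply_map(A, v))


# Hypothetical map with a one-dimensional kernel spanned by (2, -1).
A = [[1.0, 2.0], [2.0, 4.0]]

v1 = [1.0, 1.0]
v2 = [3.0, 0.0]
diff = [a - b for a, b in zip(v1, v2)]   # v1 - v2 = (-2, 1)

print(apply_map(A, v1), apply_map(A, v2), in_kernel(A, diff))
```

Both vectors map to the same image because their difference is a multiple of the kernel vector (2, -1).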

LU Factorization

In numerical analysis and linear algebra, lower–upper (LU) decomposition or factorization factors a matrix as the product of a lower triangular matrix and an upper triangular matrix (see matrix multiplication and matrix decomposition). The product sometimes includes a permutation matrix as well. LU decomposition can be viewed as the matrix form of Gaussian elimination. Computers usually solve square systems of linear equations using LU decomposition, and it is also a key step when inverting a matrix or computing the determinant of a matrix. It is also sometimes referred to as LR decomposition (factors into left and right triangular matrices). The LU decomposition was introduced by the Polish astronomer Tadeusz Banachiewicz in 1938, who first wrote the product equation $LU = A = h^T g$ (the last form, in his alternate yet equivalent matrix notation, appears as $g \times h$).

Definitions: Let $A$ be a square matrix. An LU factorization refers to the expression of $A$ as a product of two facto ...
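
The connection to Gaussian elimination can be sketched as follows. This is a minimal Doolittle-style factorization without pivoting, so it assumes every pivot is nonzero and omits the permutation matrix mentioned above; the function names and the sample system are illustrative assumptions.

```python
def lu_decompose(A):
    """Factor a square matrix as A = L U with unit lower-triangular L (no pivoting)."""
    n = len(A)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    U = [[float(v) for v in row] for row in A]
    for k in range(n):
        if U[k][k] == 0.0:
            raise ZeroDivisionError("zero pivot: this sketch does not pivot")
        for i in range(k + 1, n):
            factor = U[i][k] / U[k][k]   # Gaussian elimination multiplier
            L[i][k] = factor
            for j in range(k, n):
                U[i][j] -= factor * U[k][j]
    return L, U


def lu_solve(L, U, b):
    """Solve L U x = b by forward substitution, then back substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = b[i] - sum(L[i][k] * y[k] for k in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(U[i][k] * x[k] for k in range(i + 1, n))) / U[i][i]
    return x


A = [[4.0, 3.0], [6.0, 3.0]]
L, U = lu_decompose(A)
print(L, U, lu_solve(L, U, [10.0, 12.0]))
```

Once the factors are computed they can be reused for many right-hand sides, which is the main reason the factorization is preferred over repeated elimination.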

Lagrange Multipliers

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints (i.e., subject to the condition that one or more equations have to be satisfied exactly by the chosen values of the variables). It is named after the mathematician Joseph-Louis Lagrange.

Summary and rationale: The basic idea is to convert a constrained problem into a form such that the derivative test of an unconstrained problem can still be applied. The relationship between the gradient of the function and gradients of the constraints rather naturally leads to a reformulation of the original problem, known as the Lagrangian function or Lagrangian. In the general case, the Lagrangian is defined as $\mathcal{L}(x, \lambda) \equiv f(x) + \langle \lambda, g(x)\rangle$ for functions $f, g$; the notation $\langle \cdot, \cdot \rangle$ denotes an inner product. The value $\lambda$ is called the Lagrange multiplier. In simple ca ...
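
As a worked illustration of the definition above, consider a hypothetical problem chosen for this sketch: find the extrema of $f(x, y) = x + y$ on the unit circle, with the constraint written as $g(x, y) = x^2 + y^2 - 1 = 0$ and the same sign convention $\mathcal{L} = f + \lambda g$.

```latex
\begin{aligned}
&\mathcal{L}(x, y, \lambda) = x + y + \lambda\,(x^2 + y^2 - 1), \\
&\frac{\partial \mathcal{L}}{\partial x} = 1 + 2\lambda x = 0, \qquad
 \frac{\partial \mathcal{L}}{\partial y} = 1 + 2\lambda y = 0
 \;\Longrightarrow\; x = y = -\frac{1}{2\lambda}, \\
&x^2 + y^2 = 1 \;\Longrightarrow\; x = y = \pm\frac{\sqrt{2}}{2}, \qquad
 \lambda = \mp\frac{\sqrt{2}}{2}, \\
&f\!\left(\tfrac{\sqrt{2}}{2}, \tfrac{\sqrt{2}}{2}\right) = \sqrt{2} \ \text{(maximum)}, \qquad
 f\!\left(-\tfrac{\sqrt{2}}{2}, -\tfrac{\sqrt{2}}{2}\right) = -\sqrt{2} \ \text{(minimum)}.
\end{aligned}
```

Setting the partial derivatives of the Lagrangian to zero recovers both the stationarity conditions and, through the derivative in $\lambda$, the constraint itself.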

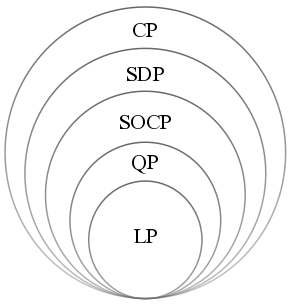

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form): A convex optimization problem is defined by two ingredients:
* The objective function, which is a real-valued convex function of $n$ variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The feasible set, which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^\ast \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:
* If such a point $\mathbf{x}^\ast$ exists, it is referred to as an optimal point or solution; the set of all optimal points is called the optimal set; and the problem is called solvable.
* If $f$ is unbou ...

Simplex Algorithm

In mathematical optimization, Dantzig's simplex algorithm (or simplex method) is a popular algorithm for linear programming. The name of the algorithm is derived from the concept of a simplex and was suggested by T. S. Motzkin. Simplices are not actually used in the method, but one interpretation of it is that it operates on simplicial cones, and these become proper simplices with an additional constraint. The simplicial cones in question are the corners (i.e., the neighborhoods of the vertices) of a geometric object called a polytope. The shape of this polytope is defined by the constraints applied to the objective function.

History: George Dantzig worked on planning methods for the US Army Air Force during World War II using a desk calculator. During 1946, his colleague challenged him to mechanize the planning process to distract him from taking another job. Dantzig formulated the problem as linear inequalities inspired by the work of Wassily Leontief; however, at tha ...