
Pseudo-variance

In probability theory and statistics, complex random variables are a generalization of real-valued random variables to complex numbers, i.e. the possible values a complex random variable may take are complex numbers. Complex random variables can always be considered as pairs of real random variables: their real and imaginary parts. Therefore, the distribution of one complex random variable may be interpreted as the joint distribution of two real random variables. Some concepts of real random variables have a straightforward generalization to complex random variables, e.g. the definition of the mean of a complex random variable. Other concepts are unique to complex random variables. Applications of complex random variables are found in digital signal processing, quadrature amplitude modulation and information theory. Definition: A complex random variable $Z$ on the probability space $(\Omega,\mathcal{F},P)$ is a function $Z \colon \Omega \rightarrow \mathbb{C}$ such that both its real part ...
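As a rough illustration of treating a complex random variable as a pair of real ones, the sketch below computes the mean, variance and pseudo-variance of two small discrete distributions. It assumes the usual definitions $\operatorname{Var}[Z] = \operatorname{E}[|Z - \operatorname{E}[Z]|^2]$ and pseudo-variance $\operatorname{E}[(Z - \operatorname{E}[Z])^2]$, which the excerpt above does not spell out. The function names and the two example constellations are hypothetical.

```python
# Sketch only: exact moments of a finite discrete complex random variable.
# The definitions of variance and pseudo-variance used here are assumptions
# (the standard ones), not quoted from the excerpt.

def mean(values, probs):
    """E[Z] for a discrete complex random variable."""
    return sum(p * z for z, p in zip(values, probs))

def variance(values, probs):
    """E[|Z - E[Z]|^2]; always real and non-negative."""
    mu = mean(values, probs)
    return sum(p * abs(z - mu) ** 2 for z, p in zip(values, probs))

def pseudo_variance(values, probs):
    """E[(Z - E[Z])^2]; complex in general, no conjugation involved."""
    mu = mean(values, probs)
    return sum(p * (z - mu) * (z - mu) for z, p in zip(values, probs))

# Two-point constellation on the real axis: variance 1, pseudo-variance 1.
bpsk = [1 + 0j, -1 + 0j]
bpsk_probs = [0.5, 0.5]

# Four-point constellation on the unit circle: variance 1, pseudo-variance 0.
qpsk = [1 + 0j, 1j, -1 + 0j, -1j]
qpsk_probs = [0.25, 0.25, 0.25, 0.25]

print(variance(bpsk, bpsk_probs), pseudo_variance(bpsk, bpsk_probs))
print(variance(qpsk, qpsk_probs), pseudo_variance(qpsk, qpsk_probs))
```

Both examples have the same variance, but only the second has zero pseudo-variance. The pseudo-variance therefore carries information that the variance alone does not.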

Pseudo-covariance

In probability theory and statistics, complex random variables are a generalization of real-valued random variables to complex numbers, i.e. the possible values a complex random variable may take are complex numbers. Complex random variables can always be considered as pairs of real random variables: their real and imaginary parts. Therefore, the distribution of one complex random variable may be interpreted as the joint distribution of two real random variables. Some concepts of real random variables have a straightforward generalization to complex random variables, e.g. the definition of the mean of a complex random variable. Other concepts are unique to complex random variables. Applications of complex random variables are found in digital signal processing, quadrature amplitude modulation and information theory. Definition: A complex random variable $Z$ on the probability space $(\Omega,\mathcal{F},P)$ is a function $Z \colon \Omega \rightarrow \mathbb{C}$ such that both its real part ...

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...

Linear Operator

In mathematics, and more specifically in linear algebra, a linear map (also called a linear mapping, linear transformation, vector space homomorphism, or in some contexts linear function) is a mapping $V \to W$ between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism. If a linear map is a bijection then it is called a linear isomorphism. In the case where $V = W$, a linear map is called a (linear) endomorphism. Sometimes the term linear operator refers to this case, but the term "linear operator" can have different meanings for different conventions: for example, it can be used to emphasize that $V$ and $W$ are real vector spaces (not necessarily with $V = W$), or it can be used to emphasize that $V$ is a function space, which is a common convention in functional analysis. Sometimes the term linear function has the same meaning as linear map ...

Complex Random Vector

In probability theory and statistics, a complex random vector is typically a tuple of complex-valued random variables, and generally is a random variable taking values in a vector space over the field of complex numbers. If $Z_1,\ldots,Z_n$ are complex-valued random variables, then the $n$-tuple $\left( Z_1,\ldots,Z_n \right)$ is a complex random vector. Complex random vectors can always be considered as pairs of real random vectors: their real and imaginary parts. Some concepts of real random vectors have a straightforward generalization to complex random vectors, for example the definition of the mean of a complex random vector. Other concepts are unique to complex random vectors. Applications of complex random vectors are found in digital signal processing. Definition: A complex random vector $\mathbf{Z} = (Z_1,\ldots,Z_n)^T$ on the probability space $(\Omega,\mathcal{F},P)$ is a function $\mathbf{Z} \colon \Omega \rightarrow \mathbb{C}^n$ such that the vector $(\Re(Z_1),\Im(Z_1),\ldots,\Re(Z_n),\Im(Z_n))^T$ is ...

Central Moment

In probability theory and statistics, a central moment is a moment of a probability distribution of a random variable about the random variable's mean; that is, it is the expected value of a specified integer power of the deviation of the random variable from the mean. The various moments form one set of values by which the properties of a probability distribution can be usefully characterized. Central moments are used in preference to ordinary moments, computed in terms of deviations from the mean instead of from zero, because the higher-order central moments relate only to the spread and shape of the distribution, rather than also to its location. Sets of central moments can be defined for both univariate and multivariate distributions. Univariate moments: The $n$th moment about the mean (or $n$th central moment) of a real-valued random variable $X$ is the quantity $\mu_n := \operatorname{E}[(X - \operatorname{E}[X])^n]$ ...
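The definition of the $n$th central moment can be sketched for the empirical distribution of a finite sample, where each observation gets equal weight $1/N$. The function name `central_moment` and the sample `data` are illustrative assumptions. Dividing by $N$ with no bias correction is a choice made here for simplicity.

```python
# Sketch only: n-th central moment of the empirical distribution of a sample.
# Each observation is weighted 1/N (no bias correction); this is an assumption.

def central_moment(sample, n):
    """Average of (x - mean)^n over the sample."""
    mean = sum(sample) / len(sample)
    return sum((x - mean) ** n for x in sample) / len(sample)

data = [1.0, 2.0, 3.0, 4.0, 5.0]

# The first central moment is always zero; the second is the variance.
# For this symmetric sample the third central moment is zero as well.
for order in (1, 2, 3, 4):
    print(order, central_moment(data, order))
```

Shifting every observation by the same constant leaves all of these values unchanged. This is the sense in which central moments describe spread and shape but not location.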

Characteristic Function (probability Theory)

In probability theory and statistics, the characteristic function of any real-valued random variable completely defines its probability distribution. If a random variable admits a probability density function, then the characteristic function is the Fourier transform of the probability density function. Thus it provides an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the characteristic functions of distributions defined by the weighted sums of random variables. In addition to univariate distributions, characteristic functions can be defined for vector- or matrix-valued random variables, and can also be extended to more generic cases. The characteristic function always exists when treated as a function of a real-valued argument, unlike the moment-generating function. There are relations between the behavior of the characteristic function of a ...

Hölder's Inequality

In mathematical analysis, Hölder's inequality, named after Otto Hölder, is a fundamental inequality between integrals and an indispensable tool for the study of $L^p$ spaces. Theorem (Hölder's inequality). Let $(S, \Sigma, \mu)$ be a measure space and let $p, q \in [1, \infty]$ with $1/p + 1/q = 1$. Then for all measurable real- or complex-valued functions $f$ and $g$ on $S$, $\|fg\|_1 \le \|f\|_p \|g\|_q$. If, in addition, $p, q \in (1, \infty)$ and $f \in L^p(\mu)$ and $g \in L^q(\mu)$, then Hölder's inequality becomes an equality if and only if $|f|^p$ and $|g|^q$ are linearly dependent in $L^1(\mu)$, meaning that there exist real numbers $\alpha, \beta \ge 0$, not both of them zero, such that $\alpha |f|^p = \beta |g|^q$ $\mu$-almost everywhere. The numbers $p$ and $q$ above are said to be Hölder conjugates of each other. The special case $p = q = 2$ gives a form of the Cauchy–Schwarz inequality. Hölder's inequality holds even if $\|fg\|_1$ is infinite, the right-hand side also being infinite in that case. Conversely, if $f$ is in $L^p(\mu)$ and $g$ is in $L^q(\mu)$, then the pointwise product $fg$ is in $L^1(\mu)$. Hölder's inequality is used to ...
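A small numerical check can make the statement concrete. The sketch below assumes the counting measure on a finite set, so the integrals reduce to sums over list entries, and it only handles finite exponents greater than 1. The function names and the sample vectors are hypothetical.

```python
# Sketch only: Hölder's inequality for finite sequences (counting measure).
# Handles finite exponents p > 1; the conjugate exponent is q = p / (p - 1).

def p_norm(values, p):
    """(sum |v|^p)^(1/p) for a finite sequence."""
    return sum(abs(v) ** p for v in values) ** (1.0 / p)

def holder_sides(f, g, p):
    """Return (||fg||_1, ||f||_p * ||g||_q) with 1/p + 1/q = 1."""
    q = p / (p - 1.0)
    lhs = sum(abs(a * b) for a, b in zip(f, g))
    rhs = p_norm(f, p) * p_norm(g, q)
    return lhs, rhs

f = [1.0, -2.0, 3.0]
g = [0.5, 4.0, -1.0]

lhs, rhs = holder_sides(f, g, 3.0)   # p = 3, q = 1.5
print(lhs <= rhs)

# With p = q = 2 and g proportional to f, |f|^2 and |g|^2 are linearly
# dependent, so the two sides agree up to floating-point rounding.
lhs_eq, rhs_eq = holder_sides(f, [2.0 * v for v in f], 2.0)
print(abs(lhs_eq - rhs_eq) < 1e-9)
```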

Triangle Inequality

In mathematics, the triangle inequality states that for any triangle, the sum of the lengths of any two sides must be greater than or equal to the length of the remaining side. This statement permits the inclusion of degenerate triangles, but some authors, especially those writing about elementary geometry, will exclude this possibility, thus leaving out the possibility of equality. If $x$, $y$, and $z$ are the lengths of the sides of the triangle, with no side being greater than $z$, then the triangle inequality states that $z \leq x + y$, with equality only in the degenerate case of a triangle with zero area. In Euclidean geometry and some other geometries, the triangle inequality is a theorem about distances, and it is written using vectors and vector lengths (norms): $\|\mathbf x + \mathbf y\| \leq \|\mathbf x\| + \|\mathbf y\|$, where the length $z$ of the third side has been replaced by the vector sum $\mathbf x + \mathbf y$. When $x$ and $y$ are real numbers, they can be viewed as vectors in $\mathbb{R}^1$, and the trian ...

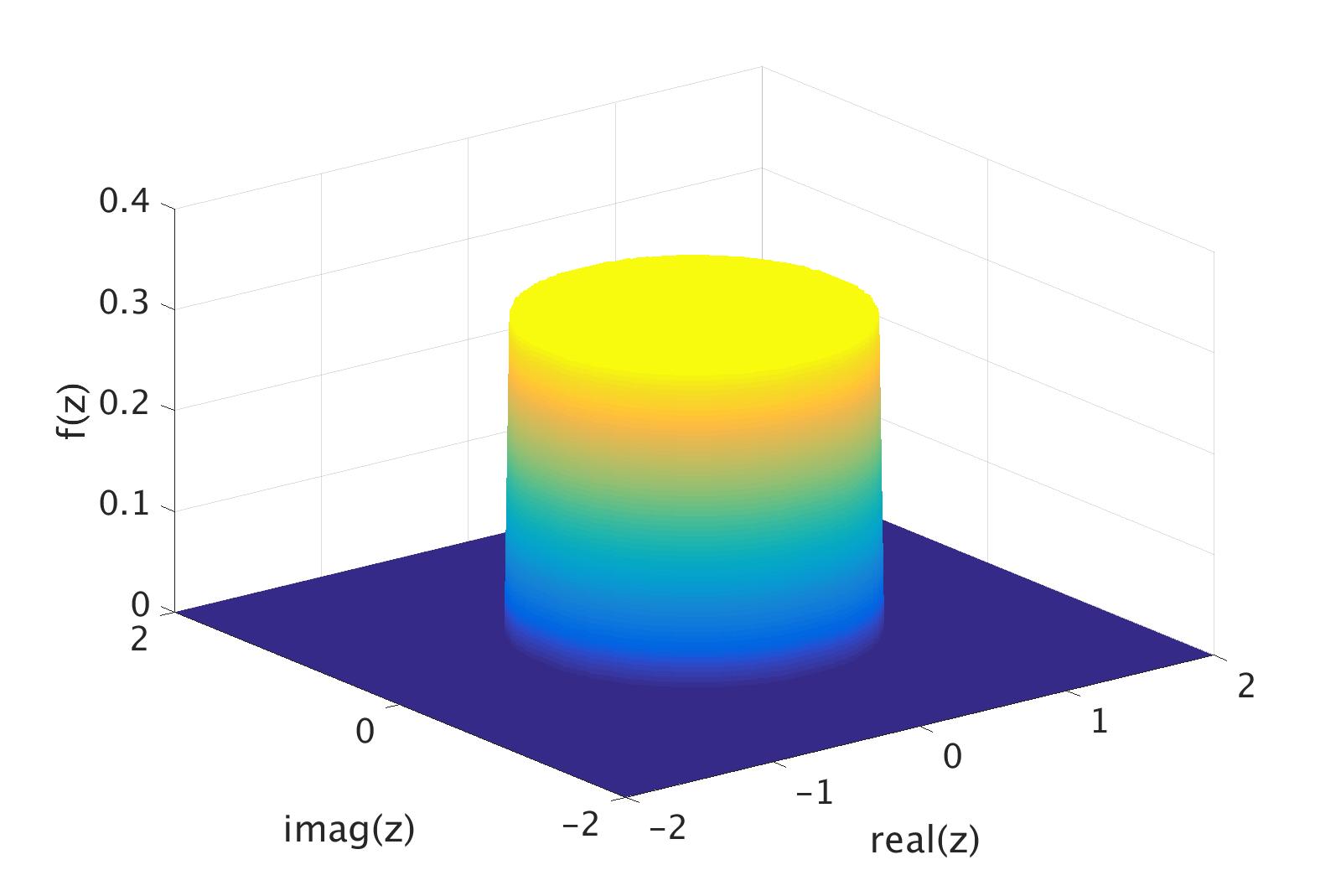

Complex Normal Distribution

In probability theory, the family of complex normal distributions, denoted $\mathcal{CN}$ or $\mathcal{N}_{\mathcal{C}}$, characterizes complex random variables whose real and imaginary parts are jointly normal. The complex normal family has three parameters: location parameter $\mu$, covariance matrix $\Gamma$, and the relation matrix $C$. The standard complex normal is the univariate distribution with $\mu = 0$, $\Gamma = 1$, and $C = 0$. An important subclass of the complex normal family is called the circularly-symmetric (central) complex normal and corresponds to the case of zero relation matrix and zero mean: $\mu = 0$ and $C = 0$. This case is used extensively in signal processing, where it is sometimes referred to as just complex normal in the literature. Definitions. Complex standard normal random variable: The standard complex normal random variable or standard complex Gaussian random variable is a complex random variable $Z$ whose real and imaginary parts are independent normally distributed random var ...
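The standard complex normal parameters $\mu = 0$, $\Gamma = 1$, $C = 0$ can be checked roughly by simulation. The sketch below assumes the usual construction in which the real and imaginary parts are independent, each with mean 0 and variance 1/2. That value is not visible in the truncated excerpt, so it is an assumption here. The function name, seed and sample size are arbitrary illustrative choices.

```python
# Sketch only: Monte Carlo estimate of the parameters of a standard complex
# normal variable. Assumes real and imaginary parts are independent N(0, 1/2).
import random

def sample_standard_complex_normal(rng):
    """Draw one sample with independent N(0, 1/2) real and imaginary parts."""
    sigma = 0.5 ** 0.5  # standard deviation giving variance 1/2 per part
    return complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_standard_complex_normal(rng) for _ in range(20000)]
n = len(samples)

mu_hat = sum(samples) / n                                   # estimates mu
gamma_hat = sum(abs(z - mu_hat) ** 2 for z in samples) / n  # estimates Gamma
c_hat = sum((z - mu_hat) ** 2 for z in samples) / n         # estimates C

print(mu_hat, gamma_hat, c_hat)
```

The estimates should land close to 0, 1 and 0 respectively, with deviations shrinking as the sample size grows.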

Uncorrelatedness (probability Theory)

In probability theory and statistics, two real-valued random variables, $X$, $Y$, are said to be uncorrelated if their covariance, $\operatorname{cov}[X,Y] = \operatorname{E}[XY] - \operatorname{E}[X]\operatorname{E}[Y]$, is zero. If two variables are uncorrelated, there is no linear relationship between them. Uncorrelated random variables have a Pearson correlation coefficient, when it exists, of zero, except in the trivial case when either variable has zero variance (is a constant). In this case the correlation is undefined. In general, uncorrelatedness is not the same as orthogonality, except in the special case where at least one of the two random variables has an expected value of 0. In this case, the covariance is the expectation of the product, and $X$ and $Y$ are uncorrelated if and only if $\operatorname{E}[XY] = 0$. If $X$ and $Y$ are independent, with finite second moments, then they are uncorrelated. However, not all uncorrelated variables are independent. Definition Definition for two real random var ...
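The claim that uncorrelated variables need not be independent can be illustrated with a standard textbook example, chosen here as an assumption: $X$ uniform on $\{-1, 0, 1\}$ and $Y = X^2$. The sketch uses exact rational arithmetic. The helper names are hypothetical.

```python
# Sketch only: X uniform on {-1, 0, 1} and Y = X^2 are uncorrelated
# but not independent. Exact arithmetic via fractions.Fraction.
from fractions import Fraction

def expectation(joint, func):
    """E[func(X, Y)] for a joint distribution given as [((x, y), prob), ...]."""
    return sum(p * func(x, y) for (x, y), p in joint)

def covariance(joint):
    """cov[X, Y] = E[XY] - E[X] E[Y]."""
    ex = expectation(joint, lambda x, y: x)
    ey = expectation(joint, lambda x, y: y)
    exy = expectation(joint, lambda x, y: x * y)
    return exy - ex * ey

third = Fraction(1, 3)
joint = [((x, x * x), third) for x in (-1, 0, 1)]

cov_xy = covariance(joint)  # zero: the variables are uncorrelated

# Independence would require P(X=1, Y=1) == P(X=1) * P(Y=1).
p_x1 = expectation(joint, lambda x, y: 1 if x == 1 else 0)
p_y1 = expectation(joint, lambda x, y: 1 if y == 1 else 0)
p_x1_y1 = expectation(joint, lambda x, y: 1 if (x == 1 and y == 1) else 0)

print(cov_xy, p_x1_y1, p_x1 * p_y1)
```

The covariance is exactly zero, yet the joint probability differs from the product of the marginals because $Y$ is completely determined by $X$.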

Covariance Matrix

In probability theory and statistics, a covariance matrix (also known as auto-covariance matrix, dispersion matrix, variance matrix, or variance–covariance matrix) is a square matrix giving the covariance between each pair of elements of a given random vector. Any covariance matrix is symmetric and positive semi-definite and its main diagonal contains variances (i.e., the covariance of each element with itself). Intuitively, the covariance matrix generalizes the notion of variance to multiple dimensions. As an example, the variation in a collection of random points in two-dimensional space cannot be characterized fully by a single number, nor would the variances in the $x$ and $y$ directions contain all of the necessary information; a $2 \times 2$ matrix would be necessary to fully characterize the two-dimensional variation. The covariance matrix of a random vector $\mathbf{X}$ is typically denoted by $\operatorname{K}_{\mathbf{X}\mathbf{X}}$ or $\Sigma$. Definition: Throughout this article, boldfaced unsubsc ...
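The two-dimensional example can be sketched with a handful of points. The code below computes the covariance matrix of the empirical distribution, dividing by the number of points rather than applying a bias correction, which is an assumption made here. The function name and the four sample points are illustrative.

```python
# Sketch only: covariance matrix of the empirical distribution of a small
# set of 2-D points. Divides by N (no bias correction); this is an assumption.

def covariance_matrix(rows):
    """Return the d x d matrix of covariances between coordinates."""
    n = len(rows)
    d = len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [
        [
            sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / n
            for j in range(d)
        ]
        for i in range(d)
    ]

points = [(0.0, 0.0), (1.0, 2.0), (2.0, 1.0), (3.0, 3.0)]
sigma = covariance_matrix(points)

# Diagonal entries are the variances in the x and y directions;
# the off-diagonal entry records how the two coordinates vary together.
for row in sigma:
    print(row)
```

For these points both variances are 1.25 and the off-diagonal covariance is 1.0. The matrix is symmetric, and the off-diagonal entry is the part that the two variances alone would miss.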