Pronunciation Lexicon Specification

The Pronunciation Lexicon Specification (PLS) is a W3C Recommendation designed to enable interoperable specification of pronunciation information for both speech recognition and speech synthesis engines within voice browsing applications. The language is intended to be easy for developers to use while supporting the accurate specification of pronunciation information for international use. It allows one or more pronunciations for a word or phrase to be specified using a standard pronunciation alphabet or, if necessary, vendor-specific alphabets. Pronunciations are grouped together into a PLS document, which may be referenced from other markup languages, such as the Speech Recognition Grammar Specification (SRGS) and the Speech Synthesis Markup Language (SSML).

Usage

The example PLS document lists the spellings "judgment" and "judgement" with the pronunciation ˈdʒʌdʒ.mənt, and the spellings "fiancé" and "fiance" with the pronunciations fiˈɒns.eɪ and ˌfiː.ɑːnˈseɪ ...
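As a rough illustration of how such a lexicon might be consumed, the sketch below parses a small PLS document with Python's standard library and maps each spelling to its pronunciations. The `lexeme`, `grapheme`, and `phoneme` elements and the namespace come from the PLS Recommendation; the attribute values on `lexicon` (for instance `xml:lang="en-US"`) and the helper name `load_lexicon` are assumptions made for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the W3C PLS 1.0 Recommendation.
PLS_NS = "http://www.w3.org/2005/01/pronunciation-lexicon"

# Assumed wrapper attributes; only the words and pronunciations
# are taken from the example described in the text.
PLS_DOC = """<lexicon version="1.0"
    xmlns="http://www.w3.org/2005/01/pronunciation-lexicon"
    alphabet="ipa" xml:lang="en-US">
  <lexeme>
    <grapheme>judgment</grapheme>
    <grapheme>judgement</grapheme>
    <phoneme>ˈdʒʌdʒ.mənt</phoneme>
  </lexeme>
  <lexeme>
    <grapheme>fiancé</grapheme>
    <grapheme>fiance</grapheme>
    <phoneme>fiˈɒns.eɪ</phoneme>
    <phoneme>ˌfiː.ɑːnˈseɪ</phoneme>
  </lexeme>
</lexicon>"""


def load_lexicon(xml_text):
    """Map every grapheme to the list of phoneme strings in its lexeme."""
    ns = {"pls": PLS_NS}
    root = ET.fromstring(xml_text)
    lexicon = {}
    for lexeme in root.findall("pls:lexeme", ns):
        phonemes = [p.text for p in lexeme.findall("pls:phoneme", ns)]
        for grapheme in lexeme.findall("pls:grapheme", ns):
            lexicon[grapheme.text] = phonemes
    return lexicon


if __name__ == "__main__":
    for spelling, sounds in load_lexicon(PLS_DOC).items():
        print(spelling, sounds)
```

Because both spellings of a lexeme share one pronunciation list, a synthesizer or recognizer can look up either spelling and obtain the same result.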

Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies enabling computers to recognize spoken language and translate it into text, with searchability as the main benefit. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It draws on knowledge and research in computer science, linguistics and computer engineering. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment"), in which an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker-dependent". Speech recognition ...

Speech Synthesis

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition. Synthesized speech can be created by concatenating pieces of recorded speech that are stored in a database. Systems differ in the size of the stored speech units; a system that stores phones or diphones provides the largest output range, but may lack clarity. For specific usage domains, the storage of entire words or sentences allows for high-quality output. Alternatively, a synthesizer can incorporate a model of the vocal tract and other human voice characteristics to create a completely "synthetic" voice output. The quality of a speech synthesizer is judged by its similarity to ...

SRGS

Speech Recognition Grammar Specification (SRGS) is a W3C standard for how speech recognition grammars are specified. A speech recognition grammar is a set of word patterns that tells a speech recognition system what to expect a human to say. For instance, if you call an auto-attendant application, it will prompt you for the name of a person (with the expectation that your call will be transferred to that person's phone). It will then start up a speech recognizer, giving it a speech recognition grammar. This grammar contains the names of the people in the auto-attendant's directory and a collection of sentence patterns that are the typical responses from callers to the prompt. SRGS specifies two alternative but equivalent syntaxes, one based on XML and one using augmented BNF (ABNF) format. In practice, the XML syntax is used more frequently. Both the ABNF and XML forms have the expressive power of a context-free grammar. A grammar processor that does not support recursive grammars ...
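The auto-attendant scenario can be sketched in a few lines of Python. This is not SRGS syntax and not how a real recognizer works internally; it only illustrates the idea of a grammar as directory names combined with sentence patterns. The names, the patterns, and the functions `expand` and `recognize` are hypothetical.

```python
# Hypothetical directory entries and caller response patterns.
NAMES = ["alice jones", "bob smith"]
PATTERNS = [
    "{name}",
    "{name} please",
    "i would like to speak to {name}",
]


def expand(patterns, names):
    """Enumerate every phrase the toy grammar accepts, mapped to its name."""
    return {p.format(name=n): n for p in patterns for n in names}


def recognize(utterance, phrases):
    """Return the matched directory name, or None if out of grammar."""
    normalized = " ".join(utterance.lower().split())
    return phrases.get(normalized)


if __name__ == "__main__":
    phrases = expand(PATTERNS, NAMES)
    print(recognize("Bob Smith please", phrases))
    print(recognize("what time is it", phrases))
```

Enumerating phrases only works for a finite, non-recursive grammar like this one; grammars with the full expressive power of a context-free grammar need a parser instead of a lookup table.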

Speech Synthesis Markup Language

Speech Synthesis Markup Language (SSML) is an XML-based markup language for speech synthesis applications. It is a recommendation of the W3C's Voice Browser Working Group. SSML is often embedded in VoiceXML scripts to drive interactive telephony systems. However, it may also be used alone, for example to create audio books. For desktop applications, other markup languages are popular, including Apple's embedded speech commands and Microsoft's SAPI text-to-speech (TTS) markup, also an XML language. SSML is also used to produce sounds via Azure Cognitive Services' Text to Speech API and when writing third-party skills for Google Assistant or Amazon Alexa. SSML is based on the Java Speech Markup Language (JSML) developed by Sun Microsystems, although the current recommendation was developed mostly by speech synthesis vendors. It covers virtually all aspects of synthesis, although some areas have been left unspecified, so each vendor accepts a different variant of the language. Also, in ...
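To give a feel for the markup, the following sketch builds a small SSML document with Python's standard library. The `speak`, `s`, and `break` elements and the namespace are part of the SSML Recommendation; the function name `build_ssml`, the default pause length, and the sample sentences are assumptions for illustration, and individual vendors may accept a different subset.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the W3C SSML Recommendation.
SSML_NS = "http://www.w3.org/2001/10/synthesis"
XML_NS = "http://www.w3.org/XML/1998/namespace"


def build_ssml(sentences, pause="500ms", lang="en-US"):
    """Wrap sentences in <s> elements separated by <break> pauses."""
    # Serialize SSML elements without a namespace prefix.
    ET.register_namespace("", SSML_NS)
    speak = ET.Element(
        f"{{{SSML_NS}}}speak",
        {"version": "1.0", f"{{{XML_NS}}}lang": lang},
    )
    for index, text in enumerate(sentences):
        sentence = ET.SubElement(speak, f"{{{SSML_NS}}}s")
        sentence.text = text
        # Insert a pause between sentences, but not after the last one.
        if index < len(sentences) - 1:
            ET.SubElement(speak, f"{{{SSML_NS}}}break", {"time": pause})
    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_ssml(["Your call is important to us.", "Please hold."]))
```

Generating the markup through an XML library, instead of string concatenation, keeps the output well-formed when the sentence text contains characters such as `&` or `<`.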

Homophones

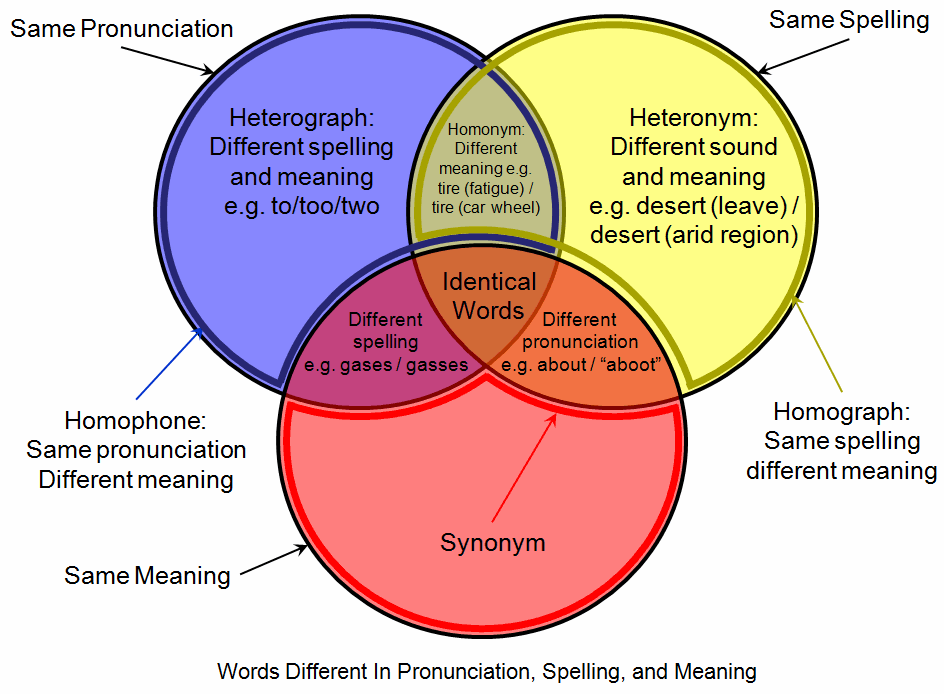

A homophone is a word that is pronounced the same (to varying extent) as another word but differs in meaning. A homophone may also differ in spelling. The two words may be spelled the same, for example "rose" (flower) and "rose" (past tense of "rise"), or spelled differently, as in "rain", "reign", and "rein". The term "homophone" may also apply to units longer or shorter than words, for example a phrase, letter, or group of letters that is pronounced the same as another phrase, letter, or group of letters. Any unit with this property is said to be homophonous. Homophones that are spelled the same are also both homographs and homonyms, e.g. the word "read", as in "He is well read" (he is very learned) vs. the sentence "I read that book" (I have finished reading that book). Homophones that are spelled differently are also called heterographs, e.g. "to", "too", and "two". Etymology: "Homophone" derives from Greek homo- (ὁμο ...

Homographs

A homograph (from the Greek ὁμός, homós, "same" and γράφω, gráphō, "write") is a word that shares the same written form as another word but has a different meaning. However, some dictionaries insist that the words must also be pronounced differently, while the Oxford English Dictionary says that the words should also be of "different origin". In this vein, The Oxford Guide to Practical Lexicography lists various types of homographs, including those in which the words are discriminated by being in a different word class, such as "hit", the verb "to strike", and "hit", the noun "a blow". If, when spoken, the meanings may be distinguished by different pronunciations, the words are also heteronyms. Words with the same writing and pronunciation (i.e. words that are both homographs and homophones) are considered homonyms. However, in a looser sense the term "homonym" may be applied to words with the same writing or pronunciation. Homograph disambiguat ...
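The relationships between spelling and pronunciation described above can be made concrete with a small Python sketch. The word list and its IPA transcriptions are a toy data set chosen for this example, and the function names are hypothetical. Because the data records only spelling and pronunciation, not meaning, it finds differently spelled homophones (heterographs) and heteronyms, but cannot detect same-spelled homophones such as the two senses of "rose".

```python
from collections import defaultdict

# Toy (spelling, pronunciation) pairs; transcriptions are illustrative.
ENTRIES = [
    ("rain", "reɪn"), ("reign", "reɪn"), ("rein", "reɪn"),
    ("to", "tuː"), ("too", "tuː"), ("two", "tuː"),
    ("read", "riːd"), ("read", "rɛd"),
]


def heterographs(entries):
    """Group differently spelled words that share one pronunciation."""
    by_sound = defaultdict(set)
    for spelling, sound in entries:
        by_sound[sound].add(spelling)
    return {s: words for s, words in by_sound.items() if len(words) > 1}


def heteronyms(entries):
    """Find spellings that carry more than one pronunciation."""
    by_spelling = defaultdict(set)
    for spelling, sound in entries:
        by_spelling[spelling].add(sound)
    return {w: sounds for w, sounds in by_spelling.items() if len(sounds) > 1}


if __name__ == "__main__":
    print(heterographs(ENTRIES))
    print(heteronyms(ENTRIES))
```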

Orthographies

An orthography is a set of conventions for writing a language, including norms of spelling, hyphenation, capitalization, word breaks, emphasis, and punctuation. Most transnational languages in the modern period have a writing system, and most of these systems have undergone substantial standardization, thus exhibiting less dialect variation than the spoken language. These processes can fossilize pronunciation patterns that are no longer routinely observed in speech (e.g., "would" and "should"); they can also reflect deliberate efforts to introduce variability for the sake of national identity, as seen in Noah Webster's efforts to introduce easily noticeable differences between American and British spelling (e.g., "honor" and "honour"). Some nations (e.g. France and Spain) have established language academies in an attempt to regulate orthography officially. For most languages (including English) however, there are no such authorities and a sense of 'correct' orthography evol ...

Pronunciations

Pronunciation is the way in which a word or a language is spoken. This may refer to generally agreed-upon sequences of sounds used in speaking a given word or language in a specific dialect ("correct pronunciation") or simply the way a particular individual speaks a word or language. Contested or widely mispronounced words are typically verified by the sources from which they originate, such as names of cities and towns or the word GIF. A word can be spoken in different ways by various individuals or groups, depending on many factors, such as the duration of the cultural exposure of their childhood, the location of their current residence, speech or voice disorders, their ethnic group, their social class, or their education.

Linguistic terminology

Syllables are counted as units of sound (phones) that speakers use in their language. The branch of linguistics which studies these units of sound is phonetics. Phones which play the same role are grouped together into classes called p ...

VoiceXML

VoiceXML (VXML) is a digital document standard for specifying interactive media and voice dialogs between humans and computers. It is used for developing audio and voice response applications, such as banking systems and automated customer service portals. VoiceXML applications are developed and deployed in a manner analogous to how a web browser interprets and visually renders the Hypertext Markup Language (HTML) it receives from a web server. VoiceXML documents are interpreted by a voice browser, and in common deployment architectures users interact with voice browsers via the public switched telephone network (PSTN). The VoiceXML document format is based on Extensible Markup Language (XML). It is a standard developed by the World Wide Web Consortium (W3C).

Usage

VoiceXML applications are commonly used in many industries and segments of commerce. These applications include order inquiry, package tracking, driving directions, emergency notification, wake-up, flight tracking, voice ...

SISR

Semantic Interpretation for Speech Recognition (SISR) defines the syntax and semantics of annotations to grammar rules in the Speech Recognition Grammar Specification (SRGS). It has been a World Wide Web Consortium recommendation since 5 April 2007. By building upon SRGS grammars, it allows voice browsers to use ECMAScript to semantically interpret complex grammars and provide the information back to the application. For example, it allows utterances like "I would like a Coca-cola and three large pizzas with pepperoni and mushrooms." to be interpreted into an object that can be understood by an application. The utterance could produce such an object if used against a grammar that includes SISR markup in addition to the standard SRGS grammar in XML format: I would like a out.drink = new Object(); out.drink.liquid=rules.drink.type; out.drink.drinksize=rules.drink.drinksize; and out.pizza=rules.pizz ...