Probability Density Estimation

In statistics, probability density estimation, or simply density estimation, is the construction of an estimate, based on observed data, of an unobservable underlying probability density function. The unobservable density function is thought of as the density according to which a large population is distributed; the data are usually thought of as a random sample from that population. A variety of approaches to density estimation are used, including Parzen windows and a range of data clustering techniques, including vector quantization. The most basic form of density estimation is a rescaled histogram.

Example: We will consider records of the incidence of diabetes. The following is quoted verbatim from the data set description: A population of women who were at least 21 years old, of Pima Indian heritage and living near Phoenix, Arizona, was tested for diabetes mellitus according to World Health Organization criteria. The data were collected by the US National Insti ...

Blood Plasma

Blood plasma is a light amber-colored liquid component of blood in which blood cells are absent, but which contains proteins and other constituents of whole blood in suspension. It makes up about 55% of the body's total blood volume. It is the intravascular part of extracellular fluid (all body fluid outside cells). It is mostly water (up to 95% by volume), and contains important dissolved proteins (6–8%; e.g., serum albumins, globulins, and fibrinogen), glucose, clotting factors, electrolytes, hormones, carbon dioxide (plasma being the main medium for excretory product transportation), and oxygen. It plays a vital role in an intravascular osmotic effect that keeps electrolyte concentration balanced and protects the body from infection and other blood-related disorders. Blood plasma is separated from the blood by spinning a vessel of fresh blood containing an anticoagulant in a centrifuge until the blood cells fall to the bottom of the tube. The blood plasma is t ...

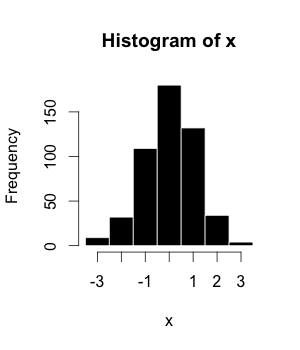

Histogram

A histogram is an approximate representation of the distribution of numerical data. The term was first introduced by Karl Pearson. To construct a histogram, the first step is to "bin" (or "bucket") the range of values, that is, divide the entire range of values into a series of intervals, and then count how many values fall into each interval. The bins are usually specified as consecutive, non-overlapping intervals of a variable. The bins (intervals) must be adjacent and are often (but not required to be) of equal size. If the bins are of equal size, a bar is drawn over each bin with height proportional to the frequency, i.e. the number of cases in that bin. A histogram may also be normalized to display "relative" frequencies, showing the proportion of cases that fall into each of several categories, with the sum of the heights equaling 1. However, bins need not be of equal width; in that case, the erected rectangle is defined to have its area proportional to the frequency ...
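The binning and normalization steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `histogram_density`, the choice of equal-width bins spanning the data range, and the rule that the maximum value falls into the last bin are all assumptions made for the example. Here each bar height is divided by both the sample size and the bin width, so that the total bar area (rather than the sum of heights) equals 1, which is what makes the histogram usable as a density estimate.

```python
from typing import List, Sequence, Tuple


def histogram_density(
    data: Sequence[float], n_bins: int
) -> Tuple[List[float], List[float]]:
    """Return (bin_edges, densities) for equal-width bins over [min, max].

    Hypothetical helper: each density is count / (n * width), so the
    bar areas sum to 1.
    """
    if n_bins < 1 or len(data) == 0:
        raise ValueError("need at least one bin and one data point")
    lo, hi = min(data), max(data)
    if lo == hi:
        raise ValueError("all values are identical; bin width would be zero")

    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for x in data:
        # The maximum value would index one past the end, so clamp it
        # into the last bin (an assumed convention for this sketch).
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1

    n = len(data)
    densities = [c / (n * width) for c in counts]
    edges = [lo + i * width for i in range(n_bins + 1)]
    return edges, densities


if __name__ == "__main__":
    edges, dens = histogram_density([0.0, 1.0, 1.5, 2.0, 3.5, 4.0], n_bins=4)
    for left, right, d in zip(edges, edges[1:], dens):
        print(f"[{left:.1f}, {right:.1f}): {d:.3f}")
```

Dividing by the width only matters when the bin width differs from 1; with unit-width bins the densities coincide with the relative frequencies mentioned above.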

Mean Integrated Squared Error

In statistics, the mean integrated squared error (MISE) is used in density estimation. The MISE of an estimate of an unknown probability density is given by

$$\operatorname{E}\|f_n-f\|_2^2=\operatorname{E}\int (f_n(x)-f(x))^2 \, dx$$

where $f$ is the unknown density and $f_n$ is its estimate based on a sample of $n$ independent and identically distributed random variables. Here, E denotes the expected value with respect to that sample. The MISE is also known as the $L^2$ risk function.

See also: Minimum distance estimation; Mean squared error (in statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator measures the average of the squares of the errors ...)
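As a worked step, the MISE can be split into an integrated squared-bias term and an integrated variance term. The derivation below is a standard identity and is not taken from the text above; it reuses the symbols $f$, $f_n$ and E from the definition, and it assumes the squared error is integrable so that the expectation and the integral may be exchanged.

```latex
\begin{aligned}
\operatorname{MISE}(f_n)
  &= \operatorname{E}\int \bigl(f_n(x)-f(x)\bigr)^2 \, dx \\
  &= \int \operatorname{E}\bigl[(f_n(x)-f(x))^2\bigr] \, dx \\
  &= \int \bigl(\operatorname{E}[f_n(x)]-f(x)\bigr)^2 \, dx
   + \int \operatorname{Var}\bigl(f_n(x)\bigr) \, dx .
\end{aligned}
```

The last line follows from applying, at each fixed $x$, the pointwise identity $\operatorname{E}[(Y-c)^2] = (\operatorname{E}[Y]-c)^2 + \operatorname{Var}(Y)$ with $Y = f_n(x)$ and $c = f(x)$.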

Kernel Density Estimation

In statistics, kernel density estimation (KDE) is the application of kernel smoothing for probability density estimation, i.e., a non-parametric method to estimate the probability density function of a random variable based on kernels as weights. KDE answers a fundamental data smoothing problem where inferences about the population are made, based on a finite data sample. In some fields such as signal processing and econometrics it is also termed the Parzen–Rosenblatt window method, after Emanuel Parzen and Murray Rosenblatt, who are usually credited with independently creating it in its current form. One of the famous applications of kernel density estimation is in estimating the class-conditional marginal densities of data when using a naive Bayes classifier, which can improve its prediction accuracy.

Definition: Let $(x_1, x_2, \ldots, x_n)$ be independent and identically distributed samples drawn from some univariate distribution with an unknown density $f$ ...
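A minimal sketch of a kernel density estimator may help make the definition concrete. The passage above is cut off before it states the estimator, so the code assumes the standard form: the estimate at $x$ is the average of kernel bumps centred at the samples, $\frac{1}{nh}\sum_i K\bigl(\frac{x-x_i}{h}\bigr)$. The Gaussian kernel, the function name `gaussian_kde`, and the bandwidth value used in the demo are assumptions chosen for illustration, not something the source specifies.

```python
import math
from typing import Callable, Sequence


def gaussian_kde(
    samples: Sequence[float], bandwidth: float
) -> Callable[[float], float]:
    """Build a density estimate from samples using a Gaussian kernel.

    Hypothetical helper: returns a function x -> estimated density at x.
    """
    if len(samples) == 0:
        raise ValueError("need at least one sample")
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")

    n = len(samples)
    # 1 / (n * h) from the estimator times 1 / sqrt(2 * pi) from the kernel.
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x: float) -> float:
        total = 0.0
        for xi in samples:
            u = (x - xi) / bandwidth
            total += math.exp(-0.5 * u * u)
        return norm * total

    return density


if __name__ == "__main__":
    f_hat = gaussian_kde([-1.0, 0.0, 2.0], bandwidth=0.5)
    for x in (-1.0, 0.0, 1.0, 2.0):
        print(f"f_hat({x:+.1f}) = {f_hat(x):.4f}")
```

The bandwidth controls the amount of smoothing: small values produce a spiky estimate that follows individual samples, while large values blur genuine structure. Choosing it well is a separate problem that this sketch does not address.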

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction: A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by $\Omega$, is the set of all possible outcomes of a random phe ...

Hydrology

Hydrology is the scientific study of the movement, distribution, and management of water on Earth and other planets, including the water cycle, water resources, and environmental watershed sustainability. A practitioner of hydrology is called a hydrologist. Hydrologists are scientists studying earth or environmental science, civil or environmental engineering, and physical geography. Using various analytical methods and scientific techniques, they collect and analyze data to help solve water related problems such as environmental preservation, natural disasters, and water management. Hydrology subdivides into surface water hydrology, groundwater hydrology (hydrogeology), and marine hydrology. Domains of hydrology include hydrometeorology, surface hydrology, hydrogeology, drainage-basin management, and water quality, where water plays the central role. Oceanography and meteorology are not included because water is only one of many important aspects within those fields. H ...

Novelty Detection

Novelty detection is the mechanism by which an intelligent organism is able to identify an incoming sensory pattern as being hitherto unknown. If the pattern is sufficiently salient or associated with a high positive or strong negative utility, it will be given computational resources for effective future processing. The principle has long been known in neurophysiology, with roots in the orienting response research by E. N. Sokolov in the 1950s (Sokolov, E. N. (1960). Neuronal models and the orienting reflex. In The Central Nervous System and Behavior, Mary A. B. Brazier, ed. NY: Josiah Macy, Jr. Foundation, pp. 187–276). The reverse phenomenon is habituation, i.e., the phenomenon that known patterns yield a less marked response. Early neural modeling attempts were by Yehuda Salu (Salu, Y. (1988). Models of neural novelty detectors, with similarities to cerebral cortex. BioSystems, 21, pp. 99–113, Elsevier). An increasing body of knowledge has been collected concerning the corresponding m ...

Anomaly Detection

In data analysis, anomaly detection (also referred to as outlier detection and sometimes as novelty detection) is generally understood to be the identification of rare items, events or observations which deviate significantly from the majority of the data and do not conform to a well-defined notion of normal behaviour. Such examples may arouse suspicions of being generated by a different mechanism, or appear inconsistent with the remainder of that set of data. Anomaly detection finds application in many domains including cyber security, medicine, machine vision, statistics, neuroscience, law enforcement and financial fraud, to name only a few. Anomalies were initially sought so that they could be clearly rejected or omitted from the data to aid statistical analysis, for example when computing the mean or standard deviation. They were also removed to improve predictions from models such as linear regression, and more recently their removal has aided the performance of machine learning algorithms. However, ...
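One simple way to flag observations that "deviate significantly from the majority of the data" is a z-score rule: mark any value lying more than a chosen number of sample standard deviations from the sample mean. The passage above does not prescribe this method; the sketch below is only one illustrative choice, and the function name `zscore_outliers` and the default threshold of 3.0 are assumptions.

```python
import statistics
from typing import List, Sequence


def zscore_outliers(
    values: Sequence[float], threshold: float = 3.0
) -> List[float]:
    """Return the values whose absolute z-score exceeds the threshold.

    Hypothetical helper using the sample mean and sample standard
    deviation; requires at least two values.
    """
    mean = statistics.mean(values)
    std = statistics.stdev(values)
    if std == 0:
        # All values are identical, so nothing deviates from the rest.
        return []
    return [v for v in values if abs(v - mean) / std > threshold]


if __name__ == "__main__":
    readings = [10, 11, 9, 10, 12, 10, 11, 50]
    print(zscore_outliers(readings, threshold=2.0))
```

A known weakness of this rule is that extreme values inflate the very mean and standard deviation used to judge them, so in small samples a strict threshold can fail to flag an obvious outlier. Robust alternatives based on the median are often preferred for that reason.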

Gumbel Distribution

Gumbel or Gumble is a surname. Notable people with the surname include:

* Bryant Gumbel (born 1948), American television sportscaster, brother of Greg
* David Heinz Gumbel (1906–1992), Israeli designer and silversmith
* Emil Julius Gumbel (1891–1966), German mathematician, pacifist and anti-Nazi campaigner; creator of the Gumbel distribution
* Greg Gumbel (born 1946), American television sportscaster, brother of Bryant
* Nicky Gumbel (born 1955), Anglican priest and author
* Thomas Gumble (died 1676), English biographer
* Wilhelm Theodor Gumbel (1812–1858), German bryologist
* Wilhelm von Gumbel (1823–1898), German geologist

Fictional:

* Barney Gumble, a fictional character from The Simpsons, an American animated sitcom created by Matt Groening for the Fox Broadcasting Company

Bayes' Rule

In probability theory and statistics, Bayes' theorem (alternatively Bayes' law or Bayes' rule), named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately (by conditioning it on their age) than simply assuming that the individual is typical of the population as a whole. One of the many applications of Bayes' theorem is Bayesian inference, a particular approach to statistical inference. When applied, the probabilities involved in the theorem may have different probability interpretations. With Bayesian probability interpretation, the theorem expresses how a degree of belief, expressed as a probability, should rationally change to account for the availability of related evidence. Bayesian inference is fundamental to Bayesi ...
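The update that Bayes' theorem describes can be sketched for the simplest case of a yes/no hypothesis $H$ and a single piece of evidence $E$, using $P(H \mid E) = P(E \mid H)\,P(H) / P(E)$ with $P(E)$ expanded over $H$ and its complement. The function name `bayes_posterior`, its parameter names, and the numbers in the demo are hypothetical values chosen for illustration, not data from the text.

```python
def bayes_posterior(
    prior: float, likelihood: float, likelihood_given_not: float
) -> float:
    """Return P(H | E) for a binary hypothesis H.

    prior                -- P(H), belief before seeing the evidence
    likelihood           -- P(E | H)
    likelihood_given_not -- P(E | not H)
    """
    for p in (prior, likelihood, likelihood_given_not):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")

    # Law of total probability: P(E) = P(E|H) P(H) + P(E|not H) P(not H).
    evidence = likelihood * prior + likelihood_given_not * (1.0 - prior)
    if evidence == 0.0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return likelihood * prior / evidence


if __name__ == "__main__":
    # Made-up numbers: a condition with 1% prevalence, and an indicator
    # seen in 90% of affected and 5% of unaffected individuals.
    print(f"{bayes_posterior(0.01, 0.9, 0.05):.4f}")
```

With a low prior, the posterior stays modest even when the evidence is much more likely under the hypothesis than under its complement, which is the point of conditioning on what is already known about the individual.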

Mean

There are several kinds of mean in mathematics, especially in statistics. Each mean serves to summarize a given group of data, often to better understand the overall value (magnitude and sign) of a given data set. For a data set, the arithmetic mean, also known as the "arithmetic average", is a measure of central tendency of a finite set of numbers: specifically, the sum of the values divided by the number of values. The arithmetic mean of a set of numbers $x_1, x_2, \ldots, x_n$ is typically denoted using an overhead bar, $\bar{x}$. If the data set is based on a series of observations obtained by sampling from a statistical population, the arithmetic mean is called the sample mean ($\bar{x}$) to distinguish it from the mean, or expected value, of the underlying distribution, the population mean (denoted $\mu$ or $\mu_x$) (Underhill, L.G.; Bradfield, D. (1998). Introstat, Juta and Company Ltd., p. 181). Outside probability and statistics, a wide range of other notions of mean are o ...