Probabilistic Relevance Model (BM25)

The probabilistic relevance model was devised by Stephen E. Robertson and Karen Spärck Jones as a framework for probabilistic models to come. It is a formalism of information retrieval used to derive the ranking functions with which search engines rank matching documents according to their relevance to a given search query. It is a theoretical model estimating the probability that a document ''dj'' is relevant to a query ''q''. The model assumes that this probability of relevance depends on the query and document representations. Furthermore, it assumes that there is a portion of all documents that is preferred by the user as the answer set for query ''q''. Such an ideal answer set is called ''R'' ...
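The excerpt above names BM25 but does not give its formula. As a minimal sketch, assuming the commonly cited Okapi BM25 form with a non-negative IDF variant, the scoring could look like the following. The function name `bm25_scores`, the toy documents, and the parameter defaults `k1=1.5` and `b=0.75` are illustrative assumptions, not taken from the source.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized document against the query (Okapi BM25 sketch).

    k1 and b are conventional tuning values, assumed here for illustration.
    """
    if not docs:
        return []
    n_docs = len(docs)
    # Average document length; fall back to 1.0 if every document is empty.
    avg_len = sum(len(d) for d in docs) / n_docs or 1.0
    # Document frequency: number of documents that contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # IDF variant with +1 inside the log, so it is never negative.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Hypothetical toy collection, tokenized by whitespace.
docs = [
    "probabilistic relevance model for retrieval".split(),
    "statistical model of sample data".split(),
    "ranking documents by relevance to a query".split(),
]
query = "relevance model".split()
scores = bm25_scores(query, docs)
```

Here the first document matches both query terms and so scores highest. Of the two single-term matches, the shorter document scores higher because of the length normalization.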

Stephen Robertson (Computer Scientist)

Stephen Robertson is a British computer scientist. He is known for his work with Karen Spärck Jones on probabilistic information retrieval and for the Okapi BM25 weighting model. Early life: Robertson was born in London, England, to (Theodosia) Cecil, ''née'' Spring Rice (1921–1984) and Martin Robertson (1911–2004), an internationally distinguished professor of Classical Greek Art and Archaeology at the University of London, Oxford University, and Trinity College, Cambridge. His younger brother is composer and musician Thomas Dolby. Career: Okapi BM25 has been very successful in experimental search evaluations and has found its way into many information retrieval systems and products, including open source search systems like Lucene, Lemur, Xapian, and Terrier. BM25 is used as one of the most important signals in large web search engines, certainly in Microsoft Bing, and probably in other web search engines too. BM25 is also used in various other Microsoft products such as Mic ...

Karen Spärck Jones

Karen Ida Boalth Spärck Jones (26 August 1935 – 4 April 2007) was a self-taught programmer and a pioneering British computer and information scientist responsible for the concept of inverse document frequency (IDF), a technology that underlies most modern search engines. She was an advocate for women in computer science, her slogan being, "Computing is too important to be left to men." In 2019, ''The New York Times'' published her belated obituary in its series ''Overlooked'', calling her "a pioneer of computer science for work combining statistics and linguistics, and an advocate for women in the field." Since 2008, to recognise her achievements in the fields of information retrieval (IR) and natural language processing (NLP), the Karen Spärck Jones Award has been awarded annually to a recipient for outstanding research in one or both of her fields. Early life and education: Karen Ida Boalth Spärck Jones was born in Huddersfield, Yorkshire, England. Her parents were Alfred Owe ...

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. When referring specifically to probabilities, the corresponding term is probabilistic model. All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen). Introduction: Informally, a statistical model can be thought of as a statistical assumption (or set of statistical assumptions) with a certain property: that the assumption allows us ...

Information Retrieval

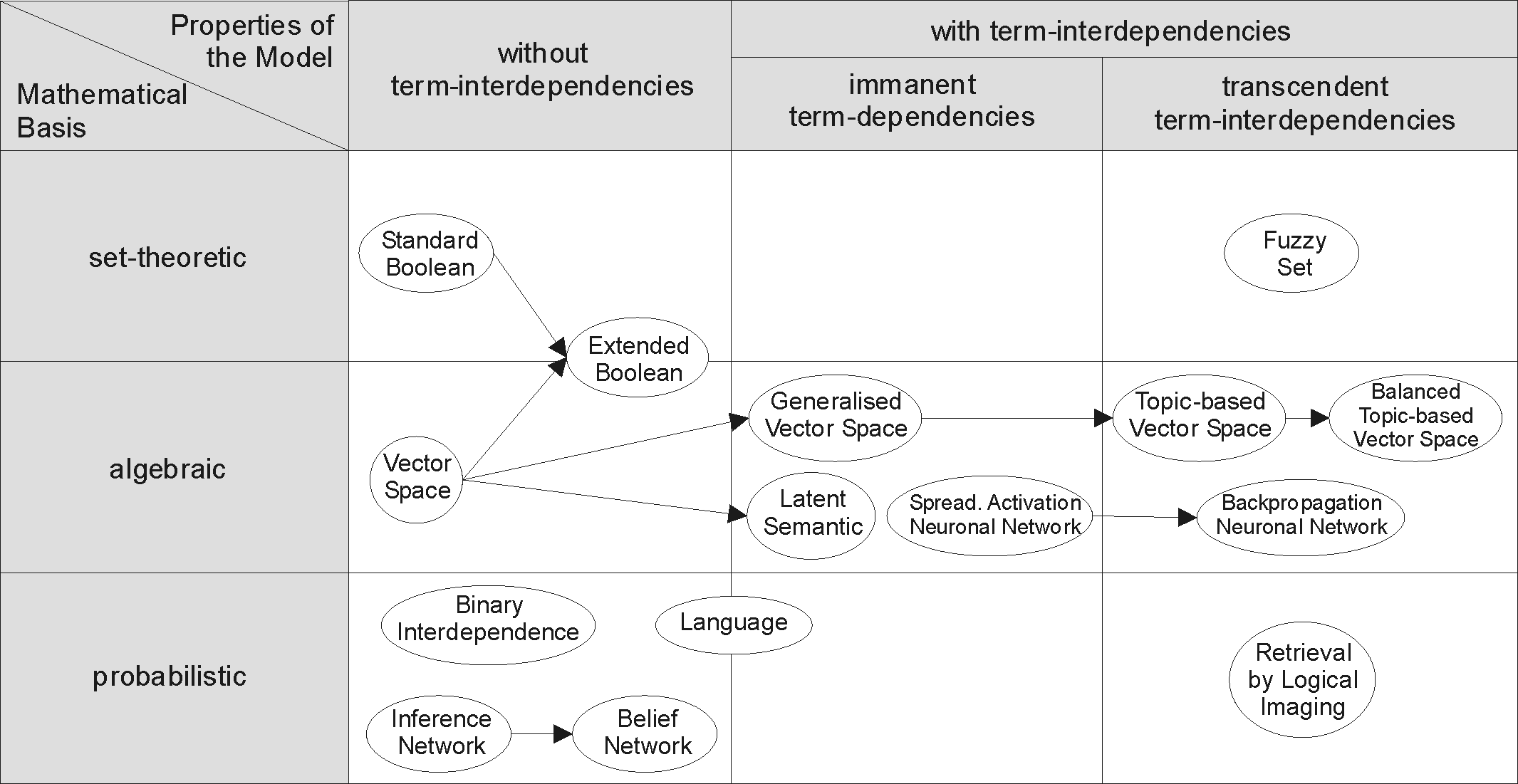

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications. Overview: An information retrieval process begins when a user enters a query into the sys ...

Ranking Function

Ranking query results is one of the fundamental problems in information retrieval (IR), the scientific/engineering discipline behind search engines. Given a query and a collection of documents that match the query, the problem is to rank, that is, sort, the documents according to some criterion so that the "best" results appear early in the result list displayed to the user. Ranking in terms of information retrieval is an important concept in computer science and is used in many different applications such as search engine queries and recommender systems. A majority of search engines use ranking algorithms to provide users with accurate and relevant results. History: The notion of page rank dates back to the 1940s and the idea originated in the field of economics. In 1941, Wassily Leontief developed an iterative method of valuing a country's sector based on the importance of other sectors that supplied resources to it. In 1965, Charles H Hubbell at the University of California, San ...
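The ranking step described above, sorting matching documents by some criterion so that the best come first, can be sketched in a few lines. This is only an illustration. The helper names `rank` and `term_overlap`, the toy documents, and the choice of raw term counts as the criterion are all assumptions, and real engines use far richer scoring.

```python
def term_overlap(query_terms, text):
    """Toy criterion: total occurrences of the query terms in the text."""
    words = text.lower().split()
    return sum(words.count(term) for term in query_terms)


def rank(query_terms, documents, score=term_overlap):
    """Return document ids sorted so the highest-scoring come first.

    Python's sort is stable, so tied documents keep their input order.
    """
    scored = [(score(query_terms, text), doc_id)
              for doc_id, text in documents.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored]


# Hypothetical documents keyed by id.
documents = {
    "a": "search engines rank documents",
    "b": "ranking documents for a search query in search engines",
    "c": "statistical inference",
}
ranked = rank(["search", "documents"], documents)
```

Because the criterion is passed in as a function, the same sorting step works with any scoring function that takes the query terms and a document's text.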