|

Primitive Types

In computer science, primitive data types are a set of basic data types from which all other data types are constructed. Specifically it often refers to the limited set of data representations in use by a particular processor, which all compiled programs must use. Most processors support a similar set of primitive data types, although the specific representations vary. More generally, ''primitive data types'' may refer to the standard data types built into a programming language (built-in types). Data types which are not primitive are referred to as ''derived'' or ''composite''. Primitive types are almost always value types, but composite types may also be value types. Common primitive data types The most common primitive types are those used and supported by computer hardware, such as integers of various sizes, floating-point numbers, and Boolean logical values. Operations on such types are usually quite efficient. Primitive data types which are native to the processor have ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, applied disciplines (including the design and implementation of Computer architecture, hardware and Software engineering, software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of computational problem, problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics (computer science), Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Octet (computing)

The octet is a unit of digital information in computing and telecommunications that consists of eight bits. The term is often used when the term '' byte'' might be ambiguous, as the byte has historically been used for storage units of a variety of sizes. The term ''octad(e)'' for eight bits is no longer common. Definition The international standard IEC 60027-2, chapter 3.8.2, states that a byte is an octet of bits. However, the unit byte has historically been platform-dependent and has represented various storage sizes in the history of computing. Due to the influence of several major computer architectures and product lines, the byte became overwhelmingly associated with eight bits. This meaning of ''byte'' is codified in such standards as ISO/IEC 80000-13. While ''byte'' and ''octet'' are often used synonymously, those working with certain legacy systems are careful to avoid ambiguity. Octets can be represented using number systems of varying bases such as ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Single-precision Floating-point Format

Single-precision floating-point format (sometimes called FP32 or float32) is a computer number format, usually occupying 32 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. A floating-point variable can represent a wider range of numbers than a fixed-point variable of the same bit width at the cost of precision. A signed 32-bit integer variable has a maximum value of 231 − 1 = 2,147,483,647, whereas an IEEE 754 32-bit base-2 floating-point variable has a maximum value of (2 − 2−23) × 2127 ≈ 3.4028235 × 1038. All integers with seven or fewer decimal digits, and any 2''n'' for a whole number −149 ≤ ''n'' ≤ 127, can be converted exactly into an IEEE 754 single-precision floating-point value. In the IEEE 754 standard, the 32-bit base-2 format is officially referred to as binary32; it was called single in IEEE 754-1985. IEEE 754 specifies additional floating-point types, such as 64-bit base-2 ''doubl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Real Number

In mathematics, a real number is a number that can be used to measure a continuous one- dimensional quantity such as a duration or temperature. Here, ''continuous'' means that pairs of values can have arbitrarily small differences. Every real number can be almost uniquely represented by an infinite decimal expansion. The real numbers are fundamental in calculus (and in many other branches of mathematics), in particular by their role in the classical definitions of limits, continuity and derivatives. The set of real numbers, sometimes called "the reals", is traditionally denoted by a bold , often using blackboard bold, . The adjective ''real'', used in the 17th century by René Descartes, distinguishes real numbers from imaginary numbers such as the square roots of . The real numbers include the rational numbers, such as the integer and the fraction . The rest of the real numbers are called irrational numbers. Some irrational numbers (as well as all the rationals) a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

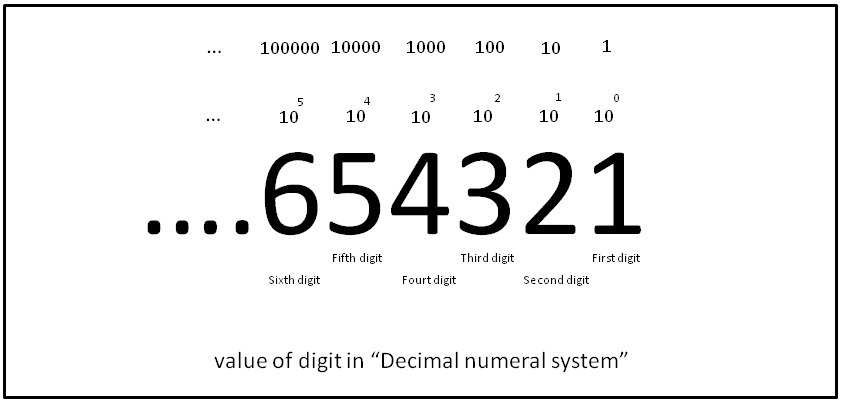

Decimal

The decimal numeral system (also called the base-ten positional numeral system and denary or decanary) is the standard system for denoting integer and non-integer numbers. It is the extension to non-integer numbers (''decimal fractions'') of the Hindu–Arabic numeral system. The way of denoting numbers in the decimal system is often referred to as ''decimal notation''. A decimal numeral (also often just ''decimal'' or, less correctly, ''decimal number''), refers generally to the notation of a number in the decimal numeral system. Decimals may sometimes be identified by a decimal separator (usually "." or "," as in or ). ''Decimal'' may also refer specifically to the digits after the decimal separator, such as in " is the approximation of to ''two decimals''". Zero-digits after a decimal separator serve the purpose of signifying the precision of a value. The numbers that may be represented in the decimal system are the decimal fractions. That is, fractions of the form , w ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Binary Numeral System

A binary number is a number expressed in the base-2 numeral system or binary numeral system, a method for representing numbers that uses only two symbols for the natural numbers: typically "0" ( zero) and "1" ( one). A ''binary number'' may also refer to a rational number that has a finite representation in the binary numeral system, that is, the quotient of an integer by a power of two. The base-2 numeral system is a positional notation with a radix of 2. Each digit is referred to as a bit, or binary digit. Because of its straightforward implementation in digital electronic circuitry using logic gates, the binary system is used by almost all modern computers and computer-based devices, as a preferred system of use, over various other human techniques of communication, because of the simplicity of the language and the noise immunity in physical implementation. History The modern binary number system was studied in Europe in the 16th and 17th centuries by Thomas Harrio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Scientific Notation

Scientific notation is a way of expressing numbers that are too large or too small to be conveniently written in decimal form, since to do so would require writing out an inconveniently long string of digits. It may be referred to as scientific form or standard index form, or standard form in the United Kingdom. This base ten notation is commonly used by scientists, mathematicians, and engineers, in part because it can simplify certain arithmetic operations. On scientific calculators, it is usually known as "SCI" display mode. In scientific notation, nonzero numbers are written in the form or ''m'' times ten raised to the power of ''n'', where ''n'' is an integer, and the coefficient ''m'' is a nonzero real number (usually between 1 and 10 in absolute value, and nearly always written as a terminating decimal). The integer ''n'' is called the exponent and the real number ''m'' is called the '' significand'' or ''mantissa''. The term "mantissa" can be ambiguous where loga ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Rational Number

In mathematics, a rational number is a number that can be expressed as the quotient or fraction of two integers, a numerator and a non-zero denominator . For example, is a rational number, as is every integer (for example, The set of all rational numbers is often referred to as "the rationals", and is closed under addition, subtraction, multiplication, and division by a nonzero rational number. It is a field under these operations and therefore also called the field of rationals or the field of rational numbers. It is usually denoted by boldface , or blackboard bold A rational number is a real number. The real numbers that are rational are those whose decimal expansion either terminates after a finite number of digits (example: ), or eventually begins to repeat the same finite sequence of digits over and over (example: ). This statement is true not only in base 10, but also in every other integer base, such as the binary and hexadecimal ones (see ). A real n ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Floating-point

In computing, floating-point arithmetic (FP) is arithmetic on subsets of real numbers formed by a ''significand'' (a Sign (mathematics), signed sequence of a fixed number of digits in some Radix, base) multiplied by an integer power of that base. Numbers of this form are called floating-point numbers. For example, the number 2469/200 is a floating-point number in base ten with five digits: 2469/200 = 12.345 = \! \underbrace_\text \! \times \! \underbrace_\text\!\!\!\!\!\!\!\overbrace^ However, 7716/625 = 12.3456 is not a floating-point number in base ten with five digits—it needs six digits. The nearest floating-point number with only five digits is 12.346. And 1/3 = 0.3333… is not a floating-point number in base ten with any finite number of digits. In practice, most floating-point systems use Binary number, base two, though base ten (decimal floating point) is also common. Floating-point arithmetic operations, such as addition and division, approximate the correspond ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

X86-64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set architecture, instruction set. It was announced in 1999 and first available in the AMD Opteron family in 2003. It introduces two new operating modes: 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit computing, 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit Processor register, vector registers (XMM registers). Each of these vector registers ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

IA-32

IA-32 (short for "Intel Architecture, 32-bit", commonly called ''i386'') is the 32-bit version of the x86 instruction set architecture, designed by Intel and first implemented in the i386, 80386 microprocessor in 1985. IA-32 is the first incarnation of x86 that supports 32-bit computing; as a result, the "IA-32" term may be used as a Metonymy, metonym to refer to all x86 versions that support 32-bit computing. Within various programming language directives, IA-32 is still sometimes referred to as the "i386" architecture. In some other contexts, certain iterations of the IA-32 ISA are sometimes labelled ''i486'', ''i586'' and ''i686'', referring to the instruction supersets offered by the i486, 80486, the P5 (microarchitecture), P5 and the P6 (microarchitecture), P6 microarchitectures respectively. These updates offered numerous additions alongside the base IA-32 set including X87, floating-point capabilities and the MMX (instruction set), MMX extensions. Intel was historically ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pointer (computer Programming)

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer ''references'' a location in memory, and obtaining the value stored at that location is known as ''dereferencing'' the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture. Using pointers significantly improves performance for repetitive operations, like traversing iterable data structures (e.g. strings, lookup tables, control tables, linked lists, and tree structures). In particular, it is often much cheaper in time and space to copy and deref ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |