
Prefetching

Prefetching in computer science is a technique for speeding up fetch operations by beginning a fetch operation whose result is expected to be needed soon. Usually the fetch starts before its result is known to be needed, so there is a risk of wasting time by prefetching data that will not be used. The technique can be applied in several circumstances:

* Cache prefetching, a speedup technique used by computer processors where instructions or data are fetched before they are needed
* Prefetch input queue (PIQ), in computer architecture, pre-loading machine code from memory
* Link prefetching, a web mechanism for prefetching links
* Prefetcher technology in modern releases of Microsoft Windows
* Prefetch instructions, for example provided by
** PREFETCH, an x86 instruction
* Prefetch buffer, in synchronous dynamic random-access memory (SDRAM)

Cache Prefetching

Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory to a faster local memory before they are actually needed (hence the term "prefetch"). Most modern computer processors have fast, local cache memory in which prefetched data is held until it is required. The source for the prefetch operation is usually main memory. Because of their design, cache memories are typically much faster to access than main memory, so reading prefetched data from the cache can be one to two orders of magnitude faster than reading it directly from main memory. Prefetching can be done with non-blocking cache control instructions.

Data vs. instruction cache prefetching

Cache prefetching can fetch either data or instructions into cache.

* Data prefetching fetches data before it is needed. Because data access patterns show less regularity than instruction patterns, accura ...
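As a rough illustration of why prefetching helps on regular access patterns, the sketch below simulates a tiny cache with an optional next-line prefetcher and counts hits and misses during a sequential scan. The class name `TinyCache`, the 64-byte line size, the eight-line capacity, and the LRU replacement policy are all assumptions chosen for the example; real hardware prefetchers are considerably more elaborate.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed for illustration)


class TinyCache:
    """Fully associative LRU cache of line numbers with optional next-line prefetch."""

    def __init__(self, capacity_lines, prefetch_next_line):
        self.capacity = capacity_lines
        self.prefetch = prefetch_next_line
        self.lines = OrderedDict()  # line number -> None, ordered by recency
        self.hits = 0
        self.misses = 0

    def _install(self, line):
        # Bring a line into the cache, evicting the least recently used if full.
        self.lines[line] = None
        self.lines.move_to_end(line)
        while len(self.lines) > self.capacity:
            self.lines.popitem(last=False)

    def access(self, address):
        line = address // LINE_SIZE
        if line in self.lines:
            self.hits += 1
            self.lines.move_to_end(line)
        else:
            self.misses += 1
            self._install(line)
        if self.prefetch and line + 1 not in self.lines:
            # Speculatively fetch the following line before it is requested.
            self._install(line + 1)


def sequential_scan(prefetch):
    """Read 32 consecutive lines in 8-byte steps; return (hits, misses)."""
    cache = TinyCache(capacity_lines=8, prefetch_next_line=prefetch)
    for address in range(0, LINE_SIZE * 32, 8):
        cache.access(address)
    return cache.hits, cache.misses


print("without prefetch:", sequential_scan(False))
print("with prefetch:   ", sequential_scan(True))
```

In this model the scan misses once per line without prefetching and only once overall with it. The final prefetch (the line past the end of the scan) is never used, which is the kind of wasted work that speculative fetching risks.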

Link Prefetching

Link prefetching allows web browsers to pre-load resources. This speeds up both the loading and rendering of web pages. Prefetching was first introduced in HTML5. Prefetching is accomplished through hints in web pages. These hints are used by the browser to prefetch links. Resources which can be prefetched include JavaScript, CSS, images, audio, video, and web fonts. DNS names and TCP connections can also be hinted for prefetching.

Prefetching in HTML5

There are two W3C standards covering prefetching for HTML5:

* Link preload
** Hints to specific URLs. Common hints include JavaScript, CSS, images and web fonts.
* Resource hints
** Hints to the browser. Common hints include DNS queries, opening TCP connections, and page pre-rendering.

HTML5 methods for prefetch hints:

* Standard link prefetch (supported by most browsers):
* DNS prefetch (Mozilla Firefox, Google Chrome, and others):
* Page pre-rendering (Google Chrome, Internet Explorer and others):
* Lazy-load of images (Intern ...
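Hints of this kind are written as `<link>` elements whose `rel` attribute names the hint. The sketch below builds such elements as strings; the helper name `link_hint` and the example URLs are hypothetical, and the set of `rel` values is limited to the widely documented ones (`prefetch`, `preload`, `dns-prefetch`, `preconnect`, `prerender`). Browser support for each hint varies.

```python
from html import escape

# rel values commonly recognised as browser hints.
HINT_RELS = {"prefetch", "preload", "dns-prefetch", "preconnect", "prerender"}


def link_hint(rel, href, as_type=None):
    """Return a <link> element for the given hint (illustrative helper)."""
    if rel not in HINT_RELS:
        raise ValueError(f"unsupported hint type: {rel}")
    attrs = [f'rel="{rel}"', f'href="{escape(href, quote=True)}"']
    if as_type is not None:
        # "as" tells the browser what kind of resource a preload refers to.
        attrs.append(f'as="{escape(as_type, quote=True)}"')
    return "<link " + " ".join(attrs) + ">"


# Hypothetical URLs, for illustration only.
print(link_hint("prefetch", "/page2.html"))
print(link_hint("dns-prefetch", "//example.com"))
print(link_hint("prerender", "/next-article.html"))
print(link_hint("preload", "/css/site.css", as_type="style"))
```

The resulting elements would normally be placed in the `<head>` of a page. They are hints only: a browser is free to ignore them.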

Prefetch Input Queue

Fetching instruction opcodes from program memory well in advance is known as prefetching, and it is implemented with a prefetch input queue (PIQ). The prefetched instructions are stored in a queue data structure. Fetching opcodes well before they are needed for execution increases the overall efficiency of the processor and boosts its speed, because the processor no longer has to wait for the memory access for the next instruction opcode to complete. This architecture was prominently used in the Intel 8086 microprocessor.

Introduction

Pipelining was brought to the forefront of computing architecture design during the 1960s due to the need for faster and more efficient computing. Pipelining is the broader concept, and most modern processors load their instructions some clock cycles before they execute them. This is achieved by pre-loading machine code from memory into a prefetch input queue. This behavior only applies to von Neumann computer ...
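The queue behaviour can be sketched with a small simulation. Everything here is a simplification made for illustration: the class `PrefetchInputQueue`, the toy opcode strings, and the `run` driver are hypothetical, and real hardware fills the queue concurrently with execution rather than in a loop. The sketch also shows a cost of prefetching: a taken jump invalidates whatever was fetched past it.

```python
from collections import deque


class PrefetchInputQueue:
    """Toy model of a prefetch input queue feeding an execution unit."""

    def __init__(self, memory, depth=6):
        self.memory = memory      # program memory: a list of opcode strings
        self.depth = depth        # queue capacity (the 8086 queue held six bytes)
        self.queue = deque()
        self.fetch_addr = 0       # next memory address to prefetch from
        self.memory_reads = 0     # total opcodes fetched from memory
        self.discarded = 0        # prefetched opcodes thrown away by jumps

    def fill(self):
        # Fetch ahead until the queue is full or memory is exhausted.
        while len(self.queue) < self.depth and self.fetch_addr < len(self.memory):
            self.queue.append(self.memory[self.fetch_addr])
            self.fetch_addr += 1
            self.memory_reads += 1

    def jump(self, target):
        # A taken jump makes the sequentially prefetched opcodes useless.
        self.discarded += len(self.queue)
        self.queue.clear()
        self.fetch_addr = target


def run(memory, depth=6):
    """Execute until HLT; return (executed opcodes, memory reads, discarded)."""
    piq = PrefetchInputQueue(memory, depth)
    executed = []
    while True:
        piq.fill()
        if not piq.queue:
            break
        op = piq.queue.popleft()
        executed.append(op)
        if op.startswith("JMP "):
            piq.jump(int(op.split()[1]))
        elif op == "HLT":
            break
    return executed, piq.memory_reads, piq.discarded


program = ["MOV", "ADD", "JMP 5", "NOP", "NOP", "SUB", "HLT"]
print(run(program, depth=4))
```

For straight-line code every prefetched opcode is eventually executed. In the toy program above, the jump forces the queue to be flushed and refilled from the jump target.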

Prefetcher

The Prefetcher is a component of Microsoft Windows, introduced in Windows XP. It is part of the Memory Manager and can speed up the Windows boot process and shorten the time it takes to start programs. It accomplishes this by caching the files an application needs in RAM as the application is launched, thus consolidating disk reads and reducing disk seeks. This feature was covered by US patent 6,633,968. Since Windows Vista, the Prefetcher has been extended by SuperFetch and ReadyBoost. SuperFetch attempts to accelerate application launch times by monitoring and adapting to application usage patterns over time, and by caching the majority of the files and data the applications need in memory in advance so that they can be accessed very quickly when needed. ReadyBoost (when enabled) uses external memory such as a USB flash drive to extend the system cache beyond the amount of RAM installed in the computer. ReadyBoost also has a component calle ...

Cache Control Instruction

In computing, a cache control instruction is a hint embedded in the instruction stream of a processor intended to improve the performance of hardware caches, using foreknowledge of the memory access pattern supplied by the programmer or compiler. Such instructions may reduce cache pollution, reduce bandwidth requirements, and bypass latencies by providing better control over the working set. Most cache control instructions do not affect the semantics of a program, although some can.

Examples

Several such instructions, with variants, are supported by several processor instruction set architectures, such as ARM, MIPS, PowerPC, and x86.

Prefetch

Also termed data cache block touch, the effect is to request loading the cache line associated with a given address. This is performed by the PREFETCH instruction in the x86 instruction set. Some variants bypass higher levels of the cache hierarchy, which is useful in a "streaming" context for data that is traversed once, rather than held in the ...

X86 Instruction Listings

The x86 instruction set refers to the set of instructions that x86-compatible microprocessors support. The instructions are usually part of an executable program, often stored as a computer file and executed on the processor. The x86 instruction set has been extended several times, introducing wider registers and datatypes as well as new functionality.

x86 integer instructions

Below is the full 8086/8088 instruction set of Intel (81 instructions total). Most if not all of these instructions are available in 32-bit mode; they just operate on 32-bit registers (eax, ebx, etc.) and values instead of their 16-bit (ax, bx, etc.) counterparts. The updated instruction set is also grouped according to architecture (i386, i486, i686) and more generally is referred to as (32-bit) x86 and (64-bit) x86-64 (also known as AMD64).

Original 8086/8088 instructions

Added in specific Intel processors

Added with 80186/80188

Added with 80286

Added with 80386

Compared to ea ...

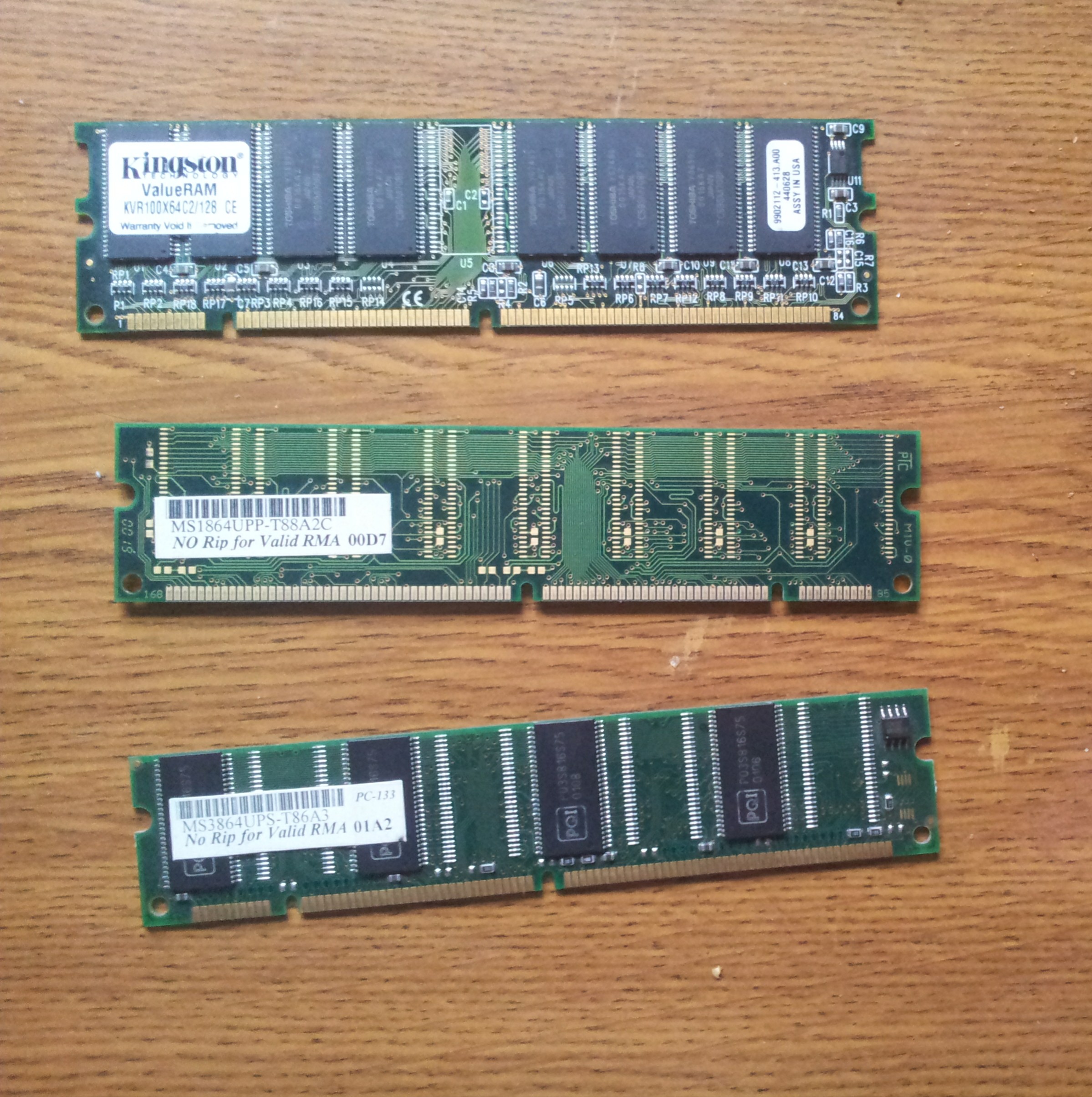

Prefetch Buffer

Synchronous dynamic random-access memory (synchronous dynamic RAM or SDRAM) is any DRAM where the operation of its external pin interface is coordinated by an externally supplied clock signal. DRAM integrated circuits (ICs) produced from the early 1970s to early 1990s used an asynchronous interface, in which input control signals have a direct effect on internal functions, delayed only by the trip across its semiconductor pathways. SDRAM has a synchronous interface, whereby changes on control inputs are recognised after a rising edge of its clock input. In SDRAM families standardized by JEDEC, the clock signal controls the stepping of an internal finite-state machine that responds to incoming commands. These commands can be pipelined to improve performance, with previously started operations completing while new commands are received. The memory is divided into several equally sized but independent sections called banks, allowing the device t ...

Paging

In computer operating systems, memory paging is a memory management scheme by which a computer stores and retrieves data from secondary storage for use in main memory. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory. For simplicity, main memory is called "RAM" (an acronym of random-access memory) and secondary storage is called "disk" (a shorthand for hard disk drive, drum memory or solid-state drive, etc.), but as with many aspects of computing, the concepts are independent of the technology used. Depending on the memory model, paged memory functionality is usually hardwired into a CPU/MCU by using a Memory Management Unit (MMU) or Memory Protection Unit (MPU) and separately enabled by privileged system code in the operating system's ke ...
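A minimal sketch of the scheme follows, assuming 4096-byte pages and a first-in, first-out replacement policy; both are choices made for the example, not something the text above specifies. The names `split_address` and `PagedMemory` are hypothetical. An address is split into a page number and an offset, and a page is copied from "disk" into "RAM" only when it is first touched.

```python
PAGE_SIZE = 4096  # bytes per page (assumed for illustration)


def split_address(vaddr):
    """Split a virtual address into (page number, offset within the page)."""
    return divmod(vaddr, PAGE_SIZE)


class PagedMemory:
    """Toy demand-paged memory: pages are loaded from disk on first access."""

    def __init__(self, disk, ram_frames):
        self.disk = disk              # page number -> page contents (bytes)
        self.ram_frames = ram_frames  # how many pages fit in RAM at once
        self.ram = {}                 # resident pages, in load order
        self.page_faults = 0

    def read_byte(self, vaddr):
        page, offset = split_address(vaddr)
        if page not in self.ram:
            # Page fault: the page must be brought in from secondary storage.
            self.page_faults += 1
            if len(self.ram) >= self.ram_frames:
                oldest = next(iter(self.ram))  # FIFO: evict the earliest loaded page
                del self.ram[oldest]
            self.ram[page] = self.disk[page]
        return self.ram[page][offset]


# Four pages on disk, each filled with its own page number; room for two in RAM.
disk = {n: bytes([n]) * PAGE_SIZE for n in range(4)}
memory = PagedMemory(disk, ram_frames=2)
for address in (0, PAGE_SIZE + 5, 10, 2 * PAGE_SIZE, 0):
    print(address, split_address(address), memory.read_byte(address))
print("page faults:", memory.page_faults)
```

Because the program addresses four pages while only two fit in RAM, some accesses fault even though the page was loaded earlier. This is the mechanism that lets programs exceed the size of physical memory at the cost of extra transfers.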