Postselection

In probability theory, to postselect is to condition a probability space upon the occurrence of a given event. In symbols, once we postselect for an event E, the probability of some other event F changes from $\operatorname{Pr}[F]$ to the conditional probability $\operatorname{Pr}[F \mid E]$. For a discrete probability space, $\operatorname{Pr}[F \mid E] = \frac{\operatorname{Pr}[F \cap E]}{\operatorname{Pr}[E]}$, and thus we require that $\operatorname{Pr}[E]$ be strictly positive in order for the postselection to be well-defined. See also PostBQP, a complexity class defined with postselection. With postselection, quantum Turing machines appear to be much more powerful: Scott Aaronson proved that PostBQP is equal to PP. Some quantum experiments use post-selection after the experiment as a replacement for ...
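
As a minimal sketch of the definition above, the following Python snippet conditions a discrete distribution on an event and refuses to do so when the event has probability zero. The helper names (`prob`, `postselect`) and the two-dice example are illustrative assumptions, not part of the source.

```python
from fractions import Fraction


def prob(dist, event):
    """Pr[event] under a discrete distribution given as {outcome: probability}."""
    return sum(p for outcome, p in dist.items() if event(outcome))


def postselect(dist, event):
    """Return the distribution conditioned on `event` (a predicate on outcomes).

    Raises ValueError when Pr[event] is not strictly positive, since the
    conditional distribution is undefined in that case.
    """
    pr_e = prob(dist, event)
    if pr_e <= 0:
        raise ValueError("Pr[E] must be strictly positive to postselect")
    return {o: p / pr_e for o, p in dist.items() if event(o)}


# Hypothetical example: two fair dice, each ordered pair has probability 1/36.
dice = {(i, j): Fraction(1, 36) for i in range(1, 7) for j in range(1, 7)}


def sum_at_least_ten(o):  # event E
    return o[0] + o[1] >= 10


def doubles(o):  # event F
    return o[0] == o[1]


before = prob(dice, doubles)                               # Pr[F]
after = prob(postselect(dice, sum_at_least_ten), doubles)  # Pr[F | E]
print(before, after)  # 1/6 1/3
```

Using `Fraction` keeps the arithmetic exact, so the conditional probabilities can be compared without rounding error.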

PostBQP

In computational complexity theory, PostBQP is a complexity class consisting of all of the computational problems solvable in polynomial time on a quantum Turing machine with postselection and bounded error (in the sense that the algorithm is correct at least 2/3 of the time on all inputs). Postselection is not considered to be a feature that a realistic computer (even a quantum one) would possess, but postselecting machines are nevertheless interesting from a theoretical perspective.

Removing either one of the two main features (quantumness, postselection) from PostBQP gives the following two complexity classes, both of which are subsets of PostBQP:

* BQP is the same as PostBQP except without postselection.
* BPP_path is the same as PostBQP except that instead of quantum, the algorithm is a classical randomized algorithm (with postselection).

The addition of postselection seems to make quantum Turing machines much more powerful: Scott Aaronson proved that PostBQP is equal to PP ...
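
To make the idea of a classical randomized algorithm with postselection more concrete, here is a toy sketch that imitates postselection by rejection sampling: runs whose postselection flag is 0 are thrown away. The procedure `run_once`, its two coin flips, and the seed are hypothetical choices for illustration; this is not a simulation of a quantum machine.

```python
import random


def run_once(rng):
    """Hypothetical randomized procedure returning (answer_bit, postselect_flag)."""
    x = rng.random() < 0.5
    y = rng.random() < 0.5
    answer = x and y   # "accept" only if both coins are heads
    flag = x or y      # postselect on at least one head
    return answer, flag


def estimate_with_postselection(trials, seed=0):
    """Estimate Pr[answer | flag] by discarding every run where the flag is 0."""
    rng = random.Random(seed)
    kept = accepted = 0
    for _ in range(trials):
        answer, flag = run_once(rng)
        if flag:
            kept += 1
            accepted += answer
    if kept == 0:
        raise ValueError("postselected event never occurred")
    return accepted / kept


estimate = estimate_with_postselection(100_000)
# Exact values: Pr[answer] = 1/4, but Pr[answer | flag] = (1/4) / (3/4) = 1/3.
```

Rejection sampling needs about 1/Pr[flag] runs per kept sample on average, which becomes impractical when the postselected event is very unlikely. That cost is one way to see why postselection is treated as a theoretical resource rather than a realistic feature.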

PP (complexity)

In complexity theory, PP is the class of decision problems solvable by a probabilistic Turing machine in polynomial time, with an error probability of less than 1/2 for all instances. The abbreviation PP refers to probabilistic polynomial time. The complexity class was defined by Gill in 1977. If a decision problem is in PP, then there is an algorithm for it that is allowed to flip coins and make random decisions. It is guaranteed to run in polynomial time. If the answer is YES, the algorithm will answer YES with probability more than 1/2. If the answer is NO, the algorithm will answer YES with probability less than 1/2. In more practical terms, it is the class of problems that can be solved to any fixed degree of accuracy by running a randomized, polynomial-time algorithm a sufficient (but bounded) number of times. Turing machines that are polynomially bounded and probabilistic are characterized as PPT, which stands for probabilistic polynomial-time machines. This characterizatio ...
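
The repetition idea mentioned above can be illustrated numerically. The sketch below computes the exact probability that a majority vote over n independent runs is correct when each run is correct with probability p. The function name and the sample values of p and n are assumptions chosen for illustration.

```python
from math import comb


def majority_success(p, n):
    """Probability that strictly more than half of n independent runs are correct.

    Each run is assumed to be correct independently with probability p;
    n should be odd so that ties cannot occur.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


wide_gap = majority_success(0.6, 101)      # success probability well above 1/2
narrow_gap = majority_success(0.501, 101)  # success probability barely above 1/2
```

With p = 0.6 the vote over 101 runs is correct with probability above 0.95, while with p = 0.501 the same number of runs barely improves on a single run. The closer the per-run success probability is to 1/2, the more repetitions are needed.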

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probab ...

Conditional Probability

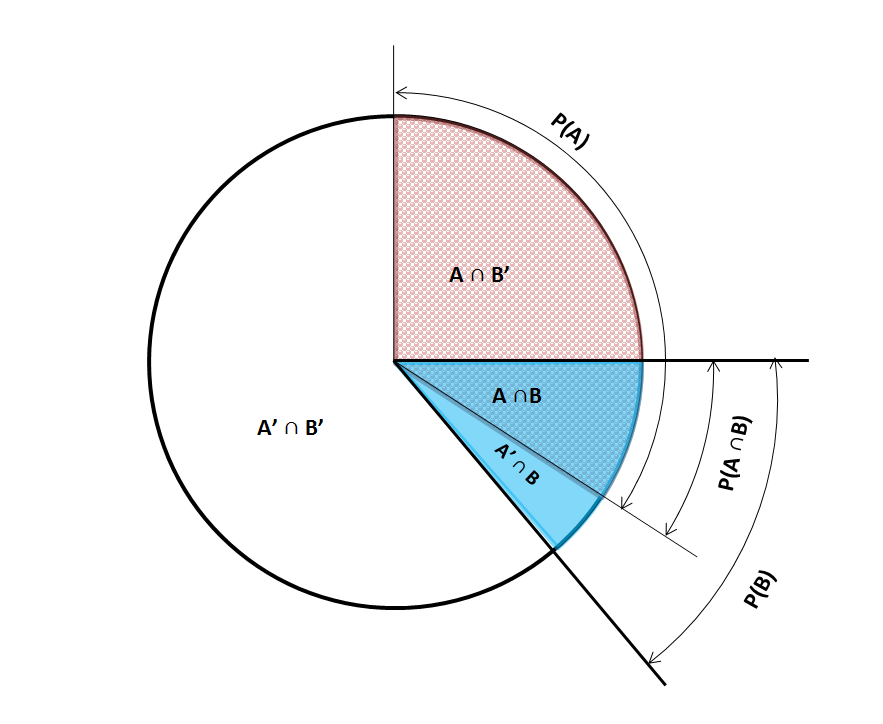

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) has already occurred. This method relies on event A having some sort of relationship with another event B, in which case A can be analyzed by a conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as $P(A \mid B)$ or occasionally $P_B(A)$. This can also be understood as the fraction of the probability of B that intersects with A: $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$. For example, the probability that any given person has a cough on any given day may be only 5%. But if we know or assume that the person is sick, then they are much more likely to be coughing. For example, the conditional probability that someone unwell (sick) is coughing might b ...
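
As a small worked instance of the ratio definition, consider drawing one card from a standard 52-card deck (an example chosen here for illustration, not taken from the source). Let A be "the card is a king" and B be "the card is a face card (jack, queen or king)". Every king is a face card, so the intersection of A and B is A itself:

```latex
\[
P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{4/52}{12/52} = \frac{1}{3},
\qquad\text{whereas}\qquad
P(A) = \frac{4}{52} = \frac{1}{13}.
\]
```

Knowing that B occurred raises the probability of A from 1/13 to 1/3.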

Probability Space

In probability theory, a probability space or a probability triple $(\Omega, \mathcal{F}, P)$ is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die. A probability space consists of three elements (Stroock, D. W. (1999). Probability theory: an analytic view. Cambridge University Press):

1. A sample space, $\Omega$, which is the set of all possible outcomes.
2. An event space, which is a set of events $\mathcal{F}$, an event being a set of outcomes in the sample space.
3. A probability function, which assigns each event in the event space a probability, which is a number between 0 and 1.

In order to provide a sensible model of probability, these elements must satisfy a number of axioms, detailed in this article. In the example of the throw of a standard die, we would take the sample space to be $\{1,2,3,4,5,6\}$. For the event space, we could simply use the set of all subsets of the sampl ...
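
The die example can be written out as a short Python sketch. It builds the three components for a standard die, taking the event space to be the set of all subsets and assuming the die is fair (the source does not say which probability function is intended). The names `sample_space`, `event_space`, `power_set` and `P` are illustrative.

```python
from fractions import Fraction
from itertools import chain, combinations

# 1. Sample space: the six faces of a standard die.
sample_space = frozenset(range(1, 7))


def power_set(s):
    """All subsets of s, each returned as a frozenset."""
    items = sorted(s)
    subsets = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return {frozenset(sub) for sub in subsets}


# 2. Event space: every subset of the sample space (2**6 = 64 events).
event_space = power_set(sample_space)


# 3. Probability function: uniform, i.e. a fair die (an assumption).
def P(event):
    return Fraction(len(event), len(sample_space))


# Spot-check two of the axioms on this model.
evens, odds = frozenset({2, 4, 6}), frozenset({1, 3, 5})
total = P(sample_space)                              # should equal 1
additive = P(evens | odds) == P(evens) + P(odds)     # disjoint events add
```

The checks cover only normalization and additivity for one pair of disjoint events; they are a sanity check on the model, not a proof that all axioms hold.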

Discrete Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction

A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by $\Omega$, is the set of all possible outcomes of a random ...

Quantum Turing Machine

A quantum Turing machine (QTM) or universal quantum computer is an abstract machine used to model the effects of a quantum computer. It provides a simple model that captures all of the power of quantum computation; that is, any quantum algorithm can be expressed formally as a particular quantum Turing machine. However, the computationally equivalent quantum circuit is a more common model. Quantum Turing machines can be related to classical and probabilistic Turing machines in a framework based on transition matrices. That is, a matrix can be specified whose product with the matrix representing a classical or probabilistic machine provides the quantum probability matrix representing the quantum machine. This was shown by Lance Fortnow.

Informal sketch

A way of understanding the quantum Turing machine (QTM) is that it generalizes the classical Turing machine (TM) in the same way that the quantum finite automaton (QFA) generalizes the deterministic finite automaton (DFA). ...
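
To give a feel for the transition-matrix viewpoint, the following toy sketch contrasts one step of a probabilistic machine (a stochastic matrix acting on probabilities) with one step of a quantum machine (a unitary matrix acting on amplitudes) on a two-state system. It is an illustration under simplifying assumptions, not Fortnow's construction; the matrices and the `apply` helper are chosen here for the example.

```python
import math


def apply(matrix, vector):
    """Multiply a square matrix (list of rows) by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Probabilistic step: a fair coin flip, given as a stochastic matrix.
coin = [[0.5, 0.5],
        [0.5, 0.5]]

# Quantum step: the Hadamard gate, a unitary matrix acting on amplitudes.
h = 1 / math.sqrt(2)
hadamard = [[h, h],
            [h, -h]]

start = [1.0, 0.0]  # begin in state 0 with certainty

# Two coin flips leave the distribution uniform.
classical = apply(coin, apply(coin, start))

# Two Hadamard steps interfere and return to state 0.
amplitudes = apply(hadamard, apply(hadamard, start))
quantum = [abs(a) ** 2 for a in amplitudes]  # measurement probabilities
```

Here `classical` stays at equal probabilities, while `quantum` returns to state 0 with probability approximately 1, because amplitudes can cancel whereas probabilities cannot.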

Scott Aaronson

Scott Joel Aaronson (born May 21, 1981) is an American theoretical computer scientist and David J. Bruton Jr. Centennial Professor of Computer Science at the University of Texas at Austin. His primary areas of research are quantum computing and computational complexity theory.

Early life and education

Aaronson grew up in the United States, though he spent a year in Asia when his father, a science writer turned public-relations executive, was posted to Hong Kong. He enrolled in a school there that permitted him to skip ahead several years in math, but upon returning to the US, he found his education restrictive, getting bad grades and having run-ins with teachers. He enrolled in The Clarkson School, a gifted education program run by Clarkson University, which enabled Aaronson to apply for colleges while only in his freshman year of high school. He was accepted into Cornell University, where he obtained his BSc in computer science in 2000, ...

Proceedings Of The Royal Society A

*Proceedings of the Royal Society* is the main research journal of the Royal Society. The journal began in 1831 and was split into two series in 1905:

* Series A: for papers in physical sciences and mathematics.
* Series B: for papers in life sciences.

Many landmark scientific discoveries are published in the Proceedings, making it one of the most historically significant science journals. The journal contains several articles written by the most celebrated names in science, such as Paul Dirac, Werner Heisenberg, Ernest Rutherford, Erwin Schrödinger, William Lawrence Bragg, Lord Kelvin, J.J. Thomson, James Clerk Maxwell, Dorothy Hodgkin and Stephen Hawking. In 2004, the Royal Society began *The Journal of the Royal Society Interface* for papers at the interface of physical sciences and life sciences.

History

The journal began in 1831 as a compilation of abstracts of papers in the *Philosophical Transactions of the Royal Society*, the older Royal Society publica ...

Theoretical Computer Science

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, lambda calculus, and type theory. It is difficult to circumscribe the theoretical areas precisely. The ACM's Special Interest Group on Algorithms and Computation Theory (SIGACT) provides the following description: ...

History

While logical inference and mathematical proof had existed previously, in 1931 Kurt Gödel proved with his incompleteness theorem that there are fundamental limitations on what statements could be proved or disproved. Information theory was added to the field with a 1948 mathematical theory of communication by Claude Shannon. In the same decade, Donald Hebb introduced a mathematical model of learning in the brain. With mounting biological data supporting this hypothesis with some modification, the fields of neural networks and parallel distributed processing were established. I ...